* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Halpotyping - CS, Technion

Survey

Document related concepts

Transcript

Haplotype Analysis based on

Markov Chain Monte Carlo

By Konstantin Sinyuk

Overview

Haplotype, Haplotype Analysis

Markov Chain Monte Carlo (MCMC)

The algorithm based on (MCMC)

Compare with other algorithms

result

Discussion on algorithm accuracy

What is haplotype ?

A haplotype is a particular pattern of sequential

SNPs found on a single chromosome.

Haplotype has a block-wise structure separated by

hot spots.

Within each block, recombination is rare due to

tight linkage and only very few haplotypes really

occur

Haplotype analysis motivation

Use of haplotypes in disease association studies

reduces the number of tests to be carried out, and

hence the penalty for multiple testing.

The genome can be partitioned onto 200,000 blocks

With haplotypes we can conduct evolutionary

studies.

International HapMap Project started in October

2002 and planned to be 3 years long.

Haplotype analysis algorithms

Given a random sample of multilocus genotypes at

a set of SNPs the following actions can be taken:

Estimate the frequencies of all possible

haplotypes.

Infer the haplotypes of all individuals.

Haplotyping Algorithms:

Clark algorithm

EM algorithm

Haplotyping programs:

HAPINFEREX ( Clark Parsimony algorthm)

EM-Decoder ( EM algorithm)

PHASE

( Gibbs Sampler)

HAPLOTYPER

Motivation for MCMC method

MCMC algorithm considers the underlying

configurations in proportion to their likelihood

Estimates most probable haplotype configuration

Prof. Donnelly:

“If a statistician cannot solve a problem, s/he makes it more complicated”

Discrete-Time Markov Chain

Discrete-time stochastic process {Xn: n = 0,1,2,…}

Takes values in {0,1,2,…}

Memoryless property:

P{ X n 1 j | X n i, X n 1 in 1 ,..., X 0 i0 } P{ X n 1 j | X n i}

Pij P{ X n 1 j | X n i}

Transition probabilities Pij

Pij 0,

P

j 0

ij

1

Transition probability matrix P=[Pij]

Chapman-Kolmogorov

Equations

n step transition probabilities

Pijn P{X nm j | X m i},

n, m 0, i, j 0

Chapman-Kolmogorov equations

nm

ij

P

Pikn Pkjm ,

n, m 0, i , j 0

k 0

Pijn is element (i, j) in matrix Pn

Recursive computation of state probabilities

State Probabilities – Stationary

Distribution

State probabilities (time-dependent)

πnj P{X n j},

πn (π0n , π1n ,...)

P{ X n j} P{ X n 1 i}P{ X n j | X n 1 i} π πin 1Pij

n

j

i 0

i 0

In matrix form:

π n π n 1P π n 2 P 2 ... π 0 P n

If time-dependent distribution converges to a limit

π lim π n

n

p is called the stationary distribution

π πP

Existence depends on the structure of Markov chain

Classification of Markov Chains

Irreducible:

States i and j communicate:

n, m : P 0, P 0

n

ij

Aperiodic:

m

ji

Irreducible Markov chain: all

states communicate

1

2

State i is periodic:

d 1: Piin 0 n d

Aperiodic Markov chain: none

of the states is periodic

1

2

0

0

3

4

3

4

Existence of Stationary

Distribution

Theorem 1: Irreducible aperiodic Markov chain. There

are two possibilities for scalars:

π j lim P{ X n j | X 0 i} lim Pijn

n

1.

2.

pj = 0, for all states j

pj > 0, for all states j

n

No stationary distribution

p is the unique stationary

distribution

Remark: If the number of states is finite, case 2 is the

only possibility

Ergodic Markov Chains

Markov chain with a stationary distribution

π j 0, j 0,1, 2,...

States are positive recurrent: The process returns to

state j “infinitely often”

A positive recurrent and aperiodic Markov chain is

called ergodic

Ergodic chains have a unique stationary distribution

π j lim Pijn

n

Ergodicity ⇒ Time Averages = Stochastic Averages

Balanced Markov Chain

Global Balance Equations (GBE)

i 0

i 0

π j Pji πi Pij π j Pji πi Pij ,

i j

Detailed Balance Equations (DBE)

π j Pji πi Pij

i j

j0

i , j 0,1,...

π j Pji is the frequency of transitions from j to i

Frequency of Frequency of

transitions out of j transitions into j

Markov Chain Summary

Markov chain is a set of random processes

with stationary transition probabilities

- matrix of transition probabilities,

and

Markov chain is Ergodic if:

Aperiodic –

Irreducible Ergodic Markov chain has stationary distribution property:

pij(n) exists and is independent of i ( lim n p ij( n ) p j )

lim

n

p

The vector

between

(p j ), p i 1 is stationary distribution of the chain

pP p , p p p

i

i

ij

j

Ergodic Markov chain is detailed balanced if:

i

p p p p

i

ij

j

ji

Markov Chain Monte Carlo

MCMC is used when we wish to simulate from a

distribution p known only up to a constant

(normalization) factor:p i C i (C is hard to

calculate)

Metropolis proposed to construct Markov chain { X S}

with stationary distribution p using onlyp i

pj

ratio

Define transition matrix P indirectly via Q = (qij)

matrix:

p q

*

q Pr(

j | X T i ) - proposal probability

X

ij

ij

- acceptance probability, selected such that

ij

ij

ij

Markov chain will be detailed balanced

Metropolis-Hastings algorithm

Metropolis-Hasting (MH) algorithm steps:

Start with X0 = any state

Given Xt-1 = i, choose j with probability qij

Accept this j (put Xt = j) with acceptance probability

1

ij hij

hij

p q

j

ji

pq

i

- Hastings ratio

ij

Otherwise accept i (put Xt = i)

Repeat step 2 through 4 a needed number of times

With such hij detailed balance is satisfied

With rejection steps the Markov chain is surely

irreducible

ij

Metropolis-Hastings Graph

Example of

Metropolis-Hastings

Suppose we want to simulate from

p ( x) c * exp( x ), x R

4

Metropolis algorithm steps:

Start with X0 = 0

Generate Xt from the proposal distribution

N(Xt-1,1) p ( x* )

*4

4

t

exp( xt xt )

Compute ht

p ( xt 1)

Repeat step 2 through 4 a needed number of times

Gibbs Sampler

The Gibbs sampler is a special case of the MH

algorithm that considers states that can be

partitioned into coordinates i (i1 , i2 ,..., in )

At each step, a single coordinate of the state is

updated.

*

*

Step from i (i1 ,... i r 1 , i r , i r 1 ..., in ) to i (i1 ,... ir1 , ir , ir1 ..., in )

given by

Gibbs sampler is used where each variable depends

on other variable within some neighborhood

The acceptance probabilities are all equal to 1

MCMC in haplotyping

The Gibbs sampler is good for multilocus genotyping

of n persons.

Lets define:

d

is the

observed

phenotype of

individual i

at locus j

Ordered

genotype of

person i

i

j

The conditional distribution P(g|d) can be estimated

,

The Markov chain obtained with Gibbs sampler may

not be ergodic.

The proposed algorithm

Most algorithms search g maxthat maximize P(g|d)

The proposed algorithm seeks for

f (c) {g : P( g | d ) c}

An ergodic Markov chain is constructed such that

stationary distribution is P(f(c)|d)

The sampling is done with Gibbs sampler

An Ergodic property of Markov chain is satisfied with

use of Metropolis jump kernels

The Gibbs-Jumping name is assigned to algorithm

Gibbs step of algorithm

i

For each individual i and locus j, alleles a j1 and

are sampled from the conditional distribution:

a

i

j2

children of

i and s

spouses of i

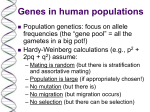

The following assumption are commonly made in

order to compute transition probability P( g i | g f , g )

Hardy-Weinberg Equilibrium

Linkage Equilibrium

No interference

i

mi

Jumping step of algorithm

After Gibbs step the algorithm attempts to jump

from current state of multilocus genotype g to the

state g* in a different irreducible set.

The Metropolis jumping kernel Q

is used

{g , g ,..., g r }

G

Let

be the set of nonD

D

D

D

communicating genotypic configurations on locus j

|

j

1

j

D

j

2

j

j

j

j

set of individuals who “characterize” irreducible set at j

A new state g* is formed by replacing the alleles

k

pair in g by those from g D for individuals in D j

P( g* | d )

min{

1

,

}

The g* is accepted with probability

j

P( g | d )

Gibbs-Jump trajectories

Results Comparison

Gibbs-Jumping algorithm is estimating one.

So the algorithm should be tested on well-explored

genetic diseases.

Such explored diseases are:

The original exploration was done by programs

Krabbe disease (autosomal, recessive disorder)

Episodic Ataxia disease (autosomal, dominant disorder)

LINKAGE (Krabbe) – enumerating linkage analysis

SIMWALK (Ataxia) – using simulated annealing (MC)

The comparison of various haplotyping method was

carried out by Sobel.

So proposed algorithm results are compared to Sobel

work.

Krabbe disease

(Globoid-cell leukodystrophy)

This autosomal recessively-inherited disease results

from a deficiency of the lysosomal enzyme

b-galactosylceramidase (GALC).

GALC enzyme plays a role in the normal turnover of

lipids that form a significant part of myelin, the

insulating material around certain neurons.

Affected individuals show progressive mental and

motor (movement) deterioration and usually die

within a year or two of birth.

Krabbe disease cont

Krabbe disease result compare

The input data is genetic map of 8

polymorphic genetic markers on

chromosome 14.

The Gibbs-Jump algorithm assigned

the most likely haplotype

configuration with probability 0.69,

the same configuration as obtained

by Sobol enumerative approach.

By Sobol they enumerated 262,144

haplotype variations with CPU

time of couple of hours instead of

less than 1 minute run of 100

iterations of Gibbs-Jump.

Episodic Ataxia disease

Episodic ataxia, a autosomal dominantlyinherited disease affecting the cerebellum.

Point mutations in the human voltage-gated

potassium channel (Kv1.1) gene on

chromosome 12p13

Affected individuals are normal between

attacks but become ataxic under stressful

conditions.

Episodic Ataxia result compare

The input data is genetic map of 9 polymorphic

genetic markers on chromosome 12.

The Gibbs-Jump algorithm assigned the most likely

haplotype configuration with probability 0.41, that is

very similar to the obtained by Sobel with SIMWALK.

The second most probable haplotype configuration

obtained with 0.09 probability and is identical to the

one picked by Sobel.

Simulation data

To evaluate the performance of Gibbs-Jump on

large pedigrees (with loops) a haplotype

configuration was simulated.

The genetic map of 10 co dominant markers

(5 alleles per marker) with = 0.05 was taken.

The founders haplotypes were sampled randomly

from population distribution of haplotypes.

Haplotypes for nonfounders where then simulated

conditional on their parents’ haplotypes.

Assuming HW equilibrium ,Linkage equilibrium and

Haldane’s no interference model for recombination.

Simulation results

100 iteration of Gibbs-Jump were performed.

The most probable configuration (with probability

0.41) is identical to the true (simulated ) one

There are 3 configurations with second largest

probability (0.07)

All 3 differ from the true configuration in one

person with one extra recombination event in each

The algorithm execution time took several minutes

Simulation accuracy

Results of 10 runs of 100

realizations each.

In runs 1 and 3-10 the

most frequent configuration

was the true one .

The most frequent

configuration in run 2

differed from the true one

at one individual.

Simulation run-length

Results of 5 runs of 10000

realizations each.

The figure shows that

there is a fair amount of

variability in the

estimates, but with very

little correlation between

consecutive estimates.

Autocorrelation = -0.02

Dot plot of the estimated

frequency of the

underlying true haplotype

configuration for 100

iterations.

Simulation run-length cont

Estimates converges to the

true haplotype configuration

after 2000 steps.

The confidence bound is

95%

Four other runs also inferred

the true configuration with

probabilities:

34.54%,35.75%,37.08% and

35.27% respectively.

Cumulative frequency of

the most probable

configuration , plotted for

every 100 iterations and

the confidence bound.

Results of Sensitivity Analysis

Computation of P(g) requires an assignment of

haplotype probabilities to the founders.

How inaccurate prediction of founder probabilities

affects the results?

The 4 sets for gene frequencies (different from

simulated) for one of 10 markers were used (other

markers were leaved unchanged)

For the above simulation set the resulting haplotype

configuration was as simulated one.

Conclusion

In this discussion was presented a new method,

Gibbs-Jump, for haplotype analysis, which explores the

whole distribution of haplotypes conditional on the

observed phenotypes.

The method is very time-efficient.

The result accuracy was compared to obtained by

other methods (described by Sobol).

Method demonstrated the sensitivity tolerance to

founders probabilities sample.

The End…

Wake up!