* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Chapitre 4 (style : Chapitre)

Radiosurgery wikipedia , lookup

Industrial radiography wikipedia , lookup

Backscatter X-ray wikipedia , lookup

Center for Radiological Research wikipedia , lookup

Nuclear medicine wikipedia , lookup

Positron emission tomography wikipedia , lookup

Medical imaging wikipedia , lookup

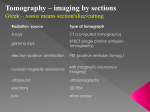

Chapter 1 Introduction to tomography

Chapter written by Pierre GRANGEAT.

1.1. Introduction

Tomographic imaging systems are designed to analyze the structure and composition of objects by examining them with waves or radiation and by calculating virtual cross sections through them. They cover all imaging techniques that permit the mapping of one or more physical parameters across one or more planes. In this book, we are mainly interested in computed, or computer-aided, tomography, in which the final image of the spatial distribution of a parameter is computed from measurements of the radiation that is emitted, transmitted, or reflected by the object. In combination with the electronic measurement system, the processing of the collected information thus plays a crucial role in the production of the final image.

Tomography complements the range of imaging instruments dedicated to observation, such as radar, sonar, lidar, the echograph, and the seismograph. Currently, these instruments are mostly used to detect or localize an object, for instance an airplane by its echo on a radar screen, or to measure heights and thicknesses, for instance of the earth’s surface or of a geological layer. They mainly rely on depth imaging techniques, which are described in another book of the French version of this series [GAL 02]. By contrast, tomographic systems calculate the value of the respective physical parameter at all vertices of the grid that serves the spatial encoding of the image.

An important part of imaging systems such as cameras, camcorders, or microscopes is the sensor that directly delivers the observed image. In tomography, the sensor performs indirect measurements of the image by detecting the radiation with which the object is examined. These measurements are described by the radiation transport equations, which lead to what mathematicians call the direct problem, i.e. the measurement or signal equation.
To obtain the final image, appropriate algorithms are applied to solve this equation and to reconstruct the virtual cross sections. The reconstruction thus solves the inverse problem [OFT 99]. Tomography therefore yields the desired image only indirectly, by calculation.

1.2. Observing contrasts

A broad range of physical phenomena may be exploited to examine objects. Electromagnetic waves, acoustic waves, or photonic radiation are used to carry the information to the sensor. The choice of the exploited physical phenomenon and of the associated imaging instrument depends on the desired contrast, which must ensure a good discrimination between the different structures present in the objects. This differentiation is characterized by its specificity, i.e. by its ability to discriminate between inconsequential normal structures and abnormal structures, and by its sensitivity, i.e. its capacity for measuring the weakest possible intensity level of the relevant abnormal structures. In medical imaging, for example, tumors are characterized by a metabolic hyperactivity, which leads notably to an increased glucose consumption. A radioactive marker such as fluorodeoxyglucose (FDG) makes it possible to detect this increase in metabolism, but it is not absolutely specific, since other phenomena, such as inflammation, also entail a local increase in glucose consumption. Therefore, the physician must interpret the physical measurement in the context of the results of other clinical examinations.

It is preferable to use coherent radiation whenever possible, which is the case for ultrasound, microwaves, laser radiation, and optical waves. Coherent radiation makes it possible to measure not only its attenuation but also its phase shift. The latter serves to associate a depth with the measured information, because a difference in propagation time results in a phase shift.
Each material is characterized by its attenuation coefficient and its refractive index, the latter describing the speed of propagation of waves in it. In diffraction tomography, one essentially aims at reconstructing the surfaces of the interfaces between materials with different indices. In materials with complex structure, however, the multiple interferences rapidly render the phase information unusable. Moreover, sources of coherent radiation, like lasers, are often more expensive. With X-rays, only phase contrast phenomena that are linked to the spatial coherence of photons are currently observable, using microfocus sources or synchrotrons. This concept of spatial coherence reflects the fact that an interference phenomenon may only be observed behind two slits if they are separated by less than the coherence length. In such a situation, a photon interferes solely with itself. Truly coherent X-ray sources, such as the X-FEL (X-ray Free Electron Laser), are only emerging.

In the case of non-coherent radiation, like γ-rays, and in most cases of X-ray imaging and optical imaging with conventional light sources, each material is mainly characterized by its attenuation and scattering (diffusion) coefficients. Elastic, or Rayleigh, scattering is distinguished from Compton scattering, which results from inelastic photon-electron collisions. In γ- and X-ray imaging, one tries to keep the attenuated direct radiation only, since, in contrast to the scattered radiation, it propagates along a straight line and thus permits a very high spatial resolution. To a first approximation, the attenuation coefficients reflect the density of the traversed material. Diffusion tomography is, for instance, considered in infrared imaging to study the blood and its degree of oxygenation. Since scattering spreads light in all directions, the spatial resolution of such systems is limited.
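The role of the attenuation coefficient for direct, unscattered radiation can be made concrete with a small numerical sketch. It assumes the Beer-Lambert law for a narrow, mono-energetic beam, which the text does not state explicitly; the function name `transmitted_fraction` and the attenuation values are hypothetical and purely illustrative.

```python
import numpy as np

def transmitted_fraction(mu_per_mm, step_mm):
    """Unscattered fraction of a narrow, mono-energetic beam (Beer-Lambert law).

    mu_per_mm: attenuation coefficients sampled along the ray, in 1/mm.
    step_mm:   distance between consecutive samples, in mm.
    """
    line_integral = float(np.sum(mu_per_mm)) * step_mm
    return float(np.exp(-line_integral))

# Illustrative values only: 20 mm of a soft, water-like material followed
# by 5 mm of a denser, bone-like material.
mu_along_ray = np.concatenate([np.full(20, 0.02), np.full(5, 0.05)])
fraction = transmitted_fraction(mu_along_ray, step_mm=1.0)

# Taking the negative logarithm turns the measurement back into the
# line integral of the attenuation coefficient along the ray.
recovered_integral = -np.log(fraction)
```

Under this assumption, each detector reading carries only the sum of the attenuation along its ray, not the individual values, which is why several views are needed to localize them.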
After the interaction between radiation and matter, we now consider the principle of generating the radiation. We distinguish between active and passive systems. In the former, the source of radiation is controlled by an external generator that is activated at the moment of the measurement. The object is explored by direct interrogation if the generated incident radiation and the emerging measured radiation are of the same type. This is the case in X-ray, optical, microwave, and ultrasound imaging, for example. The obtained contrast consequently corresponds to propagation parameters of the radiation. For these active systems with direct interrogation, we distinguish measurement systems based on reflection or backscattering, where the emerging radiation is measured on the same side of the object as the generator, and measurement systems based on transmission, where the radiation is measured on the opposite side. The choice depends on the type of radiation employed and on constraints linked to the overall dimensions of the object. Magnetic resonance imaging (MRI) is a system with direct interrogation, since the incident radiation and the emerging radiation are both radiofrequency waves. However, the temporal variations of the excitation and received signals are very different.

The object may also be explored by indirect interrogation, which means that the generated incident radiation and the emerging measured radiation are of different types. This is the case in fluorescence imaging, where the incident wave produces fluorescent light with a different wavelength (see Chap. 6). The observed contrast corresponds in this case to the concentration of the emitter.

In contrast to active systems, passive systems rely on internal sources of radiation, which are inside the analyzed matter or patient. This is the case in magneto- and electro-encephalography (MEG and EEG), where the current dipoles linked to synaptic activity are the sources.
This is also the case in nuclear emission or photoluminescence tomography, where the tracer concentration in tissues or materials is measured. The observed contrast again corresponds to the concentration of the emitter. Factors such as attenuation and diffusion of the emitted radiation consequently lead to errors in the measurements.

In these different systems, the contrasts may be natural or artificial. Artificial contrasts correspond to the injection of contrast agents, such as agents based on iodine in X-ray imaging and gadolinium in MRI, and of specific tracers dedicated to passive systems, such as tracers based on radioisotopes and fluorescence. Within the new field of nanomedicine, new activatable markers are investigated, such as luminescent or fluorescent markers, which become active when the molecule is in a given chemical environment, exploiting for instance the quenching effect, or when the molecule is activated or dissociated by an external signal.

Finally, studying the kinetics of contrasts provides complementary information, such as uptake and redistribution time constants of radioactive compounds. Moreover, multispectral studies permit characterizing flow velocity by the Doppler effect, and dual-energy imaging allows decomposing matter into two reference materials like water and bone, and thus enhancing the contrast.

In Table 1.1, the principal tomographic imaging modalities are listed, including in particular those addressed in this book. The second column, entitled “contrast”, states the physical parameter visualized by each modality. In certain cases, as in functional imaging, this parameter serves to calculate physiological or biological parameters via a model that describes the interaction of the tracer with the organism. In the third column, we propose a classification of these systems according to their accuracy.
We differentiate between very accurate systems, which are marked by + and may reach a reproducibility of the basic measurement of 0.1%, thus permitting quantitative imaging; accurate systems, which are marked by = and attain a reproducibility in the order of 1%; and less accurate systems, which are marked by - and provide a reproducibility in the order of 10%, thus allowing qualitative imaging only. Finally, in the fourth and fifth columns, typical orders of magnitude are given for the spatial and temporal resolution delivered by these systems. In view of the considerable diversity of existing systems, they primarily allow a distinction between systems with a high spatial resolution and systems with a high temporal resolution. They also illustrate the discussion in Section 1.3.

The spatial and temporal resolutions are often conflicting parameters that depend on how the data acquisition and the image reconstruction are configured. For example, increasing the time of exposure improves the signal-to-noise ratio and thus the accuracy at the expense of the temporal resolution. Likewise, spatial smoothing alleviates the effect of noise and improves the statistical reproducibility at the expense of the spatial resolution. Another important parameter is the sensitivity of the imaging system. For instance, nuclear imaging systems are very sensitive and are able to detect traces of radioisotopes, but need very long acquisition times to reach a good signal-to-noise ratio. Therefore, the accuracy is often limited.

Disturbing effects often corrupt the measurement in tomographic imaging and make it difficult to attain an absolute quantification. One is then satisfied with a relative quantification, according to the accuracy of the measurement.
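The trade-off between exposure time and signal-to-noise ratio described above can be illustrated with a short simulation. This is a sketch under an assumption the text does not make explicit, namely that the detector counts photons with Poisson statistics; the function `empirical_snr` and the count rate are hypothetical.

```python
import numpy as np

def empirical_snr(rate_per_s, exposure_s, n_trials=20000, seed=0):
    """Mean divided by standard deviation of simulated photon counts."""
    rng = np.random.default_rng(seed)
    counts = rng.poisson(rate_per_s * exposure_s, size=n_trials)
    return counts.mean() / counts.std()

snr_short = empirical_snr(rate_per_s=100.0, exposure_s=1.0)
snr_long = empirical_snr(rate_per_s=100.0, exposure_s=4.0)

# Under the Poisson assumption, quadrupling the exposure time
# roughly doubles the signal-to-noise ratio.
gain = snr_long / snr_short
```

In this model the signal-to-noise ratio grows only with the square root of the exposure time, which is one way to see why sensitive but count-limited modalities need long acquisitions.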
| Tomographic imaging modality | Contrast | Accuracy | Spatial resolution | Temporal resolution |
|---|---|---|---|---|
| CT | attenuation coefficient, density of matter | + | axial 0.4 mm; transaxial 0.6 - 5 mm | 0.1 - 2.0 s per set of slices associated with 180° rotation |
| X-ray tomography in radiology | attenuation coefficient, density of matter | = | 0.25 mm | 3 - 6 s by volumetric acquisition (180° rotation) |
| MRI | amplitude of transverse magnetization | = | 0.5 mm | 0.1 - 10.0 s |
| SPECT | tracer concentration, associated physiological or biological parameters | - | 10 - 20 mm | 600 - 3,600 s per acquisition |
| PET | tracer concentration, associated physiological or biological parameters | = | 3 - 10 mm | 300 - 600 s per acquisition |
| fMRI | amplitude of transverse magnetization, concentration of oxy-/deoxyhemoglobin | = | 0.5 mm | 0.1 s |
| MEG | current density | - | some mm | 10⁻³ s |
| EEG | current density | - | 10 - 30 mm | 10⁻³ s |
| TEM | attenuation coefficient | - | 10⁻⁶ mm | some 100 s (function of image size) |
| CLSM | concentration of fluorophore | - | 2 · 10⁻⁴ mm | some 100 s (function of image size) |
| Coherent optical tomography | refractive index | - | 10⁻² mm | 10⁻¹ s |
| Non-coherent optical tomography | attenuation and diffusion coefficients | - | 10 mm | some 100 s |
| Synchrotron tomography | attenuation coefficient, density of matter | + | 10⁻³ - 10⁻² mm | 800 - 3,600 s per acquisition (180° rotation) |
| X-ray tomography in NDT | attenuation coefficient, density of matter | + | 10⁻² - 10² mm (dependent on system) | 1 - 1,000 s per acquisition (dependent on system) |
| Emission tomography in industrial flow visualization | concentration of tracer | - | some 10% of the diameter of the object (some 10 mm) | 10⁻¹ s |
| Impedance tomography | electric impedance (resistance, capacitance, inductance) | - | 3 - 10% of the diameter of the object (1 - 100 mm) | 10⁻³ - 10⁻² s |
| Microwave tomography | refractive index | - | λ/10, with λ the wavelength (1 - 10 mm) | 10⁻² - 10 s |
| Industrial ultrasound tomography | ultrasonic refractive index, reflective index, speed of propagation, attenuation coefficient | - | λ, with λ the wavelength (0.4 - 10.0 mm) | 10⁻² - 10⁻¹ s |

+ : very accurate systems, reproducibility of the basic measurement in the order of 0.1%, quantitative imaging.
= : accurate systems, reproducibility of the basic measurement in the order of 1%.
- : less accurate systems, reproducibility of the basic measurement in the order of 10%, qualitative imaging.

Table 1.1. Comparison of principal tomographic imaging modalities

In most cases, the interaction between radiation and matter is accompanied by energy deposition, which may be associated with diverse phenomena, like a local increase of the thermal agitation, a change in state, an ionization of atoms, and a breaking of chemical bonds. Improving image quality in terms of contrast-to-noise or signal-to-noise ratio unavoidably leads to an increase in the applied dose. In medical imaging, a compromise has to be found to ensure that image quality is compatible with the demands of the physicians and the dose tolerated by the patient. After the irradiation stops, the stored energy may be released by a return to equilibrium, by thermal dissipation, or by biological mechanisms that try to repair or replace the defective elements.

1.3. Localization in space and time

Tomographic systems provide images, i.e. a set of samples of a quantity on a spatial grid. When this grid is two-dimensional (2D) and associated with the plane of a cross section, one speaks of 2D imaging, where each sample represents a pixel. When the grid is three-dimensional (3D) and associated with a volume of interest, one speaks of 3D imaging, where each element of the volume is called a voxel. When the measurement system provides a single image at a given moment in time, the imaging system is static. One may draw an analogy with photography, in which an image is instantaneously captured.
When the measurement system acquires an image sequence over a period of time, the imaging system is dynamic. One may draw an analogy with cinematography, in which image sequences of animated scenes are recorded. In each of these cases, the basic information associated with each sample of the image is the local value in space and time of a physical parameter characteristic of the employed radiation. The spatial grid serves as support of the mapping of the physical parameter. The spatial sampling step is defined by the spatial resolution of the imaging system and its ability to separate two elementary objects. The acquisition time, which is equivalent to the time of exposure in photography, determines the temporal resolution. Each tomographic imaging modality possesses its own characteristic spatial and temporal resolution.

When exploring an object non-destructively, it is impossible to access the desired local information directly. Therefore, one has to use penetrating radiation to measure the characteristic state of the matter. The major difficulty is that the emerging radiation provides a global, integral measure of contributions from all traversed regions. The emerging radiation thus delivers a projection of the desired image, in which the contribution of each point of the explored object is weighted by an integration kernel, whose support geometrically characterizes the traversed regions.

To illustrate these projective measurements, let us consider X-ray imaging as an example, where all observed structures superimpose on a planar image. On an X-ray image of the thorax seen from the front, the anterior and posterior parts superimpose, and the diaphragm merges with the vertebrae. On these projection images, only the objects with high contrast are observable. In addition, only their profile perpendicular to the direction of observation may be identified. Their 3D shape cannot be recognized.
To obtain a tomographic image, a set of projections is acquired under varying measurement conditions. These variations change the integration kernel, in particular the position of its support, to completely describe a projective transformation that is characteristic of the employed physical means of exploration. In confocal microscopy, for example, a Fredholm integral transform characterizes the impulse response of the microscope. In MRI, it is a Fourier transform, and in X-ray tomography, it is a Radon transform. In the last case, describing the Radon transform implies acquiring projections, i.e. radiographs, with repeated measurements under angles that are regularly distributed around the patient or the explored object. Once the transformation of the image is described, the image may be reconstructed from the measurements by applying the inverse transformation, which corresponds to solving the signal equation.

By these reconstruction operations, global projection measurements are transformed into local values of the examined quantity. This localization has the effect of increasing the contrast. It thus becomes possible to discern organs, or defects, with low contrast. This is typically the case in X-ray imaging, where one may basically observe the bones on the radiographs, while one may identify the soft organs on the tomographs. This increase in contrast makes the search for anomalies more sensitive, whether they are characterized by hyper- or hypo-attenuation, or by hyper- or hypo-uptake of a tracer. Thanks to the localization, it also becomes possible to separate organs from their environment and thus to gain characteristic information on them, such as the form of the contour, the volume, the mass, and the number, and to position them with respect to other structures in the image. This localization of information is of primary interest and justifies the success of tomographic techniques.
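The superposition in a single projection, and the value of acquiring a second view, can be shown with a toy example. The sketch below is not the Radon transform in full generality: it only sums a small 2D grid along its two axes, which corresponds to parallel projections at two perpendicular angles. The name `parallel_projection` and the test objects are hypothetical.

```python
import numpy as np

def parallel_projection(image, axis):
    """Sum a 2D image along one axis, i.e. along parallel straight rays."""
    return image.sum(axis=axis)

# Two objects that differ only in depth along the first viewing direction.
front = np.zeros((4, 4))
front[0, 1] = 1.0
back = np.zeros((4, 4))
back[3, 1] = 1.0

# Seen along axis 0, the two objects give the same projection.
same_in_first_view = np.array_equal(
    parallel_projection(front, 0), parallel_projection(back, 0))

# A second view, perpendicular to the first, separates them.
same_in_second_view = np.array_equal(
    parallel_projection(front, 1), parallel_projection(back, 1))
```

Here `same_in_first_view` is true and `same_in_second_view` is false: depth information that is lost in one projection is recovered by changing the measurement direction.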
A presentation of the principles of computerized tomographic imaging can be found in [KAK 01, HSI 03, KAL 06] for X-rays and [BEN 98, BAI 05] for positron emission tomography (PET).

The inherent cost of tomographic procedures is linked to the necessity of completely describing the space of projection measurements to reconstruct the image. Tomographic systems are thus “scanners”, i.e. systems that measure, or scan, each point in the transformed domain. The number of basic measurements is of the same order of magnitude as the number of vertices of the grid on which the image is calculated, typically between 64² and 1024² in 2D and between 64³ and 1024³ in 3D. The technique used for data acquisition thus greatly influences the temporal resolution and the cost of the tomographic system. The more detectors and generators are placed around the object, the better the temporal resolution, but the higher the price. A major evolution in the detection of nuclear radiation is the replacement of one-dimensional (1D) detectors by 2D detectors, either multi-line or surface. In this way, the measurements may be performed in parallel. In PET, one observes a linear increase in the number of detectors available on high-end systems with the year of the product launch.

However, scanning technology must also be taken into account for changing the conditions of the acquisition. The most rapid techniques are those of electronic scanning. This is the case in electromagnetic measurements and in MRI [HAA 99]. In the latter, only a single detector, a radiofrequency antenna, is used, and the information is gathered by modifying the ambient magnetic fields in such a way that the whole measurement domain is covered. The obtained information is intrinsically 3D. The temporal resolution achieved with these tomographic systems is very good, permitting dynamic imaging at video rates, i.e. at about 10 images/s.
When electronic scanning is impossible, mechanical scanning is employed instead. This is the case for rotating gantries in X-ray tomography, which rotate the source-detector combination around the patient. To improve the temporal resolution, the rotation time must be decreased (the most powerful systems currently complete a rotation in the order of 0.3 s), and the rotation must be combined with an axial translation of the bed to efficiently cover the whole volume of interest. Today, it is possible to acquire the whole thoracic area in less than 10 s, i.e. within the time for which patients can hold their breath.

The principle of acquiring data by scanning, adopted by tomographic systems, poses problems of motion compensation when objects that evolve over time are observed. This is the case in medical imaging when the patient moves or when organs that are animated by continuous physiological motion, like the heart or the lungs, are studied. This is also the case in non-destructive testing on assembly or luggage inspection lines, or in process monitoring, where chemical or physical reactions are followed. The problem must be considered as a reconstruction problem in space and time, and appropriate reconstruction techniques for compensating the motion or the temporal variation of the measured quantities must be introduced.

The evolution in tomographic techniques manifests itself mainly in the localization in space and time. Growing acquisition rates permit increasing the dimensions of the explored regions. Thus, the transition from 2D to 3D imaging has been accomplished. In medical imaging, certain applications like X-ray and MR angiography and oncological imaging by PET require a reconstruction of the whole body. Accelerating the acquisition also makes dynamic imaging possible, for instance to study the kinetics of organs and metabolism or to guide interventions based on images. Interactive tomographic imaging is thus coming within reach.
Finally, the general trend towards miniaturization leads to growing interest in microtomography, where an improved spatial resolution of the systems is the primary goal. In this way, one may test micro-systems in non-destructive testing (NDT), study animal models like mice in biotechnology, or analyze biopsies in medicine. Nanotomography of molecules such as proteins, molecule assemblies, or atomic layers within integrated microelectronic devices is also investigated using either electron microscopy or synchrotron X-ray sources.

1.4. Image reconstruction

Tomographic systems combine the examination of matter by radiation with the calculation of characteristic parameters, which are linked either to the generation of this radiation or to the interaction between this radiation and matter. Generally, each measurement of the emerging radiation provides an analysis of the examined matter, like a sensor. Tomographic systems thus acquire a set of partial measurements by scanning. The image reconstruction combines these measurements to assign a local value, which is characteristic of the employed radiation, to each vertex of the grid that supports the spatial encoding of the image. For each measurement, the emerging radiation is the sum of the contributions of the quantity to be reconstructed along the traversed path, each elementary contribution being either an elementary source of radiation or an elementary interaction between radiation and matter. It is thus an integral measurement which defines the signal equation. The set of measurements constitutes the direct problem, linking the unknown quantities to the measurements. The image reconstruction amounts to solving this system of equations, i.e. the inverse problem [OFT 99].
The direct problem is deduced from the fundamental equations of the underlying physics, such as the Boltzmann equation for the propagation of photons in matter, Maxwell’s equations for the propagation of electromagnetic waves [DEH 95], and the elastodynamic equation for the propagation of acoustic waves [DEH 95]. The measurements provide integral equations, often entailing numerically unstable differentiations in the image reconstruction. In this case, the reconstruction corresponds to an inverse problem that is ill-posed in the sense of Hadamard [HAD 32].

The acquisition is classified as complete when it provides all measurements that are necessary to calculate the object, which is in particular the case when the measurements cover the whole support of the transformation modeling the system. The inverse problem is considered weakly ill-posed if the principal causes of errors are instabilities associated with noise or the bias introduced by an approximate direct model. This is the case in X-ray tomography, for instance. If, in addition, different solutions may satisfy the signal equation, even in the absence of noise, the inverse problem is considered strongly ill-posed. This is the case in magneto-encephalography and in X-ray tomography using either a small angular range or a small number of positions of the X-ray source, for example.

The acquisition is classified as incomplete when some of the necessary measurements are missing, in particular when technological constraints reduce the number of measurements that can be made. This situation may be linked to a subsampling of measurements or an absence of data caused by missing or truncated acquisitions. In this case, the inverse problem is also strongly ill-posed, and the reconstruction algorithm must choose between several possible solutions. To resolve these ill-posed inverse problems in the sense of Hadamard, the reconstruction must be regularized by imposing constraints on the solution.
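The instability of an ill-posed problem and the effect of regularization can be seen on a small numerical example. The sketch below is only an illustration under stated assumptions: the direct operator `A` is a hypothetical Gaussian blur, not a tomographic operator, and the regularization shown is a Tikhonov penalty on the norm of the solution, chosen here for simplicity rather than taken from the text.

```python
import numpy as np

n = 40
idx = np.arange(n)
# Hypothetical direct operator: a Gaussian blur, which damps fine detail.
A = np.exp(-0.5 * ((idx[:, None] - idx[None, :]) / 2.0) ** 2)

# Unknown image (a box profile) and noisy measurements.
x_true = np.zeros(n)
x_true[15:25] = 1.0
rng = np.random.default_rng(0)
y = A @ x_true + 1e-3 * rng.standard_normal(n)

# Unregularized inversion: noise is amplified by the tiny singular values.
x_naive = np.linalg.lstsq(A, y, rcond=None)[0]

# Tikhonov regularization: minimize ||A x - y||^2 + lam * ||x||^2.
lam = 1e-2
x_reg = np.linalg.solve(A.T @ A + lam * np.eye(n), A.T @ y)

err_naive = np.linalg.norm(x_naive - x_true)
err_reg = np.linalg.norm(x_reg - x_true)
```

In this toy case the regularized error `err_reg` is far smaller than `err_naive`, at the price of a slightly smoothed box. The weight `lam` sets the compromise between fidelity to the data and the constraint on the solution.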
The principal techniques of regularization reduce the number or the range of the unknown parameters (for instance by using a coarser spatial grid to encode the image, by parameterizing the unknown image, or by imposing models on the dynamics), introduce regularity constraints on the image to be reconstructed, or eliminate from the inverse operator the smallest spectral or singular values of the direct operator. All regularization techniques require a compromise between the fidelity to the given data and the fidelity to the constraints on the solution. This compromise reflects the uncertainty relations between the localization and the quantification in images, applied to tomographic systems.

Several approaches may be pursued to define algorithms for image reconstruction, for which we refer the reader to [HER 79, NAT 86, NAT 01]. As for numerical methods in signal processing, deterministic and statistical methods, and continuous and discrete methods, are distinguished. For a review of recent advances in image reconstruction, we refer the reader to [CEN 08].

The methods most widely used are continuous approaches, also called analytical methods. The direct problem is described by an operator over a set of functions. In the case of systems of linear measurements, the unknown image is linked to the measurements by a Fredholm transform of the first kind. In numerous cases, this equation simplifies to a convolution equation, a Fourier transform, or a Radon transform. In these cases, the inverse problem admits an explicit solution, either in the form of an inversion formula or in the form of a concatenation of explicit transformations. A direct method of calculating the unknown image is obtained in this way. These methods are thus more rapid than the iterative methods employed for discrete approaches.
In addition, these methods are of general use, because the applied regularization techniques, which perform a smoothing, do not rely on specific a priori knowledge about the solution. The discretization is only introduced for the numerical implementation.

However, when the direct operator becomes more complex to better describe the interaction between radiation and matter, or when the incorporation of a priori information is necessary to choose a solution in case of incomplete data, an explicit formula for calculating the solution no longer exists. In these specific cases, discrete approaches are preferable. They describe the measurements and the unknown image as two finite, discrete sets of sampled values, represented by vectors. When the measurement system is linear or can be linearized by a simple transformation of the data, the relation between the image and the measurements may be expressed by a matrix-vector product. The problem of image reconstruction then leads to the problem of solving a large system of linear equations. In the case of slightly underdetermined, complete acquisitions, regularization is introduced by imposing smoothness constraints. The solution is formulated as the argument of a composite criterion that combines the fidelity to the given data and the regularity of the function. In the case of highly underdetermined, incomplete acquisitions, regularization is introduced by choosing an optimal solution among a set of possible solutions, which are, for example, associated with a discrete subspace that depends on a finite number of parameters. The solution is thus defined as the argument of a linear system under constraints. In both cases, the solution is calculated with iterative algorithms.

When the statistical fluctuation of the measurements becomes important, as in emission tomography, it is preferable to explicitly describe the images and measurements statistically, following the principle of statistical methods.
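The discrete, deterministic formulation described above (a matrix-vector model, a composite criterion, and an iterative solver) can be sketched as follows. This is a minimal illustration, not the algorithm of any particular system: the smoothness term is assumed to be a first-difference penalty, the solver is plain gradient descent, and the names `reconstruct_iteratively`, `A`, `lam`, and the toy data are hypothetical.

```python
import numpy as np

def reconstruct_iteratively(A, y, lam=0.1, n_iter=2000):
    """Minimize ||A x - y||^2 + lam * ||D x||^2 by gradient descent.

    D takes first differences, so the second term penalizes rough images.
    """
    n = A.shape[1]
    D = np.diff(np.eye(n), axis=0)       # (n-1) x n first-difference matrix
    H = A.T @ A + lam * (D.T @ D)        # Hessian of the criterion, up to a factor 2
    b = A.T @ y
    step = 1.0 / np.linalg.norm(H, 2)    # inverse of the largest eigenvalue of H
    x = np.zeros(n)
    for _ in range(n_iter):
        x = x - step * (H @ x - b)       # move against the gradient
    return x

# Hypothetical toy system: 30 linear measurements of a 10-sample image.
rng = np.random.default_rng(1)
A = rng.standard_normal((30, 10))
x_true = np.linspace(0.0, 1.0, 10)
y = A @ x_true + 0.01 * rng.standard_normal(30)
x_hat = reconstruct_iteratively(A, y)
```

For a problem this small, the same minimizer could be obtained by solving the normal equations directly. The iterative form is shown because it only needs products with `A` and its transpose, which is what makes such schemes usable when the system is too large to factorize.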
The solution is expressed as a statistical estimation problem. In the case of weakly ill-posed problems, Bayesian estimators corresponding to the minimization of a composite criterion have been proposed. They choose the solution with the highest probability for the processed measurement, or on average for all measurements, taking the measurements and the a priori information about the object into account. In the case of strongly ill-posed problems, constrained estimators, for example linked to Kullback distances, have been envisioned. In these cases too, one resorts to iterative reconstruction algorithms.

When the ill-posed character becomes too severe, for example in the case of very limited numbers of measurement angles, constraints at the low level of the image samples are no longer sufficient. One must then employ a geometric description of the object to be reconstructed, in the form of a set of elementary objects, specified by a graphical model or a set of primitives. The image reconstruction amounts to matching the calculated and the measured projections. In this work, these approaches, which do not directly concern the problem of mapping a characteristic physical parameter, but rather problems in computer vision and pattern recognition, are not considered any further. We refer the reader to [GAR 06].

The image reconstruction algorithms require considerable processing performance. The elementary operation is the backprojection, or backpropagation, in which a basic measurement is propagated through a volume by following the path traversed in the acquisition in the reverse direction. For this reason, the use of more and more sophisticated algorithms, and the reconstruction of larger and larger images, with higher spatial and temporal resolution, are only made possible by advances in the processing performance and the memory size of the employed computers.
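The backprojection operation mentioned above can be sketched for the simplest possible geometry: two parallel projections taken along the axes of a 2D grid. Each measurement is spread back uniformly along the ray it came from, and the contributions are summed. The function `backproject_two_views` and the single-point test image are hypothetical, and the sketch deliberately omits the filtering step that analytical reconstruction applies to the projections before backprojecting them.

```python
import numpy as np

def backproject_two_views(column_sums, row_sums):
    """Smear two axis-aligned parallel projections back across the grid."""
    column_sums = np.asarray(column_sums, dtype=float)
    row_sums = np.asarray(row_sums, dtype=float)
    # Each pixel receives the value of every ray that passed through it.
    return column_sums[None, :] + row_sums[:, None]

# Test object: a single bright point.
image = np.zeros((8, 8))
image[2, 5] = 1.0

# Forward step: the two projections. Backward step: the backprojection.
blurred = backproject_two_views(image.sum(axis=0), image.sum(axis=1))
peak = tuple(int(i) for i in np.unravel_index(np.argmax(blurred), blurred.shape))
```

The backprojected image peaks where the two rays cross, at the position of the point, but it also shows streaks along both rays. Reducing such artifacts requires more views and, in analytical methods, filtering of the projections.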
Often, the use of dedicated processors and of parallelization and vectorization techniques is necessary. In view of these demands, dynamic tomographic imaging in real time constitutes a major technological challenge today.

1.5. Application domains

By using radiation to explore matter and calculations to compute the map of the physical parameter characteristic of the employed radiation, tomographic imaging systems provide virtual cross sections through objects without resorting to destructive means. These virtual cross sections may easily be interpreted and enable the identification and localization of internal structures. Computed tomography techniques directly provide a digital image, thus offering the possibility of applying the full range of image processing algorithms. The examination of these images notably permits a characterization of each image element for differentiation, a detection of defects for control and testing, a quantification for measurement, and a modeling for description and understanding. The imaging becomes 3D when the tomographic system provides a set of parallel cross sections that cover the region of interest. Thus, cross sections in arbitrary planes may be calculated, and surfaces, shapes, and the architecture of internal structures may be represented. In applications associated with patients or materials with solid structures in which fluids circulate, a distinction is made between imaging systems that primarily map the solid architecture, i.e. the structures, in which case one speaks of morphological imaging, and imaging systems that mainly follow the exchange of fluids, i.e. the contents, in which case one speaks of functional imaging.

In optical imaging, the development of the first, simple microscopes, which were equipped with a single lens, dates back to the 17th century. From a physical point of view, the use of X-rays for the examination of matter started with their discovery by Röntgen in 1895.
In the course of experiments conducted to ascertain the origin of this radiation, Becquerel discovered in 1896 the radiation that uranium spontaneously emits [BIM 99]. After the discovery of polonium and radium in 1898, Pierre and Marie Curie characterized the phenomenon that produces this radiation and called it “radioactivity”. For these discoveries, Henri Becquerel, Marie Curie and Pierre Curie received the Nobel Prize for physics in 1903. The use of radioactive tracers was initiated in 1913 by Hevesy, who received the Nobel Prize for chemistry in 1943. The discovery of artificial radioactivity by Irène and Frédéric Joliot-Curie in 1934 opened the way for the use of numerous tracers to study the functioning of living systems, from cells to entire organisms. Important research was subsequently carried out on the production and the detection of these radiations. The first microscope using electrons to study the structure of an object was developed by Ernst Ruska in 1929.

From a mathematical point of view, the Fourier transform constitutes an important tool in the study of the analytical methods used in tomography. Several scholars like Maxwell, Helmholtz, and Born have studied the equation of wave propagation. The name of Fredholm remains linked to the theory of integral equations. The problem of reconstructing an image from line integrals goes back to the original publication by Radon in 1917 [RAD 17], reissued in 1986 [RAD 86], and the studies of John published in 1934 [JOH 34].

During the second half of the 20th century, a rapid expansion of tomographic techniques took place. In X-ray imaging, the first tomographic systems were mechanical devices which combined movements of the source and of the radiological film to eliminate information outside the plane of the cross section by a blurring effect. This is called longitudinal tomography, since the plane of the cross section is parallel to the patient axis.
The first digitally reconstructed images were obtained by radio astronomers at the end of the 1950s [BRA 79]. The first studies of Cormack at the end of the 1950s and the beginning of the 1960s showed that it is possible to precisely reconstruct the image of a transverse cross section of a patient from its radiographic projections [COR 63, COR 64]. In the 1960s, several experiments with medical applications were carried out [COR 63, COR 64, KUH 63, OLD 61]. The problem of reconstructing images from projections in electron microscopy was also tackled at this time [DER 68, DER 71]. In 1971, Hounsfield built the first prototype of an X-ray tomograph dedicated to cerebral imaging [HOU 73]. Hounsfield and Cormack received the Nobel Prize for physiology and medicine in 1979 [COR 80, HOU 80]. The beginnings of MRI go back to the first publication by Lauterbur in 1973 [LAU 73]. In nuclear medicine, the pioneering work in single photon emission computed tomography (SPECT) dates back to Kuhl and Edwards in 1963 [KUH 63], followed by Anger in 1967 [ANG 67], Muehllehner in 1971 [MUE 71], and Keyes et al. [BOW 73, KEY 73]. The first PET scanner was built at the beginning of the 1960s by Rankowitz et al. [RAN 62], followed in 1975 by Ter-Pogossian and Phelps [PHE 75, TER 75]. For more references covering the beginning of tomography, we refer the interested reader to the works of Herman [HER 79], Robb [ROB 85], and Webb [WEB 90], and to the following papers summarizing the developments achieved during the past 50 years in X-ray computed tomography [KAL 06], MRI [MAL 06], SPECT [JAS 06], PET [MUE 06], and image reconstruction [DEF 06].

In France, this period is remembered for the pioneering work of the group of Robert Di Paola at the Institute Gustave Roussy (IGR) on gamma tomography and of the groups of the Laboratory of Electronics, Technology, and Instrumentation (LETI) at the Atomic Energy Commission (CEA).
In 1976, under the direction of Edmond Tournier and Robert Allemand, the first French prototype of an X-ray tomograph was installed at the Grenoble hospital, in collaboration with the company CGR [PLA 98]. In 1981, the LETI installed at the Service Hospitalier Frédéric Joliot (SHFJ) of the CEA its first prototype of a time-of-flight PET scanner (TTV01), followed by three systems of the next generation (TTV03). The SHFJ participated in the specification of these systems and carried out their experimental evaluation. The SHFJ was the first center in France to have a cyclotron for medical use and a radiochemistry laboratory for synthesizing the tracers used in PET. In 1990, two prototypes of 3D X-ray scanners called morphometers were developed by the LETI, one of which was installed at the hospital of Rennes and the other at the neurocardiological hospital of Lyon, in collaboration with the company General Electric-MSE and the groups of these hospitals. In parallel, several prototypes of X-ray scanners were built for applications in non-destructive testing.

The 1980s saw the development of 3D imaging and digital detection technology, as well as tremendous advances in the processing performance of computers. One pioneering project was the DSR (Dynamic Spatial Reconstructor) project at the Mayo Clinic in Rochester [ROB 85], which aimed at imaging the beating heart and the breathing lung using several pairs of X-ray sources and detectors. The evolution continued in the 1990s, focusing notably on increasing the sensitivity and the acquisition rates of the systems, the latter in particular linked with the development of 2D multi-line or surface radiation detectors. Several references describe the advances achieved in this period [BEN 98, GOL 95, KAL 00, LIA 00, ROB 85, SHA 89].

Medical imaging is an important application domain for tomographic techniques. Currently, X-, γ-, and β-ray tomography, and MRI together make up a third of the medical imaging market.
Tomographic systems are heavy and expensive devices that often require specific infrastructure, like protection against ionizing radiation or magnetic fields or facilities for preparing radiotracers. Nevertheless, tomography plays a crucial role in diagnostic imaging today, and it has a major impact on public health.

Medical imaging and the life sciences thus constitute the primary application domains of tomographic systems. In the life sciences, the scanning transmission electron microscope permits studying fine structures, like those of proteins or viruses, and, at a larger scale, the confocal laser scanning microscope (CLSM) allows visualizing the elements of cells. In neuroscience, PET, fMRI (functional MRI), MEG, and EEG have contributed to the identification and the localization of cortical areas and thus to the understanding of the functioning of the brain. By permitting a tracking of physiological parameters, PET contributes to the study of primary and secondary effects of drugs. Finally, the technique of gene reporters opens up the possibility of genetic studies in vivo. For these studies on drugs or in biotechnology, animal models are necessary, which explains the current development of platforms for tomographic imaging on animals. For small animal studies, optical tomography associated either with luminescence or laser-induced fluorescence is also progressing. In particular, it allows linking in vitro studies on cell cultures and in vivo studies on small animals using the same markers. Generally speaking, through MRI, nuclear and optical imaging, molecular imaging is an active field of research nowadays.

In medicine, X-ray tomography and MRI are anatomic, morphological imaging modalities, while SPECT, PET, fMRI, and magneto- and electroencephalography (MEG and EEG) are employed in functional studies.
X-ray tomography is well suited for examining dense structures, like the bones or the vasculature after injection of a contrast agent. MRI is better suited for visualizing contrast based on the density of hydrogen atoms, as between the white and gray matter in the brain [HAA 99]. SPECT is widely used to study the blood perfusion of the myocardium, and PET is becoming established for the staging of cancer in oncology. For medical applications, optical tomography [MÜL 93] remains for the moment restricted to a few dedicated uses, such as the imaging of the cornea or the skin by optical coherence tomography. Other applications like the exploration of the breast or prostate using laser-induced fluorescence tomography are still under investigation. These aspects are elaborated in the following chapters that are dedicated to the individual modalities.

Tomographic systems are used in all stages of patient care, including the diagnosis, the therapy or surgical planning, the image-based guidance of interventions for minimally invasive surgery, and the therapy follow-up. Digital assistants may help with the image analysis. Moreover, robots use these images for guidance. These digital images are easily transmitted over imaging networks and stored to establish a reference database, to be shared among operators or students. In the future, veritable virtual patients and digital anatomic atlases will be used for training in certain medical operations, or even surgical procedures. Such digital models also facilitate the design of prostheses.

The second application domain of tomographic imaging systems comprises astronomy and the material sciences. These fields often involve large instruments, such as telescopes, synchrotrons, and plasma chambers, and nuclear simulation devices, such as the installations Mégajoule and Airix in France.
Geophysics is another important domain in which, on a large scale, oceans or geological layers are examined and, on a smaller scale, rocks and their properties, such as their porosity. Astrophysics, which relies on telescopes operating at different ranges of wavelengths, is also concerned; it exploits the natural rotation of the stars for tomographic acquisitions [BRA 79, FRA 05].

The last application domain is industry and services. Several industries are interested, like the nuclear, aeronautic, automobile, agriculture, food, pharmaceutical, petroleum, and chemical industries [BAR 00, BEC 97, MAY 94]. Here, a broad range of materials in different phases is concerned. Tomography is first of all applied in design and development departments, for the development of fabrication processes, the characterization and modeling of the behavior of materials, reverse engineering, and the adjustment of graphical models in computer aided design (CAD). Second, tomography is also applied in process control [BEC 97], for instance in mixers, heat exchangers, multi-phase transport, fluidized bed columns, motors, and reactors. It concerns in particular the identification of operating regimes, the determination of residence times and of the kinetics of products, and the validation of thermo-hydraulic models. However, in non-destructive testing, once the types of defects or malfunction are identified on a prototype, one often tries to reduce the number of measurements and thus the cost, and to simplify the systems. Therefore, the use of tomographic systems remains limited in this application domain. Lastly, tomography is applied to security and quality control. This includes for instance production control, such as on-line testing after assembly to check dimensions or to study defects, or the inspection of nuclear waste drums. Security control is also becoming a major market.
This concerns in particular luggage inspection with identification of materials to detect drugs or explosives.

In conclusion, from the middle of the 20th century to the present day, tomography has become a major technology with a large range of applications.

1.6. Bibliography

[ANG 67] ANGER H, PRICE DC, YOST PE, “Transverse section tomography with the gamma camera”, J. Nucl. Med., vol. 8, pp. 314-315, 1967.
[BAI 05] BAILEY DL, TOWNSEND DW, VALK PE, MAISEY MN, Positron Emission Tomography: Basic Science and Clinical Practice, Springer, 2005.
[BAR 00] BARUCHEL J, BUFFIÈRE JY, MAIRE E, MERLE P, PEIX G (Eds.), X-Ray Tomography in Material Science, Hermès, 2000.
[BEC 97] BECK MS, DYAKOWSKI T, WILLIAMS RA, “Process tomography – the state of the art”, Frontiers in Industrial Process Tomography II, Proc. Engineering Foundation Conference, pp. 357-362, 1997.
[BEN 98] BENDRIEM B, TOWNSEND D (Eds.), The Theory and Practice of 3D PET, Kluwer Academic Publishers, Developments in Nuclear Medicine Series, vol. 32, 1998.
[BIM 99] BIMBOT R, BONNIN A, DELOCHE R, LAPEYRE C, Cent Ans après, la Radioactivité, le Rayonnement d’une Découverte, EDP Sciences, 1999.
[BOW 73] BOWLEY AR, TAYLOR CG, CAUSER DA, BARBER DC, KEYES WI, UNDRILL PE, CORFIELD JR, MALLARD JR, “A radioisotope scanner for rectilinear arc, transverse section, and longitudinal section scanning (ASS, the Aberdeen Section Scanner)”, Br. J. Radiol., vol. 46, pp. 262-271, 1973.
[BRA 79] BRACEWELL RN, “Image reconstruction in radio astronomy”, in Herman GT (Ed.), Image Reconstruction from Projections, Springer, pp. 81-104, 1979.
[CEN 08] CENSOR Y, JIANG M, LOUIS AK (Eds.), Mathematical Methods in Biomedical Imaging and Intensity-Modulated Radiation Therapy (IMRT), Edizioni Della Normale, 2008.
[COR 63] CORMACK AM, “Representation of a function by its line integrals, with some radiological applications”, J. Appl. Phys., vol. 34, pp. 2722-2727, 1963.
[COR 64] CORMACK AM, “Representation of a function by its line integrals, with some radiological applications II”, J. Appl. Phys., vol. 35, pp. 195-207, 1964.
[COR 80] CORMACK AM, “Early two-dimensional reconstruction (CT scanning) and recent topics stemming from it, Nobel Lecture, December 8, 1979”, J. Comput. Assist. Tomogr., vol. 4, n° 5, p. 658, 1980.
[DEF 06] DEFRISE M, GULLBERG GT, “Image reconstruction”, Phys. Med. Biol., vol. 51, n° 13, pp. R139-R154, 2006.
[DEH 95] DE HOOP AT, Handbook of Radiation and Scattering of Waves, Academic Press, 1995.
[DER 68] DE ROSIER DJ, KLUG A, “Reconstruction of three-dimensional structures from electron micrographs”, Nature, vol. 217, pp. 130-134, 1968.
[DER 71] DE ROSIER DJ, “The reconstruction of three-dimensional images from electron micrographs”, Contemp. Phys., vol. 12, pp. 437-452, 1971.
[FRA 05] FRAZIN RA, KAMALABADI F, “Rotational tomography for 3D reconstruction of the white-light and EUV corona in the post-SOHO era”, Solar Physics, vol. 228, pp. 219-237, 2005.
[GAL 02] GALLICE J (Ed.), Images de Profondeur, Hermès, 2002.
[GAR 06] GARDNER RJ, Geometric Tomography, Encyclopedia of Mathematics and its Applications, Cambridge University Press, 2006.
[GOL 95] GOLDMAN LW, FOWLKES JB (Eds.), Medical CT and Ultrasound: Current Technology and Applications, Advanced Medical Publishing, 1995.
[HAA 99] HAACKE E, BROWN RW, THOMPSON MR, VENKATESAN R, Magnetic Resonance Imaging. Physical Principles and Sequence Design, John Wiley and Sons, 1999.
[HAD 32] HADAMARD J, Le Problème de Cauchy et les Equations aux Dérivées Partielles Linéaires Hyperboliques, Hermann, 1932.
[HER 79] HERMAN GT (Ed.), Image Reconstruction from Projections: Implementation and Applications, Springer, 1979.
[HOU 73] HOUNSFIELD GN, “Computerized transverse axial scanning (tomography). I. Description of system”, Br. J. Radiol., vol. 46, pp. 1016-1022, 1973.
[HOU 80] HOUNSFIELD GN, “Computed medical imaging, Nobel Lecture, December 8, 1979”, J. Comput. Assist. Tomogr., vol. 4, n° 5, p. 665, 1980.
[HSI 03] HSIEH J, Computed Tomography: Principles, Design, Artifacts, and Recent Advances, SPIE Press, 2003.
[JAS 06] JASZCZAK RJ, “The early years of single photon emission computed tomography (SPECT): an anthology of selected reminiscences”, Phys. Med. Biol., vol. 51, n° 13, pp. R99-R115, 2006.
[JOH 34] JOHN F, “Bestimmung einer Funktion aus ihren Integralen über gewisse Mannigfaltigkeiten”, Mathematische Annalen, vol. 109, pp. 488-520, 1934.
[KAL 00] KALENDER WA, Computed Tomography, Wiley, New York, 2000.
[KAL 06] KALENDER WA, Computed Tomography: Fundamentals, System Technology, Image Quality, Applications, Wiley-VCH, 2006.
[KAK 01] KAK AC, SLANEY M, Principles of Computerized Tomographic Imaging, SIAM, 2001.
[KEY 73] KEYES JW, KAY DB, SIMON W, “Digital reconstruction of three-dimensional radionuclide images”, J. Nucl. Med., vol. 14, pp. 628-629, 1973.
[KUH 63] KUHL DE, EDWARDS RQ, “Image separation radioisotope scanning”, Radiology, vol. 80, pp. 653-661, 1963.
[LAU 73] LAUTERBUR PC, “Image formation by induced local interactions: examples employing nuclear magnetic resonance”, Nature, vol. 242, pp. 190-191, 1973.
[LIA 00] LIANG ZP, LAUTERBUR PC, Principles of Magnetic Resonance Imaging. A Signal Processing Perspective, IEEE Press, Series in Biomedical Imaging, 2000.
[MAL 06] MALLARD JR, “Magnetic resonance imaging - the Aberdeen perspective on developments in the early years”, Phys. Med. Biol., vol. 51, n° 13, pp. R45-R60, 2006.
[MAY 94] MAYINGER F (Ed.), Optical Measurements: Techniques and Applications, Springer, 1994.
[MUE 71] MUEHLLEHNER G, WETZEL R, “Section imaging by computer calculation”, J. Nucl. Med., vol. 12, pp. 76-84, 1971.
[MUE 06] MUEHLLEHNER G, KARP JS, “Positron emission tomography”, Phys. Med. Biol., vol. 51, n° 13, pp. R117-R137, 2006.
[MÜL 93] MÜLLER G, CHANCE B, ALFANO R, et al., Medical Optical Tomography: Functional Imaging and Monitoring, SPIE Optical Engineering Press, vol. IS11, 1993.
[NAT 86] NATTERER F, The Mathematics of Computerized Tomography, John Wiley and Sons, 1986.
[NAT 01] NATTERER F, WÜBBELING F, Mathematical Methods in Image Reconstruction, SIAM, 2001.
[OFT 99] OBSERVATOIRE FRANÇAIS DES TECHNIQUES AVANCÉES, Problèmes Inverses : de l’Expérimentation à la Modélisation, Tec & Doc, Série ARAGO, n° 22, 1999.
[OLD 61] OLDENDORF WH, “Isolated flying detection of radiodensity discontinuities displaying the internal structural pattern of a complex object”, IRE Trans. BME, vol. 8, pp. 68-72, 1961.
[PHE 75] PHELPS ME, HOFFMAN EJ, MULLANI NA, TER-POGOSSIAN MM, “Application of annihilation coincidence detection to transaxial reconstruction tomography”, J. Nucl. Med., vol. 16, pp. 210-224, 1975.
[PLA 98] PLAYOUST B, De l’Atome à la Puce – Le LETI: Trente Ans de Collaborations Recherche Industrie, LIBRIS, 1998.
[RAD 17] RADON J, “Über die Bestimmung von Funktionen durch ihre Integralwerte längs gewisser Mannigfaltigkeiten”, Math.-Phys. Kl. Berichte d. Sächsischen Akademie der Wissenschaften, vol. 69, pp. 262-267, 1917.
[RAD 86] RADON J, “On the determination of functions from their integral values along certain manifolds”, IEEE Trans. Med. Imaging, pp. 170-176, 1986.
[RAN 62] RANKOWITZ S, “Positron scanner for locating brain tumors”, IEEE Trans. Nucl. Sci., vol. 9, pp. 45-49, 1962.
[ROB 85] ROBB RA (Ed.), Three-dimensional Biomedical Imaging, vol. I and II, CRC Press, 1985.
[SHA 89] SHARP PF, GEMMEL HG, SMITH FW (Eds.), Practical Nuclear Medicine, IRL Press at Oxford University Press, Oxford, 1989.
[TER 75] TER-POGOSSIAN MM, PHELPS ME, HOFFMAN EJ, MULLANI NA, “A positron emission transaxial tomograph for nuclear imaging (PETT)”, Radiology, vol. 114, pp. 89-98, 1975.
[WEB 90] WEBB S, From the Watching of the Shadows: the Origins of Radiological Tomography, Adam Hilger, 1990.
[WIL 95] WILLIAMS RA, BECK MS (Eds.), Process Tomography: Principles, Techniques and Applications, Butterworth Heinemann, 1995.