Leveraging Massive Data Analysis for Competitive

... model your data. After we validate our results using analytical techniques, we are ready to deploy the model. This final step is where we will predict, classify, and diagnose on new data. EFRON-SFL will guide you through the entire CRISP-DM cycle to give your organization true market advantages. Con ...

HCLS$$HCLSIG_DemoHomePage_HCLSIG_Demo$$Slides

... HCLSIG is chartered to develop and support the use of Semantic Web technologies and practices to improve collaboration, research and development, and innovation adoption in the Health Care and Life ...

Abstract - Compassion Software Solutions

... database D, a set Σ of conditional functional dependencies (CFDs), the set V of violations of the CFDs in D, and updates ΔD to D, it is to find, with minimum data shipment, changes ΔV to V in response to ΔD. The need for the study is evident since real-life data is often dirty, distributed and frequ ...

Kosakoski_Spatiotemporal Visualization v2

... such as R-Trees and hash trees to further increase the efficiency of the filtering while preserving ease-of-use for the end user. ...

Data Warehousing Multidimensional OLAP

... multidimensional views of data. With multidimensional data stores, the storage utilization may be low if the data set is sparse. Therefore, many MOLAP servers use two levels of data storage representation to handle dense and sparse data-sets. ...

Document

... Seacoos netcdf convention (format, data dictionary of variable and attribute standard names) applied to provider in-situ and model data, screen-scraped data from outside sources. (30 Gigabytes since September 2004) Raster images(.png’s) copied to fileserver with timestamp and projection info collece ...

UK e-Science National e-Science Centre 22 January 2003

... NeSC + IBM Support & Secondment Dr Andrew Knox Data Integration over the Grid OGSA-DAI Software Released ...

BUSINESS ANALYTICS … Creating Data Driven Decision Makers

... * Develop students' quantitative capabilities & technical expertise to create business and social value. The Business Analytics program is designed to prepare students entering the workforce in the rapidly emerging field of business analytics. It aims to sharpen student problem solving and dec ...

Privacy-Preserving Outsourced Association Rule Mining on

... privacy. Our solutions leak less information about the raw data than most existing solutions. In comparison to the only known solution achieving a similar privacy level as our proposed solutions, the performance of our proposed solutions is three to five orders of magnitude higher. Based on our expe ...

Vigillo Joins Geotab Marketplace

... Geotab is a leading global provider of premium quality, end-to-end telematics technology. Geotab's intuitive, full-featured solutions help businesses of all sizes better manage their drivers and vehicles by extracting accurate and actionable intelligence from real-time and historical trips data. Wit ...

doc

... heterogeneous) databases, transform extracted data, and load transformed data into the data warehouse. We also explain the front-end tools used for reporting, querying and analysing data. After that, we describe algorithms for efficient query processing in data warehouses and specify advanta ...

Data Warehousing and Business Intelligence

... The digital revolution implies abundant and rich data easily available as never before. As the volume of data grows, extracting information gets more and more challenging and the only way to deal with this complexity is through computing and expertise in programming environments. The recent explosio ...

DATA DEFINITION LANGUAGE - MUET-CRP

... • Specify the storage structure of each table on disk. • Integrity constraints on various tables. • Security and authorization information of each table. • Specify the structure of each table. • Overall design of the Database. 2) DATA MANIPULATION LANGUAGE (DML) A language that enables users to acce ...

Data Mining and Data Validation

... Progress in digital data acquisition and storage technology has resulted in the growth of huge databases ...

WG2N1944_ISO_IEC_11179-5_Relevance_to_Big_Data

... Use Case: Run multiple Big Data Processing (e.g. batch analytics, interactive queries, stream processing, network analysis) on top of shared horizontally scalable distributive processing and data store resources Use Case: Combine data from heterogeneous Cloud databases and non-Cloud data stores for ...

Business Intelligence Analyst (BIA)

... Design and development of online analytical data processing (OLAP); Creation and maintenance of a data warehouse; Gathering business requirements; Development of data mining solutions; ETL processes & Data modeling; Design and development of reporting applications; Physical DB design; DB performance ...

Interpreting Standard Deviation Interpreting Standard Error

... A small standard deviation means that the values in a statistical data set are close to the mean of the data set, on average. A large standard deviation means that the values in the data set are farther away from the mean, on average. ...

marine research infrastructures as big data producers

... Biodiversity models and Environmental Enrichment of species occurrence data ...

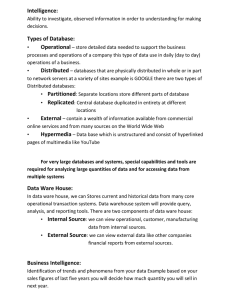

File - Ghulam Hassan

... processes and operations of a company. This type of data is used in the daily (day-to-day) operations of a business. ...

Network Fact Sheet: NetOps Workshop 2006

... Digital: public Internet, and dedicated phone line Digital data arrive via internet TCP/IP/UDP to a dedicated Reftek RTPD server where they, along with data from stations from other regional and national networks, are fed to a dedicated Earthworm Server (v 6.2), which in turn exports the data to a s ...

Big data

Big data is a broad term for data sets so large or complex that traditional data processing applications are inadequate. Challenges include analysis, capture, data curation, search, sharing, storage, transfer, visualization, and information privacy. The term often refers simply to the use of predictive analytics or certain other advanced methods to extract value from data, and seldom to a particular size of data set. Accuracy in big data may lead to more confident decision making, and better decisions can mean greater operational efficiency, cost reduction and reduced risk.

Analysis of data sets can find new correlations, to "spot business trends, prevent diseases, combat crime and so on." Scientists, business executives, practitioners of media and advertising, and governments alike regularly meet difficulties with large data sets in areas including Internet search, finance and business informatics. Scientists encounter limitations in e-Science work, including meteorology, genomics, connectomics, complex physics simulations, and biological and environmental research.

Data sets grow in size in part because they are increasingly being gathered by cheap and numerous information-sensing mobile devices, aerial (remote sensing) platforms, software logs, cameras, microphones, radio-frequency identification (RFID) readers, and wireless sensor networks. The world's technological per-capita capacity to store information has roughly doubled every 40 months since the 1980s; as of 2012, 2.5 exabytes (2.5×10¹⁸ bytes) of data were created every day. The challenge for large enterprises is determining who should own big data initiatives that straddle the entire organization.

Work with big data is necessarily uncommon; most analysis is of "PC size" data, on a desktop PC or notebook that can handle the available data set. Relational database management systems and desktop statistics and visualization packages often have difficulty handling big data. The work instead requires "massively parallel software running on tens, hundreds, or even thousands of servers". What is considered "big data" varies depending on the capabilities of the users and their tools, and expanding capabilities make big data a moving target. Thus, what is considered "big" one year becomes ordinary later. "For some organizations, facing hundreds of gigabytes of data for the first time may trigger a need to reconsider data management options. For others, it may take tens or hundreds of terabytes before data size becomes a significant consideration."
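
To make the "doubled every 40 months" figure concrete, the following is a minimal sketch of the arithmetic behind a fixed doubling period. The function name `growth_factor` and the constant `EXABYTE` are illustrative names introduced here, not taken from any source, and the model assumes perfectly steady exponential growth, which the entry only claims holds roughly.

```python
def growth_factor(months: float, doubling_period_months: float = 40.0) -> float:
    """Factor by which a quantity grows after `months`,
    assuming it doubles once every `doubling_period_months` (hypothetical helper)."""
    return 2.0 ** (months / doubling_period_months)


# One exabyte in bytes, using the decimal (SI) definition.
EXABYTE = 10 ** 18

# The 2012 daily data-creation figure quoted in the entry, converted to bytes.
daily_bytes_2012 = 2.5 * EXABYTE

if __name__ == "__main__":
    # After one doubling period the capacity has doubled.
    print(growth_factor(40))
    # After three doubling periods (120 months, i.e. ten years) it has grown eightfold.
    print(growth_factor(120))
    print(f"{daily_bytes_2012:.1e} bytes per day")
```

Under this assumption, ten years correspond to three doubling periods, which is one way to read the remark that what counts as "big" one year becomes ordinary later.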