Vector Spaces and Linear Transformations

... If H is a subspace of V, then H is closed under the addition and scalar multiplication of V, i.e., for any u, v ∈ H and any scalar c ∈ R, we have u + v ∈ H and cv ∈ H. To verify whether a nonempty subset S of a vector space V is a subspace of V, it is required to check (1) whether the addition and s ...
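
The closure conditions in this snippet can be spot-checked numerically. The sketch below is only an illustration under stated assumptions: the set H = {(x, y, z) : x + y + z = 0}, the sample vectors, and the helper names (`in_H`, `closure_spot_check`) are hypothetical and do not come from the source. Passing a spot check on finitely many samples is not a proof of closure, but a single failure does show that a set is not a subspace.

```python
from fractions import Fraction
from itertools import product

def in_H(v):
    """Membership test for the hypothetical set H = {(x, y, z) : x + y + z = 0}."""
    return sum(v) == 0

def in_shifted(v):
    """Membership test for the shifted plane x + y + z = 1 (not a subspace)."""
    return sum(v) == 1

def add(u, v):
    """Componentwise vector addition."""
    return tuple(a + b for a, b in zip(u, v))

def scale(c, v):
    """Scalar multiplication of a vector."""
    return tuple(c * a for a in v)

def closure_spot_check(member, samples, scalars):
    """Return True if no sampled sum or scalar multiple leaves the set."""
    for u, v in product(samples, repeat=2):
        if not member(add(u, v)):
            return False
    for c, u in product(scalars, samples):
        if not member(scale(c, u)):
            return False
    return True

# Exact rational scalars avoid floating-point noise in the membership test.
scalars = [Fraction(-2), Fraction(0), Fraction(1, 3), Fraction(5)]

h_ok = closure_spot_check(in_H, [(1, -1, 0), (0, 2, -2), (3, 0, -3)], scalars)
shifted_ok = closure_spot_check(in_shifted, [(1, 0, 0), (0, 1, 0)], scalars)

print(h_ok, shifted_ok)
```

The shifted plane fails immediately because (1, 0, 0) + (1, 0, 0) has coordinate sum 2, so the set is not closed under addition.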

AIMS Lecture Notes 2006 4. Gaussian Elimination Peter J. Olver

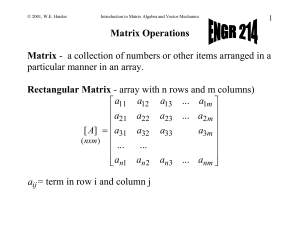

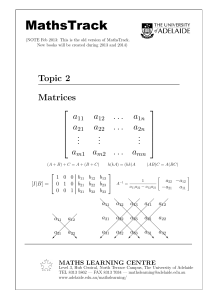

... With the basic matrix arithmetic operations in hand, let us now return to our primary task. The goal is to develop a systematic method for solving linear systems of equations. While we could continue to work directly with the equations, matrices provide a convenient alternative that begins by merely ...
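
As a rough illustration of the systematic method this snippet refers to, the sketch below performs Gaussian elimination on an augmented matrix followed by back substitution. It assumes a square, nonsingular coefficient matrix and swaps rows only when a pivot is zero; the function name `solve` and the sample system are illustrative assumptions, not taken from the lecture notes. Exact rational arithmetic is used so that the result is not affected by rounding.

```python
from fractions import Fraction

def solve(A, b):
    """Solve A x = b for square nonsingular A by Gaussian elimination."""
    n = len(A)
    # Build the augmented matrix [A | b] with exact rational entries.
    M = [[Fraction(x) for x in row] + [Fraction(rhs)] for row, rhs in zip(A, b)]

    # Forward elimination: reduce the coefficient part to upper triangular form.
    for j in range(n):
        pivot_row = next((i for i in range(j, n) if M[i][j] != 0), None)
        if pivot_row is None:
            raise ValueError("matrix is singular")
        M[j], M[pivot_row] = M[pivot_row], M[j]  # swap only if the pivot was zero
        for i in range(j + 1, n):
            factor = M[i][j] / M[j][j]
            M[i] = [a - factor * p for a, p in zip(M[i], M[j])]

    # Back substitution, from the last equation upward.
    x = [Fraction(0)] * n
    for i in reversed(range(n)):
        known = sum(M[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (M[i][n] - known) / M[i][i]
    return x

# Illustrative system:  x + 2y +  z = 2
#                      2x + 6y +  z = 7
#                       x +  y + 4z = 3
solution = solve([[1, 2, 1], [2, 6, 1], [1, 1, 4]], [2, 7, 3])
print(solution)
```

For floating-point data, a production solver would normally choose the largest available pivot in each column (partial pivoting) rather than swapping only on a zero pivot.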

Multiequilibria analysis for a class of collective decision

... Consider the system (7) (or (6)) where each nonlinear function ψ_i(x_i) satisfies the properties (A.1)-(A.4). Let us start by recalling what is known for this system when we vary the parameter π. By construction, the origin is always an equilibrium point for (7) (or (6)). When π < 1, x = 0 is the on ...

MATH1014-LinearAlgeb..

... If u ≠ 0, then Span{u} is a 1-dimensional subspace. These subspaces are lines through the origin. If u and v are linearly independent vectors in R³, then Span{u, v} is a 2-dimensional subspace. These subspaces are planes through the origin. If u, v and w are linearly independent vectors in R ...
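
The dimension of a span equals the rank of the matrix whose rows are the spanning vectors, so the line and plane cases described here can be checked by row reduction. The following is a minimal sketch; the function name `span_dimension` and the sample vectors are assumptions made for illustration.

```python
from fractions import Fraction

def span_dimension(vectors):
    """Dimension of Span{vectors}, computed as the rank via row reduction."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    ncols = len(rows[0]) if rows else 0
    rank = 0
    for col in range(ncols):
        # Find a row at or below position `rank` with a nonzero entry in this column.
        pivot = next((i for i in range(rank, len(rows)) if rows[i][col] != 0), None)
        if pivot is None:
            continue
        rows[rank], rows[pivot] = rows[pivot], rows[rank]
        for i in range(rank + 1, len(rows)):
            factor = rows[i][col] / rows[rank][col]
            rows[i] = [a - factor * p for a, p in zip(rows[i], rows[rank])]
        rank += 1
        if rank == len(rows):
            break
    return rank

line = span_dimension([(1, 2, 3)])                        # one nonzero vector
plane = span_dimension([(1, 0, 0), (0, 1, 0)])            # two independent vectors
still_plane = span_dimension([(1, 0, 0), (0, 1, 0), (1, 1, 0)])  # third is dependent
print(line, plane, still_plane)
```

The third call shows that adding a vector already in the span does not raise the dimension.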

POLYNOMIALS IN ASYMPTOTICALLY FREE RANDOM MATRICES

... random matrix p(X, Y), where X and Y are independent Gaussian and, respectively, Wishart random matrices: p(X, Y) = X + Y (left); p(X, Y) = XY + YX + X² (right). In the left case, the asymptotic eigenvalue distribution is relatively easy to calculate; in the right case, no such solution was kno ...

Row and Column Spaces of Matrices over Residuated Lattices

... (2) For L = {0, 1} the concept of an i-subspace coincides with the concept of a subspace from the theory of Boolean matrices [15]. In fact, closedness under ⊗-multiplication is satisfied for free in the case of Boolean matrices. Note also that for Boolean matrices, V forms a c-subspace iff V = {C | ...

Non-negative matrix factorization

Non-negative matrix factorization (NMF), also called non-negative matrix approximation, is a group of algorithms in multivariate analysis and linear algebra where a matrix V is factorized into (usually) two matrices W and H, with the property that all three matrices have no negative elements. This non-negativity makes the resulting matrices easier to inspect. Also, in applications such as processing of audio spectrograms, non-negativity is inherent to the data being considered. Since the problem is not exactly solvable in general, it is commonly approximated numerically. NMF finds applications in such fields as computer vision, document clustering, chemometrics, audio signal processing and recommender systems.
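
One common way to approximate the factorization V ≈ WH numerically is the multiplicative update rule of Lee and Seung for the Frobenius-norm objective. The text above does not single out any particular algorithm, so the sketch below is an assumption chosen for illustration; the function names, the small sample matrix, the iteration count, and the `eps` safeguard against division by zero are all hypothetical choices.

```python
import random

def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def frob_error(V, W, H):
    """Frobenius norm of V - WH."""
    WH = matmul(W, H)
    return sum((v - wh) ** 2
               for rv, rwh in zip(V, WH) for v, wh in zip(rv, rwh)) ** 0.5

def nmf(V, rank, iters=200, seed=0, eps=1e-9):
    """Approximate V by W H with non-negative factors (multiplicative updates)."""
    rng = random.Random(seed)
    n, m = len(V), len(V[0])
    # Strictly positive random initialisation keeps the updates well defined.
    W = [[rng.random() + 0.1 for _ in range(rank)] for _ in range(n)]
    H = [[rng.random() + 0.1 for _ in range(m)] for _ in range(rank)]
    initial_error = frob_error(V, W, H)
    for _ in range(iters):
        # H <- H * (W^T V) / (W^T W H), elementwise
        Wt = transpose(W)
        num, den = matmul(Wt, V), matmul(matmul(Wt, W), H)
        H = [[h * a / (b + eps) for h, a, b in zip(rh, ra, rb)]
             for rh, ra, rb in zip(H, num, den)]
        # W <- W * (V H^T) / (W H H^T), elementwise
        Ht = transpose(H)
        num, den = matmul(V, Ht), matmul(W, matmul(H, Ht))
        W = [[w * a / (b + eps) for w, a, b in zip(rw, ra, rb)]
             for rw, ra, rb in zip(W, num, den)]
    return W, H, initial_error

V = [[1.0, 2.0, 0.0],
     [2.0, 4.0, 1.0],
     [0.0, 1.0, 3.0],
     [1.0, 3.0, 3.0]]
W, H, initial_error = nmf(V, rank=2)
final_error = frob_error(V, W, H)
print(initial_error, final_error)
```

Because each update multiplies a non-negative entry by a non-negative ratio, W and H stay non-negative throughout, which is the property the definition requires.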