DOI: 10.1515/aucts-2015-0011 ACTA UNIVERSITATIS CIBINIENSIS

... In 1955 John McCarthy gave what became the most widely accepted definition of “artificial intelligence”: "a machine which behaves in a way that could be considered intelligent if it was a man." The artificial neuron (Figure 2) is a greatly simplified copy of the biological neuron and it represents the ...

Oct2011_Computers_Brains_Extra_Mural

... functional information processing architecture. The Hypothalamus is the core of the brain having spontaneously active neurons that “animate” everything else. Other brain regions just layer on various constraints to these basic animating signals. The Thalamus (Diencephalon) seems to have started out ...

Spiking neural networks for vision tasks

... computer vision applications like handwritten digit recognition [1], they were believed to be unsuitable for more complex problems like object detection. It was not until 2012 that A. Krizhevsky et al. [2] proposed a deep convolutional neural network at the ImageNet Large Scale Visual Recognition ...

Document

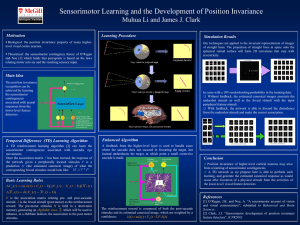

... Sensorimotor Learning and the Development of Position Invariance Muhua Li and James J. Clark ...

presentation

... each other. The network connected to M1 spikes at a higher frequency and is able to trigger SICs (Slow Inward Currents) in both NTs networks. NTs N3 ...

SM-718: Artificial Intelligence and Neural Networks Credits: 4 (2-1-2)

... Resolution, refutation, deduction, theorem proving, inferencing, monotonic and non-monotonic reasoning, Semantic networks, scripts, schemas, frames, conceptual dependency, forward and backward reasoning. UNIT II: Adversarial Search: Game playing techniques like minimax procedure, alpha-beta cut-offs ...

Ch. 11: Machine Learning: Connectionist

... • Parallelism in AI is not new. - spreading activation, etc. • Neural models for AI are not new. - Indeed, they are as old as AI; some subdisciplines, such as computer vision, have continuously thought this way. • Much neural network work makes biologically implausible assumptions about how neurons work ...

PowerPoint

... equivalent in results (output), but they do not arrive at those results in the same way Strong equivalence ...

Practical 6: Ben-Yishai network of visual cortex

... d) Take λ0 = 5, λ1 = 0, ϵ = 0.1. This means that there is uniform recurrent inhibition. Vary the contrast c (range 0.1 to 10) and observe the steady state. You will see three regimes: no output, a rectified cosine, and a cosine plus offset. e) Next, take a small value for ϵ, take λ0 = 2, and vary λ1 ...
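
The regimes described in this practical can be explored with a small rate-model simulation. The sketch below is a rough illustration only: the excerpt does not give the model equations, so the form used here (a ring of threshold-linear units with input c(1 - ϵ + ϵ cos 2θ), recurrent coupling -λ0 + λ1 cos 2(θ - θ'), a firing threshold of 1, and simple Euler integration) is an assumption, as are the function name `ring_steady_state` and its extra parameters.

```python
import math


def ring_steady_state(c, lambda0, lambda1, eps,
                      n=64, threshold=1.0, dt=0.05, steps=4000):
    """Integrate an assumed ring rate model and return (angles, rates).

    Assumed dynamics (not taken from the practical itself):
        dm/dt = -m + max(0, input + recurrent - threshold)
    """
    thetas = [-math.pi / 2 + math.pi * i / n for i in range(n)]
    cos2 = [math.cos(2 * t) for t in thetas]
    sin2 = [math.sin(2 * t) for t in thetas]
    rates = [0.0] * n  # start from a silent network

    for _ in range(steps):
        # cos(2(a - b)) = cos 2a cos 2b + sin 2a sin 2b, so the recurrent
        # sum only needs three population averages per step.
        mean_rate = sum(rates) / n
        cos_part = sum(r * cv for r, cv in zip(rates, cos2)) / n
        sin_part = sum(r * sv for r, sv in zip(rates, sin2)) / n

        new_rates = []
        for i in range(n):
            external = c * (1 - eps + eps * cos2[i])
            recurrent = (-lambda0 * mean_rate
                         + lambda1 * (cos2[i] * cos_part + sin2[i] * sin_part))
            drive = external + recurrent - threshold
            new_rates.append(rates[i] + dt * (-rates[i] + max(0.0, drive)))
        rates = new_rates

    return thetas, rates


if __name__ == "__main__":
    for contrast in (0.1, 1.2, 10.0):
        _, m = ring_steady_state(contrast, lambda0=5, lambda1=0, eps=0.1)
        print(f"c={contrast}: min rate={min(m):.3f}, max rate={max(m):.3f}")
```

Under these assumptions, the three contrasts in the usage loop fall into the three regimes named above: a silent network, a profile clipped to zero away from the preferred orientation, and a cosine riding on a positive offset. A different threshold or input scaling would shift the contrast values at which the regimes change.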

Deep Neural Networks for Anatomical Brain Segmentation

... feature detectors. Recursively, deeper neurons learn to detect new features formed by those detected by the previous layer. The result is a hierarchy of higher and higher level feature detectors. This is natural for images as they can be decomposed into edges, motifs, parts of regions and regions th ...

Artificial Intelligence

... something more powerful is needed. • As has already been indicated, neural networks consist of a number of neurons that are connected together, usually arranged in layers. • A single perceptron can be thought of as a single-layer perceptron. Multilayer perceptrons are capable of modeling more comple ...

Artificial Intelligence, Neural Nets and Applications

... software to do the following: 1) estimate a known function, 2) make projections with time-series data, and ...

nn1-02

... contact with around 10^3 other neurons • STRUCTUREs: feedforward and feedback and self-activation recurrent ...

Week7

... • The learning process is based on the training data from the real world, adjusting a weight vector of inputs to a perceptron. • In other words, the learning process is to begin with random weights, then iteratively apply the perceptron to each training example, modifying the perceptron weights whene ...
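
The procedure outlined in this excerpt (random initial weights, then repeated passes over the training examples with a weight update after each mistake) can be sketched as follows. This is a minimal illustration, not the course's own code: the step activation, the learning rate, the seeded initial range, the bias term, and the names `train_perceptron`, `predict`, and `AND_DATA` are all assumptions.

```python
import random


def predict(weights, bias, inputs):
    """Step-activation perceptron: output 1 if the weighted sum exceeds 0."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(examples, n_inputs, lr=0.1, max_epochs=1000, seed=0):
    """Train on (inputs, target) pairs with targets 0 or 1.

    Returns (weights, bias, epochs_used). Training stops early once a
    full pass over the examples produces no misclassification.
    """
    rng = random.Random(seed)  # seeded so the run is repeatable
    weights = [rng.uniform(-0.5, 0.5) for _ in range(n_inputs)]
    bias = rng.uniform(-0.5, 0.5)

    for epoch in range(1, max_epochs + 1):
        mistakes = 0
        for inputs, target in examples:
            error = target - predict(weights, bias, inputs)
            if error != 0:
                mistakes += 1
                # Move the weights toward the target for this example.
                weights = [w + lr * error * x for w, x in zip(weights, inputs)]
                bias += lr * error
        if mistakes == 0:
            return weights, bias, epoch
    return weights, bias, max_epochs


# Logical AND is linearly separable, so the rule can fit it exactly.
AND_DATA = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]

if __name__ == "__main__":
    w, b, epochs = train_perceptron(AND_DATA, n_inputs=2)
    print("epochs used:", epochs)
    print("predictions:", [predict(w, b, x) for x, _ in AND_DATA])
```

The early exit only triggers for linearly separable data such as AND; for data that is not separable (XOR, for example) the loop simply runs until `max_epochs`.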

Intelligent Systems - Teaching-WIKI

... weight Wi in the network, and for each training suite in the training set. • One such cycle through all weights is called an epoch of training. • Eventually, usually after many epochs, the weight changes converge towards zero and the training process terminates. • The perceptron learning process alwa ...

IV3515241527

... Cascade Forward Back propagation Network The cascade forward back propagation model is similar to feed-forward networks, but includes a weight connection from the input to each layer and from each layer to the successive layers. While two-layer feedforward networks can potentially learn virtually any in ...
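
The connectivity described here, where every layer receives the raw input as well as the outputs of all earlier layers, can be illustrated with a short forward pass. This is only a sketch of the wiring under stated assumptions: the tanh activation, the list-based weight layout, and the name `cascade_forward` are illustrative choices that do not come from the excerpt, and training by back propagation is not shown.

```python
import math


def cascade_forward(inputs, layers):
    """Forward pass through a cascade-forward network.

    `layers` is a list of (weights, biases) pairs. Each row of `weights`
    holds one unit's weights over the concatenation of the network input
    and the outputs of every earlier layer.
    """
    features = list(inputs)  # grows as each layer's outputs are appended
    outputs = []
    for weights, biases in layers:
        outputs = [
            math.tanh(sum(w * f for w, f in zip(row, features)) + b)
            for row, b in zip(weights, biases)
        ]
        features = features + outputs
    return outputs


if __name__ == "__main__":
    # 2 inputs -> 3 hidden units -> 1 output unit.
    hidden = ([[0.0, 0.0]] * 3, [0.0, 0.0, 0.0])
    # The output row has 2 + 3 weights: the first two connect directly
    # to the network input, the last three to the hidden units.
    output = ([[1.0, -1.0, 0.0, 0.0, 0.0]], [0.0])
    print(cascade_forward([0.5, 0.2], [hidden, output]))
```

In the usage example the hidden layer is switched off (all weights zero), so the output depends only on the direct input-to-output connection, which is the link a plain feed-forward network would lack.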

CLASSIFICATION AND CLUSTERING MEDICAL DATASETS BY

... elements (nodes) that are tied together with weighted connections (links). Learning in biological systems involves adjustments to the synaptic connections that exist between the neurons. This is true for ANN as well. Learning typically occurs by example through training, or exposure to a set of inpu ...

Appendix 4 Mathematical properties of the state-action

... The heart of the ANNABELL model is the state-action association system, which is responsible for all decision processes, as described in Sect. “Global organization of the model”. This system is implemented as a neural network (state-action association neural network, abbreviated as SAANN) with input ...

Questions and Answers

... 1. What are the most prominent strengths and weaknesses of neural networks, as compared to other machine learning techniques (for example logistic regression, random forests, support vector machines)? A: Neural networks are typically more general than any of those. For example SVM can (usually) be m ...

Cognitive Psychology

... neurons behave. Use these models to try and better understand cognitive processing in the brain. ...

Capacity Analysis of Attractor Neural Networks with Binary Neurons and Discrete Synapses

... experiments, the attractor states of neural network dynamics are considered to be the underlying mechanism of memory storage in neural networks. For the simplest network with binary neurons and standard asynchronous dynamics, we show that the dynamics cannot be stable if all synapses are excitatory. ...

Improving DCNN Performance with Sparse Category

... 2015], GoogLe-BN [Ioffe and Szegedy, 2015]) usually requires CPU/GPU clusters and ultra-large training data, and will be out of reach of small research groups with a limited research budget. In this paper, we propose a DCNN performance improvement method that does not need increased network complexi ...

Slide ()

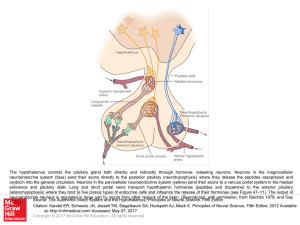

... neuroendocrine system (blue) send their axons directly to the posterior pituitary (neurohypophysis) where they release the peptides vasopressin and oxytocin into the general circulation. Neurons in the parvicellular neuroendocrine system (yellow) send their axons to a venous portal system in the med ...

Slide ()

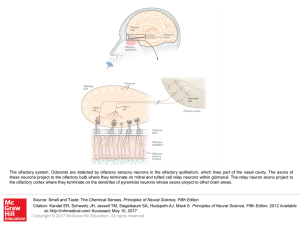

... The olfactory system. Odorants are detected by olfactory sensory neurons in the olfactory epithelium, which lines part of the nasal cavity. The axons of these neurons project to the olfactory bulb where they terminate on mitral and tufted cell relay neurons within glomeruli. The relay neuron axons p ...

Slide ()

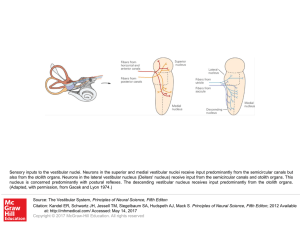

... Sensory inputs to the vestibular nuclei. Neurons in the superior and medial vestibular nuclei receive input predominantly from the semicircular canals but also from the otolith organs. Neurons in the lateral vestibular nucleus (Deiters' nucleus) receive input from the semicircular canals and otolith ...
