Artificial Neural Networks

... – The first layer is the input and the last layer is the output. – If there is more than one hidden layer, we call them “deep” neural networks. They compute a series of transformations that change the similarities between cases. – The activities of the neurons in each layer are a non-linear function ...

Slide 1

... Responses in excitatory and inhibitory networks of firing-rate neurons. A. Response of a purely excitatory recurrent network to a square step of input (hE). The blue curve is the response without excitatory feedback. Adding recurrent excitation increases the response but makes it rise and fall more ...

No slide title - Institut für Grundlagen der Informationsverarbeitung

... neuroscience (memory, top-level-control) • Discussion of work in related EU-research projects (in which students could become involved) ...

An Application Interface Design for Backpropagation Artificial Neural

... of which is the training and the other is the testing. It uses samples to establish the relationship events, and decides to solve problems that will occur after learning the relationships and comments. ANN is formed in three layers, called an input layer, an output layer and one or more hidden layer ...

nips2.frame - /marty/papers/drotdil

... For all training sets, the receptive fields of all units contained regions of all-max weights and all-min weights within the direction-speed subspace at each x-y point. For comparison, if the model is trained on uncorrelated direction noise (a different random local direction at each x-y point), thi ...

Focusing on connections and signaling mechanisms to

... My thoughts about the science of learning start from the point of view that the engram, the result of learning, must consist of some reasonably specific set of changes in neural connections corresponding to the thing learned. In the area of my own research, the development and plasticity of the cent ...

Determining the Efficient Structure of Feed

... Neural Networks are biologically inspired and mimic the human brain. A neural network consists of neurons which are interconnected with connecting links, where each link has a weight that is multiplied by the signal transmitted in the network. The output of each neuron is determined by using an activa ...

An Evolutionary Framework for Replicating Neurophysiological Data

... matched, thus the average firing rate correlation was about 0.47 per neuron. The lowest observed fitness was 104.7, resulting in a correlation of about 0.46 per neuron (strong correlations by experimental standards). At the start of each evolutionary run, the average maximum fitness score was 84.57 ...

read more

... techniques, which allow us to instantaneously perturb neural activity and record the response. We do not yet have a theoretical framework to adequately describe the neural response to such optogenetic perturbations, nor do we understand how neural networks can perform computations amid a background ...

Project #2

... been computed as follows: (1) First, ten real-valued features have been computed for each cell nucleus; namely, (a) radius (mean of distances from center to points on the perimeter), (b) texture (standard deviation of gray-scale values), (c) perimeter, (d) area, (e) smoothness (local variation in ra ...

Analysis of Back Propagation of Neural Network Method in the

... learning mechanism. Information is stored in the weight matrix of a neural network. Learning is the determination of the weights. All learning methods used for adaptive neural networks can be classified into two major categories: supervised learning and unsupervised learning. Supervised learning inc ...

Document

... Source: ‘Chronic neural recordings using silicon microelectrode arrays electrochemically deposited with a poly(3,4-ethylenedioxythiophene) (PEDOT) film’, K. Ludwig, J. Neural Eng. 3. 2006, 59-70. ...

Document

... the unit produces an output value of 1 – If it does not exceed the threshold, it produces an output value of 0 ...
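
The threshold rule in this excerpt can be illustrated with a minimal sketch. The excerpt is truncated before it says what is compared with the threshold, so the weighted input sum used here is an assumption, and the names `threshold_unit`, `and_weights`, and `and_threshold` are hypothetical.

```python
def threshold_unit(inputs, weights, threshold):
    """Return 1 if the weighted input sum exceeds the threshold, otherwise 0.

    Assumption: the quantity compared with the threshold is the weighted
    sum of the inputs; the excerpt does not state this explicitly.
    """
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total > threshold else 0


# Hypothetical example: weights and threshold chosen so the unit behaves
# like a logical AND over two binary inputs.
and_weights = [1.0, 1.0]
and_threshold = 1.5
and_table = [
    threshold_unit([a, b], and_weights, and_threshold)
    for a in (0, 1)
    for b in (0, 1)
]
```

Only the input pair (1, 1) pushes the sum above 1.5, so the unit fires for that case alone. A sum exactly equal to the threshold yields 0, matching the "does not exceed" wording.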

Self Organizing Maps: Fundamentals

... classifications of the training data without external help. To do this we have to assume that class membership is broadly defined by the input patterns sharing common features, and that the network will be able to identify those features across the range of input patterns. One particularly interesti ...

Document

... – Integrate the absolute value of the synaptic activity over 50msec – Convolve with a hemodynamic response function (e.g., Boynton model) – Downsample every TR to get fMRI data MEG – Local MEG signal is proportional to the difference between the excitatory and inhibitory synaptic activity on the exc ...
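
The three fMRI steps listed in this excerpt (integrate, convolve, downsample) might be sketched as follows. This is an illustration under stated assumptions, not the source's implementation: the non-overlapping 50 msec windows, the gamma-shaped response with placeholder parameters `n=3`, `tau=1.25`, `delay=2.5`, the 2 s TR, and all function names are hypothetical choices.

```python
import math


def integrate_abs(activity, dt, window=0.05):
    """Integrate |activity| over consecutive non-overlapping windows (50 msec by default)."""
    step = max(1, round(window / dt))
    return [
        sum(abs(v) for v in activity[i:i + step]) * dt
        for i in range(0, len(activity), step)
    ]


def gamma_hrf(t, n=3, tau=1.25, delay=2.5):
    """Gamma-shaped hemodynamic response; the parameter values are assumed placeholders."""
    s = t - delay
    if s < 0:
        return 0.0
    return (s / tau) ** (n - 1) * math.exp(-s / tau) / (tau * math.factorial(n - 1))


def simulate_bold(activity, dt, tr=2.0, window=0.05, hrf_length=30.0):
    """Integrate synaptic activity, convolve with the HRF, and keep one sample per TR."""
    integrated = integrate_abs(activity, dt, window)
    kernel = [gamma_hrf(k * window) * window for k in range(int(hrf_length / window))]
    # Causal discrete convolution of the integrated activity with the HRF kernel.
    convolved = [
        sum(kernel[k] * integrated[i - k] for k in range(min(i + 1, len(kernel))))
        for i in range(len(integrated))
    ]
    step = max(1, round(tr / window))
    return convolved[::step]


# Hypothetical input: 20 s of activity sampled at 1 kHz, active from 1 s to 3 s.
dt = 0.001
activity = [1.0 if 1.0 <= i * dt < 3.0 else 0.0 for i in range(20000)]
bold = simulate_bold(activity, dt)
```

With these settings the result holds one value per 2 s TR, and the simulated response peaks several seconds after the burst of activity because of the delayed, smoothed kernel.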

PowerPoint Presentation - The City College of New York

... Dr. Maria Uriarte, Columbia University Tropical Forest responses to climate variability and human land use: From stand dynamics to ecosystem services ...

Topic 4

... of two or more layers of artificial neurons or nodes, with each node in a layer connected to every node in the following layer Signals usually flow from the input layer, which is directly subjected to an input pattern, across one or more hidden layers towards the output layer. ICT619 ...

Lecture 14

... of the input patterns. Let’s now look at how the training works. The network is first initialised by setting up all its weights to be small random numbers - say between -1 and +1. Next, the input pattern is applied and the output calculated (this is called the forward pass). The calculation gives an ...
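
The initialisation and forward pass described in this excerpt can be sketched roughly as below. The layer sizes, the sigmoid activation, the absence of bias terms, and the names `init_weights` and `forward_pass` are assumptions made for illustration; the excerpt specifies only small random weights between -1 and +1 and a forward calculation of the output.

```python
import math
import random


def init_weights(n_inputs, n_outputs, rng):
    """One weight per (output, input) pair, drawn uniformly from [-1, +1]."""
    return [
        [rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
        for _ in range(n_outputs)
    ]


def sigmoid(z):
    """Logistic activation (an assumed choice; the excerpt does not name one)."""
    return 1.0 / (1.0 + math.exp(-z))


def forward_pass(pattern, layers):
    """Propagate an input pattern through each weight matrix in turn."""
    activations = list(pattern)
    for weights in layers:
        activations = [
            sigmoid(sum(w * a for w, a in zip(row, activations)))
            for row in weights
        ]
    return activations


# Hypothetical 3-4-2 network with a fixed seed so the run is repeatable.
rng = random.Random(0)
layers = [init_weights(3, 4, rng), init_weights(4, 2, rng)]
output = forward_pass([0.5, -0.2, 0.1], layers)
```

The output of an untrained network like this is arbitrary; training would then compare it with the target and adjust the weights.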

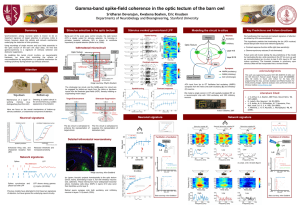

poster - Stanford University

... Future work will involve testing the key predictions of the model by inactivating the Ipc, while recording in the OT (in-vivo), as well as microstimulating Ipc (in-vitro) to test if ACh input to OT can induce synchrony. The transient increase in synchrony upon stimulus offset will be incorporated in ...

Getting on your Nerves

... Therefore, each synapse can be adjusted during a process of learning to produce the correct output. This allows for procedural learning, where each time an action is performed, it becomes somewhat more accurate since the "right synapses" are contributing to the response. ...

Damien Lescal , Jean Rouat, and Stéphane Molotchnikoff

... neural network to identify highly textured regions of an image. In other words, highly textured portions of an image would be isolated and the most homogeneous segment would be identified. Using this neural network, it would be possible to identify textured objects (natural objects) and non-texture ...

What are Neural Networks? - Teaching-WIKI

... weight Wi in the network, and for each training sample in the training set. • One such cycle through all weights is called an epoch of training. • Eventually, usually after many epochs, the weight changes converge towards zero and the training process terminates. • The perceptron learning process alwa ...
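
The epoch-based training loop in this excerpt can be sketched as follows. The excerpt is truncated before it states the update formula, so the classic perceptron rule (weight change = learning rate × error × input) is an assumption here, as are the bias term, the stopping test, and the names `train_perceptron` and `and_samples`.

```python
def train_perceptron(samples, learning_rate=1.0, max_epochs=100):
    """Train a single perceptron; stop after the first epoch with no weight change.

    Assumption: the classic perceptron update rule is used, which the
    excerpt does not spell out.
    """
    n_inputs = len(samples[0][0])
    weights = [0.0] * n_inputs
    bias = 0.0
    for epoch in range(1, max_epochs + 1):
        total_change = 0.0
        for inputs, target in samples:
            activation = sum(w * x for w, x in zip(weights, inputs)) + bias
            output = 1 if activation > 0 else 0
            error = target - output
            # Update every weight Wi for this training sample.
            for i, x in enumerate(inputs):
                delta = learning_rate * error * x
                weights[i] += delta
                total_change += abs(delta)
            bias += learning_rate * error
            total_change += abs(learning_rate * error)
        if total_change == 0.0:
            return weights, bias, epoch
    return weights, bias, max_epochs


# Hypothetical training set: logical AND, which is linearly separable.
and_samples = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
weights, bias, epochs = train_perceptron(and_samples)
predictions = [
    1 if sum(w * x for w, x in zip(weights, inputs)) + bias > 0 else 0
    for inputs, _ in and_samples
]
```

Training stops once a full pass over the data leaves every weight unchanged, which is the point at which the weight changes have reached zero.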

Document

... This chapter covers the synthesis of RBF neural networks aimed at solving classification tasks. A classifier, in general, is a function that decides, on the basis of an object's attribute vector, which class the object belongs to (1). In binary classification the class identifiers ca ...

Rat Brain Robot

... Controlled by neurons from a rat’s brain A robot consisting of two wheels with a sonar sensor ...
