Introduction to Randomized Algorithms.

... uniformly at random. We only consider deterministic algorithms that does not probe the same box twice. By symmetry we can assume that the probe order for the deterministic algorithm is 1 through n. B Yao’s in-equality, we have Min A έ A E[C(A; Ip)] =∑i/n = (n+1)/2 <= max I έ I E[C(I;Aq)] Therefore a ...

... uniformly at random. We only consider deterministic algorithms that does not probe the same box twice. By symmetry we can assume that the probe order for the deterministic algorithm is 1 through n. B Yao’s in-equality, we have Min A έ A E[C(A; Ip)] =∑i/n = (n+1)/2 <= max I έ I E[C(I;Aq)] Therefore a ...

Variance Reduction for Stable Feature Selection

... Class label: a weighted sum of all feature values with optimal feature weight vector ...

... Class label: a weighted sum of all feature values with optimal feature weight vector ...

Sentiment Analysis of Movie Ratings System

... et al on “Extracting Aspects and Mining Opinions in Product Reviews using Supervised Learning Algorithm” [2] discusses phrase- level opinion mining which performs finer grained analysis and directly look at the opinion in the online reviews which is used to extract important aspects of an item and t ...

... et al on “Extracting Aspects and Mining Opinions in Product Reviews using Supervised Learning Algorithm” [2] discusses phrase- level opinion mining which performs finer grained analysis and directly look at the opinion in the online reviews which is used to extract important aspects of an item and t ...

Multi - Variant Spatial Outlier Approach to

... be used to detect less developed sites in giveen region. We have used multiple non-spatial attributes of many spatially distributed sites. We have applied two veryy popular mean and median based spatial outlier detection technnique on a real data set of twenty one sites in the state of Haryanna. Res ...

... be used to detect less developed sites in giveen region. We have used multiple non-spatial attributes of many spatially distributed sites. We have applied two veryy popular mean and median based spatial outlier detection technnique on a real data set of twenty one sites in the state of Haryanna. Res ...

IJARCCE 20

... from entangled or uncertain information and can be Customer Development: This phase aims to grow the size utilized to concentrate patterns and distinguish patterns of the customer transactions with the organization. Basics that are too immense to possibly be seen by either people of customer develop ...

... from entangled or uncertain information and can be Customer Development: This phase aims to grow the size utilized to concentrate patterns and distinguish patterns of the customer transactions with the organization. Basics that are too immense to possibly be seen by either people of customer develop ...

A Review of Feature Selection Algorithms for Data Mining Techniques

... namely Filter, Wrapper and Hybrid Method [6]. Filter Method selects the feature subset on the basis of intrinsic characteristics of the data, independent of mining algorithm. It can be applied to data with high dimensionality. The advantages of Filter method are its generality and high computation e ...

... namely Filter, Wrapper and Hybrid Method [6]. Filter Method selects the feature subset on the basis of intrinsic characteristics of the data, independent of mining algorithm. It can be applied to data with high dimensionality. The advantages of Filter method are its generality and high computation e ...

Classification Based On Association Rule Mining Technique

... then is used to predict the class of objects whose class label is not known. The model is trained so that it can distinguish different data classes. The training data is having data objects whose class label is known in advance. Classification analysis is the organization of data in given classes. A ...

... then is used to predict the class of objects whose class label is not known. The model is trained so that it can distinguish different data classes. The training data is having data objects whose class label is known in advance. Classification analysis is the organization of data in given classes. A ...

Cost-Efficient Mining Techniques for Data Streams

... In this section, we present the application of the algorithm output granularity to light weight K-Nearest-Neighbors classification LWClass. The algorithm starts with determining the number of instances according to the available space in the main memory. When a new classified data element arrives, t ...

... In this section, we present the application of the algorithm output granularity to light weight K-Nearest-Neighbors classification LWClass. The algorithm starts with determining the number of instances according to the available space in the main memory. When a new classified data element arrives, t ...

A Survey: Outlier Detection in Streaming Data Using

... compared with real and synthetic data sets. The proposed Incremental K Means variant is faster than the already quite fast Scalable K means and finds solution of comparable quality. The K means variants are compared with respect to quality of speed and results. The proposed algorithms can be used to ...

... compared with real and synthetic data sets. The proposed Incremental K Means variant is faster than the already quite fast Scalable K means and finds solution of comparable quality. The K means variants are compared with respect to quality of speed and results. The proposed algorithms can be used to ...

Cluster

... • global: represents each cluster by a prototype and assigns a pattern to a cluster with most similar prototype. (e.g. K-means, Self Organizing Maps) • Many other techniques in literature such as density estimation and mixture decomposition. • From [Jain & Dubes] Algorithms for Clustering Data, 1988 ...

... • global: represents each cluster by a prototype and assigns a pattern to a cluster with most similar prototype. (e.g. K-means, Self Organizing Maps) • Many other techniques in literature such as density estimation and mixture decomposition. • From [Jain & Dubes] Algorithms for Clustering Data, 1988 ...

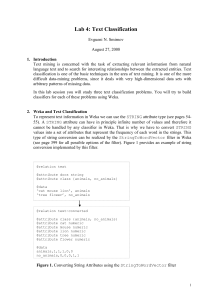

DM3: Input: Concepts, instances, attributes

... What’s in an example? Instance: specific type of example Thing to be classified, associated, or clustered Individual, independent example of target concept Characterized by a predetermined set of attributes ...

... What’s in an example? Instance: specific type of example Thing to be classified, associated, or clustered Individual, independent example of target concept Characterized by a predetermined set of attributes ...

DM3: Input: Concepts, instances, attributes

... What’s in an example? Instance: specific type of example Thing to be classified, associated, or clustered Individual, independent example of target concept Characterized by a predetermined set of attributes ...

... What’s in an example? Instance: specific type of example Thing to be classified, associated, or clustered Individual, independent example of target concept Characterized by a predetermined set of attributes ...

PEBL: Web Page Classification without Negative

... labeled data and unlabeled data and the other for controlling the quantity of mixture components corresponding to one class [16]. Another semisupervised learning occurs when it is combined with SVMs to form transductive SVM [17]. With careful parameter setting, both of these works show good results ...

... labeled data and unlabeled data and the other for controlling the quantity of mixture components corresponding to one class [16]. Another semisupervised learning occurs when it is combined with SVMs to form transductive SVM [17]. With careful parameter setting, both of these works show good results ...

Genetic Algorithms for Multi-Criterion Classification and Clustering

... divided amongst the clusters. Figure 1 shows encoding of the clustering {{O1, O2, O4}, {O3, O5, O6}} by group number and matrix representations, respectively. Group-number encoding is based on the first encoding scheme and represents a clustering of n objects as a string of n integers where the ith ...

... divided amongst the clusters. Figure 1 shows encoding of the clustering {{O1, O2, O4}, {O3, O5, O6}} by group number and matrix representations, respectively. Group-number encoding is based on the first encoding scheme and represents a clustering of n objects as a string of n integers where the ith ...

K-nearest neighbors algorithm

In pattern recognition, the k-Nearest Neighbors algorithm (or k-NN for short) is a non-parametric method used for classification and regression. In both cases, the input consists of the k closest training examples in the feature space. The output depends on whether k-NN is used for classification or regression: In k-NN classification, the output is a class membership. An object is classified by a majority vote of its neighbors, with the object being assigned to the class most common among its k nearest neighbors (k is a positive integer, typically small). If k = 1, then the object is simply assigned to the class of that single nearest neighbor. In k-NN regression, the output is the property value for the object. This value is the average of the values of its k nearest neighbors.k-NN is a type of instance-based learning, or lazy learning, where the function is only approximated locally and all computation is deferred until classification. The k-NN algorithm is among the simplest of all machine learning algorithms.Both for classification and regression, it can be useful to assign weight to the contributions of the neighbors, so that the nearer neighbors contribute more to the average than the more distant ones. For example, a common weighting scheme consists in giving each neighbor a weight of 1/d, where d is the distance to the neighbor.The neighbors are taken from a set of objects for which the class (for k-NN classification) or the object property value (for k-NN regression) is known. This can be thought of as the training set for the algorithm, though no explicit training step is required.A shortcoming of the k-NN algorithm is that it is sensitive to the local structure of the data. The algorithm has nothing to do with and is not to be confused with k-means, another popular machine learning technique.