Lecture 1: Overview

... In a classification problem, we do not necessarily need density estimation. Generative model: cares about the class density function. Discriminative model: cares about the decision boundary. Example: classifying belt fish and carp. Looking at the length/width ratio is enough. Why should we care how many teeth ea ...
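
The contrast can be sketched on the single length/width feature. This is a minimal illustration, not the lecture's method. The measurements, the Gaussian form of the class densities, the equal priors, and the midpoint threshold are all assumptions chosen for the example.

```python
import math
from statistics import mean, pstdev

# Hypothetical length/width ratios (illustrative values, not real measurements).
belt_fish = [12.0, 14.5, 13.2, 15.1]
carp = [2.8, 3.1, 2.5, 3.4]

def gaussian_pdf(x, mu, sigma):
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / (sigma * math.sqrt(2 * math.pi))

# Generative view: estimate a class density p(ratio | class) for each class
# (assumed Gaussian), then pick the class with the larger density (equal priors assumed).
params = {
    "belt fish": (mean(belt_fish), pstdev(belt_fish)),
    "carp": (mean(carp), pstdev(carp)),
}

def classify_generative(ratio):
    return max(params, key=lambda c: gaussian_pdf(ratio, *params[c]))

# Discriminative view: learn only the boundary. Here, a single threshold
# halfway between the closest examples of the two classes.
threshold = (min(belt_fish) + max(carp)) / 2

def classify_discriminative(ratio):
    return "belt fish" if ratio > threshold else "carp"
```

Both functions agree on clear-cut fish, but only the generative one had to model how ratios are distributed within each class; the discriminative one stores a single number.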

IOSR Journal of Computer Engineering (IOSR-JCE)

... Using Ensemble Methods for Improving Classification of the KDD CUP ’99 Data Set 3.1: Bootstrap aggregating (bagging). Bagging is a machine learning ensemble algorithm used to improve the accuracy of the algorithms used in statistical classification and regression experiments. Most importantly, it re ...
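
A minimal sketch of bagging, assuming a hypothetical one-dimensional decision stump (`train_stump`) as the base learner; any classifier could take its place. Each model is trained on a bootstrap sample drawn with replacement from the training set, and the ensemble predicts by majority vote.

```python
import random
from collections import Counter

def train_stump(data):
    """Hypothetical base learner: a 1-D threshold rule fitted to (x, label) pairs."""
    best = None
    for t, _ in data:
        for left, right in ((0, 1), (1, 0)):
            errors = sum((left if x <= t else right) != y for x, y in data)
            if best is None or errors < best[0]:
                best = (errors, t, left, right)
    _, t, left, right = best
    return lambda x: left if x <= t else right

def bagging(data, n_models=25, seed=0):
    rng = random.Random(seed)
    models = []
    for _ in range(n_models):
        # Bootstrap sample: draw len(data) examples with replacement.
        sample = [rng.choice(data) for _ in data]
        models.append(train_stump(sample))

    def predict(x):
        # Aggregate by majority vote (for regression, average the outputs instead).
        votes = Counter(m(x) for m in models)
        return votes.most_common(1)[0][0]

    return predict
```

Because each stump sees a slightly different resample, their individual errors partly cancel in the vote, which is the variance reduction bagging relies on.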

slides - Charu Aggarwal

...
• A total of 12,313 English words are extracted from the paper titles.
• We segment the data into 10 synthetic time periods.
• DBLP: a set of authors and their collaborations.
• Each node is an author and each edge is a collaboration.
• A total of 194 English words in the domain of computer science a ...

dengue detection and prediction system using data mining

... supply features and get a prediction on a possible heart attack. Oona Frunza et al. [6] present a machine learning approach that identifies semantic relations between treatments and diseases and focuses on three semantic relations (prevent, cure and side effect). Later, features were extracted from u ...

Document

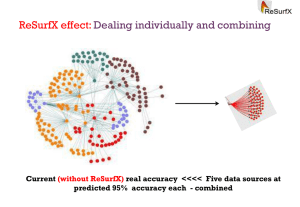

... P(w|X;M) uses information X to predict who will respond. Order predictions from the most likely to the least likely: P(w|X1;M) > P(w|X2;M) > ... > P(w|Xk;M). The ideal model should put the 20% who will reply in front, so that the number of replies Y(Xj) grows to Y0 = 0.2*N for j = 1 .. Y0. In the id ...
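
The ranking argument can be sketched as a cumulative-gains computation. This is a minimal illustration; the function names and the 0/1 `replied` encoding are assumptions, and `scores` stands in for the model outputs P(w|Xj;M).

```python
def cumulative_gains(scores, replied):
    """Return Y(X_j): the number of replies among the top-j ranked prospects, j = 1..N.

    scores  -- model outputs P(w|X_j; M); higher means more likely to respond
    replied -- 1 if prospect j actually replied, else 0
    """
    order = sorted(range(len(scores)), key=lambda j: scores[j], reverse=True)
    gains, total = [], 0
    for j in order:
        total += replied[j]
        gains.append(total)
    return gains

def ideal_gains(replied):
    # An ideal model ranks all Y0 responders first, so Y grows by one per step up to Y0.
    y0 = sum(replied)
    return [min(j, y0) for j in range(1, len(replied) + 1)]
```

Plotting both lists against j gives the gains chart: the closer the model's curve is to the ideal one, the better it ranks the responders.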

Predictive Analytics - Regression and Classification

...
• It constructs a separating hyperplane in that space, one which maximizes the margin between the two data sets.
• To calculate the margin, two parallel hyperplanes are constructed, one on each side of the separating hyperplane.
• A good separation is achieved by the hyperplane that has the largest ...
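
For a separating hyperplane w·x + b = 0 scaled so that the two parallel hyperplanes are w·x + b = +1 and w·x + b = -1, the margin between them is 2/||w||. The sketch below only evaluates a given (w, b); it does not solve the SVM optimization, and the function names are illustrative.

```python
import math

def margin_width(w):
    # Distance between the parallel hyperplanes w.x + b = +1 and w.x + b = -1.
    return 2.0 / math.sqrt(sum(wi * wi for wi in w))

def satisfies_margin(w, b, points, labels):
    # Every training point must lie on or outside its class's parallel hyperplane:
    # y_i * (w . x_i + b) >= 1, with labels y_i in {+1, -1}.
    return all(
        y * (sum(wi * xi for wi, xi in zip(w, x)) + b) >= 1
        for x, y in zip(points, labels)
    )
```

Maximizing 2/||w|| subject to these constraints is what the max-margin hyperplane does; shrinking w to widen the margin eventually violates a constraint.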

Locality-Sensitive Hashing Scheme Based on p-Stable

... large number of dimensions, the so-called “curse of dimensionality”. Despite decades of intensive effort, the current solutions are not entirely satisfactory; in fact, for large enough d, in theory or in practice, they often provide little improvement over a linear algorithm which compares a query t ...
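
The linear baseline the excerpt refers to compares the query with every point. A minimal sketch follows, together with the standard 2-stable (Gaussian) hash h(v) = floor((a·v + b)/r) used in p-stable LSH, where a has i.i.d. Gaussian entries and b is drawn uniformly from [0, r]. The function names and the bucket width `r` are illustrative choices.

```python
import math
import random

def linear_scan_nn(query, points):
    """Exact nearest neighbor by comparing the query with every point: O(n * d) per query."""
    best_index, best_dist = None, math.inf
    for i, p in enumerate(points):
        d = math.dist(query, p)  # Euclidean distance (Python 3.8+)
        if d < best_dist:
            best_index, best_dist = i, d
    return best_index, best_dist

def make_pstable_hash(dim, r, rng):
    # 2-stable projection: h(v) = floor((a . v + b) / r), a ~ N(0, I), b ~ Uniform[0, r].
    a = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    b = rng.uniform(0.0, r)
    return lambda v: math.floor((sum(ai * vi for ai, vi in zip(a, v)) + b) / r)
```

Nearby points tend to fall into the same bucket of such a hash, so an LSH index only scans the points sharing a bucket with the query instead of all n.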

Intro to Remote Sensing

... selected. These initial values can influence the outcome of the classification. In general, both methods first assign arbitrary initial cluster values. The second step classifies each pixel to the closest cluster. In the third step the new cluster mean vectors are calculated based on all the pixels ...
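
The three steps can be sketched as a basic k-means loop over pixel vectors. This is a minimal illustration, assuming each pixel is a tuple of band values and that initial means are k pixels picked at random; ISODATA's splitting and merging of clusters is not shown.

```python
import math
import random

def kmeans_pixels(pixels, k, n_iter=20, seed=0):
    """pixels: list of band-value tuples (one tuple per pixel)."""
    rng = random.Random(seed)
    # Step 1: arbitrary initial cluster means (here: k pixels chosen at random).
    means = rng.sample(pixels, k)
    for _ in range(n_iter):
        # Step 2: assign each pixel to the closest cluster mean.
        labels = [min(range(k), key=lambda c: math.dist(p, means[c])) for p in pixels]
        # Step 3: recompute each mean from the pixels assigned to it.
        new_means = []
        for c in range(k):
            members = [p for p, lab in zip(pixels, labels) if lab == c]
            if members:
                new_means.append(tuple(sum(band) / len(members) for band in zip(*members)))
            else:
                new_means.append(means[c])  # keep an empty cluster's previous mean
        if new_means == means:
            break  # assignments have stabilized
        means = new_means
    return means, labels
```

Changing `seed` changes the initial means, which is one way to observe the sensitivity to initial values mentioned above.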

Analysis of Distance Measures Using K

... assigned to the data point. If there is a tie between the two classes, a random class is chosen for the data point. As shown in figure 1(c), three nearest neighbors are present: one is negative and the other two are positive. In this case, majority voting is used to assign the class label to the data point. ...
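
The voting rule described above can be sketched as follows: take the k nearest training points, count their labels, and break ties at random. A minimal illustration, assuming Euclidean distance; the function name and `rng` parameter are hypothetical.

```python
import math
import random
from collections import Counter

def knn_classify(query, points, labels, k=3, rng=None):
    rng = rng or random.Random()
    # Indices of the k training points closest to the query.
    nearest = sorted(range(len(points)), key=lambda i: math.dist(query, points[i]))[:k]
    votes = Counter(labels[i] for i in nearest)
    top = max(votes.values())
    winners = [label for label, count in votes.items() if count == top]
    # Majority vote; if two or more classes tie, pick one of them at random.
    return rng.choice(winners)
```

With three neighbors labeled "+", "+", "-", as in the figure 1(c) case, the vote returns "+".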

Advances in Natural and Applied Sciences

... classifier) using the WEKA tool. Classification is a two-step process. First, it builds the classification model using training data; every object of the dataset must be pre-classified, i.e. its class label must be known. Second, the model generated in the preceding step is tested by assigning class labels t ...

Course Title: DATA MINING AND BUSINESS INTELLIGENCE Credit

... Module III Classification and Predictions What is Classification & Prediction, Issues regarding Classification and prediction, Decision tree, Bayesian Classification, Classification by Back propagation, Multilayer feed-forward Neural Network, Back propagation Algorithm, Classification methods K-near ...

A novel algorithm applied to filter spam e-mails using Machine

... As the technology of machine learning continues to develop and mature, learning algorithms need to be brought to the desktops of people who work with data and understand the application domain from which it arises. It is necessary to get the algorithms out of the laboratory and into the work environ ...

PPT

... Summary: SVMs for image classification
1. Pick an image representation (in our case, bag of features)
2. Pick a kernel function for that representation
3. Compute the matrix of kernel values between every pair of training examples
4. Feed the kernel matrix into your favorite SVM solver to obtain su ...
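
Step 3 can be sketched for bag-of-features histograms. The histogram intersection kernel used here is one common choice for such histograms, assumed for illustration; the slides do not say which kernel they use.

```python
def histogram_intersection(h1, h2):
    # K(h1, h2) = sum_i min(h1[i], h2[i]) over the visual-word bins.
    return sum(min(a, b) for a, b in zip(h1, h2))

def kernel_matrix(histograms, kernel=histogram_intersection):
    # The n x n matrix of kernel values between every pair of training examples.
    return [[kernel(a, b) for b in histograms] for a in histograms]
```

For step 4, a solver that accepts precomputed kernels, such as scikit-learn's `SVC(kernel="precomputed")`, can be fitted directly on this matrix.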

Slide 1 - Peking University

... Supervised learning infers a function that maps inputs to desired outputs with the guidance of training data. The state-of-the-art algorithm is SVM based on large margin and kernel trick. It was observed that SVM is liable to overfitting, especially on small sample data sets; sometimes SVM can offer ...

Introduction to Predictive Analytics

... Generally, models closer to the top left of the ROC plot are best, e.g. 100% true positive rate and 0% false positive rate ...
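
The points on such a plot can be computed by sweeping a decision threshold down the model's scores. A minimal sketch, assuming 0/1 labels and at least one example of each class; tied scores are treated as separate thresholds, a simplification.

```python
def roc_points(scores, labels):
    """Return (false positive rate, true positive rate) pairs, one per threshold step.

    labels: 1 for positive, 0 for negative.
    """
    pos = sum(labels)
    neg = len(labels) - pos
    tp = fp = 0
    points = [(0.0, 0.0)]
    for _, y in sorted(zip(scores, labels), reverse=True):
        if y == 1:
            tp += 1
        else:
            fp += 1
        points.append((fp / neg, tp / pos))
    return points
```

A model that ranks every positive above every negative passes through (0.0, 1.0), the top-left corner.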

K-nearest neighbors algorithm

In pattern recognition, the k-nearest neighbors algorithm (k-NN for short) is a non-parametric method used for classification and regression. In both cases, the input consists of the k closest training examples in the feature space. The output depends on whether k-NN is used for classification or regression:

In k-NN classification, the output is a class membership. An object is classified by a majority vote of its neighbors and assigned to the class most common among its k nearest neighbors (k is a positive integer, typically small). If k = 1, the object is simply assigned to the class of that single nearest neighbor.

In k-NN regression, the output is the property value for the object. This value is the average of the values of its k nearest neighbors.

k-NN is a type of instance-based learning, or lazy learning, where the function is only approximated locally and all computation is deferred until classification. The k-NN algorithm is among the simplest of all machine learning algorithms.

For both classification and regression, it can be useful to weight the contributions of the neighbors so that nearer neighbors contribute more to the average than more distant ones. For example, a common weighting scheme gives each neighbor a weight of 1/d, where d is the distance to the neighbor.

The neighbors are taken from a set of objects for which the class (for k-NN classification) or the object property value (for k-NN regression) is known. This can be thought of as the training set for the algorithm, though no explicit training step is required.

A shortcoming of the k-NN algorithm is that it is sensitive to the local structure of the data. The algorithm is unrelated to, and should not be confused with, k-means, another popular machine learning technique.
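
k-NN regression with and without the 1/d weighting can be sketched as follows. A minimal illustration, assuming Euclidean distance; returning the stored value when the query coincides with a training point (d = 0) is an assumed policy to avoid dividing by zero.

```python
import math

def knn_regress(query, points, values, k=3, weighted=True):
    nearest = sorted(range(len(points)), key=lambda i: math.dist(query, points[i]))[:k]
    if not weighted:
        # Plain k-NN regression: the average of the k neighbors' values.
        return sum(values[i] for i in nearest) / len(nearest)
    # 1/d weighting: nearer neighbors contribute more to the average.
    num = den = 0.0
    for i in nearest:
        d = math.dist(query, points[i])
        if d == 0:
            return values[i]  # query coincides with a training point (assumed policy)
        w = 1.0 / d
        num += w * values[i]
        den += w
    return num / den
```

There is no training step: all the work happens inside the call, which is what makes k-NN a lazy learner.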