CS 590M: Security Issues in Data Mining

... – Class of test is same as class of closest training item – Need to define distance ...
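
The nearest-neighbor rule in this excerpt can be illustrated with a minimal sketch. The excerpt only says that a distance must be defined; Euclidean distance is assumed here, and the names `euclidean`, `nearest_neighbor_class`, and the toy `training` data are hypothetical, not taken from the course material.

```python
import math

def euclidean(a, b):
    # Assumed distance measure; the excerpt leaves the choice open.
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def nearest_neighbor_class(test_item, training, distance=euclidean):
    # training is a list of (feature_vector, class_label) pairs.
    # The test item receives the class of the closest training item.
    _, label = min(training, key=lambda pair: distance(test_item, pair[0]))
    return label

# Toy training set, invented for illustration only.
training = [
    ((1.0, 1.0), "A"),
    ((1.5, 2.0), "A"),
    ((5.0, 5.0), "B"),
    ((6.0, 4.5), "B"),
]

predicted = nearest_neighbor_class((1.2, 1.4), training)
```

Passing a different `distance` function changes which training item counts as closest, which is why the choice of distance matters for this classifier.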

Course Title: DATA MINING AND BUSINESS INTELLIGENCE Credit

... Learn to apply various data mining techniques in different domains. Examine the types of data to be mined and present a general classification of tasks and primitives to integrate a data mining system. Discover interesting patterns from large amounts of data to analyze and ext ...

shattered - Data Science and Machine Intelligence Lab

... Goal of Learning Algorithms: The early learning algorithms were designed to find an accurate fit to the data. A classifier is said to be consistent if it correctly classifies the training data. The ability of a classifier to correctly classify data not in the training set ...

Missing Data Imputation Using Evolutionary k

... ● Many machine learning algorithms solve the missing data problem in an efficient way. ● An advantage of using a machine learning approach is that the missing data treatment is independent of the learning algorithm used. ...

Mass record updates Data scrubbing Data mining Importing data

... We receive many, many requests to create different kinds of reports for clients. Regardless of whether they are looking to report on association activities, get current data on members, or gain insights to make forecasts, we can help. We get asked to develop IQA, Crystal Reports, SSRS, SQL and AdHoc ...

Team 2 - K-NN

... Retrieve help desk information Legal reasoning Conceptual design of mechanical devices ...

BIOS 740: STATISTICAL LEARNING AND HIGH

... review of supervised learning methods (discriminant analysis, kernel methods, nearest neighborhood, tree methods, neural network, support vector machine, random forest, and boosting methods) and unsupervised learning methods (principal component analysis, factor analysis, cluster analysis, multidime ...

- UCL Discovery

... Text and Data Mining: The EU Commission set up a Working Group on TDM as part of Licences For Europe. TDM is the process of deriving information from machine-readable material. It works by copying large quantities of material, extracting the data, and recombining it to identify ...

MDAI 2016 - Modeling Decisions for Artificial Intelligence

... forum for researchers to discuss models for decision and information fusion (aggregation operators) and their applications to AI. In MDAI 2016, we encourage the submission of papers on decision making, information fusion, social networks, data mining, and related topics. Applications to data science ...

Decision Tree Classification

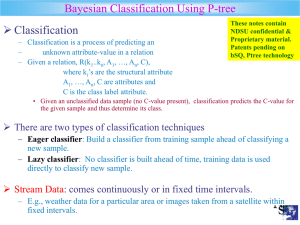

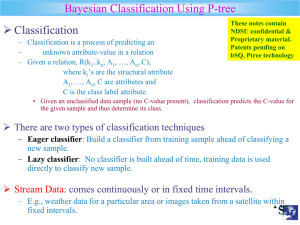

... Given a relation R(K, A1, ..., An, C), where K is the structure attribute and A1, ..., An and C are feature attributes; C is also the class label attribute. Each data sample is represented by a feature vector X = (x1, ..., xn) giving the measurements made on the sample for A1, ..., An, respectively. Given classes, ...

Philosophies and Advances in Scaling Mining Algorithms to Large

... database. For large blocks, this model captures common practice in many of today’s data warehouse installations, where updates from operational databases are batched together and performed in a block update. For small blocks of data, this model captures streaming data, where in the extreme the size ...

Effective Oracles for Fast Approximate Similarity Search

... The effectiveness of indices for search and retrieval has traditionally been evaluated using such measures as precision, recall, and the F-measure, averaged over large numbers of queries of the data set. When the query is based at an object of the data set (query-by-example), the measured performanc ...

Data-Science-Predictive

... predicts future values; (2) The k-means algorithm; (3) Regression method. Data analytics project from SocialProof Marketing and Advertising company. The result implies that a discount price may not increase customers’ switching. Maybe the company should offer similar types of benefits (discounts, coupo ...

Fisher linear discriminant analysis - public.asu.edu

... – Only a subset of the original features are used. ...

Nonlinear dimensionality reduction

High-dimensional data, meaning data that requires more than two or three dimensions to represent, can be difficult to interpret. One approach to simplification is to assume that the data of interest lie on an embedded non-linear manifold within the higher-dimensional space. If the manifold is of low enough dimension, the data can be visualised in the low-dimensional space.

Below is a summary of some of the important algorithms from the history of manifold learning and nonlinear dimensionality reduction (NLDR). Many of these non-linear dimensionality reduction methods are related to the linear methods listed below. Non-linear methods can be broadly classified into two groups: those that provide a mapping (either from the high-dimensional space to the low-dimensional embedding or vice versa), and those that just give a visualisation. In the context of machine learning, mapping methods may be viewed as a preliminary feature extraction step, after which pattern recognition algorithms are applied. Typically those that just give a visualisation are based on proximity data – that is, distance measurements.