CLOsed-loop Neural prostheses for vestibular disorderS

... This neural prosthesis will be able to restore vestibular information by stimulating the semicircular canals thanks to the information provided by inertial sensors embedded in a device attached to the head and donned by the ...

From autism to ADHD: computational simulations

... significantly overlapping concepts. What behavioral changes are expected? How to test for them? ...

www.translationalneuromodeling.org

... for the integration of these features into a percept (Eckhorn et al., 1988; Kreiter and Singer, 1996; Fries et al., 1997). Feature binding extends to the olfactory system (Freeman et al., 1978) and the auditory system (Aertsen et al., 1991). It is also thought that synchronization across several motor regions ( ...

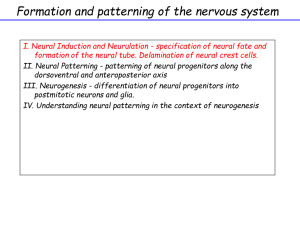

Neurulation I (Pevny)

... The median hinge point is induced by signals from the notochord. Experiments in mouse show that Shh signaling from the notochord inhibits DHP formation: if Shh is overexpressed (in transgenic mice or Ptc mutants), dorsal hinge points do not form, resulting in neural tube defects. The epidermal ectod ...

S013513518

... There is one neuron in the input layer for each predictor variable. In the case of categorical variables, N-1 neurons are used to represent the N categories of the variable. Input Layer — A vector of predictor variable values (x1...xp) is presented to the input layer. The input layer standardizes th ...
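
The input encoding described in this snippet can be sketched as follows. This is a minimal illustration, not the source's implementation: the function names are hypothetical, the first category is assumed to be the all-zeros reference level, and z-score scaling is assumed because the snippet is cut off before it says how values are standardized.

```python
from statistics import mean, pstdev


def dummy_encode(value, categories):
    """Encode one categorical value with N-1 indicator inputs.

    Assumption: the first category is the reference level (all zeros).
    """
    if value not in categories:
        raise ValueError(f"unknown category: {value!r}")
    return [1.0 if value == c else 0.0 for c in categories[1:]]


def standardize(column):
    """Z-score a numeric predictor (assumed form of standardization)."""
    mu = mean(column)
    sigma = pstdev(column)
    if sigma == 0:
        # A constant column carries no information; map it to zeros.
        return [0.0 for _ in column]
    return [(x - mu) / sigma for x in column]


colors = ["red", "green", "blue"]       # N = 3 categories
print(dummy_encode("green", colors))    # 2 inputs for 3 categories
print(standardize([1.0, 2.0, 3.0]))
```

With three categories the encoder produces two inputs, which matches the N-1 rule stated in the snippet.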

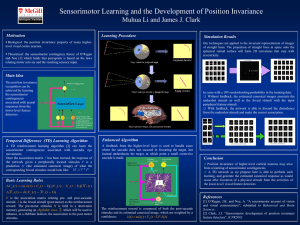

Document

... With feedback, the network is able to discard the disturbance from the undershot stimuli and make the correct association. ...

Evolving Fuzzy Neural Networks - Algorithms, Applications

... 'manually' created each having the following architecture: 78 inputs (3 time lags of 26 element mel vectors each), 234 condition nodes (three fuzzy membership functions per input), 10 rule nodes, two action nodes, and one output. This architecture is identical to that used for the speech recognition ...
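
The layer sizes quoted in this snippet follow from two products, which the short check below reproduces. The variable names are illustrative and do not come from the source.

```python
# Layer sizes quoted in the snippet; variable names are illustrative.
time_lags = 3
mel_coefficients = 26
membership_functions_per_input = 3

# One input per mel coefficient per time lag.
n_inputs = time_lags * mel_coefficients
# One condition node per fuzzy membership function per input.
n_condition_nodes = n_inputs * membership_functions_per_input

print(n_inputs, n_condition_nodes)  # prints: 78 234
```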

Deep Belief Networks Learn Context Dependent Behavior, Florian Raudies

... γ = 0.2 is the learning rate, and the parameter λ = 2×10⁻⁴ is a penalty term. For the update of these bias terms in Eq. (12) and (13) we set the penalty term λ = 0. We used M = 50 epochs of training. For the last Mavg = 5 epochs we averaged the weights and biases for the updates. If a teacher signal ...

Lab 4-5: Deep SOM-MLP Classifier

... Use SOM in your MLP Classifier. First, create a SOM network and train it to get groups of training samples represented by its nodes. Second, use all SOM outputs computed for each original input sample to stimulate the MLP network instead of using the original input data. You can also use both on the i ...
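
The first two steps of this lab description might look like the sketch below. It is only an illustration under stated assumptions: a one-dimensional SOM, a Gaussian neighbourhood, linearly decaying rate and radius, and "SOM outputs" taken to mean a Gaussian similarity between the input and each node. None of these choices, nor the names `train_som` and `som_outputs`, come from the source, and the MLP itself is left out.

```python
import math
import random


def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def train_som(data, n_nodes=4, epochs=20, lr=0.5, seed=0):
    """Train a 1-D SOM (assumed topology) and return its node weights."""
    rng = random.Random(seed)
    # Initialise each node as a copy of a randomly chosen training sample.
    nodes = [list(rng.choice(data)) for _ in range(n_nodes)]
    for epoch in range(epochs):
        decay = 1.0 - epoch / epochs
        rate = lr * decay
        radius = max(n_nodes / 2 * decay, 0.5)
        for x in data:
            # Best-matching unit: the node closest to the sample.
            bmu = min(range(n_nodes),
                      key=lambda k: squared_distance(nodes[k], x))
            for k in range(n_nodes):
                # Neighbours of the BMU on the 1-D grid move too, but less.
                h = math.exp(-((k - bmu) ** 2) / (2 * radius ** 2))
                nodes[k] = [w + rate * h * (xi - w)
                            for w, xi in zip(nodes[k], x)]
    return nodes


def som_outputs(x, nodes):
    """Assumed SOM output: one similarity value per node, in (0, 1]."""
    return [math.exp(-squared_distance(n, x)) for n in nodes]


data = [[0.0, 0.1], [0.1, 0.0], [0.9, 1.0], [1.0, 0.9]]
nodes = train_som(data, n_nodes=3)
# These feature vectors would replace the raw inputs fed to the MLP.
features = [som_outputs(x, nodes) for x in data]
print(len(features), len(features[0]))  # prints: 4 3
```

Each sample is mapped to one value per SOM node, so the MLP input size equals the number of nodes rather than the original input dimension.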

Brain(annotated)

... A more likely view is that the information is encoded in exact spike times (and also the strength of synaptic connections). Thus neurons are communicating by sending numbers (times) to each other, and interpreting that information via synaptic strengths. ...

Connexionism and Computationalism

... How would a symbolic system be programmed to deal with this situation? RGB input values of (255, 255, 255) would be interpreted as “white”: if (r == 255 && g == 255 && b == 255) color = white. But (254, 243, 255) would not be, because it would fail the if-statement. So you change the program to work wi ...
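
The brittleness of the exact-match rule in this snippet can be shown in a few lines. The first function mirrors the rule as quoted. The second is a hypothetical relaxation with an arbitrary tolerance of 15, added only for contrast; the snippet is cut off before it says how the program is actually changed.

```python
def is_white_exact(r, g, b):
    """The symbolic rule as quoted: all three channels must equal 255."""
    return r == 255 and g == 255 and b == 255


def is_white_tolerant(r, g, b, tol=15):
    """Hypothetical relaxation: each channel within `tol` of 255.

    The tolerance value is an arbitrary assumption for illustration.
    """
    return all(255 - channel <= tol for channel in (r, g, b))


print(is_white_exact(255, 255, 255))     # True
print(is_white_exact(254, 243, 255))     # False: near-white fails the rule
print(is_white_tolerant(254, 243, 255))  # True with the assumed tolerance
```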

PSY105 Neural Networks 2/5

... learn to associate a warning stimulus with an upcoming wall and hence turn around before it reaches the wall. Describe an experiment which you would do to test the details of how the robot learns. Say what you would do, and what aspect(s) of the robot's learning the results would inform you of, and ...

network - Ohio University

... Completion of images Project pat_complete.proj in Chapter_3. A network with one 5x7 layer, connected bidirectionally with itself. Symmetrical connections: the same weights Wij=Wji. Units belonging to image 8 are connected to themselves with a weight of 1, remaining units have a weight of 0. Activat ...
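
The pattern-completion setup in this snippet can be approximated with simple threshold units, as sketched below. Several points are assumptions rather than the project's actual configuration: the 5x7 bitmap of the digit 8 is made up, "connected to themselves" is read as units of the pattern being connected to each other with weight 1, and a binary threshold stands in for the activation function, which the snippet truncates.

```python
# Hypothetical 5x7 bitmap of the digit 8 (7 rows of 5 units).
EIGHT = [
    "01110",
    "10001",
    "10001",
    "01110",
    "10001",
    "10001",
    "01110",
]


def to_vector(rows):
    return [int(ch) for row in rows for ch in row]


def build_weights(pattern):
    """Symmetric weights: 1 between two pattern units, 0 everywhere else."""
    n = len(pattern)
    return [[1 if i != j and pattern[i] and pattern[j] else 0
             for j in range(n)] for i in range(n)]


def complete(cue, weights, threshold=1, max_steps=10):
    """Clamp the cue on and update units until the state stops changing."""
    state = list(cue)
    for _ in range(max_steps):
        new_state = []
        for i, row in enumerate(weights):
            net = sum(w * a for w, a in zip(row, state))
            new_state.append(1 if cue[i] or net >= threshold else 0)
        if new_state == state:
            break
        state = new_state
    return state


pattern = to_vector(EIGHT)
weights = build_weights(pattern)
# Partial cue: only the top three rows of the image are presented.
cue = to_vector(EIGHT[:3] + ["00000"] * 4)
print(complete(cue, weights) == pattern)  # prints: True
```

Units outside the pattern receive no input because all their weights are 0, so only the missing parts of the stored image are filled in.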

Hybrots - Computing Science and Mathematics

... enables much longer-term experiments to be conducted than before, allowing us to go past the 'developmental' phase (which lasts about 90 days for these cultures (Kamioka et al 1996)) and well into maturity (and perhaps, senility?). The recording technology is further along than stimulation technolog ...

A Synapse Plasticity Model for Conceptual Drift Problems, Ashwin Ram

... neuron level, electrical properties vary from dendrite to dendrite, creating latencies in action potential propagation. However, the processes governing potentiation of synapses should be constant for all synapses. In other words, a constant factor is used to potentiate synapses when necessary. The ...

ANPS 019 Beneyto-Santonja 10-24

... Autonomic centers for regulation of visceral function (cardiovascular, respiratory, and digestive system activities). Cerebellum: coordinates complex somatic motor patterns; adjusts output of other somatic motor centers in brain and spinal cord. How does the CNS get its adult shape? Embryonic ...

PowerPoint presentation

... • Geometrical arrangement of output units • Nearby outputs correspond to nearby input patterns ...
