Powerpoint

... • The process of adjusting the weights and threshold values in a neural net is called training • A neural net can presumably be trained to produce whatever results are required • But think about the complexity of this! Train a computer to recognize any cat in a picture, based on training run with sever ...

textbook slides

... • The process of adjusting the weights and threshold values in a neural net is called training • A neural net can presumably be trained to produce whatever results are required • But think about the complexity of this! Train a computer to recognize any cat in a picture, based on training run with sever ...

chapter_1

... The first VLSI realization of neural networks. Broomhead and Lowe (1988) First exploitation of radial basis function in designing neural ...

Towards comprehensive foundations of Computational Intelligence

... terms of old has been used to define the measure of syntactic and semantic information (Duch, Jankowski 1994); based on the size of the minimal graph representing a given data structure or knowledge-base specification, thus it goes beyond alignment. ...

Learning multiple layers of representation

... Learning feature detectors To enable the perceptual system to make the fine distinctions that are required to control behavior, sensory cortex needs an efficient way of adapting the synaptic weights of multiple layers of feature-detecting neurons. The backpropagation learning procedure [1] iterative ...

(MCF)_Forecast_of_the_Mean_Monthly_Prices

... aspects that hinder their specification. This relates to the fact that the optimal parameters are not unique for a specification of the model (inputs or lags, hidden neurons, etc.). The artificial neural network type Cascade Correlation CASCOR [6] presents interesting conceptual advantages in relati ...

2806nn7

... Flip a fair coin (~ simulates a random guess). If the result is heads, pass a new pattern through the first expert and discard correctly classified patterns until a pattern is misclassified. That misclassified pattern is added to the training set for the second expert. If the result is tails, pass ...

nips2.frame - /marty/papers/drotdil

... For all training sets, the receptive fields of all units contained regions of all-max weights and all-min weights within the direction-speed subspace at each x-y point. For comparison, if the model is trained on uncorrelated direction noise (a different random local direction at each x-y point), thi ...

CLASSIFICATION AND CLUSTERING MEDICAL DATASETS BY

... Artificial Neural Networks (ANN) is an information-processing paradigm inspired by the way the human brain processes information. Artificial neural networks are collections of mathematical models that represent some of the observed properties of biological nervous systems and draw on the analogies o ...

Site-specific correlation of GPS height residuals with soil moisture variability

... contrast to standard statistical test procedures, they can correlate, both spatially and temporally, one or multiple input variables (e.g. soil moisture+other variables) with a single output signal (e.g. long-term GPS time series) through the interconnected neurons with trainable weights and bias si ...

Artificial Intelligence for Speech Recognition Based on Neural

... Model of speech recognition was based on artificial neural networks. This was investigated to develop a learning neural network using genetic algorithm. This approach was implemented in the system identification numbers, coming to the realization of the system of recognition of voice commands. A sys ...

Artificial Spiking Neural Networks

... • Real cortical neurons communicate with spikes or action potentials ...

Hybrid Intelligent Systems

... place of the knowledge base. The input data does not have to precisely match the data that was used in network training. This ability is called approximate reasoning. Rule Extraction § Neurons in the network are connected by links, each of which has a numerical weight attached to it. § The weights i ...

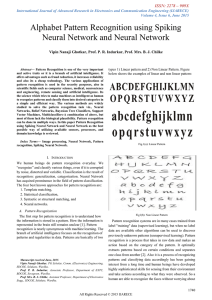

Alphabet Pattern Recognition using Spiking Neural

... Abstract— Pattern Recognition is one of the very important and active traits or it is a branch of artificial intelligence. It offers advantages such as fraud reduction, it increases reliability and it is also a cheap technology. The various applications of pattern recognition are used in the security p ...

Assignment 3

... %Implements a version of Foldiak's 1989 network, running on simulated LGN %inputs from natural images. Incorporates feedforward Hebbian learning and %recurrent inhibitory anti-Hebbian learning. %lgnims = cell array of images representing normalized LGN output %nv1cells = number of V1 cells to simula ...

AND X 2

... Training Value, T : When we are training a network we not only present it with the input but also with a value that we require the network to produce. For example, if we present the network with [1,1] for the AND function the training value will be 1 G51IAI – Introduction to AI ...

Statistical Inference, Multiple Comparisons, Random Field Theory

... According to Lemma 1, given a bound K, we should not separate the variables set into too many small subsets. Or it is more possible that we can combine some of the subsets into a new subset whose cardinality is no greater than K, thus the new SNB will be coarser than the old one. From this viewpoint ...

Modeling Estuarine Salinity Using Artificial Neural Networks

... 3.0 Results and Discussion 3.1 Model structure Figure 3 shows the trial-and-error process in finding the number of hidden nodes, learning rate, and momentum. This is an important step in training the network because the lower the error, the more able the ANNs predict salinity. The optimum number of ...

The Third Generation of Neural Networks

... network for all problems. For several years, this was the suggested advice. However, just because a single layer network can, in theory, learn anything, the universal approximation theorem does not say anything about how easy it will be to learn. Additional hidden layers make problems easier to lea ...

Paul Rauwolf - WordPress.com

... no work has been conducted which systematically compares such algorithms via an indepth study. This work initiated such research by contrasting the advantages and disadvantages of two unique intrinsically motivated heuristics: (1) which sought novel experiences and (2) which attempted to accurately ...

Observational Learning Based on Models of - FORTH-ICS

... of two phases, execution and observational learning. During the execution phase the demonstrator exhibits two behaviors to the observer, each associated with a different object. During the observational learning phase a novel object is shown to the observer and the demonstrator exhibits one of the b ...

Catastrophic interference

Catastrophic interference, also known as catastrophic forgetting, is the tendency of an artificial neural network to completely and abruptly forget previously learned information upon learning new information. Neural networks are an important part of the network approach and connectionist approach to cognitive science. These networks use computer simulations to model human behaviours such as memory and learning, so catastrophic interference is an important issue to consider when creating connectionist models of memory. It was originally brought to the attention of the scientific community by research from McCloskey and Cohen (1989) and Ratcliff (1990).

Catastrophic interference is a radical manifestation of the ‘sensitivity-stability’ dilemma, also called the ‘stability-plasticity’ dilemma: the problem of building an artificial neural network that is sensitive to, but not disrupted by, new information. Lookup tables and connectionist networks lie at opposite ends of the stability-plasticity spectrum. A lookup table remains completely stable in the presence of new information but cannot generalize, i.e. infer general principles, from new inputs. Connectionist networks such as the standard backpropagation network, by contrast, are very sensitive to new information and can generalize to new inputs.

Backpropagation models can be considered good models of human memory insofar as they mirror the human ability to generalize, but these networks often exhibit less stability than human memory. Notably, they are susceptible to catastrophic interference. This is a problem for modelling human memory because, unlike these networks, humans typically do not show catastrophic forgetting. The issue must therefore be resolved in backpropagation models if they are to be plausible models of human memory.
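The effect can be reproduced on a very small scale. The following is a minimal sketch in Python with NumPy, not the experimental setup of McCloskey and Cohen or Ratcliff: the class `TinyMLP`, the pattern sets `XA`/`XB`, the network size, the learning rate and the number of epochs are all assumptions chosen for illustration. A single-hidden-layer backpropagation network first learns pattern set A, and is then trained on pattern set B alone, with no rehearsal of A. The error on A is measured before and after the second phase.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

class TinyMLP:
    """One hidden layer of sigmoid units, trained by full-batch backpropagation."""

    def __init__(self, n_in, n_hidden, n_out, seed=0):
        rng = np.random.default_rng(seed)
        self.W1 = rng.normal(0.0, 0.5, (n_in, n_hidden))
        self.b1 = np.zeros(n_hidden)
        self.W2 = rng.normal(0.0, 0.5, (n_hidden, n_out))
        self.b2 = np.zeros(n_out)

    def forward(self, X):
        H = sigmoid(X @ self.W1 + self.b1)      # hidden activations
        Y = sigmoid(H @ self.W2 + self.b2)      # network outputs
        return H, Y

    def loss(self, X, T):
        _, Y = self.forward(X)
        return float(np.mean((Y - T) ** 2))     # mean squared error

    def train(self, X, T, epochs=5000, lr=0.5):
        for _ in range(epochs):
            H, Y = self.forward(X)
            dY = (Y - T) * Y * (1.0 - Y)            # error signal at the output
            dH = (dY @ self.W2.T) * H * (1.0 - H)   # propagated back to hidden
            self.W2 -= lr * (H.T @ dY)
            self.b2 -= lr * dY.sum(axis=0)
            self.W1 -= lr * (X.T @ dH)
            self.b1 -= lr * dH.sum(axis=0)

# Hypothetical pattern sets. In set A the target equals the first input bit;
# in set B the target is the opposite of the first input bit, so the two
# sets place conflicting demands on the shared weights.
XA = np.array([[1, 0, 1, 0, 1, 0],
               [0, 1, 1, 0, 0, 1],
               [1, 1, 0, 1, 0, 0],
               [0, 0, 0, 1, 1, 1]], dtype=float)
TA = np.array([[1], [0], [1], [0]], dtype=float)

XB = np.array([[1, 0, 0, 1, 0, 1],
               [0, 1, 0, 1, 1, 0],
               [1, 1, 1, 0, 0, 0],
               [0, 0, 1, 0, 1, 1]], dtype=float)
TB = np.array([[0], [1], [0], [1]], dtype=float)

def run_demo():
    net = TinyMLP(n_in=6, n_hidden=8, n_out=1)
    net.train(XA, TA)                  # phase 1: learn set A
    a_before = net.loss(XA, TA)
    net.train(XB, TB)                  # phase 2: learn set B only, no rehearsal of A
    a_after = net.loss(XA, TA)
    b_after = net.loss(XB, TB)
    return a_before, a_after, b_after

if __name__ == "__main__":
    a_before, a_after, b_after = run_demo()
    print(f"error on A after learning A: {a_before:.5f}")
    print(f"error on A after learning B: {a_after:.5f}")
    print(f"error on B after learning B: {b_after:.5f}")
```

Because both pattern sets are stored in the same weights, the weight changes that encode B overwrite the ones that encoded A. A lookup table holding the same eight patterns would retain all of A, but would have nothing to say about an unseen input.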