No Slide Title - Computer Science Home

... any nonzero inhibitory input will prevent the neuron from firing. It takes one time step for a signal to pass over one ...
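
The two rules in this fragment can be sketched as a McCulloch-Pitts-style threshold unit: absolute inhibition, and a one-step delay per connection. This is a minimal illustration that assumes binary inputs and an integer firing threshold. `mp_neuron`, `run_chain` and the two-unit chain are hypothetical names and topology, not taken from the slides.

```python
from typing import List, Sequence


def mp_neuron(excitatory: Sequence[int], inhibitory: Sequence[int], threshold: int) -> int:
    """Binary threshold unit with absolute inhibition (illustrative sketch)."""
    # Any active inhibitory input vetoes firing, regardless of excitation.
    if any(inhibitory):
        return 0
    # Otherwise fire when enough excitatory inputs are active.
    return 1 if sum(excitatory) >= threshold else 0


def run_chain(inputs: Sequence[int]) -> List[int]:
    """Input -> unit A -> unit B, each connection costing one time step.

    Entry k of the result is B's state at time k + 1, so a pulse injected
    at t = 0 reaches B at t = 2 (entry 1 of the list).
    """
    a, b = 0, 0  # both units start silent
    trace = []
    for x in inputs:
        # Synchronous update: the right-hand side reads the previous states,
        # so each unit sees its inputs one step late.
        a, b = mp_neuron([x], [], 1), mp_neuron([a], [], 1)
        trace.append(b)
    return trace


print(mp_neuron([1, 1], [0], 2))  # AND of two inputs, inhibitor silent -> 1
print(mp_neuron([1, 1], [1], 2))  # same excitation, one inhibitor active -> 0
print(run_chain([1, 0, 0, 0]))    # [0, 1, 0, 0]
```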

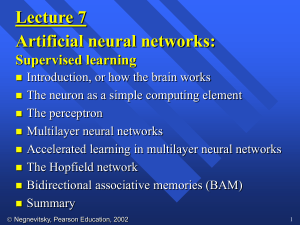

artificial neural networks

... Increase iteration p by one, go back to Step 2 and repeat the process until the selected error criterion is satisfied. As an example, we may consider the three-layer back-propagation network. Suppose that the network is required to perform the logical operation Exclusive-OR. Recall that a single-layer p ...
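
The training loop described in this excerpt can be sketched for the Exclusive-OR example as a 2-2-1 sigmoid network trained one pattern at a time. This is a minimal sketch, not the source's exact procedure. The starting weights and thresholds, the learning rate of 0.5, and the fixed 20000-epoch budget (used in place of an error criterion) are all assumptions. Each unit computes `sigmoid(sum(w * input) - theta)`, and each threshold is trained as a weight on a constant input of -1.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


# Exclusive-OR training set: ((x1, x2), desired output)
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def train_xor(lr: float = 0.5, epochs: int = 20000):
    # Assumed starting weights for hidden units 3, 4 and output unit 5.
    w = {"13": 0.5, "14": 0.9, "23": 0.4, "24": 1.0, "35": -1.2, "45": 1.1}
    th = {"3": 0.8, "4": -0.1, "5": 0.3}

    def forward(x1, x2):
        h3 = sigmoid(x1 * w["13"] + x2 * w["23"] - th["3"])
        h4 = sigmoid(x1 * w["14"] + x2 * w["24"] - th["4"])
        y5 = sigmoid(h3 * w["35"] + h4 * w["45"] - th["5"])
        return h3, h4, y5

    for _ in range(epochs):
        for (x1, x2), target in XOR_DATA:  # one iteration p per pattern
            h3, h4, y5 = forward(x1, x2)
            # Output error gradient: y * (1 - y) * error
            d5 = y5 * (1 - y5) * (target - y5)
            # Hidden error gradients, using the output weights before this update
            d3 = h3 * (1 - h3) * d5 * w["35"]
            d4 = h4 * (1 - h4) * d5 * w["45"]
            # Weight corrections (each threshold sees a constant input of -1)
            w["35"] += lr * h3 * d5
            w["45"] += lr * h4 * d5
            th["5"] -= lr * d5
            w["13"] += lr * x1 * d3
            w["23"] += lr * x2 * d3
            th["3"] -= lr * d3
            w["14"] += lr * x1 * d4
            w["24"] += lr * x2 * d4
            th["4"] -= lr * d4
    return forward


net = train_xor()
outputs = [net(x1, x2)[2] for (x1, x2), _ in XOR_DATA]
sse = sum((t - y) ** 2 for (_, t), y in zip(XOR_DATA, outputs))
print([round(y, 3) for y in outputs], round(sse, 5))
```

Rounding the four outputs should give 0, 1, 1, 0 once training has converged. A 2-2-1 network started elsewhere can settle in a local minimum, so the starting point here is only one workable choice.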

poster - Xiannian Fan

... For an 8-variable problem, partition all the variables by Simple Grouping (SG) into two groups: G1={X1, X2, X3, X4}, G2={X5, X6, X7, X8}. We created the pattern databases with a backward breadth-first search in the order graph for each group. ...

Title of Paper (14 pt Bold, Times, Title case)

... An evolutionary neural network (ENN) combines a neural network with an evolutionary algorithm. A common limitation of neural networks is associated with network training: backpropagation learning algorithms have serious drawbacks, since they cannot guarantee that the optimal solution is found. ...
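
The sketch below makes the contrast with backpropagation concrete. It evolves the nine weights and thresholds of a small 2-2-1 network with a (μ + λ) evolution strategy, which is one simple member of the evolutionary-algorithm family. The source does not say which evolutionary algorithm an ENN uses, so that choice is an assumption, as are the XOR task, the genome layout and all parameter values. No gradient is computed. The search is still stochastic, however, and like backpropagation it carries no guarantee of reaching the global optimum.

```python
import math
import random

# Exclusive-OR as a small stand-in task: ((x1, x2), desired output)
XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def sigmoid(z: float) -> float:
    z = max(-60.0, min(60.0, z))  # clamp to keep math.exp in range
    return 1.0 / (1.0 + math.exp(-z))


def forward(g, x1, x2):
    # Genome layout (assumed): [w11, w21, t1, w12, w22, t2, v1, v2, t_out]
    h1 = sigmoid(g[0] * x1 + g[1] * x2 - g[2])
    h2 = sigmoid(g[3] * x1 + g[4] * x2 - g[5])
    return sigmoid(g[6] * h1 + g[7] * h2 - g[8])


def sse(g):
    return sum((t - forward(g, x1, x2)) ** 2 for (x1, x2), t in XOR_DATA)


def evolve(mu=10, lam=30, generations=300, sigma=0.5, seed=1):
    rng = random.Random(seed)
    parents = [[rng.uniform(-1.0, 1.0) for _ in range(9)] for _ in range(mu)]
    history = [min(sse(p) for p in parents)]
    for _ in range(generations):
        # Each child is a Gaussian-mutated copy of a randomly chosen parent.
        children = [[gene + rng.gauss(0.0, sigma) for gene in rng.choice(parents)]
                    for _ in range(lam)]
        # (mu + lambda) selection: parents compete with their children,
        # so the best error never gets worse from one generation to the next.
        parents = sorted(parents + children, key=sse)[:mu]
        history.append(sse(parents[0]))
    return parents[0], history


best, history = evolve()
print(round(history[0], 4), "->", round(history[-1], 4))
```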

BC34333339

... the abruptness of the function as it changes between its two asymptotic values. Sigmoidal functions are differentiable, which is an important feature in neural network theory. ...
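
In the common logistic form, that abruptness is set by a slope parameter, written `a` here (an assumed name). The sketch shows the function together with its closed-form derivative, which is what gradient-based training relies on, and checks the derivative against a finite-difference estimate.

```python
import math


def logistic(x: float, a: float = 1.0) -> float:
    """Logistic sigmoid 1 / (1 + exp(-a*x)); a larger slope a gives a more
    abrupt transition between the asymptotes 0 and 1."""
    z = a * x
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # Equivalent form for negative z that avoids overflow in exp(-z).
    e = math.exp(z)
    return e / (1.0 + e)


def logistic_derivative(x: float, a: float = 1.0) -> float:
    """Closed-form derivative: a * f(x) * (1 - f(x))."""
    f = logistic(x, a)
    return a * f * (1.0 - f)


# Compare the closed form with a central finite difference.
h = 1e-6
numeric = (logistic(0.3 + h, 3.0) - logistic(0.3 - h, 3.0)) / (2 * h)
print(numeric, logistic_derivative(0.3, 3.0))
```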

November 2000 Volume 3 Number Supp p 1168

... that the response, unlike a speedometer, should not increase continuously with increasing velocity; instead, going beyond an optimum velocity should decrease the response. The model also predicted that the optimum velocity should vary with the pattern's spatial wavelength so that their ratio remains ...

The Use of Artificial Neural Networks (ANN) in Forecasting

... An ANN is a part of machine learning in which a user-designed network can be trained to learn a process such as forecasting, classification, or other rule-based programming. Broadly, it imitates the way the human brain processes information and computes. Like our brains, an ANN uses artificial nerves and links th ...

Visionael tracks assets for network vulnerability

... also high on the uptake,” said Lui. “And to see how it’s configured. Virtual private LAN services are being implemented by enterprises with multiple branch offices or regional headquarters.” The information taken from this network audit is fed into the core product, NRM, which houses a database of d ...

Introduction to Hybrid Systems – Part 1

... In a rule-based expert system, the inference engine compares the condition part of each rule with data given in the database. When the IF part of a rule matches the data in the database, the rule is fired and its THEN part is executed. Precise matching is required (the inference engine cannot cope ...
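
The match-fire cycle described in this excerpt can be sketched as a tiny forward-chaining loop with exact matching. The rule format (a dictionary of IF conditions plus one THEN assignment), the function name and the traffic-light rules are all hypothetical. The sketch assumes the rules never conflict, because two rules that keep overwriting the same attribute would loop forever.

```python
from typing import Dict, List, Tuple

# A rule is (IF conditions, THEN assignment); this format is hypothetical.
Rule = Tuple[Dict[str, str], Tuple[str, str]]


def forward_chain(rules: List[Rule], facts: Dict[str, str]) -> Dict[str, str]:
    """Fire rules whose IF part exactly matches the database until nothing changes."""
    db = dict(facts)  # work on a copy of the caller's database
    changed = True
    while changed:
        changed = False
        for conditions, (attr, value) in rules:
            # Exact matching: every condition must equal a stored fact.
            matches = all(db.get(k) == v for k, v in conditions.items())
            if matches and db.get(attr) != value:
                db[attr] = value  # execute the THEN part
                changed = True
    return db


RULES: List[Rule] = [
    ({"light": "red"}, ("action", "stop")),
    ({"light": "green"}, ("action", "go")),
    ({"action": "stop"}, ("brake", "on")),
]

print(forward_chain(RULES, {"light": "red"}))
# {'light': 'red', 'action': 'stop', 'brake': 'on'}
print(forward_chain(RULES, {"light": "reddish"}))
# {'light': 'reddish'}: no IF part matches exactly, so nothing fires
```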

Pathfinding in Computer Games 1 Introduction

... The two most commonly employed algorithms for directed pathfinding in games use one or more of these strategies. These directed algorithms are Dijkstra’s algorithm and A* [RusselNorvig95]. Dijkstra’s algorithm uses the uniform cost strategy to find the optimal path while the A* algorithm ...
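
Both algorithms can be sketched as a single best-first search that orders the frontier by g + h. With h = 0 it is Dijkstra's uniform-cost search, and with an admissible heuristic it is A*. This sketch assumes a 4-connected grid with unit step costs and uses the Manhattan distance as the heuristic. The grid, the function names and the expansion counter are illustrative.

```python
import heapq
from typing import Callable, List, Optional, Tuple

Cell = Tuple[int, int]


def manhattan(a: Cell, b: Cell) -> int:
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def zero(a: Cell, b: Cell) -> int:
    return 0  # turns the search into Dijkstra's uniform-cost search


def grid_search(grid: List[str], start: Cell, goal: Cell,
                h: Callable[[Cell, Cell], int]) -> Optional[Tuple[int, int]]:
    """Return (path cost, cells expanded), or None if the goal is unreachable.
    '#' marks a wall; every move to an adjacent cell costs 1."""
    rows, cols = len(grid), len(grid[0])
    best_g = {start: 0}
    frontier = [(h(start, goal), 0, start)]  # entries are (g + h, g, cell)
    closed = set()
    while frontier:
        _, g, cell = heapq.heappop(frontier)
        if cell == goal:
            return g, len(closed)
        if cell in closed:
            continue  # stale queue entry
        closed.add(cell)
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                if g + 1 < best_g.get(nxt, float("inf")):
                    best_g[nxt] = g + 1
                    heapq.heappush(frontier, (g + 1 + h(nxt, goal), g + 1, nxt))
    return None


GRID = ["....",
        ".##.",
        "...."]
print(grid_search(GRID, (0, 0), (2, 3), manhattan))  # A*
print(grid_search(GRID, (0, 0), (2, 3), zero))       # Dijkstra
```

Both calls find the same path cost. A* typically expands fewer cells because the heuristic steers the search toward the goal.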

A Partitioned Fuzzy ARTMAP Implementation for Fast Processing of

... training list to its corresponding output pattern. To achieve the aforementioned goal, we present the training list to the Fuzzy ARTMAP architecture repeatedly. That is, we present $I^1$ to $F_1^a$, $O^1$ to $F_2^b$, $I^2$ to $F_1^a$, $O^2$ to $F_2^b$, and finally $I^{PT}$ to $F_1^a$ and $O^{PT}$ to $F_2^b$. We present the training list to ...

Corps & Cognition team meeting, 2014/12/02 A (new) non

... moments of vertical equilibrium (moments during which he does not receive any information). How can neurons learn something in the absence of events? ...

Survey of Eager Learner and Lazy Learner Classification Techniques

... Before training can begin, the user must decide on the network topology by specifying the number of units in the input layer, the number of hidden layers (if more than ... their knowledge representation. Acquired knowledge in the form of a network of units connected by weighted links is difficult for hu ...

Study on Future of Artificial Intelligence in Neural Network

... approach for computation purposes. In most cases, an ANN is an adaptive system that changes its structure based on external or internal information that flows through the network. However, the paradigm of neural networks is implicit, not explicit: they use the modern technique of “learning with ...

Effects of Chaos on Training Recurrent Neural Networks

... training to periodic data saves time by not exploring solutions which are far from optimal. These highly chaotic nets are unlikely to generate the time series needed or be stable in the long run. What is somewhat surprising, however, is that excluding all networks with positive LEs (even when traini ...
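
LEs here are Lyapunov exponents. The excerpt does not say how they were computed. One common approach is sketched below under stated assumptions: it estimates the largest exponent of an autonomous discrete-time tanh network, x_{t+1} = tanh(W x_t), by following a tangent vector through the Jacobian and averaging its log growth rate. A positive estimate indicates chaotic dynamics, in which nearby trajectories diverge, and that is the kind of network the excerpt describes excluding. The model form, the step counts and the function name are assumptions.

```python
import numpy as np


def largest_lyapunov(W: np.ndarray, steps: int = 5000, transient: int = 500,
                     seed: int = 0) -> float:
    """Estimate the largest Lyapunov exponent of x_{t+1} = tanh(W x_t)."""
    rng = np.random.default_rng(seed)
    n = W.shape[0]
    x = rng.uniform(-1.0, 1.0, n)
    v = rng.normal(size=n)
    v /= np.linalg.norm(v)  # unit tangent vector
    total = 0.0
    for t in range(transient + steps):
        x = np.tanh(W @ x)
        # Jacobian of tanh(W x) at the previous state: diag(1 - tanh(W x)^2) @ W
        J = (1.0 - x ** 2)[:, None] * W
        v = J @ v
        growth = np.linalg.norm(v)
        v /= growth  # renormalise so v neither overflows nor underflows
        if t >= transient:
            total += np.log(growth)
    return total / steps


print(largest_lyapunov(0.5 * np.eye(3)))     # contracting map: about log(0.5) = -0.693
print(largest_lyapunov(np.array([[2.0]])))   # stable nonzero fixed point: negative
```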

An Introduction to Deep Learning

... architectures, i.e., architectures composed of multiple layers of nonlinear processing, can be considered a relevant choice. Indeed, some highly nonlinear functions can be represented much more compactly, in terms of number of parameters, with deep architectures than with shallow ones (e.g., SVMs). Fo ...

development of an artificial neural network for monitoring

... units, which has a natural propensity for storing experiential knowledge and making it available for use. Knowledge is acquired by the network from its environment through a learning process, which is responsible for adapting the synaptic weights to the stimuli received from the environment ...

Neural Network

... neural network architectures and learning rules. Emphasis is placed on the mathematical analysis of these networks, on methods of training them and on their application to practical engineering problems in such areas as pattern recognition, signal processing and control systems. Ming-Feng Yeh ...

Artificial Neural Networks (JARINGAN SYARAF TIRUAN)

... building efficient systems for real world applications. This may make machines more powerful, relieve humans of tedious tasks, and may even improve upon human performance. ...

Catastrophic interference

Catastrophic interference, also known as catastrophic forgetting, is the tendency of an artificial neural network to completely and abruptly forget previously learned information upon learning new information. Neural networks are an important part of the network approach and connectionist approach to cognitive science. These networks use computer simulations to try to model human behaviours, such as memory and learning. Catastrophic interference is an important issue to consider when creating connectionist models of memory. It was originally brought to the attention of the scientific community by research from McCloskey and Cohen (1989) and Ratcliff (1990).

It is a radical manifestation of the ‘sensitivity-stability’ dilemma, also called the ‘stability-plasticity’ dilemma. Specifically, these dilemmas concern the challenge of building an artificial neural network that is sensitive to, but not disrupted by, new information. Lookup tables and connectionist networks lie on opposite sides of the stability-plasticity spectrum. The former remain completely stable in the presence of new information but lack the ability to generalize, i.e., to infer general principles, from new inputs. On the other hand, connectionist networks like the standard backpropagation network are very sensitive to new information and can generalize to new inputs. Backpropagation models can be considered good models of human memory insofar as they mirror the human ability to generalize, but these networks often exhibit less stability than human memory. Notably, these backpropagation networks are susceptible to catastrophic interference. This is considered an issue when attempting to model human memory because, unlike these networks, humans typically do not show catastrophic forgetting. Thus, catastrophic interference must be eliminated from these backpropagation models in order to enhance their plausibility as models of human memory.
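
The interference effect can be reproduced in miniature. The sketch below is a toy built on assumptions, not a model from the studies cited. A single linear unit trained with the delta (LMS) rule stands in for a backpropagation network, and the two one-pattern "tasks" are hypothetical. Because the tasks share an input feature, training on task B alone moves the shared weight and undoes task A. A lookup table, by contrast, stores both tasks without interference but has nothing to say about an input it has never seen.

```python
from typing import Dict, List, Sequence, Tuple

Pattern = Tuple[Tuple[float, ...], float]


def predict(w: Sequence[float], x: Sequence[float]) -> float:
    return sum(wi * xi for wi, xi in zip(w, x))


def train(w: Sequence[float], patterns: List[Pattern], lr: float = 0.1,
          epochs: int = 500) -> List[float]:
    """Delta-rule (LMS) training of a single linear unit; returns new weights."""
    w = list(w)
    for _ in range(epochs):
        for x, t in patterns:
            err = t - predict(w, x)
            for i, xi in enumerate(x):
                w[i] += lr * err * xi
    return w


def sq_error(w: Sequence[float], patterns: List[Pattern]) -> float:
    return sum((t - predict(w, x)) ** 2 for x, t in patterns)


# Two hypothetical "tasks" whose inputs share the first feature.
TASK_A: List[Pattern] = [((1.0, 1.0, 0.0), 1.0)]
TASK_B: List[Pattern] = [((1.0, 0.0, 1.0), -1.0)]

w_a = train([0.0, 0.0, 0.0], TASK_A)  # learn A
w_ab = train(w_a, TASK_B)             # then learn B only, with no rehearsal of A
print(sq_error(w_a, TASK_A))          # ~0: A has been learned
print(sq_error(w_ab, TASK_A))         # ~0.5625: learning B overwrote A

# A lookup table sits at the other end of the spectrum: stable, but no generalization.
table: Dict[Tuple[float, ...], float] = dict(TASK_A)
table.update(TASK_B)
print(table[(1.0, 1.0, 0.0)])         # 1.0: A's entry is untouched
print(table.get((1.0, 1.0, 1.0)))     # None: nothing stored for an unseen input
```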