How to Grow a Mind: Statistics, Structure, and Abstraction

... h3, “all animals”; or any other rule consistent with the data? Likelihoods favor the more specific patterns, h1 and h2; it would be a highly suspicious coincidence to draw three random examples that all fall within the smaller sets h1 or h2 if they were actually drawn from the much larger h3 (18). T ...
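
A minimal sketch of the likelihood argument in this excerpt, assuming each example is drawn uniformly and independently (with replacement) from the true hypothesis. The set sizes are hypothetical placeholders, not values from the source; only the ordering (h1 and h2 smaller than h3) comes from the text.

```python
from fractions import Fraction


def size_principle_likelihood(hypothesis_size: int, n_examples: int) -> Fraction:
    """Probability of n specific consistent examples under uniform sampling
    (with replacement) from a hypothesis containing `hypothesis_size` items."""
    return Fraction(1, hypothesis_size) ** n_examples


# Hypothetical set sizes, chosen only for illustration.
sizes = {"h1": 10, "h2": 50, "h3": 1000}

# Likelihood of three examples that are consistent with all three hypotheses.
likelihoods = {
    name: size_principle_likelihood(size, 3) for name, size in sizes.items()
}

# How strongly the data favor the smallest hypothesis over the largest.
ratio_h1_to_h3 = likelihoods["h1"] / likelihoods["h3"]
```

Under these assumed sizes, the ratio grows as the cube of the size ratio, which is why three examples falling inside a small set would be a suspicious coincidence if the larger set were the true one.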

Universal Learning

... correlations, but it is not capable of learning task execution. Hidden layers allow for the transformation of a problem and error correction permits learning of difficult task execution, the relationships of inputs and outputs. The combination of Hebbian learning – correlations (x y) – and errorbase ...

INTRODUCTION

... Unsupervised learning is the great promise of the future. Currently, this learning method is limited to networks known as self-organizing maps. These kinds of networks are not in widespread use. They are basically an academic novelty. Yet, they have shown they can provide a solution in a few instanc ...

Honors Thesis

... neurons using a random number generator. Crucially, the seed used is always the same, so that when we compare the results of the simulation we know that changes in the output display are a result of changes in parameters, not changes in random number sequences that permeate through the simulation. T ...
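
A minimal sketch of the fixed-seed idea in this excerpt, assuming a toy simulation in which each neuron gets one random value scaled by a parameter. The function name `simulate`, the `gain` parameter, and the seed value are hypothetical and do not come from the thesis.

```python
import random
from typing import List


def simulate(gain: float, seed: int = 42, n_neurons: int = 5) -> List[float]:
    """Toy simulation: one random draw per neuron, scaled by `gain`.

    A private generator seeded with a fixed value means every run sees the
    same random sequence, so output changes reflect parameter changes only.
    """
    rng = random.Random(seed)  # fixed seed, independent of global state
    return [gain * rng.random() for _ in range(n_neurons)]


baseline = simulate(gain=1.0)
repeat = simulate(gain=1.0)   # same parameters, same seed
scaled = simulate(gain=2.0)   # only the parameter changed
```

Because both runs share a seed, `baseline` and `repeat` are identical, and any difference between `baseline` and `scaled` can be attributed to `gain` alone.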

AUTONOMIC REFLEX - Semmelweis University

... cardiac muscle and certain glands and adrenal medulla ...

Introduction to Artificial Intelligence – Course 67842

... Long-held dreams are coming true: Language Translation, Speech Recognition ...

On Line Isolated Characters Recognition Using Dynamic Bayesian

... Since the sixties, researchers have sought to teach computers “to learn how to read”. Recognition is difficult for isolated handwritten characters because their forms are more varied than those of printed characters. On-line recognition makes it possible to interpret writing represented by the p ...

Applied Machine Learning for Engineering and Design

... must be referenced when included in a student’s work. The course instructor may use plagiarism checking software and/or request evidence of references for any submitted work. A useful website on avoiding plagiarism is found at the Purdue Online Writing Lab (http://owl.english.purdue.edu/owl/resource ...

Artificial Intelligence in the Open World

... rather than waiting for a final answer to an inferential problem? Methods that could actually generate increasingly valuable, or increasingly complete results over time were developed. I had called these procedures flexible computations, later to also become known as anytime algorithms. The basic id ...

13.2 part 2

... The gap between two neurons, or between a neuron and an effector, is known as a synapse. The neuron carrying the impulse into the synapse is called the presynaptic neuron. The neuron carrying the impulse away from the synapse is called the postsynaptic neuron. The neurotransmitters that carry the impulse across the synapse are cont ...

LETTER RECOGNITION USING BACKPROPAGATION ALGORITHM

... As shown in Figure 2.3, a neural network consists of layers, with neurons at every layer. Neurons are connected to the inputs from outside and to the output layer. Weights are adjusted to ensure that the values of input and output are correct. Each neuron is a basic information processing unit. It performs ...

news and views - Cortical Plasticity

... and only a few nonzero weights remaining (Fig. 1b). This means that in an optimal neural network that is operating at maximal capacity and with maximal tolerance to noise, most weights have to be zero for memory retrieval to function correctly. Because zero-valued synaptic weights translate into ine ...

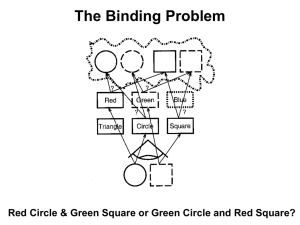

Optional extra slides on the Binding Problem

... Neurons within the loci of attention may selectively gate signals depending upon whether input cells are synchronized. ...

Model Construction in General Intelligence

... want to highlight two interrelated aspects of our findings. First, subjects construct occurrent models of this system as a hierarchical network of metaphors of different scope. And second, these temporary hierarchies scaffold the further development of the subjects’ conceptualizations, that is, of t ...

Learning with Hierarchical-Deep Models

... feature representations, instead of learning them from data, can be detrimental. This is especially important when learning complex tasks, as it is often difficult to hand-craft high-level features explicitly in terms of raw sensory input. Moreover, many HB approaches often assume a fixed hierarchy ...

Learning nonlinear functions on vectors: examples and predictions

... that best describes what it does. The basal ganglia perform action selection, the prefrontal cortex plans and performs abstract thinking, and so on. In theoretical neuroscience, functions are the mathematical operations that can be combined to bring about that higher-level verbal description. While ...

Structured Regularizer for Neural Higher

... x, i.e. $f^{m-n}(y_{t-n+1:t}; t, x) = [\mathbf{1}(y_{t-n+1:t} = a_1)\, g^m(x, t), \ldots]^T$ where $g^m(x, t)$ is an arbitrary function. This function maps an input sub-sequence into a new feature space. In this work, we choose to use MLP networks for this function being able to model complex interactions among the variab ...

Character Recognition using Spiking Neural Networks

... is connected to all of the neurons in the previous layer. Finally, each output layer neuron is connected to every other output neuron via inhibitory lateral connections. These lateral connections reflect the competition among the output neurons. IV. RESULTS For initial testing, the network w ...

Trends in Cognitive Sciences 2007 Bogacz

... On the basis of single-unit recordings from monkeys performing this task [4–6,8], it has been proposed that such perceptual decisions involve three processes [17] (Figure 1). First, the neurons in sensory areas that are responsive to critical aspects of the stimulus (in this task, motion-sensitive neu ...

Canonical Neural Models

... Mathematical modeling is a powerful tool in studying fundamental principles of information processing in the brain. Unfortunately, mathematical analysis of a certain neural model could be of limited value since the results might depend on particulars of that model: Various models of the same neural ...
