Crash Course on Basic Statistics

... than the sample median. As the sample sizes get larger (above 25) the median tends to be the best estimate of the middle value. We can use binomial distribution to estimate the confidence intervals: the following formula constructs a confidence interval around any percentile. The median (0.5) would ...

... than the sample median. As the sample sizes get larger (above 25) the median tends to be the best estimate of the middle value. We can use binomial distribution to estimate the confidence intervals: the following formula constructs a confidence interval around any percentile. The median (0.5) would ...

SUFFICIENT STATISTICS 1. Introduction Let X = (X 1,...,Xn) be a

... Let X = (X1 , . . . , Xn ) be a random sample from fθ , where θ ∈ Θ is unknown. We are interested using X to estimate θ. In the simple case where Xi ∼ Bern(p), we found that the sample mean was an efficient estimator for p. Thus, if we observe a finite sequence of coin flips, in order to have an eff ...

... Let X = (X1 , . . . , Xn ) be a random sample from fθ , where θ ∈ Θ is unknown. We are interested using X to estimate θ. In the simple case where Xi ∼ Bern(p), we found that the sample mean was an efficient estimator for p. Thus, if we observe a finite sequence of coin flips, in order to have an eff ...

overhead - 09 Univariate Probability Distributions

... Develop the parameters if simulating variable using the mean to forecast the deterministic component: ...

... Develop the parameters if simulating variable using the mean to forecast the deterministic component: ...

36c6d9a31e04bad

... spread out/clustered together the scores are Variability is usually defined in terms of distance How far apart scores are from each other How far apart scores are from the mean How representative a score is of the data set as a whole ...

... spread out/clustered together the scores are Variability is usually defined in terms of distance How far apart scores are from each other How far apart scores are from the mean How representative a score is of the data set as a whole ...

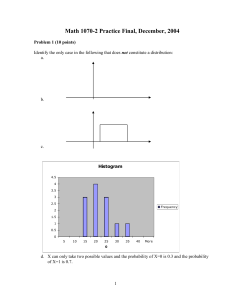

Problem 4 (5 points)

... a. The line that makes the square of the correlation in the data as large as possible. b. The line that makes the sum of the squares of the vertical distances of the data points from the line as small as possible. c. The line that best splits the data in half, with half of the points above the line ...

... a. The line that makes the square of the correlation in the data as large as possible. b. The line that makes the sum of the squares of the vertical distances of the data points from the line as small as possible. c. The line that best splits the data in half, with half of the points above the line ...

Chapter3.3to3.4

... A normal distribution creates a histogram that is symmetrical and has a bell shape, and is used quite a bit in statistical analyses Also called a Gaussian Distribution It is symmetrical with equal mean, median and mode that fall on the line of symmetry of the curve ...

... A normal distribution creates a histogram that is symmetrical and has a bell shape, and is used quite a bit in statistical analyses Also called a Gaussian Distribution It is symmetrical with equal mean, median and mode that fall on the line of symmetry of the curve ...

File psychology stats power p

... the formulas for these measurements. Then we will go through the steps on how to use the formulas. ...

... the formulas for these measurements. Then we will go through the steps on how to use the formulas. ...

Bootstrapping (statistics)

In statistics, bootstrapping can refer to any test or metric that relies on random sampling with replacement. Bootstrapping allows assigning measures of accuracy (defined in terms of bias, variance, confidence intervals, prediction error or some other such measure) to sample estimates. This technique allows estimation of the sampling distribution of almost any statistic using random sampling methods. Generally, it falls in the broader class of resampling methods.Bootstrapping is the practice of estimating properties of an estimator (such as its variance) by measuring those properties when sampling from an approximating distribution. One standard choice for an approximating distribution is the empirical distribution function of the observed data. In the case where a set of observations can be assumed to be from an independent and identically distributed population, this can be implemented by constructing a number of resamples with replacement, of the observed dataset (and of equal size to the observed dataset).It may also be used for constructing hypothesis tests. It is often used as an alternative to statistical inference based on the assumption of a parametric model when that assumption is in doubt, or where parametric inference is impossible or requires complicated formulas for the calculation of standard errors.