Final06Sol

... that these 2 variables are not important causal factors in MORTality. Is there statistical support for this conclusion? There is no evidence of linear association between MORTality and HC or NOX, when all other variables are in the model. However, we cannot readily infer causality (or lack of causal ...

Rival Forms

... the dependent variable will be used, taking into account the effects of the independent variables.
• It can tell us what the relationship is between the choice of rival forms and other factors ...

Download File

... parameter which captures the deterministic portion of the relationship between Y and X, not an estimated coefficient. Please also recognize that ε is the stochastic disturbance in the regression model and not a residual from a least squares regression. This means that if β were known, then for any g ...
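
The difference between the unobservable stochastic disturbance and a least-squares residual can be seen in a small simulation. This is a minimal sketch, not taken from the source: the true intercept and slope (2 and 3), the uniform x values and the standard normal disturbances are hypothetical choices. Because the data are generated here, the disturbances are known, which is never the case with real data.

```python
import random

# Hypothetical "true" parameters; with real data these are unknown.
BETA0, BETA1 = 2.0, 3.0

def simulate(n, seed=0):
    """Draw x, the disturbances eps, and y = BETA0 + BETA1 * x + eps."""
    rng = random.Random(seed)
    xs = [rng.uniform(0, 10) for _ in range(n)]
    eps = [rng.gauss(0, 1) for _ in range(n)]
    ys = [BETA0 + BETA1 * x + e for x, e in zip(xs, eps)]
    return xs, ys, eps

def ols_fit(xs, ys):
    """Least-squares estimates (b0, b1) of the intercept and slope."""
    n = len(xs)
    xbar, ybar = sum(xs) / n, sum(ys) / n
    sxy = sum((x - xbar) * (y - ybar) for x, y in zip(xs, ys))
    sxx = sum((x - xbar) ** 2 for x in xs)
    b1 = sxy / sxx
    return ybar - b1 * xbar, b1

xs, ys, eps = simulate(50)
b0, b1 = ols_fit(xs, ys)
# Residuals depend on the estimates (b0, b1), not on the true parameters.
residuals = [y - (b0 + b1 * x) for x, y in zip(xs, ys)]

print(f"estimates: b0 = {b0:.3f}, b1 = {b1:.3f}")
print(f"sum of residuals:    {sum(residuals):.2e}")
print(f"sum of disturbances: {sum(eps):.3f}")
```

With an intercept in the model, the least-squares residuals sum to zero (up to rounding) by construction, whereas the disturbances sum to whatever the random draw produced; the two sets of numbers are related but not the same.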

Data Modeling and Least Squares Fitting 2 COS 323

...
– If last iteration decreased error, accept the step and decrease λ to λ/10
– If last iteration increased error, reject the step and increase λ to 10λ ...
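
This accept/reject rule for λ is the damping schedule of the Levenberg–Marquardt method. A minimal sketch of a full loop follows, under several assumptions not stated in the snippet: the model y = a·exp(bx), Marquardt's diagonal scaling of JᵀJ (some presentations add λI instead), a starting λ of 10⁻³, and noise-free hypothetical data.

```python
import math

def model(p, x):
    """Hypothetical two-parameter model y = a * exp(b * x)."""
    a, b = p
    return a * math.exp(b * x)

def sse(p, xs, ys):
    """Sum of squared errors; an overflowing trial step counts as infinitely bad."""
    try:
        return sum((y - model(p, x)) ** 2 for x, y in zip(xs, ys))
    except OverflowError:
        return math.inf

def levenberg_marquardt(xs, ys, p0, lam=1e-3, iters=100, tol=1e-20):
    p = list(p0)
    err = sse(p, xs, ys)
    for _ in range(iters):
        if err < tol:
            break
        # Accumulate J^T J (a11, a12, a22) and J^T r (g1, g2), with r = y - model.
        a11 = a12 = a22 = g1 = g2 = 0.0
        a, b = p
        for x, y in zip(xs, ys):
            e = math.exp(b * x)
            j1, j2 = e, a * x * e  # partial derivatives w.r.t. a and b
            r = y - a * e
            a11 += j1 * j1
            a12 += j1 * j2
            a22 += j2 * j2
            g1 += j1 * r
            g2 += j2 * r
        # Damped normal equations (J^T J + lam * diag(J^T J)) delta = J^T r,
        # solved directly as a 2x2 linear system.
        m11, m22 = a11 * (1 + lam), a22 * (1 + lam)
        det = m11 * m22 - a12 * a12
        d1 = (m22 * g1 - a12 * g2) / det
        d2 = (m11 * g2 - a12 * g1) / det
        trial = [p[0] + d1, p[1] + d2]
        trial_err = sse(trial, xs, ys)
        if trial_err < err:
            p, err = trial, trial_err  # error decreased: accept, lam -> lam / 10
            lam /= 10
        else:
            lam *= 10                  # error increased: reject, lam -> 10 * lam
    return p, err

# Noise-free hypothetical data generated from a = 2, b = 0.5.
xs = [0.5 * i for i in range(9)]
ys = [2.0 * math.exp(0.5 * x) for x in xs]
params, final_err = levenberg_marquardt(xs, ys, p0=(1.5, 0.4))
print(params, final_err)
```

Small λ makes the step close to a Gauss–Newton step; large λ makes it a short, scaled gradient-descent step, which is why raising λ after a failed step eventually yields a step that reduces the error.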

Document

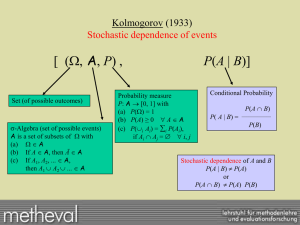

... E(Y | X) can be highly misleading if causally interpreted. It may indicate a positive dependence when in fact there is a negative individual causal effect for each and every individual in the population (see Steyer et al., 2000). MPR-online, "Causal Regression Models I" ...
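
A toy numerical example makes the point concrete. The population below is entirely hypothetical (it is not taken from Steyer et al.): every unit's individual causal effect of treatment X on Y is −1, but treatment is concentrated among units with a high baseline outcome, so the regression E(Y | X) shows a positive dependence.

```python
# Hypothetical population of (group, x) pairs; x = 1 means treated.
population = [("A", 1)] * 90 + [("A", 0)] * 10 + [("B", 1)] * 10 + [("B", 0)] * 90

# Potential outcomes per group: Y0 if untreated, Y1 if treated.
Y0 = {"A": 10.0, "B": 2.0}
Y1 = {"A": 9.0, "B": 1.0}

def observed_y(group, x):
    """Only one potential outcome is observed for each unit."""
    return Y1[group] if x == 1 else Y0[group]

def conditional_mean(x):
    """Population E(Y | X = x) computed from the observed outcomes."""
    values = [observed_y(g, t) for g, t in population if t == x]
    return sum(values) / len(values)

regression_difference = conditional_mean(1) - conditional_mean(0)
individual_effects = {Y1[g] - Y0[g] for g, _ in population}

print(f"E(Y|X=1) - E(Y|X=0) = {regression_difference:.1f}")  # positive
print(f"individual causal effects: {individual_effects}")    # all negative
```

The positive difference comes entirely from who gets treated (mostly group A, whose baseline is high), not from the effect of treatment itself.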

Regression + Structural Equation Modeling

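... The best-fit (least squares) line gives the best estimate of Y based on X in the sense that it minimizes the sum of squared prediction errors. Any other line would have a larger sum of squared errors on the same data, so it would not be as useful for prediction ...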
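
The optimality claim can be checked numerically: fit the least-squares line, then nudge its intercept or slope and compare sums of squared errors. A minimal sketch with made-up data:

```python
def ols(xs, ys):
    """Least-squares intercept and slope for a single predictor."""
    n = len(xs)
    xbar, ybar = sum(xs) / n, sum(ys) / n
    sxy = sum((x - xbar) * (y - ybar) for x, y in zip(xs, ys))
    sxx = sum((x - xbar) ** 2 for x in xs)
    b1 = sxy / sxx
    return ybar - b1 * xbar, b1

def sse(b0, b1, xs, ys):
    """Sum of squared prediction errors of the line y = b0 + b1 * x."""
    return sum((y - (b0 + b1 * x)) ** 2 for x, y in zip(xs, ys))

# Hypothetical data for illustration.
xs = [1, 2, 3, 4, 5]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]

b0, b1 = ols(xs, ys)
best = sse(b0, b1, xs, ys)

# Shift the intercept and/or slope; each alternative line has a larger SSE.
alternatives = [(b0 + 0.1, b1), (b0 - 0.1, b1), (b0, b1 + 0.05),
                (b0, b1 - 0.05), (b0 + 0.2, b1 - 0.1)]
alt_sse = [sse(a0, a1, xs, ys) for a0, a1 in alternatives]

print(f"least-squares line: y = {b0:.3f} + {b1:.3f} x, SSE = {best:.4f}")
print("alternative SSEs:", [round(s, 4) for s in alt_sse])
```

The guarantee is tied to the criterion: a line chosen under a different loss (for example, least absolute deviations) can do better than the least-squares line on that other loss.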

Review of Probability and Statistics

... coefficients across the three models. We can compare the sign and significance (based on a standard t test) of coefficients, though. To compare the magnitude of effects, we need to calculate the derivatives, say at the means; Stata will do this for you in the probit case. Economics 20 - Prof. Anderson ...
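
In the probit model P(Y = 1 | x) = Φ(x'β), so the derivative with respect to a continuous regressor x_j is φ(x'β)·β_j; evaluated at the sample means this is the "marginal effect at the means". The sketch below uses made-up coefficients and means, and cross-checks the formula against a finite difference. In recent versions of Stata, `margins, dydx(*) atmeans` after `probit` reports this quantity.

```python
import math

def normal_pdf(z):
    """Standard normal density phi(z)."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)

def normal_cdf(z):
    """Standard normal distribution function Phi(z)."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

# Hypothetical probit estimates: intercept, x1, x2.
beta = [-1.0, 0.8, -0.3]
# Hypothetical sample means; the leading 1.0 multiplies the intercept.
x_means = [1.0, 1.2, 2.5]

z_bar = sum(b * x for b, x in zip(beta, x_means))  # index xbar' beta
# dP(Y = 1)/dx_j = phi(xbar' beta) * beta_j, evaluated at the means.
marginal_effects = [normal_pdf(z_bar) * b for b in beta[1:]]

# Cross-check with a central finite difference of Phi(x' beta) in each regressor.
h = 1e-5
numeric = []
for j in (1, 2):
    up = list(x_means)
    up[j] += h
    down = list(x_means)
    down[j] -= h
    p_up = normal_cdf(sum(b * x for b, x in zip(beta, up)))
    p_down = normal_cdf(sum(b * x for b, x in zip(beta, down)))
    numeric.append((p_up - p_down) / (2 * h))

print("marginal effects at means:", [round(m, 4) for m in marginal_effects])
print("finite-difference check:  ", [round(m, 4) for m in numeric])
```

Unlike the raw coefficients, these derivatives are on the probability scale, so they can be compared with linear probability model coefficients.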

Assignment

... species in a habitat patch. In this case, however, the use sample will be compared against an ‘available’ sample.
• Import ‘Data4a.csv’, which is provided on the web-site.
• Make sure the data was imported correctly.
• Generate some summary statistics on the data (mean, min, max, etc.).
• Run a logistic regressi ...
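
The steps above can be sketched on a small stand-in data set, since ‘Data4a.csv’ is not reproduced here. The single covariate and its values are hypothetical, with 1 marking a used point and 0 an available point; the fit uses Newton–Raphson on the log-likelihood, which is one standard way to compute logistic regression estimates (a statistics package would normally be used instead).

```python
import math

def fit_logistic(xs, ys, iters=25):
    """Fit logit P(y = 1) = b0 + b1 * x by Newton-Raphson on the log-likelihood."""
    b0 = b1 = 0.0
    for _ in range(iters):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for x, y in zip(xs, ys):
            p = 1.0 / (1.0 + math.exp(-(b0 + b1 * x)))
            w = p * (1.0 - p)
            g0 += y - p          # score for the intercept
            g1 += (y - p) * x    # score for the slope
            h00 += w             # entries of the information matrix
            h01 += w * x
            h11 += w * x * x
        det = h00 * h11 - h01 * h01
        # Newton step: beta <- beta + I^{-1} * score
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (h00 * g1 - h01 * g0) / det
    return b0, b1

# Hypothetical habitat covariate for used (1) and available (0) points.
xs = [0.5, 1.0, 1.2, 1.5, 2.0, 2.5, 2.8, 3.0, 3.5, 4.0]
ys = [0, 0, 0, 1, 0, 1, 1, 0, 1, 1]

# Summary statistics, then the fit.
n = len(xs)
print("mean x:", sum(xs) / n, "min:", min(xs), "max:", max(xs))
b0, b1 = fit_logistic(xs, ys)
fitted = [1.0 / (1.0 + math.exp(-(b0 + b1 * x))) for x in xs]
print(f"b0 = {b0:.3f}, b1 = {b1:.3f}")
```

At convergence the score equations hold, so the fitted probabilities sum to the number of used points; this is a quick sanity check on any logistic fit that includes an intercept.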

Chapter 14, part C

... A. Total Sum of Squares (SST): If you had to estimate repair cost but had no knowledge of the car’s age, what would be your best guess? Probably the mean repair cost. If we subtract each y_i from the mean, we calculate the error involved in using the mean to estimate cost. I hope our regression equat ...
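
Written out, SST = Σ(y_i − ȳ)², the squared error from always guessing the mean. A small worked computation, using made-up repair costs for five cars:

```python
# Hypothetical repair costs (in dollars); car ages are deliberately ignored,
# matching the "no knowledge of the car's age" scenario.
costs = [300.0, 450.0, 500.0, 700.0, 850.0]

mean_cost = sum(costs) / len(costs)
deviations = [y - mean_cost for y in costs]  # error from guessing the mean
sst = sum(d ** 2 for d in deviations)        # Total Sum of Squares

print(f"mean = {mean_cost}, SST = {sst}")    # prints mean = 560.0, SST = 187000.0
```

The deviations themselves always sum to zero, which is why they are squared before adding; a regression on age is then judged by how much of this 187,000 it removes.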

Powerpoint Slides for Unit 10

... distribution is probably OK. Residuals from a regression of Log Weekly Earnings on demographic characteristics ...

ppt - Department of Statistics | Rajshahi University

... be more or less close to each other and close to −0.58 for this example.
• If x, y and z have a trivariate normal distribution, then theoretically the value of the correlation coefficient will be the same for each x.
• Thus Solution 1 does not need any distributional assumptions but gives multiple answers, whereas solut ...
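
Under trivariate normality, the conditional correlation of y and z given x does not depend on x and equals the partial correlation ρ_yz·x = (ρ_yz − ρ_xy ρ_xz) / √((1 − ρ_xy²)(1 − ρ_xz²)). Assuming this is the correlation the slides have in mind (the snippet does not say so explicitly), it can be estimated by plugging sample correlations into the formula. A minimal sketch with hypothetical correlations:

```python
import math

def partial_corr(r_yz, r_xy, r_xz):
    """Correlation of y and z after removing the linear effect of x."""
    return (r_yz - r_xy * r_xz) / math.sqrt((1 - r_xy ** 2) * (1 - r_xz ** 2))

# Hypothetical pairwise correlations, for illustration only.
print(round(partial_corr(0.3, 0.6, 0.8), 3))
```

When x is uncorrelated with both y and z, the partial correlation reduces to the ordinary correlation of y and z.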

Regression with limited dependent variables

... this is a linear regression model, $Y_i = b_0 + b_1 X_{1i} + \dots + b_K X_{Ki} + e_i$, with $\Pr(Y_i = 1 \mid X_{1i}, \dots, X_{Ki}) = b_0 + b_1 X_{1i} + \dots + b_K X_{Ki}$. $b_1$ is the change in the probability that $Y = 1$ associated with a unit change in $X_1$, holding constant $X_2, \dots, X_K$, etc. This can be estimated by OLS, but note that since ...
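
A linear probability model is just OLS with a 0/1 outcome. One well-known limitation is that its fitted "probabilities" are not confined to [0, 1]. A minimal sketch with a hypothetical binary outcome and one regressor:

```python
def ols(xs, ys):
    """Least-squares intercept and slope for a single predictor."""
    n = len(xs)
    xbar, ybar = sum(xs) / n, sum(ys) / n
    sxy = sum((x - xbar) * (y - ybar) for x, y in zip(xs, ys))
    sxx = sum((x - xbar) ** 2 for x in xs)
    b1 = sxy / sxx
    return ybar - b1 * xbar, b1

# Hypothetical binary outcome Y and a single regressor X.
xs = list(range(10))
ys = [0, 0, 0, 0, 1, 0, 1, 1, 1, 1]

b0, b1 = ols(xs, ys)  # linear probability model fitted by OLS
fitted = [b0 + b1 * x for x in xs]

print(f"b1 = {b1:.3f}: change in Pr(Y = 1) per unit change in X")
print("fitted values outside [0, 1]:",
      [round(p, 3) for p in fitted if p < 0 or p > 1])
```

Here the fitted value is below 0 at the smallest X and above 1 at the largest, which is one motivation for probit and logit models.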

Linear regression

In statistics, linear regression is an approach for modeling the relationship between a scalar dependent variable y and one or more explanatory variables (or independent variables) denoted X. The case of one explanatory variable is called simple linear regression. For more than one explanatory variable, the process is called multiple linear regression. (This term should be distinguished from multivariate linear regression, where multiple correlated dependent variables are predicted, rather than a single scalar variable.)

In linear regression, data are modeled using linear predictor functions, and unknown model parameters are estimated from the data. Such models are called linear models. Most commonly, linear regression refers to a model in which the conditional mean of y given the value of X is an affine function of X. Less commonly, linear regression could refer to a model in which the median, or some other quantile of the conditional distribution of y given X, is expressed as a linear function of X. Like all forms of regression analysis, linear regression focuses on the conditional probability distribution of y given X, rather than on the joint probability distribution of y and X, which is the domain of multivariate analysis.

Linear regression was the first type of regression analysis to be studied rigorously and to be used extensively in practical applications. This is because models which depend linearly on their unknown parameters are easier to fit than models which are non-linearly related to their parameters, and because the statistical properties of the resulting estimators are easier to determine.

Linear regression has many practical uses. Most applications fall into one of the following two broad categories:
• If the goal is prediction, or forecasting, or error reduction, linear regression can be used to fit a predictive model to an observed data set of y and X values. After developing such a model, if an additional value of X is then given without its accompanying value of y, the fitted model can be used to make a prediction of the value of y.
• Given a variable y and a number of variables X1, ..., Xp that may be related to y, linear regression analysis can be applied to quantify the strength of the relationship between y and the Xj, to assess which Xj may have no relationship with y at all, and to identify which subsets of the Xj contain redundant information about y.

Linear regression models are often fitted using the least squares approach, but they may also be fitted in other ways, such as by minimizing the "lack of fit" in some other norm (as with least absolute deviations regression), or by minimizing a penalized version of the least squares loss function as in ridge regression (L2-norm penalty) and lasso (L1-norm penalty). Conversely, the least squares approach can be used to fit models that are not linear models. Thus, although the terms "least squares" and "linear model" are closely linked, they are not synonymous.
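
For a single predictor, the contrast between least squares and ridge regression fits in a few lines. Minimizing Σ(y_i − b0 − b1 x_i)² + λ·b1² with the intercept left unpenalized (a common convention, assumed here) gives b1 = Sxy / (Sxx + λ) and b0 = ȳ − b1·x̄, so λ = 0 recovers ordinary least squares. A minimal sketch with made-up data, including a prediction at a new x value; lasso is not shown.

```python
def ridge_simple(xs, ys, lam):
    """Ridge fit of y = b0 + b1 * x with an unpenalized intercept.

    lam = 0 reproduces ordinary least squares.
    """
    n = len(xs)
    xbar, ybar = sum(xs) / n, sum(ys) / n
    sxy = sum((x - xbar) * (y - ybar) for x, y in zip(xs, ys))
    sxx = sum((x - xbar) ** 2 for x in xs)
    b1 = sxy / (sxx + lam)  # the L2 penalty shrinks the slope toward zero
    b0 = ybar - b1 * xbar
    return b0, b1

# Hypothetical data for illustration.
xs = [1, 2, 3, 4, 5]
ys = [2.1, 3.9, 6.2, 7.8, 10.1]

for lam in (0.0, 5.0, 10.0):
    b0, b1 = ridge_simple(xs, ys, lam)
    # Use the fitted line to predict y at a new, hypothetical x = 6.
    print(f"lambda={lam:>4}: slope={b1:.3f}, prediction at x=6: {b0 + b1 * 6:.3f}")
```

Larger λ trades some bias (a flatter line) for lower variance of the estimates; choosing λ is usually done by cross-validation.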