* Your assessment is very important for improving the workof artificial intelligence, which forms the content of this project

Download Review of Linear Algebra - Carnegie Mellon University

Rotation matrix wikipedia , lookup

Determinant wikipedia , lookup

Matrix (mathematics) wikipedia , lookup

Exterior algebra wikipedia , lookup

System of linear equations wikipedia , lookup

Jordan normal form wikipedia , lookup

Laplace–Runge–Lenz vector wikipedia , lookup

Cayley–Hamilton theorem wikipedia , lookup

Non-negative matrix factorization wikipedia , lookup

Principal component analysis wikipedia , lookup

Perron–Frobenius theorem wikipedia , lookup

Eigenvalues and eigenvectors wikipedia , lookup

Orthogonal matrix wikipedia , lookup

Euclidean vector wikipedia , lookup

Gaussian elimination wikipedia , lookup

Singular-value decomposition wikipedia , lookup

Vector space wikipedia , lookup

Matrix multiplication wikipedia , lookup

Covariance and contravariance of vectors wikipedia , lookup

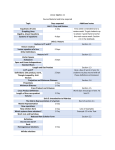

+ Review of Linear Algebra 10-725 - Optimization 1/14/10 Recitation Sivaraman Balakrishnan + Outline Matrix subspaces Linear independence and bases Gaussian Eigen elimination values and Eigen vectors Definiteness Matlab essentials Geoff’s LP sketcher linprog Debugging and using documentation Basic concepts Vector in Rn is an ordered set of n real numbers. 1 6 3 4 e.g. v = (1,6,3,4) is in R4 A column vector: A row vector: m-by-n matrix is an object with m rows and n columns, each entry filled with a real (typically) number: 1 6 3 4 1 2 8 4 78 6 9 3 2 Basic concepts - II Vector dot product: u v u1 u2 v1 v2 u1v1 u2v2 Matrix product: a11 a12 b11 b12 , B A a21 a22 b21 b22 a11b11 a12b21 a11b12 a12b22 AB a21b11 a22b21 a21b12 a22b22 + Matrix subspaces What is a matrix? Geometric notion – a matrix is an object that “transforms” a vector from its row space to its column space Vector space – set of vectors closed under scalar multiplication and addition Subspace – subset of a vector space also closed under these operations Always contains the zero vector (trivial subspace) + Row space of a matrix Vector space spanned by rows of matrix Span – set of all linear combinations of a set of vectors This isn’t always Rn – example !! Dimension of the row space – number of linearly independent rows (rank) We’ll discuss how to calculate the rank in a couple of slides + Null space, column space Null space – it is the orthogonal compliment of the row space Every vector in this space is a solution to the equation Ax = 0 Rank – nullity theorem Column space Compliment of rank-nullity + Linear independence A set of vectors is linearly independent if none of them can be written as a linear combination of the others Given a vector space, we can find a set of linearly independent vectors that spans this space The cardinality of this set is the dimension of the vector space + Gaussian elimination Finding rank and row echelon form Applications Solving a linear system of equations (we saw this in class) Finding inverse of a matrix + Basis of a vector space What is a basis? A basis is a maximal set of linearly independent vectors and a minimal set of spanning vectors of a vector space Orthonormal basis Two vectors are orthonormal if their dot product is 0, and each vector has length 1 An orthonormal basis consists of orthonormal vectors. What is special about orthonormal bases? Projection is easy Very useful length property Universal (Gram Schmidt) given any basis can find an orthonormal basis that has the same span + Matrices as constraints Geoff introduced writing an LP with a constraint matrix We know how to write any LP in standard form Why not just solve it to find “opt”? A special basis for square matrices The eigenvectors of a matrix are unit vectors that satisfy Ax = λx Example calculation on next slide Eigenvectors are orthonormal and eigenvalues are real for symmetric matrices This is the most useful orthonormal basis with many interesting properties Optimal matrix approximation (PCA/SVD) Other famous ones are the Fourier basis and wavelet basis Eigenvalues (A – λI)x = 0 λ is an eigenvalue iff det(A – λI) = 0 Example: 4 5 1 A 0 3 / 4 6 0 0 1 / 2 4 5 1 det( A I ) 0 3/ 4 6 (1 )(3 / 4 )(1 / 2 ) 0 0 1 / 2 1, 3 / 4, 1 / 2 + Projections (vector) (2,2,2) b = (2,2) (0,0,1) (0,1,0) (1,0,0) 2 1 0 0 2 2 0 1 0 2 0 0 0 0 2 a = (1,0) 2 ab c T a aa 0 T + Matrix projection Generalize formula from the previous slide Special case of orthonormal matrix Projected vector = (QTQ)-1 QTv Projected vector = QTv You’ve probably seen something very similar in least squares regression Definiteness Characterization based on eigen values Positive definite matrices are a special sub-class of invertible matrices One way to test for positive definiteness is by showing xTAx > 0 for all x A very useful example that you’ll see a lot in this class Covariance matrix Matlab Tutorial - 1 Linsolve Stability and condition number Geoff’s sketching code – might be very useful for HW1 Matlab Tutorial - 2 Linprog – Also, very useful for HW1 Also, covered debugging basics and using Matlab help + Extra stuff Vector and matrix norms Matrix norms - operator norm, Frobenius norm Vector norms - Lp norms Determinants SVD/PCA