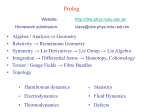

M1GLA: Geometry and Linear Algebra Lecture Notes

Linear least squares (mathematics)

Symmetric cone

Rotation matrix

Laplace–Runge–Lenz vector

Euclidean vector

Determinant

Matrix (mathematics)

Non-negative matrix factorization

Exterior algebra

Vector space

Singular-value decomposition

Covariance and contravariance of vectors

Jordan normal form

Orthogonal matrix

Perron–Frobenius theorem

Gaussian elimination

Cayley–Hamilton theorem

System of linear equations

Eigenvalues and eigenvectors

Matrix multiplication