The columns of AB are combinations of the columns of A. The reason is that each column of AB equals A

times the corresponding column of B. But that is a linear combination of the columns of A with

coefficients given by the entries in the column of B.
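The column view above can be checked numerically. The following is a minimal sketch; the matrices A and B and the helper names (matmul, column, combo_of_columns) are illustrative choices, not part of the original problem.

```python
def matmul(A, B):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]

def column(M, j):
    """Return column j of M as a list."""
    return [row[j] for row in M]

def combo_of_columns(A, coeffs):
    """Linear combination of the columns of A with the given coefficients."""
    return [sum(c * A[i][k] for k, c in enumerate(coeffs))
            for i in range(len(A))]

# Illustrative matrices (assumed for this sketch).
A = [[1, 2], [3, 4], [5, 6]]      # 3x2
B = [[7, 8, 9], [10, 11, 12]]     # 2x3

AB = matmul(A, B)

# Column j of AB equals the combination of the columns of A
# whose coefficients are the entries of column j of B.
for j in range(len(B[0])):
    assert column(AB, j) == combo_of_columns(A, column(B, j))
```

Each pass through the loop compares one column of AB with A times the corresponding column of B, which is exactly the statement in the text.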

The product is defined when n = p and then the dimensions of the product are m × q.

This MUST hold – matrix multiplication satisfies the distributive property.

This might hold. (A+B)(A−B) = A^2 + BA − AB − B^2, and this equals A^2 − B^2 if and only if AB = BA. Sometimes that is true, but not always.
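A small numerical check makes the role of commutativity concrete. This is a sketch under assumed data: the matrices A, B, and C below are hypothetical examples, chosen so that A and B do not commute while A and C do.

```python
def matmul(X, Y):
    """Multiply two square matrices stored as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def add(X, Y):
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]

def sub(X, Y):
    return [[x - y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]

def difference_of_squares_holds(X, Y):
    """True when (X+Y)(X-Y) equals X^2 - Y^2."""
    lhs = matmul(add(X, Y), sub(X, Y))
    rhs = sub(matmul(X, X), matmul(Y, Y))
    return lhs == rhs

A = [[1, 2], [0, 1]]
B = [[0, 1], [1, 0]]   # AB != BA
C = [[2, 0], [0, 2]]   # a multiple of I, so AC == CA

fails_for_B = not difference_of_squares_holds(A, B)
holds_for_C = difference_of_squares_holds(A, C)
```

With these choices the identity fails for the pair (A, B) and holds for the pair (A, C), matching the condition AB = BA.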

Cancellation does not always work, and it is quite possible for AB=AC to hold, even when B≠C. One way

to see this is as follows: AB=AC if and only if AB-AC = 0 if and only if A(B-C) = 0. So if B-C is a nonzero

matrix whose columns are all in the null space of A, then AB=AC but B doesn’t have to equal C. Here is a

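specific example:

\[
\begin{bmatrix} 1 & -2 \\ -3 & 6 \end{bmatrix}
\begin{bmatrix} 4 & 3 \\ 2 & 1 \end{bmatrix}
=
\begin{bmatrix} 1 & -2 \\ -3 & 6 \end{bmatrix}
\begin{bmatrix} 6 & 7 \\ 3 & 3 \end{bmatrix}
\]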

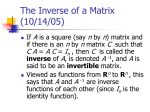
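The cancellation counterexample can be verified directly. The sketch below uses the three matrices from the example; the helper name matmul is an illustrative choice.

```python
def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]

A = [[1, -2], [-3, 6]]
B = [[4, 3], [2, 1]]
C = [[6, 7], [3, 3]]

AB = matmul(A, B)
AC = matmul(A, C)

# B - C is nonzero, but each of its columns lies in the null space of A.
B_minus_C = [[b - c for b, c in zip(rb, rc)] for rb, rc in zip(B, C)]
A_times_diff = matmul(A, B_minus_C)
```

Here AB and AC come out equal even though B and C differ, and A(B−C) is the zero matrix, which is the mechanism described in the argument.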

A square matrix A is invertible if there exists a matrix B (with the same dimensions) such that both AB

and BA equal the identity matrix.

Given an n×n matrix A, append an n×n identity matrix to create [A | I]. Now reduce this combined matrix to rref. If the first n columns are pivot columns, then the rref has the form [I | B], and the right side, B, is the inverse of A. If the first n columns of the rref are not all pivot columns, then A is not invertible.
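The procedure can be sketched in code. This is a minimal illustration using exact rational arithmetic, not a production routine; the function name inverse_by_row_reduction and the sample matrices are assumptions made for the example.

```python
from fractions import Fraction

def inverse_by_row_reduction(A):
    """Row reduce [A | I]; return the right block, or None if A is singular."""
    n = len(A)
    # Build the augmented matrix [A | I] with exact rational entries.
    M = [[Fraction(x) for x in row] +
         [Fraction(int(i == j)) for j in range(n)]
         for i, row in enumerate(A)]
    for col in range(n):
        # Look for a nonzero entry in this column, at or below the diagonal.
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            return None  # this column is not a pivot column
        M[col], M[pivot] = M[pivot], M[col]      # row swap
        p = M[col][col]
        M[col] = [x / p for x in M[col]]         # scale so the pivot is 1
        for r in range(n):
            if r != col and M[r][col] != 0:
                f = M[r][col]
                # Add a multiple of the pivot row to clear the entry.
                M[r] = [x - f * y for x, y in zip(M[r], M[col])]
    return [row[n:] for row in M]

inv = inverse_by_row_reduction([[3, 7], [2, 4]])
singular = inverse_by_row_reduction([[1, -2], [-3, 6]])
```

For the first sample matrix the right block is the inverse; for the second, the second column has no pivot, so the function reports that no inverse exists.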

An elementary matrix is one that is obtained by performing a single row operation on the identity matrix.

If we do this and create the matrix E, then multiplying EA produces the same result as applying the row

operation to A.
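To see this equivalence on a concrete case, here is a short sketch. The matrix A, the chosen row operation, and the helper names are illustrative assumptions.

```python
def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y)))
             for j in range(len(Y[0]))]
            for i in range(len(X))]

def identity(n):
    return [[int(i == j) for j in range(n)] for i in range(n)]

def add_multiple(M, src, dst, r):
    """Return a copy of M with r times row src added to row dst."""
    out = [row[:] for row in M]
    out[dst] = [d + r * s for d, s in zip(M[dst], M[src])]
    return out

A = [[1, 2], [3, 4], [5, 6]]

# Elementary matrix: apply the row operation to the identity.
E = add_multiple(identity(3), 0, 2, -5)

EA = matmul(E, A)
direct = add_multiple(A, 0, 2, -5)
```

Both EA and the directly row-reduced matrix agree, so multiplying by E on the left performs the row operation.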

We can see that every elementary matrix is invertible by constructing generic examples for each

type of row operation and then using the parts of the invertible matrix theorem. For example, if we swap

two rows of the identity matrix, the resulting matrix still has independent columns, and so is invertible.

Similarly, if we multiply one row of the identity matrix by a nonzero scalar r, the resulting matrix is still a

diagonal matrix. This allows us to compute its determinant as r and since that is nonzero, the matrix is

invertible. Finally, when a multiple of one row is added to another, the identity matrix is changed into a

triangular matrix with all 1’s on the diagonal. Again we can compute the determinant to see that the

result is invertible. Alternatively, we can argue that since every row operation is reversible by another

row operation, the row operation matrices are all invertible.

If A is invertible, then we can reduce it to I by applying a sequence of row operations. If we do this using

elementary matrices, then we get an equation like this:

\[ E_p E_{p-1} \cdots E_2 E_1 A = I \]

where E_j is the elementary matrix for the jth row operation we perform on A. Multiplying both sides of this equation on the left by the inverses of the elementary matrices in reverse order, we obtain

\[ A = E_1^{-1} E_2^{-1} \cdots E_{p-1}^{-1} E_p^{-1} \]

This shows that A is a product of elementary matrices, because the inverse of each elementary matrix is another elementary matrix.

The column space of an m×n matrix A is the subspace of ℝ^m spanned by the columns of A. That is, it is

the set of all linear combinations of the columns of A.

A vector space is an algebraic system consisting of a non-empty set V and two operations, addition of

elements of V (the elements are called vectors) and multiplication of elements of V by real numbers (called

scalars) for which the following hold:

1. For any v and w in V, the sum v+w is also in V.

2. For any v and w in V, v+w= w+v.

3. For any u, v, and w in V, (u+v)+w = u + (v+w)

4. There exists an element 0 in V such that v+0 = v for all v in V.

5. For any v in V there exists an element –v in V such that –v + v = 0.

6. For any v in V and any scalar r, the product rv is also in V.

7. For any v and w in V and any scalar r, r(v+w) = rv + rw.

8. For any v in V and any scalars r and s, (r+s)v = rv+sv.

9. For any v in V and any scalars r and s, r(sv) = (rs)v.

10. For any v in V, 1v = v.

The set {v1, v2, … , vp} is linearly independent if the equation c1v1 + c2v2 + … + cpvp = 0 holds if and only if

all the scalars cj are zero.

If V can be spanned by a finite set, then the dimension of V is the number of elements in any basis. That is, the dimension is n if and only if every linearly independent spanning set has n elements. If V cannot be spanned by any finite set, then V is infinite-dimensional.

The kernel of a linear transformation T consists of all the vectors v in the domain of T for which T(v) = 0.

Let A be an n×n invertible matrix, and let b be a vector in ℝ^n. For each j between 1 and n inclusive, define a matrix Bj by replacing the jth column of A by b. Then Cramer’s rule states that the unique solution to the system Ax = b is the vector x = [x1 x2 … xn]^T, where xj = det(Bj)/det(A) for all j.

Let A be the matrix on the left side of the equation. We can see that det(A) = (3)(4) – (2)(7) = -2. Now

replace the first column of A with [5 11]^T to make B1 and replace the second column with [5 11]^T to make

B2 and take the determinants. We find

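\[
\det B_1 = \begin{vmatrix} 5 & 7 \\ 11 & 4 \end{vmatrix} = 20 - 77 = -57
\quad\text{and}\quad
\det B_2 = \begin{vmatrix} 3 & 5 \\ 2 & 11 \end{vmatrix} = 33 - 10 = 23.
\]

Therefore, according to Cramer’s rule, the solution is given by

\[
\begin{bmatrix} x \\ y \end{bmatrix} = \begin{bmatrix} 57/2 \\ -23/2 \end{bmatrix}
\]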
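The computation can be reproduced with a short script. This is a sketch for the 2×2 case only. The matrix A = [3 7; 2 4] is inferred from the determinant computations above, since the original system is not restated here, and the function names are illustrative.

```python
from fractions import Fraction

def det2(M):
    """Determinant of a 2x2 matrix stored as a list of rows."""
    return M[0][0] * M[1][1] - M[0][1] * M[1][0]

def cramer_2x2(A, b):
    """Solve Ax = b by Cramer's rule for an invertible 2x2 matrix A."""
    d = det2(A)
    if d == 0:
        raise ValueError("A is not invertible, so Cramer's rule does not apply")
    solution = []
    for j in range(2):
        # B_j is A with column j replaced by b.
        Bj = [[b[i] if k == j else A[i][k] for k in range(2)]
              for i in range(2)]
        solution.append(Fraction(det2(Bj), d))
    return solution

A = [[3, 7], [2, 4]]   # inferred from det(A) = (3)(4) - (2)(7)
b = [5, 11]
x, y = cramer_2x2(A, b)
```

Substituting the result back into both equations (3x + 7y and 2x + 4y) recovers the right-hand side [5 11]^T, which confirms the signs of the two fractions.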

This is FALSE. Independent columns indicate a nonzero determinant, but not necessarily a determinant

of 1. For example, a 2×2 diagonal matrix with 2 and 3 on the diagonal has independent columns and the

determinant is 6, not 1.

This is TRUE – it is one of the results stated in the Determinants handout.

This is TRUE. We know that det(AB) = det(A)det(B) and det(BA) = det(B)det(A). But since det(A) and

det(B) are real numbers, we also know that det(A)det(B) = det(B)det(A). So we have

det(AB) = det(A)det(B) = det(B)det(A) = det(BA).

This is a triangular matrix, so the determinant is the product of the diagonal entries: (1)(2)(-1)(4)(5)=-40.

This matrix has a column of all 0’s. That means the columns are not linearly independent, so the matrix

is not invertible, so its determinant is 0. Or, if we compute the determinant by the method of minors, and

choose all of our elements from the second column, then we see that all the coefficients will be 0 so the

determinant is 0.

The invertible matrices do NOT form a subspace. In particular, the zero matrix is NOT invertible, so it

is not in the set under consideration. But every subspace must include the zero element. Therefore, the

set of invertible matrices is not a subspace.

This H is not closed under addition. For example, if we add (3,5) + (5,3), both of which are in H, we get

(8,8) which is not in H.

The given information tells us that q(t) = 2(first basis element) + 3(third basis element). Therefore, we have q(t) = 2 + 3(t+1)^2 = 3t^2 + 6t + 5.

We have to solve the equation p(t) = a(1) + b(t+1) + c(t+1)^2. We can see by inspection that the coefficient c of (t+1)^2 must be 0, because p has no quadratic term. Substituting the definition for p and expanding the right side, we get 3t + 5 = bt + (a+b), so we conclude that b = 3, and then a must be 2. That is, p(t) = 2(1) + 3(t+1), so [p(t)]_B = [2 3 0]^T.
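Both coordinate computations can be checked by expanding the basis polynomials. The sketch below assumes the basis is {1, t+1, (t+1)^2}, as the equation for p(t) suggests, and stores each polynomial as a hypothetical coefficient list [c0, c1, c2].

```python
# Polynomials of degree <= 2 as coefficient lists [c0, c1, c2],
# meaning c0 + c1*t + c2*t^2.
# Basis assumed from the solution text: {1, t+1, (t+1)^2}.
BASIS = [
    [1, 0, 0],   # 1
    [1, 1, 0],   # t + 1
    [1, 2, 1],   # (t + 1)^2 = 1 + 2t + t^2
]

def from_coordinates(coords):
    """Build the polynomial whose coordinates relative to BASIS are coords."""
    return [sum(c * b[k] for c, b in zip(coords, BASIS)) for k in range(3)]

q = from_coordinates([2, 0, 3])   # coordinates of q: 2, 0, 3
p = from_coordinates([2, 3, 0])   # coordinates of p: 2, 3, 0
```

The list for q corresponds to 5 + 6t + 3t^2 and the list for p to 5 + 3t, in agreement with the two answers.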

A basis for the column space is given by the first, second, and fourth columns of A. We know that these

are linearly independent because the corresponding columns of B are linearly independent, and because

row operations do not change linear dependencies between the columns. Now we know that the column

space of A is spanned by the 5 columns of A. But we can see in B that the third column is a linear

combination of the first 2, and that the fifth column is a linear combination of columns 1, 2, and 4. The

same must be true of the columns of A. So we see that the 3rd and 5th columns of A are dependent on

columns 1, 2, and 4, and can thus be eliminated without changing the set spanned by the columns. So we

see that columns 1, 2, and 4 are linearly independent and span the column space. That makes them a

basis for the column space.

The nonzero rows of B form a basis for the row space. We can see that they are linearly independent,

and they clearly span the row space of B. Since that is the same as the row space of A, we can see that

these rows are a basis for the row space of A.

The null space of A is the set of solutions to the homogeneous equation Ax = 0. Looking at B, we see that

it is in reduced row echelon form, so B is the rref of A. Solving for the basic variables, we find

x1 = 2x3 – x5

x2 = -x3 + 2x5

x4 = 0x3 – x5.

This leads to the general solution of the homogeneous equation as

[x1 x2 x3 x4 x5]^T = x3 [2 -1 1 0 0]^T + x5 [-1 2 0 -1 1]^T

and that shows that the two vectors on the right side of the equation are a spanning set for the null space.

But they are also independent, as we can observe by looking at the entries in positions 3 and 5 – neither

can be a multiple of the other. Since they are independent and span the null space, they are a basis for

the null space.
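The basis vectors can be checked against the homogeneous system. In this sketch the matrix R holds only the nonzero rows of the rref, reconstructed from the three equations for x1, x2, and x4. It is an assumption, since B itself is not reproduced here and may contain additional zero rows.

```python
# Nonzero rows of the rref, reconstructed from
#   x1 = 2*x3 - x5,  x2 = -x3 + 2*x5,  x4 = -x5.
R = [
    [1, 0, -2, 0,  1],
    [0, 1,  1, 0, -2],
    [0, 0,  0, 1,  1],
]

v1 = [2, -1, 1, 0, 0]    # multiplies the free variable x3
v2 = [-1, 2, 0, -1, 1]   # multiplies the free variable x5

def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def combination(s, t):
    """The vector s*v1 + t*v2."""
    return [s * a + t * b for a, b in zip(v1, v2)]

Rv1 = matvec(R, v1)
Rv2 = matvec(R, v2)
R_combo = matvec(R, combination(3, -4))
```

Both vectors, and any combination of them, are sent to the zero vector. Entries 3 and 5 of the combination return the coefficients s and t, which is why the two vectors are independent.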

In a space of dimension n, any set of more than n vectors is dependent. (That is a theorem from the book.)

The four vectors are independent, so they form a basis for the subspace that they span. But that is then a

four dimensional subspace of V, and therefore must actually equal V. Therefore, the given vectors are a

basis for V and so must be a spanning set.

Either the vectors are independent or they are not. If they are independent, then they are a basis for V

and so dim V = 3. If they are dependent, we can eliminate one or more to leave a spanning set that is

independent. That will still be a basis for V, but now with fewer than 3 elements. In this case the

dimension of V is less than 3. This shows that across all possibilities, dim V ≤ 3.