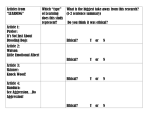

Instrumental/Operant Conditioning

Instrumental Conditioning involves three key elements:

a response
• usually an arbitrary motor response
• relevance or belongingness

an outcome (the reinforcer)
• bigger reward = better conditioning
• contrast effects

a relation between the response and outcome
• contiguity
• contingency

Contiguity

delay of reinforcement
• bridge the delay with a conditioned reinforcer
• marking procedure

studies of delay of reinforcement show that a perfect causal relation between the response and outcome is not sufficient to produce strong instrumental responding
• even with a perfect causal relation, conditioning does not occur if reinforcement is delayed too long
• led to the conclusion that response-reinforcer contiguity, rather than contingency, was the critical factor

Skinner’s Superstition Experiment

A landmark study in the debate about the role of contiguity versus contingency

Method: Food presented to pigeons every 15 s regardless of the behavior of the bird.

Result: Birds showed stereotyped behavior patterns as time for food delivery approached.

Skinner’s operant conditioning explanation:
• Adventitious (accidental) reinforcement of the bird’s behavior
• Stresses the importance of contiguity between R and the reinforcer

Logic of Skinner’s explanation:
• Animal could pick out which response was being reinforced
• Animal was insensitive to contingency
• Extinction was much weaker than acquisition

The Staddon and Simmelhag Superstition Experiment

A landmark study that challenged Skinner’s interpretation

Method: Basically the same procedure as Skinner, except fixed time interval of 12 s, and birds were observed and their behavior recorded on all sessions.
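Both procedures above deliver food on a clock, with no dependence on what the bird does. A minimal Python sketch of such a fixed-time schedule may make that concrete. The 600 s session length, the one-response-per-second clock, and the response names are illustrative assumptions, not details of either study; only the 15 s and 12 s intervals come from the slides.

```python
import random


def fixed_time_session(interval_s, session_s=600,
                       responses=("peck", "turn", "flap"), seed=0):
    """Simulate a fixed-time schedule: food arrives every `interval_s`
    seconds regardless of behavior.

    Returns (food_times, preceding), where preceding[i] is the response
    that happened to be under way when food delivery i arrived.
    """
    rng = random.Random(seed)
    food_times, preceding = [], []
    for t in range(1, session_s + 1):
        # Assumption: the bird emits one arbitrary response per second.
        current = rng.choice(responses)
        if t % interval_s == 0:  # food depends on the clock only
            food_times.append(t)
            preceding.append(current)
    return food_times, preceding


skinner_food, skinner_prev = fixed_time_session(15)  # Skinner: every 15 s
staddon_food, _ = fixed_time_session(12)             # Staddon & Simmelhag: every 12 s
# A bird with a different (hypothetical) repertoire earns identical deliveries.
other_food, _ = fixed_time_session(15, responses=("preen",), seed=1)

print(len(skinner_food), len(staddon_food), skinner_food == other_food)
# prints: 40 50 True
```

In this sketch every food delivery is contiguous with some response (the entries of `skinner_prev`), yet the delivery times are identical whatever the bird does, which is the sense in which contiguity is present without contingency.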
[Figure: Responding at Asymptote. Panels: Fixed Interval and Fixed Time; responses are grouped as Terminal Responses and Interim Responses.]

Response key:
R1 = magazine wall
R2 = pecking key
R3 = pecking floor
R4 = ¼ circle
R5 = flapping wings
R6 = window wall
R7 = pecking wall
R8 = moving along magazine wall
R9 = preening
R10 = beak to ceiling
R11 = head in magazine

Importantly, early in training, terminal responses were not contiguous with food, but interim responses were. According to Skinner’s adventitious reinforcement hypothesis, interim responses should have become the terminal responses, but they did not. Thus superstitious key pecking is not due to adventitious reinforcement.

Classical Conditioning Explanation of Superstition
• CS: Time since food
• US: Food
• CR: Pecking
• UR: Pecking

Animal Behavior Terminology
• Appetitive Behavior – Occurs when reinforcement not available
• Consummatory Behavior – Occurs when reinforcement is about to appear

Contingency

effects of controllability of reinforcers
• a strong contingency between the response and a reinforcer means the response controls the reinforcer
• most of the research has focused on control over aversive stimulation
• contemporary research originated with studies by Seligman and colleagues
• they investigated the effects of uncontrollable shock on subsequent escape-avoidance learning in dogs
• the major finding was that exposure to uncontrollable shock disrupted subsequent learning
• this phenomenon is called the learned-helplessness effect

The learned-helplessness effect

The design for LH experiments is outlined in Table 5.2, page 153

Experiment by Seligman and Maier (1967)
- demonstrated the basic LH effect
- 3 groups of dogs

Phase 1:

Group 1: Escape
- restrained and given unsignaled shock to hindfeet
- could terminate the shock by pressing either of 2 panels on either side of snout

Group 2: Yoked
- placed in same restraint and given same # and pattern of shocks
- could not terminate the shocks by pressing the panels; they were shocked whenever
Escape animals were shocked

Group 3: Control
- just put in restraint

Phase 2:
- all dogs were treated alike
- put in a 2-compartment shuttlebox and taught a normal escape/avoidance reaction
- dogs could avoid shock by responding during a 10-s warning L or escape shock once it came on by jumping to other side of compartment
- if subject did not respond in 60 s, the shock was terminated

Thus, the experiment tested whether prior inescapable shock affected escape/avoidance learning

Results:

[Figure: mean escape latency (axis ticks 20, 40, 60) for the Escape (E), Control (C), and Yoked (Y) groups.]

• The Escape group learned as easily as the Control group
• The Yoked group showed an impairment
• This deficit in learning is the learned-helplessness effect

The yoked group received the same number of shocks as the escape group, so the failure to learn is not simply due to having received shock in phase 1. Rather, the failure to learn was due to the inability to control shock in phase 1: no matter which response they performed, their behavior was unrelated to shock offset in phase 1.

According to Seligman and Maier, the lack of control in phase 1 led to the development of the general expectation that behavior is irrelevant to the shock offset. This expectation of lack of control transferred to the new situation in phase 2, causing retardation of learning.

Explanations of the LH effect

The learned-helplessness hypothesis
• based on the conclusion that animals can perceive the contingency between their behavior and the reinforcer
• so, the original theory emphasized the lack of control over outcomes
• according to this position, when the outcomes are independent of the subject’s behavior, the subject develops a state of learned helplessness which is manifest in 2 ways:
- there is a motivational loss indicated by a decline in performance and heightened level of passivity
- the subject has a generalized expectation that reinforcers will continue to be independent of
its behavior
- this persistent belief is the cause of the future learning deficit

The LH hypothesis has been challenged by studies showing that it is not the lack of control that leads to the LH outcome, but rather the inability to predict the reinforcer

receiving predictable, inescapable shock is less damaging than receiving unsignaled shock
• if inescapable shock is signaled, then see less learning deficit
• if you present a cue that tells the animal the shock is coming, then see less learning deficit
• animal still can’t escape the shock (i.e., still uncontrollable), but they know it’s coming

presentation of stimuli following offset of inescapable shock eliminates the LH deficit
• this was demonstrated in an experiment by Jackson & Minor (1988)

Jackson & Minor (1988)

4 groups of rats

Phase 1:

Escape Group:
- received unsignaled shock that rats could terminate by turning a small wheel

There were 2 Yoked groups

Feedback Group:
- house-light was turned off for a few seconds when shock ended

No-Feedback Group:
- no stimulus was given when shock was turned off

No-Shock Control Group

Phase 2:
- all rats trained in a shuttlebox where they could run to other side to turn off shock

Results:

[Figure: mean latency across trials for the Yoked, Escape, No Shock, and Yoked/feedback groups.]

• Escape and No shock groups performed better than the Yoked Group – this is the typical LH effect
• Yoked/feedback group learned as well as the Escape and No shock groups

The Jackson & Minor (1988) experiment demonstrated that receiving a feedback stimulus following shock offset eliminated the typical learning deficit

So, the learning deficit is not due to lack of control, as suggested by the LH Hypothesis, but rather, it is due to a lack of predictability

Explanations of the LH effect
1. The learned-helplessness hypothesis
2.
Attentional deficits
- inescapable shock may cause animals to pay less attention to their actions
- if an animal fails to pay attention to its behavior, it will have difficulty associating its actions with reinforcers in escape-avoidance conditioning
- if the response is marked by an external stimulus, which helps the animal pay attention to the appropriate response, the LH deficit is reduced
- demonstrated in an experiment by Maier, Jackson & Tomie (1987) – described on pp. 154-155 of your text.

Chapter 6: Schedules of Reinforcement

A schedule of reinforcement is a program, or rule, that determines how and when the occurrence of a response will be followed by a reinforcer

The simplest schedule is a continuous reinforcement schedule, abbreviated CRF
- every response = reinforcer
- rare in everyday life
- more common to have partial, or intermittent, reinforcement

Schedules of Intermittent Reinforcement

There are 4 basic schedules of intermittent reinforcement

Ratio schedules – reward is based on the number of responses

Interval schedules – a response that occurs after a certain period of time has elapsed is reinforced

Each of these classes is further divided into Fixed or Variable schedules

|  | Ratio (# responses) | Interval (time) |
| --- | --- | --- |
| Fixed | Fixed Ratio | Fixed Interval |
| Variable | Variable Ratio | Variable Interval |

Fixed Ratio (FR)

There must be a specified number of responses to obtain each reinforcer. This number is FIXED (i.e., the same number to obtain each reinforcer), thus the reinforcer is predictable.

Effect: Post-reinforcement pause (PRP; longer with higher ratios) followed by a ratio run (NB: ratio strain when very high ratios required)

Example: ‘piecework’ in factories

[Figure labels: PRP, Ratio Run]

Variable Ratio (VR)

There must be a specified number of responses made to obtain each reinforcer. The specified number of responses required varies from one reinforcer to the next; thus the reinforcer is unpredictable.
Effect: generally no PRP; steady very high rate

Example: slot machines

Fixed Interval (FI)

A specified interval of time must pass during which no reinforcer is delivered no matter how many responses are made. Once the interval is over, the next response produces the reinforcer. The time interval is fixed (i.e., the same time interval must pass before each reinforcer), thus the reinforcer is predictable.

Effect: Post-reinforcement pause (PRP) followed by gradually accelerating response rate, producing the characteristic FI scallop

Example: Exams spaced apart at certain intervals – study more closer to MT and Final

Variable Interval (VI)

A specified interval of time must pass during which no reinforcer is delivered no matter how many responses are made. Once the interval is over, the next response produces the reinforcer. The specified length of the time interval varies from one reinforcer to the next, thus the reinforcer is not predictable.

Effect: generally no PRP; stable rate of responding

Example: time between customers in a store
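Since a schedule of reinforcement is described above as a program or rule, the four basic schedules can be sketched as small Python classes. This is an illustrative sketch only: the class names, the `respond(t)` method, the uniform distributions used for the variable schedules, and the choice to start the first interval at t = 0 are all assumptions made for the example, not part of the lecture.

```python
import random


class FixedRatio:
    """FR n: every n-th response is reinforced."""

    def __init__(self, n):
        self.n = n
        self.count = 0

    def respond(self, t):
        self.count += 1
        if self.count == self.n:
            self.count = 0
            return True
        return False


class VariableRatio:
    """VR: the required number of responses changes after each reinforcer."""

    def __init__(self, mean_n, seed=0):
        self.mean_n = mean_n
        self.rng = random.Random(seed)
        self.count = 0
        self.required = self._draw()

    def _draw(self):
        # Assumption: requirement is uniform on 1 .. 2*mean_n - 1.
        return self.rng.randint(1, 2 * self.mean_n - 1)

    def respond(self, t):
        self.count += 1
        if self.count >= self.required:
            self.count = 0
            self.required = self._draw()
            return True
        return False


class FixedInterval:
    """FI: the first response after `interval` seconds is reinforced."""

    def __init__(self, interval):
        self.interval = interval
        self.start = 0.0  # time of the last reinforcer (session starts at 0)

    def respond(self, t):
        if t - self.start >= self.interval:
            self.start = t
            return True
        return False  # responses during the interval earn nothing


class VariableInterval:
    """VI: like FI, but the interval changes after each reinforcer."""

    def __init__(self, mean_interval, seed=0):
        self.mean_interval = mean_interval
        self.rng = random.Random(seed)
        self.start = 0.0
        self.wait = self._draw()

    def _draw(self):
        # Assumption: interval is uniform on 0 .. 2*mean_interval seconds.
        return self.rng.uniform(0, 2 * self.mean_interval)

    def respond(self, t):
        if t - self.start >= self.wait:
            self.start = t
            self.wait = self._draw()
            return True
        return False


def count_reinforcers(schedule, response_times):
    """Feed a sequence of response times to a schedule; count reinforcers."""
    return sum(schedule.respond(t) for t in response_times)


# FR 5: ten responses earn two reinforcers.
print(count_reinforcers(FixedRatio(5), range(1, 11)))  # prints 2

# FI 10 s over 60 s: one response per second vs. ten per second.
slow = count_reinforcers(FixedInterval(10), range(1, 61))
fast = count_reinforcers(FixedInterval(10), [i / 10 for i in range(1, 601)])
print(slow, fast)  # prints 6 6
```

The last two lines illustrate the difference between the schedule classes: on a ratio schedule more responding earns more reinforcers, whereas on the FI schedule a tenfold increase in response rate earns the same six reinforcers in this sketch.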