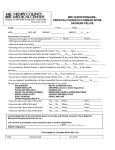

Measuring Congestion Responsiveness of Windows Streaming Media
James Nichols
Thesis Presentation
PEDS - 12/8/03
Advisors: Prof. Mark Claypool, Prof. Bob Kinicki
Reader: Prof. David Finkel

Network Impact of Streaming Media
• Unlike file transfer or Web browsing, Streaming Media has specific bitrate and timing requirements.
• Typically, UDP is the default network transport protocol for delivering Streaming Media.
• UDP does not have any end-to-end congestion control mechanisms.

The Dangers of Unresponsiveness
• Flows in the network which are unresponsive to congestion can cause several undesirable situations:
  • Unfairness when competing with responsive flows for limited resources
  • Unresponsive flows can contribute to congestion collapse
• Some Streaming Media applications use UDP, but rely on the application layer to provide adaptability to available capacity
• Performance of these application layer mechanisms is unknown

Intelligent Streaming
• Application layer mechanism of Windows Streaming Media (WSM) to adapt to network conditions
• Can “thin” streams by sending fewer frames
• If the content producer has encoded multiple bitrates into the stream, Intelligent Streaming (IS) can choose an appropriate one
• Chung et al. suggest that technologies like IS may provide responsiveness to congestion, even TCP-friendliness
• Performance of Intelligent Streaming is unknown

Research Goals
• No measurement studies have been completed where researchers had total control over:
  • The streaming server
  • Content-encoding parameters
  • Network conditions at or close to the server
• We seek to characterize the bitrate response function of Windows Streaming Media in response to congestion in the network.
• Want to precisely quantify the relationship between content encoding rate and performance.
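To make "choosing an appropriate bitrate" from a multiple-bitrate stream concrete, here is a minimal sketch. It assumes a very simple rule: pick the largest encoded bitrate that does not exceed the estimated capacity, and fall back to the smallest encoded bitrate when none fits. The function name `choose_encoded_bitrate` and the rule itself are illustrative assumptions, not the actual Intelligent Streaming algorithm, which the slides do not specify.

```python
from typing import Sequence


def choose_encoded_bitrate(encoded_kbps: Sequence[int], capacity_kbps: float) -> int:
    """Return the encoded bitrate (Kbps) to stream under an assumed selection rule.

    Assumed rule (hypothetical, for illustration only): take the largest
    encoded bitrate that fits within the estimated capacity; if no encoded
    bitrate fits, fall back to the smallest one available.
    """
    if not encoded_kbps:
        raise ValueError("clip must contain at least one encoded bitrate")
    fitting = [rate for rate in encoded_kbps if rate <= capacity_kbps]
    return max(fitting) if fitting else min(encoded_kbps)


if __name__ == "__main__":
    clip = [1128, 764, 548, 340]  # hypothetical multiple-bitrate clip, in Kbps
    print(choose_encoded_bitrate(clip, 725))  # 548: largest rate under 725 Kbps
    print(choose_encoded_bitrate(clip, 250))  # 340: nothing fits, smallest is used
```

In the second call no encoded rate fits the capacity, so the sketch still streams the smallest rate; a real sender in that position would have to thin the stream or accept loss.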
Outline
• Introduction
• Related Work
• Methodology
• Results & Analysis
• Conclusions
• Future Work

Related Work
• Some research has been done in the general area of Streaming Media:
  • Traffic characterization studies [VAM+02, dMSK02] performed through log analysis
  • Empirical studies using custom tools [CCZ03, WCZ01, LCK02]
  • Characterization of streaming content available on the Web [MediaCrawler]
• None had control of the server, client, and network conditions

Need control over the server
• Not having control of the server limits the set of content that can be studied
• For example, [LCK02] measured IP packet fragmentation when streaming WSM clips, but packet size can be tuned server-side
• Other research [CCZ03] had to stream over the public Internet while measuring network performance

Methodology
• Construct testbed
• Create/adapt tools
• Encode content
• Systematic control
• Examine SBR clip
• Range of SBR clips
• MBR clips
• Vary loss and latency

Results and Analysis
Single Bitrate Clip

Experiments
• Single bitrate (SBR) clip in detail
• Range of SBR clips
• Multiple bitrate (MBR) clips
• Additional experiments performed but not discussed here

Single Bitrate Clip Experiment
• Hypothesis: SBR clips are unresponsive to congestion
• Latency: 45 ms
• Induced loss: 0%
• Bottleneck capacity: 725 Kbps
• Start a TCP flow through the link
• 10 seconds later, stream a WSM clip
• Measure achieved bitrates and loss rates for each flow

340 Kbps Clip - Bottleneck Capacity 725 Kbps
• TCP-Friendly?
• < 0.001 packet loss after 15 seconds

548 Kbps Clip - Bottleneck Capacity 725 Kbps
• Not TCP-Friendly!
• ~ 0.003 packet loss for WSM, ~ 0.006 packet loss for TCP, after 15 seconds

1128 Kbps Clip - Bottleneck Capacity 725 Kbps
• Responsive!
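The single bitrate experiment measures achieved bitrate and loss rate per flow. A minimal sketch of how those two numbers could be computed from a packet trace follows. The `(arrival time, size)` record format, the helper names, and the convention 1 Kbps = 1000 bits/s are assumptions for illustration. The slides do not say how TCP-friendliness was judged; the equal-share check below is only one simple proxy, not the criterion used in the thesis.

```python
from typing import Iterable, Tuple

# Hypothetical trace record: (arrival time in seconds, packet size in bytes).
Packet = Tuple[float, int]


def achieved_kbps(packets: Iterable[Packet], start_s: float, end_s: float) -> float:
    """Average bitrate in Kbps of the packets arriving in [start_s, end_s)."""
    if end_s <= start_s:
        raise ValueError("end_s must be greater than start_s")
    total_bytes = sum(size for t, size in packets if start_s <= t < end_s)
    return total_bytes * 8 / 1000.0 / (end_s - start_s)


def loss_rate(sent: int, received: int) -> float:
    """Fraction of sent packets that never arrived."""
    if sent <= 0 or not 0 <= received <= sent:
        raise ValueError("need sent > 0 and 0 <= received <= sent")
    return 1.0 - received / sent


def exceeds_equal_share(flow_kbps: float, capacity_kbps: float, n_flows: int) -> bool:
    """True if a flow takes more than capacity / n_flows (assumed fairness proxy)."""
    if n_flows <= 0:
        raise ValueError("n_flows must be positive")
    return flow_kbps > capacity_kbps / n_flows


if __name__ == "__main__":
    # Synthetic trace: ten 1000-byte packets spread over one second.
    trace = [(i * 0.1, 1000) for i in range(10)]
    print(achieved_kbps(trace, 0.0, 1.0))        # 80.0
    print(exceeds_equal_share(548, 725, 2))      # True: 548 > 362.5
    print(exceeds_equal_share(340, 725, 2))      # False: 340 <= 362.5
```

With two flows on the 725 Kbps bottleneck, the equal share is 362.5 Kbps, so under this proxy a flow sustaining 340 Kbps stays within its share while one sustaining 548 Kbps does not.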
Network Topology

Measuring Buffering Performance
• Parse packet capture for RTSP PLAY message
• Examine MediaTracker output and measure how long it took from the start of streaming to when the buffer is reported to be full
• PLAY + interval = buffering period

Experiments
• SBR clip in detail
• Range of SBR clips
• MBR clips

Comparison of Single Bitrate Clips
• Want to precisely quantify the relationship between content encoding rate and performance
• Repeat the previous experiment, but for a range of single bitrate clips:
  • 28, 43, 58, 109, 148, 282, 340, 548, 764, 1128 Kbps
• Vary network capacity: 250, 725, 1500 Kbps
• Measure performance during and after buffering

SBR Clips - Bottleneck Capacity 725 Kbps - Buffering Period

SBR Clips - Bottleneck Capacity 725 Kbps - Playout Period

Results and Analysis
Multiple Bitrate Clips

Multiple Bitrate Clips
• Hypothesis: Multiple bitrates make WSM more responsive to congestion
• Same experiment as before, but with different encoded content
• Vary network capacity: 250, 725, 1500 Kbps
• Created two sets of 10 multiple bitrate clips
• Experiments with lots of other MBR clips

Multiple Bitrate Content
• First set of clips (adding lower):
  • 1128 Kbps
  • 1128-764 Kbps
  • 1128-764-548 Kbps
  • …
  • 1128-764-548-340-282-148-109-58-43-28 Kbps
• Second set of clips (adding higher):
  • 28 Kbps
  • 28-43 Kbps
  • 28-43-58 Kbps
  • …
  • 28-43-58-109-148-282-340-548-764-1128 Kbps

Adding lower bitrates to clip - 250 Kbps Bottleneck Capacity - Buffering Period

Adding lower bitrates to clip - 250 Kbps Bottleneck Capacity - Playout Period

Adding lower bitrates to clip - 725 Kbps Bottleneck Capacity (panels: Buffering, Playout)

Adding higher bitrates to clip - 725 Kbps Bottleneck Capacity (panels: Buffering, Playout)

Additional experiments
• Not enough time to discuss all the results
• Different bottleneck capacities
• Carefully choose 2 or 3 bitrates to include in MBR clips
• Vary loss rate
• Vary latencies
• Also look at other network level metrics: interarrival times, burst lengths, and IP fragmentation

Conclusions
• Prominent buffering period means WSM cannot be modeled as a simple CBR flow
• WSM single bitrate clips:
  • During buffering WSM responds to capacity only when the encoding rate is less than capacity
    • Otherwise, high loss rates are induced
  • During playout WSM responds to available capacity
    • Thins if necessary
    • If rate is less than capacity, will still be responsive to high loss rates (5%)

Conclusions (continued)
• WSM multiple bitrate clips:
  • During buffering WSM responds to capacity only when content contains a suitable bitrate to choose
    • Chosen bitrate is the largest that capacity allows
    • Otherwise, still tries to fit the smallest available, again resulting in high amounts of loss
  • During playout WSM is responsive to available capacity
    • Either because it chose the proper rate, or because it thins if the proper rate isn’t encoded in the clip
  • However, the chosen bitrate probably isn’t fair to TCP

Future Work
• Run the same experiments with other streaming technologies: RealVideo and QuickTime
• Examine the effects of different content types
• Build NS simulation model of streaming media for use in future research

Questions