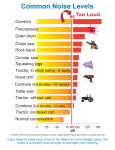

Chapter 11: Hearing and Listening

Prenatal hearing
Babies can hear in utero: the mother’s heartbeat, breathing, speaking, singing, and stomach gurgles. The baby learns to identify the mother’s voice in utero.

Human auditory capacity is hard to measure. This includes numerous voices, as well as everyday sounds, such as walking, laughing, crying, hammering, … Sound enables us to judge the shape of struck objects, the quantity of fluid in a vessel, and loose screws. We can sort a single sound source from the background, e.g. a person’s voice in a crowded stadium.

Range and limits of hearing
Threshold intensity = the softest sound that can be heard (at a given frequency). A plot of threshold intensity versus frequency yields an audibility function.

Reference frequencies
Lowest note on a piano: 27.5 Hz
Middle C: 261.63 Hz
Highest note on a piano: 4,186 Hz
Typical phone: 4,000-8,000 Hz
TV whine: 16,000 Hz

Hearing loss
In the U.S., ~28 million suffer some form of hearing loss. (Cf. ~10 million suffer age-related macular degeneration.) Total deafness = cannot hear voices at less than 82 dB. Auditory impairment = substandard audibility function.

Two types of hearing loss
Conduction: due to problems in the outer and middle ear.
Sensory/neural: due to problems in the inner ear and brain.

Conduction losses
Excessive ear wax. Middle ear infections: persistent infections in infants can lead to permanent sensory deficits, since the brain is still developing. Otosclerosis: immobilization of the stapes by buildup of a spongy substance. It is a progressive condition that tends to occur in young adults, is more frequent in females than males, and is treated surgically.

Sensory/neural losses
Presbycusis: age-related loss of high-frequency sensitivity. Perhaps unavoidable. At 30 years: lose sensitivity above 15,000 Hz. At 50 years: lose sensitivity above 12,000 Hz. For all ages, men tend to have greater hearing loss than women. Perhaps due to changes in the basilar membrane. Perhaps due to changes in vasculature. Perhaps due to cumulative exposure to loud noises.
Supported by reduced hearing loss in African tribal populations.

Noise exposure and hearing loss
Single explosive events can lead to hearing loss, e.g. guns. So can sustained loud noise, as in music or factories. May be as high as 60% among teens in the U.S. At all frequencies, those who regularly attend rock concerts have higher auditory thresholds. Short exposures to loud noises (> ~60 dB) lead to temporary threshold shifts. Such short exposures can occur when using household appliances. Women tend to have lower threshold shifts than men when low frequencies are the inducers. Men tend to have lower threshold shifts than women when high frequencies are the inducers.

Drugs and hearing loss
Nicotine users have higher high-frequency thresholds than non-users. Aspirin also produces temporary hearing loss. This may be due to changes in circulation, which deprive the cochlea. The dosage for this is about what is recommended for handling rheumatoid arthritis. Aspirin also makes one more susceptible to the effects of noise.

Compensating for hearing loss
Hearing aids: amplify incoming sounds by up to 60 dB. They tend to favor the frequencies of speech, i.e. 250-4,000 Hz.
Cochlear implants: help if only the cochlea is damaged; loss of the auditory nerve or auditory cortex cannot be helped. Sounds are transformed into electrical stimulation of the auditory nerve. They do not produce normal hearing, but only responsiveness to sound. ~100,000 people worldwide have cochlear implants. Half are children; half adults. ~30,000 in U.S. (?) May work best when implanted early.
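To give the decibel figures above some scale, here is a minimal sketch that converts a level difference in dB into a ratio. It assumes the standard definition (a difference in dB is 10·log10 of the intensity ratio, or equivalently 20·log10 of the sound-pressure ratio), which the slides use but do not spell out. The function names are illustrative only.

```python
import math


def db_to_intensity_ratio(db: float) -> float:
    """Intensity (power) ratio corresponding to a level difference in dB."""
    return 10 ** (db / 10)


def db_to_pressure_ratio(db: float) -> float:
    """Sound-pressure (amplitude) ratio corresponding to a level difference in dB."""
    return 10 ** (db / 20)


def intensity_ratio_to_db(ratio: float) -> float:
    """Level difference in dB corresponding to an intensity ratio."""
    return 10 * math.log10(ratio)


if __name__ == "__main__":
    gain_db = 60  # the "up to 60 dB" hearing-aid amplification quoted above
    print(f"{gain_db} dB gain = intensity x {db_to_intensity_ratio(gain_db):,.0f}")
    print(f"{gain_db} dB gain = pressure  x {db_to_pressure_ratio(gain_db):,.0f}")
```

On this definition, a 60 dB gain corresponds to a million-fold increase in intensity, or a thousand-fold increase in sound pressure.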
Cochlear implants
A prime candidate for cochlear implants is described as:
having severe to profound sensorineural hearing impairment in both ears
having a functioning auditory nerve
having lived a short amount of time without hearing
having good speech, language, and communication skills, or in the case of infants and young children, having a family willing to work toward speech and language skills with therapy
not being helped by other kinds of hearing aids
having no medical reason to avoid surgery
living in or desiring to live in the "hearing world"
having realistic expectations about results
having the support of family and friends.

Hearing in non-human animals

Noise masking and critical bands
Auditory masking = not hearing in the presence of ambient noise, e.g. shower or traffic. Measure: dB masked - dB unmasked. Broadband noise versus bandpass noise. After a certain point, further increases in noise bandwidth do no more masking. The noise bandwidth at which this occurs defines the critical band. The inflection point is the frequency limit (tuning width) of the fiber.

Variations in masking paradigm
Varying width of noise of fixed intensity
Varying position of noise of fixed intensity
Removing center frequencies (notched spectrum)
Varying intensity of noise of fixed bandwidth and position

Noise masking
Bottom line: noise only masks when it is near the test frequency.

Loudness perception and discrimination
Loudness perception can be measured by matching a variable tone to a target tone. Loudness perception can also be measured by assigning a number to the perceived loudness.

Loudness perception
Loudness depends on frequency: equal loudness contours. Lower frequencies tend to sound weaker, which is why audio manufacturers add mechanisms to increase their strength. How does perceived loudness vary with sound intensity? Perceived loudness is a logarithmic function of intensity.

Neural bases of loudness perception
Partially auditory neuron firing rates.
Partially different neurons respond to different sound intensities. A given neuron can vary its firing rate over about 40 dB, but normal humans can hear loudness variations over 130 dB. 20-60 dB, 40-80 dB, and 60-100 dB. Partially due to more intense sounds activating more neurons outside their tuning frequencies.

Loudness discrimination
Discrimination threshold is the least perceptible change. For loudness, the discrimination threshold is ~1-2 dB, though it depends on details of the experimental measuring procedure, etc.
“Traditional” view: discrimination of pure tones depends on changes in the frequency of firing of the neurons tuned to that frequency.
Profile analysis: discrimination of tones depends on comparing changes in a few frequencies against a backdrop of other frequencies.

David Green (1982)
Experiment 1: Subjects compared pairs of stimuli. Stimuli = combinations of 21 different tones, 300-3,000 Hz in frequency. In one of the pair, all 21 tones had the same intensity. In the other of the pair, one of the 21 tones increased in intensity.
Experiment 1 results: Subjects were almost as good at identifying the changed tone against the backdrop of 20 other tones as they were at identifying the changed tone in isolation.
Experiment 2: Subjects compared pairs of stimuli. Stimuli = combinations of different tones, 300-3,000 Hz in frequency. In one of the pair, all tones had the same intensity. In the other of the pair, one of the tones increased in intensity. Green varied the number of tones in each of the stimuli.
Experiment 2 results: Subjects could make finer loudness discriminations with more tones, i.e., the more complex background helped make differences more detectable.

Pitch ≠ frequency
Pitch is a psychological property; frequency is a physical property of sound waves. Loudness influences pitch: at 300 Hz, louder is lower; at 3,000 Hz, louder is higher.
Background tones influence pitch: lower background tones make a tone’s pitch higher; higher background tones make a tone’s pitch lower.

The Missing Fundamental
The lowest frequency of a tone is its fundamental frequency. (Blake & Sekuler’s text is less than maximally clear on this; cf. the glossary.) Theoretically, a fundamental frequency gives rise to integer multiples of this frequency. These multiples are the “harmonics.” Synthesize the pattern of harmonics without the fundamental, and the tone still sounds like the original.

Harmonics produce timbre. Pure tones and musical notes produce tonal pitch. Complex aperiodic notes produce atonal pitch.

Neural basis of pitch perception
Place theory: perhaps pitch is based on the activity of neurons corresponding to the fundamental frequency. Supporting evidence: damage to a region of the basilar membrane causes losses of specific frequency sounds; cochlear implants can replace specific frequencies.
Evidence contrary to place theory: frequencies below 1,000 Hz do not register in any specific place on the basilar membrane, yet such tones are easily identified. Time-delayed tones.
Temporal theory: pitches correspond to firing rates of neurons. This is observed in some neurons.
Together, place theory and temporal theory explain pitch perception.

Sound recognition
How do we separate the sound produced by a single object from background noise, when all the sound waves are present at once? In other words, how do we form auditory images? Cues:
Common spectral content
Common time course
Spectral harmonics
Familiarity
Localization

Grouping by common spectral content
Bregman (1990) experiment 1: Played a tune, “Mary had a little lamb.” Between the notes, he added noise of similar frequencies. This blocked hearing of the tune.
Bregman (1990) experiment 2: Played a tune, “Mary had a little lamb.” Between the notes, he added noise of higher frequencies.
This did not block hearing of the tune. Recall the Gestalt principle of grouping by similarity.

Spectral content
Cannot be the whole story, since two women’s voices have similar spectral content but are still easily discerned.

Common time course
Form an image by grouping together the frequencies that vary together in time. This can be observed by using sound recordings in which some frequencies are artificially delayed relative to others. This, too, cannot be the whole story, since voices singing in unison can clearly be discriminated.

Spectral harmonics
Frequencies that are integer multiples of a common fundamental tend to be heard together, e.g. the frequencies 440, 880, and 1,320 Hz in a sound tend to go together.

Familiarity
One can overcome background noise with familiarity or priming. Practice can do this in Bregman’s (1990) paradigm. It is easier for you to separate voices speaking English than, say, Japanese. Variations in musical chords (tones) are more readily discerned than variations in nonmusical combinations of tones.

Sound localization
The direction of a sound is not an intrinsic feature of the acoustic signal. In this regard, sound localization differs from visual localization.

IID and ITD
IID = interaural intensity difference; ITD = interaural time difference.
Stevens & Newman (1934): a person was seated on a roof, with a speaker at the end of an arm at constant elevation relative to the listener.
< 1,000 Hz: localization was good
2,000-4,000 Hz: localization was less good
> 4,000 Hz: localization improved
Duplex theory of localization: low frequencies depend on ITD; high frequencies depend on IID.
Congenitally blind individuals are better at sound localization. Brain plasticity appears to support this. This plasticity is seen in both young and somewhat later cases of deafness.

Cocktail party effect
Play a tone to one ear. Adding noise to that ear blocks the tone. Adding noise to the other ear unblocks the tone. This is binaural unmasking. (Note the confusing definition in B&S.)
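Returning to the spectral-harmonics cue and the missing fundamental: the arithmetic behind "integer multiples of a fundamental" can be sketched in a few lines. This is only an illustration of the numbers, not a model of how the auditory system computes pitch. It assumes the component frequencies are exact whole numbers of Hz, and the function names are made up for the example.

```python
from functools import reduce
from math import gcd


def implied_fundamental(components_hz):
    """Largest frequency of which every component is an integer multiple.

    Assumes integer frequencies in Hz; for real, slightly mistuned sounds
    this exact-arithmetic shortcut would not be appropriate.
    """
    return reduce(gcd, components_hz)


def harmonic_numbers(components_hz):
    """Which multiple of the implied fundamental each component is."""
    f0 = implied_fundamental(components_hz)
    return [f // f0 for f in components_hz]


if __name__ == "__main__":
    full_tone = [440, 880, 1320]     # the example frequencies from the text
    without_fundamental = [880, 1320]  # same tone with the 440 Hz component removed
    print(implied_fundamental(full_tone), harmonic_numbers(full_tone))
    print(implied_fundamental(without_fundamental), harmonic_numbers(without_fundamental))
```

Both component sets imply the same 440 Hz fundamental, which is consistent with the observation that the tone sounds like the original even when the fundamental itself is absent.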
Cocktail party effect
Binaural masking-level difference (BMLD) = difference in audibility between monaural and binaural cases. Unmasking is apparently caused by localization of the tone in one place and the noise in another.
Hearing one’s voice attracts attention. This suggests that you are normally processing the background information in the “background”. There is a vast literature on dichotic listening. This attempts to study, among other things, how attention influences what auditory information is processed.

Mislocalization
Happens most often with sounds directly in front or directly in back. More common with sounds of a narrow band of frequencies; reduced with broadband noise. Reduced with reflecting surfaces.

Mislocalization compensation
1. When IID and ITD cues fail, turn your head.
2. The pinnae of the ears help. Verify this with headphones or earmuffs. Especially useful for localizing elevation. Comparisons of localization with recordings made in one’s own ears versus those made in others’ ears show a difference. Comparisons of localizations with materials in ear folds.

Sound through headphones
Music is often localized in your head, not in external space. The reason is that the sounds are recorded from several microphones near the musicians. To have an auditory image, one has to use just two microphones to record. One must also not move one’s head. Scientists are trying to develop a headphone system that can compensate for head movements.

Mislocalization with eyes and ears
Visual dominance over auditory information. Eyes mislead about sound location: in movie theaters, ventriloquism, the pseudophone.

Reasons for visual dominance
Echoes are misdirections, but light does not so often reflect. Light travels faster than sound, so it provides a more nearly instantaneous measure of location.

Visual acuity and localization
If you have a small region of high acuity, you had best be able to use audition to guide your direction of it.
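One way to see why front/back confusions arise, and why turning the head helps, is to compute the ITD under a deliberately simplified model. The sketch below assumes a straight-line path difference between the two ears (ITD = d·sin(azimuth)/c), ignores diffraction around the head, and uses a hypothetical round figure for ear separation. None of these values come from the slides.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # approximate speed of sound in air at about 20 °C
EAR_SEPARATION_M = 0.18     # hypothetical round figure, an assumption for illustration


def itd_seconds(azimuth_deg: float, ear_separation_m: float = EAR_SEPARATION_M) -> float:
    """Interaural time difference under a straight-line path-difference model.

    Azimuth convention (an assumption of this sketch): 0 = straight ahead,
    90 = directly to one side, 180 = directly behind.
    """
    return ear_separation_m * math.sin(math.radians(azimuth_deg)) / SPEED_OF_SOUND_M_S


if __name__ == "__main__":
    for azimuth in (0, 30, 90, 150, 180):
        print(f"{azimuth:3d} deg -> ITD {itd_seconds(azimuth) * 1e6:7.1f} microseconds")
```

In this model a source at 30° (front) and one at 150° (back) produce the same ITD, and sources straight ahead and straight behind both produce an ITD of essentially zero. The cue alone cannot tell front from back, whereas a head turn changes the two cases differently.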
Sound localization: Distance
People are good indoors, since there are echoes. People are poor outdoors, tending to underestimate distances. They use intensity to measure. Moving in the direction of the sound is helpful, e.g. auditory looming.

Auditory looming
As an approaching object makes a sound, the object looms forward. Humans overestimate the change in sound between approaching and receding. (Frequency, loudness?) Approaching sounds seem closer than they really are. Monkeys are susceptible to auditory looming.

Auditory and visual interactions
Two black spots moving toward each other and then back is ambiguous between:
Colliding
One passing behind the other
Properly timed sounds render the image unambiguous.
Replicated with fMRI. With bouncing (hence multimodal processing), more areas of the brain are active. With bouncing (hence multimodal processing), each area of the brain was less active than in a purely visual or purely auditory task.

Guttman, Gilroy, & Blake (2004): Subjects compared two “visual rhythms.” Visual rhythms consist of gratings of light and dark bars that moved in rapid and unpredictable ways. Subjects were asked to judge whether two successive visual rhythms were the same or different.
Version 1: Musical rhythm matched movements of gratings.
Version 2: Musical rhythm did not match movements of gratings.
Results: People’s judgments were heavily influenced by sound. When the auditory rhythm matched the visual rhythm, subjects were nearly perfect. When the auditory rhythm did not match the visual rhythm, subjects performed somewhat better than chance.

Shams, Kamitani, & Shimojo (2000): A single flash presented alone is seen as a single flash.
A single flash presented in conjunction with an auditory click is seen as a double flash.