* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Paired t-test, non

Survey

Document related concepts

Transcript

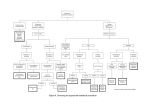

The paired t-test, non-parametric tests, and ANOVA

July 13, 2004

Review: the Experiment (note: exact numbers have been altered)

Grade 3 students at Oak School were given an IQ test at the beginning of the academic year (n=90).
Classroom teachers were given a list of names of students in their classes who had supposedly scored in the top 20 percent; these students were identified as “academic bloomers” (n=18).
BUT: the children on the teachers’ lists had actually been randomly assigned to the list.
At the end of the year, the same IQ test was readministered.

The results

Children who had been randomly assigned to the “top-20 percent” list had a mean IQ increase of 12.2 points (sd=2.0), whereas children in the control group had an increase of only 8.2 points (sd=2.5).

Confidence interval (more information!!)

95% CI for the difference: 4.0 ± 1.99(.64) = (2.7 to 5.3)
The t-curve with 88 df has slightly wider cutoffs for 95% area (t=1.99) than a normal curve (Z=1.96).

The Paired T-test

Paired data means you’ve measured the same person at different time points or measured pairs of people who are related (husbands and wives, siblings, controls pair-matched to cases, etc.).
For example, to evaluate whether an observed change in mean (before vs. after) represents a true improvement (or decrease):
Null hypothesis: difference (after-before) = 0

The differences are treated like a single random variable:

| Xi | Yi | Di = Xi - Yi |
|----|----|--------------|
| X1 | Y1 | D1 |
| X2 | Y2 | D2 |
| X3 | Y3 | D3 |
| X4 | Y4 | D4 |
| …  | …  | …  |
| Xn | Yn | Dn |

$$\bar{D}_n = \frac{\sum_{i=1}^{n} D_i}{n}$$

$$S_D^2 = \frac{\sum_{i=1}^{n} (D_i - \bar{D}_n)^2}{n-1}$$

$$T = \frac{\bar{D}_n - 0}{S_D/\sqrt{n}}$$

T is compared against a t-distribution with n-1 degrees of freedom.

Example Data

| baseline | Test2 | improvement |
|----------|-------|-------------|
| 10 | 9  | -1 |
| 10 | 12 | +2 |
| 9  | 13 | +4 |
| 8  | 8  | 0  |
| 12 | 11 | -1 |
| 11 | 12 | +1 |
| 11 | 13 | +2 |
| 7  | 11 | +4 |
| 6  | 8  | +2 |
| 9  | 9  | 0  |
| 9  | 8  | -1 |
| 10 | 9  | -1 |
| 9  | 9  | 0  |

Is there a significant increase in scores in this group?
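One way to put this question to SAS directly is to run a one-sample t-test on the improvements, which is what the paired t-test amounts to. The following is a minimal sketch, assuming the thirteen baseline/Test2 pairs exactly as listed in the example data; the dataset name `scores` and the variable names are labels chosen here for illustration, not names from the slides.

```sas
/* Example data from the slide: 13 baseline/Test2 score pairs.   */
/* Dataset and variable names are assumptions for illustration.  */
data scores;
  input baseline test2;
  improvement = test2 - baseline;   /* after minus before */
  datalines;
10 9
10 12
9 13
8 8
12 11
11 12
11 13
7 11
6 8
9 9
9 8
10 9
9 9
;
run;

/* One-sample t-test on the differences: H0 is mean improvement = 0 */
proc means data=scores n mean var std t prt;
  var improvement;
run;
```

`PRT` is the two-sided p-value; because the mean improvement is positive, halving it gives the one-sided p-value. PROC MEANS works from the unrounded mean of the differences (11/13 ≈ 0.85), so its t statistic will come out somewhat smaller than a hand calculation that rounds the mean to +1.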
Average of differences = +1 (rounded from 11/13 ≈ 0.85)
Sample variance = 3.3; sample SD = 1.82

$$T_{12} = \frac{1}{1.82/3.6} = 1.98$$

    data _null_;
      pval = 1 - probt(1.98, 12);
      put pval;
    run;

0.0355517436

Significant for a one-sided test; borderline for a two-sided test.

Example 2: Did the control group in the Oak School experiment improve at all during the year?

$$t_{71} = \frac{8.2}{\sqrt{2.5^2/72}} = \frac{8.2}{.29} \approx 28$$

p-value <.0001

Confidence interval for annual change in IQ test score

95% CI for the increase: 8.2 ± 2.0(.29) = (7.6 to 8.8)
The t-curve with 71 df has slightly wider cutoffs for 95% area (t=2.0) than a normal curve (Z=1.96).

Summary: parametric tests

| | One sample (or paired sample) | Two samples |
|---|---|---|
| True standard deviation is known | One-sample Z-test | Two-sample Z-test |
| Standard deviation is estimated by the sample | One-sample t-test | Two-sample t-test: equal variances are pooled; unequal variances are unpooled |

Non-parametric tests

t-tests require your outcome variable to be normally distributed (or close enough).
Non-parametric tests are based on RANKS instead of means and standard deviations (=“population parameters”).

Example: non-parametric tests

10 dieters following the Atkins diet vs. 10 dieters following Jenny Craig
Hypothetical RESULTS:
Atkins group loses an average of 34.5 lbs.
J. Craig group loses an average of 18.5 lbs.
Conclusion: Atkins is better?

BUT, take a closer look at the individual data…
Atkins, change in weight (lbs): +4, +3, 0, -3, -4, -5, -11, -14, -15, -300
J. Craig, change in weight (lbs): -8, -10, -12, -16, -18, -20, -21, -24, -26, -30

Enter data in SAS…

    data nonparametric;
      input loss diet $;
      datalines;
    +4 atkins
    +3 atkins
    0 atkins
    -3 atkins
    -4 atkins
    -5 atkins
    -11 atkins
    -14 atkins
    -15 atkins
    -300 atkins
    -8 jenny
    -10 jenny
    -12 jenny
    -16 jenny
    -18 jenny
    -20 jenny
    -21 jenny
    -24 jenny
    -26 jenny
    -30 jenny
    ;
    run;

[Figure: histogram of weight change (lbs), Jenny Craig group; x-axis: Weight Change, y-axis: Percent]

[Figure: histogram of weight change (lbs), Atkins group; x-axis: Weight Change, y-axis: Percent]

t-test doesn’t work…

Comparing the mean weight loss of the two groups is not appropriate here.
The distributions do not appear to be normally distributed.
Moreover, there is an extreme outlier (this outlier influences the mean a great deal).

Statistical tests to compare ranks:

The Wilcoxon rank-sum test (equivalent to the Mann-Whitney U test) is the analogue of the two-sample t-test.
The Wilcoxon signed-rank test is the analogue of the one-sample t-test, usually used for paired data.

Wilcoxon rank-sum test

RANK the values, 1 being the least weight loss and 20 being the most weight loss.

Atkins
Change: +4, +3, 0, -3, -4, -5, -11, -14, -15, -300
Rank: 1, 2, 3, 4, 5, 6, 9, 11, 12, 20

J. Craig
Change: -8, -10, -12, -16, -18, -20, -21, -24, -26, -30
Rank: 7, 8, 10, 13, 14, 15, 16, 17, 18, 19

Wilcoxon “rank-sum” test

Sum of Atkins ranks: 1 + 2 + 3 + 4 + 5 + 6 + 9 + 11 + 12 + 20 = 73
Sum of Jenny Craig’s ranks: 7 + 8 + 10 + 13 + 14 + 15 + 16 + 17 + 18 + 19 = 137
Jenny Craig clearly ranked higher!
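To see how far apart these rank sums are relative to chance, one common shortcut is the normal approximation to the rank-sum statistic. The sketch below applies it, with a 0.5 continuity correction, to the Atkins rank sum of 73. The approximation, the correction, and the variable names are assumptions made here for illustration; the slides do not say how the computer arrives at its p-value.

```sas
/* Normal approximation to the Wilcoxon rank-sum statistic (assumed method) */
data _null_;
  n1 = 10;                            /* Atkins group size                    */
  n2 = 10;                            /* Jenny Craig group size               */
  w  = 73;                            /* sum of Atkins ranks from the slide   */
  expected = n1*(n1 + n2 + 1)/2;      /* mean of W under H0: 105              */
  sd = sqrt(n1*n2*(n1 + n2 + 1)/12);  /* SD of W under H0, no ties            */
  z  = (w + 0.5 - expected)/sd;       /* continuity correction toward the mean */
  pval = 2*probnorm(z);               /* two-sided p-value; z is negative here */
  put expected= sd= z= pval=;
run;
```

Under the null hypothesis the expected rank sum for a group of 10 out of 20 is 105, so the Atkins sum of 73 sits about 2.4 standard deviations below expectation, which corresponds to a two-sided p-value of roughly .017.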
P-value* (from computer) = .017; from the t-test, p-value = .60

*Tests in SAS…

    /* to get Wilcoxon rank-sum test */
    proc npar1way wilcoxon data=nonparametric;
      class diet;
      var loss;
    run;

    /* to get t-test */
    proc ttest data=nonparametric;
      class diet;
      var loss;
    run;

Wilcoxon “signed-rank” test

H0: median weight loss in Atkins group = 0
Ha: median weight loss in Atkins group not 0

Atkins: +4, +3, 0, -3, -4, -5, -11, -14, -15, -300

Rank absolute values of differences from smallest to largest (ignore zeroes):
Ordered absolute values: 3, 3, 4, 4, 5, 11, 14, 15, 300 (the 0 is dropped)
Ranks: 1.5, 1.5, 3.5, 3.5, 5, 6, 7, 8, 9 (tied values share the average rank)
Sum of negative ranks: 1.5 + 3.5 + 5 + 6 + 7 + 8 + 9 = 40
Sum of positive ranks: 1.5 + 3.5 = 5

P-value* (from computer) = .043; from paired t-test = .27

*Tests in SAS…

    /* to get one-sample tests (both Student's t and signed-rank) */
    proc univariate data=nonparametric;
      var loss;
      where diet="atkins";
    run;

What if data were paired?

e.g., one-to-one matching; find pairs of study participants who have the same age, gender, socioeconomic status, degree of overweight, etc.

Atkins: +4, +3, 0, -3, -4, -5, -11, -14, -15, -300
J. Craig: -8, -10, -12, -16, -18, -20, -21, -24, -26, -30

Enter data differently in SAS…

10 pairs, rather than 20 individual observations

    data paired;
      input lossa lossj;
      diff = lossa - lossj;
      datalines;
    +4 -8
    +3 -10
    0 -12
    -3 -16
    -4 -18
    -5 -20
    -11 -21
    -14 -24
    -15 -26
    -300 -30
    ;
    run;

*Tests in SAS…

    /* to get all paired tests */
    proc univariate data=paired;
      var diff;
    run;

    /* to get just the paired t-test */
    proc ttest data=paired;
      var diff;
    run;

    /* to get the paired t-test, alternatively */
    proc ttest data=paired;
      paired lossa*lossj;
    run;

ANOVA for comparing means between more than 2 groups

ANOVA (ANalysis Of VAriance)

Idea: For two or more groups, test difference between means, for quantitative normally distributed variables.
Just an extension of the t-test (an ANOVA with only two groups is mathematically equivalent to a t-test).
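The two-group equivalence can be checked on the diet data: a pooled (equal-variance) two-sample t-test and a one-way ANOVA on the same two groups should report the same p-value, with the ANOVA F equal to the square of the pooled t. The following is a minimal sketch, assuming the 20 weight changes listed earlier; the dataset name `diets` is a label chosen here, and PROC GLM is one of several procedures that could fit the ANOVA.

```sas
/* The 20 weight changes from the diet example, several per line (@@). */
/* Dataset name is an assumption for illustration.                     */
data diets;
  input loss diet $ @@;
  datalines;
4 atkins 3 atkins 0 atkins -3 atkins -4 atkins
-5 atkins -11 atkins -14 atkins -15 atkins -300 atkins
-8 jenny -10 jenny -12 jenny -16 jenny -18 jenny
-20 jenny -21 jenny -24 jenny -26 jenny -30 jenny
;
run;

/* Two-sample t-test: read the Pooled row of the output */
proc ttest data=diets;
  class diet;
  var loss;
run;

/* One-way ANOVA with two groups: F for diet should equal pooled t squared */
proc glm data=diets;
  class diet;
  model loss = diet;
run;
quit;
```

The comparison holds only for the pooled row of the t-test output; the unequal-variance (Satterthwaite) row uses different degrees of freedom and need not match the ANOVA p-value exactly.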
Like the t-test, ANOVA is a “parametric” test: it assumes that the outcome variable is roughly normally distributed.

The “F-test”

Is the difference in the means of the groups more than background noise (=variability within groups)?

$$F = \frac{\text{Variability between groups}}{\text{Variability within groups}}$$

Spine bone density vs. menstrual regularity

[Figure: spine BMD (y-axis, 0.7 to 1.2) plotted by group (amenorrheic, oligomenorrheic, eumenorrheic), annotated to show the within-group variability of each group and the between-group variation]

Group means and standard deviations

Amenorrheic group (n=11): mean spine BMD = .92 g/cm², standard deviation = .10 g/cm²
Oligomenorrheic group (n=11): mean spine BMD = .94 g/cm², standard deviation = .08 g/cm²
Eumenorrheic group (n=11): mean spine BMD = 1.06 g/cm², standard deviation = .11 g/cm²

The F-Test

Between-group variation: the size of the groups (n = 11) times the variance of the group means, built from the difference of each group’s mean from the overall mean (.97).

$$s^2_{between} = n\,s^2_{\bar{x}} = 11 \times \left(\frac{(.92-.97)^2 + (.94-.97)^2 + (1.06-.97)^2}{3-1}\right) = .063$$

The average amount of variation within groups: the mean of each group’s variance.

$$s^2_{within} = \text{avg } s^2 = \frac{1}{3}\left(.10^2 + .08^2 + .11^2\right) = .0095$$

$$F_{2,30} = \frac{s^2_{between}}{s^2_{within}} = \frac{.063}{.0095} = 6.6$$

A large F value indicates that the between-group variation exceeds the within-group variation (=the background noise).

The F-distribution

The F-distribution is a continuous probability distribution that depends on two parameters n and m (numerator and denominator degrees of freedom, respectively).

A ratio of sample variances follows an F-distribution:

$$\frac{\sigma^2_{between}}{\sigma^2_{within}} \sim F_{n,m}$$

The F-test tests the hypothesis that two sample variances are equal.
F will be close to 1 if sample variances are equal.

$$H_0: \sigma^2_{between} = \sigma^2_{within}$$

$$H_a: \sigma^2_{between} \neq \sigma^2_{within}$$

ANOVA Table

| Source of variation | d.f. | Sum of squares | Mean Sum of Squares | F-statistic | p-value |
|---|---|---|---|---|---|
| Between (k groups) | k-1 | SSB (sum of squared deviations of group means from grand mean) | SSB/(k-1) | $\frac{SSB/(k-1)}{SSW/(nk-k)}$ | Go to $F_{k-1,\,nk-k}$ chart |
| Within (n individuals per group) | nk-k | SSW (sum of squared deviations of observations from their group mean) | s² = SSW/(nk-k) | | |
| Total variation | nk-1 | TSS (sum of squared deviations of observations from grand mean) | | | |

TSS = SSB + SSW

ANOVA = t-test

| Source of variation | d.f. | Sum of squares | Mean Sum of Squares | F-statistic | p-value |
|---|---|---|---|---|---|
| Between (2 groups) | 1 | SSB = (n/2) × (squared difference in means) | (n/2) × (squared difference in means) | $\frac{\frac{n}{2}(\bar{X}-\bar{Y})^2}{s_p^2} = \left(\frac{\bar{X}-\bar{Y}}{s_p\sqrt{2/n}}\right)^2 = (t_{2n-2})^2$ | Go to $F_{1,\,2n-2}$ chart; notice values are just $(t_{2n-2})^2$ |
| Within | 2n-2 | SSW (equivalent to numerator of pooled variance) | Pooled variance $s_p^2$ | | |
| Total variation | 2n-1 | TSS | | | |

ANOVA summary

A statistically significant ANOVA (F-test) only tells you that at least two of the groups differ, but not which ones differ.
Determining which groups differ (when it’s unclear) requires more sophisticated analyses to correct for the problem of multiple comparisons…

Question: Why not just do 3 pairwise t-tests?
Answer: because, at an error rate of 5% for each test, you have an overall chance of up to 1 - (.95)³ = 14% of making a type-I error (if all 3 comparisons were independent).
If you wanted to compare 6 groups, you’d have to do 6C2 = 15 pairwise t-tests, which would give you a high chance of finding something significant just by chance (if all tests were independent with a type-I error rate of 5% each); probability of at least one type-I error = 1 - (.95)¹⁵ = 54%.

Multiple comparisons

With 18 independent comparisons, we have a 60% chance of at least 1 false positive.
With 18 independent comparisons, we expect about 1 false positive.
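The error rates quoted above all come from the same formula, 1 - (1 - α)^k for k independent tests at level α, and the expected number of false positives is k × α. The following is a minimal sketch of that arithmetic; the variable names are chosen here for illustration, and the independence of the tests is the same assumption the slides make.

```sas
/* Familywise type-I error rate for k independent tests at alpha = .05 */
data _null_;
  alpha = 0.05;
  do k = 3, 15, 18;
    fwer = 1 - (1 - alpha)**k;   /* P(at least one type-I error)          */
    expected_fp = k*alpha;       /* expected number of false positives    */
    put k= fwer= expected_fp=;
  end;
run;
```

For k = 3, 15, and 18 this gives about 14%, 54%, and 60%, and for k = 18 the expected count is 18 × .05 = 0.9, which is the "about 1 false positive" quoted above.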
Correction for multiple comparisons

How to correct for multiple comparisons post-hoc…
Bonferroni’s correction (adjusts p by most conservative amount, assuming all tests independent)
Holm/Hochberg (gives p-cutoff beyond which not significant)
Tukey’s (adjusts p)
Scheffe’s (adjusts p)

Non-parametric ANOVA

Kruskal-Wallis one-way ANOVA
Extension of the Wilcoxon rank-sum test for 2 groups; based on ranks
Proc NPAR1WAY in SAS

Reading for this week

Chapters 4-5, 12-13 (last week)
Chapters 6-8, 10, 14 (this week)