Download The Critical Thread:

Bra–ket notation wikipedia , lookup

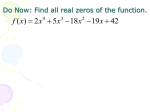

System of polynomial equations wikipedia , lookup

Birkhoff's representation theorem wikipedia , lookup

Linear algebra wikipedia , lookup

Factorization wikipedia , lookup

Group (mathematics) wikipedia , lookup

Invariant convex cone wikipedia , lookup

Basis (linear algebra) wikipedia , lookup

Polynomial ring wikipedia , lookup

Eisenstein's criterion wikipedia , lookup

Oscillator representation wikipedia , lookup

Homomorphism wikipedia , lookup

Field (mathematics) wikipedia , lookup

Representation theory wikipedia , lookup

Modular representation theory wikipedia , lookup

Cayley–Hamilton theorem wikipedia , lookup

Factorization of polynomials over finite fields wikipedia , lookup