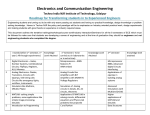

IEEE TRANSACTIONS ON CIRCUITS AND SYSTEMS—II: EXPRESS BRIEFS, VOL. 59, NO. 4, APRIL 2012 193

Universal Principles for Ultra Low Power and Energy Efficient Design

Rahul Sarpeshkar, Senior Member, IEEE

Abstract—Information is represented by the states of physical devices. It costs energy to transform or maintain the states of these physical devices. Thus, energy and information are deeply linked. This deep link allows the articulation of ten information-based principles for ultra low power design that apply to biology or electronics, to analog or digital systems, and to electrical or nonelectrical systems, at small or large scales. In this tutorial brief, we review these key principles along with examples of how they have been applied in practical electronic systems.

Index Terms—Design principles, energy efficient, information, low power.

Fig. 1. “The low-power hand.”

I. INTRODUCTION

Information is always represented by the states of variables in a physical system, whether that system is a sensing, actuating, communicating, controlling, or computing system or a combination of all types. It costs energy to change or maintain the states of physical variables. These states can be in the voltage of a piezoelectric sensor, in the mechanical displacement of a robot arm, in the current of an antenna, in the chemical concentration of a regulating enzyme in a cell, or in the voltage on a capacitor in a digital processor. Hence, it costs energy to process information, whether that energy is used by enzymes in biology to copy a strand of deoxyribonucleic acid or in electronics to filter an input.¹ To save energy, one must then reduce the amount of information that one wants to process. The higher the output precision and the higher the temporal bandwidth or speed at which the information needs to be processed, the higher the rate of energy consumption, i.e., power. To save power, one must then reduce the rate of information processing.
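As a rough numerical illustration of the link between information rate and power, the following Python sketch assumes an additive-white-Gaussian-noise channel, whose Shannon rate is B·log2(1 + SNR), together with a hypothetical thermal-noise-limited cost model in which power is proportional to bandwidth times linear SNR. The constant `K_W_PER_HZ` and the function names are illustrative assumptions, not values or methods from this paper.

```python
import math

# Hypothetical proportionality constant (W per Hz per unit of linear SNR).
# Illustrative only; real values depend on task, technology, and topology.
K_W_PER_HZ = 1e-12


def info_rate_bits_per_s(bandwidth_hz: float, snr: float) -> float:
    """Shannon rate B*log2(1 + SNR) of an AWGN channel (snr is linear, not dB)."""
    return bandwidth_hz * math.log2(1.0 + snr)


def noise_limited_power_w(bandwidth_hz: float, snr: float) -> float:
    """Assumed cost model: power proportional to bandwidth x linear SNR."""
    return K_W_PER_HZ * bandwidth_hz * snr


if __name__ == "__main__":
    for bw_hz, snr_db in [(1e3, 40), (1e3, 60), (1e4, 40)]:
        snr = 10.0 ** (snr_db / 10.0)
        rate = info_rate_bits_per_s(bw_hz, snr)
        power = noise_limited_power_w(bw_hz, snr)
        print(f"B = {bw_hz:>7.0f} Hz, SNR = {snr_db} dB: "
              f"rate = {rate:>9.0f} b/s, P = {power:.1e} W")
```

Under these assumptions, raising the SNR from 40 dB to 60 dB multiplies power by 100 while raising the rate by only about 1.5×, whereas a tenfold bandwidth increase raises both rate and power tenfold; in every case, a higher rate of information processing costs more power.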
The information may be represented by analog state variables, digital state variables, or both. The information processing can use analog processing, digital processing, or both. The art of low-power design consists of decomposing the task to be solved in an intelligent fashion such that the rate of information processing is reduced as far as possible without compromising the performance of the system. Intelligent decomposition of the task involves a good architectural system decomposition, a good choice of the topological circuits needed to implement the various functions in the architecture, and a good choice of technological devices for implementing the circuits. Thus, low-power design requires deep knowledge of devices, circuits, and systems.

¹ Technically, if one operates infinitely slowly and in a manner that allows the states of physical variables to be recovered even after they have been transformed, energy need not be dissipated [28]. In practice, in both natural and artificial systems, which cannot compute infinitely slowly and which always have finite losses, there is always an energy cost to changing or maintaining the states of physical variables.

Manuscript received October 17, 2011; revised December 21, 2011; accepted January 29, 2012. Date of publication March 16, 2012; date of current version April 11, 2012. This work was supported in part by the National Institutes of Health under Grant NS056140, by the National Science Foundation under Grant NRI-NSF CCF-1124247, and by a campus collaboration initiative from Lincoln Laboratories. This paper was recommended by Associate Editor M. Sawan. The author is with the Analog Circuits and Biological Systems Group, Research Laboratory of Electronics, Massachusetts Institute of Technology, Cambridge, MA 02139 USA. Digital Object Identifier 10.1109/TCSII.2012.2188451. 1549-7747/$31.00 © 2012 IEEE

Fig. 1 shows the “low-power hand.” The low-power hand reminds us that the power consumption of a system is always defined by five considerations, which are represented by the five fingers of the hand: 1) the task that it performs; 2) the technology (or technologies) that it is implemented in; 3) the topology or architecture used to solve the task; 4) the speed or temporal bandwidth of the task; and 5) the output precision of the task. The output precision is measured by the output signal-to-noise ratio (SNR), by the number of bits of task precision, or by the error rate of the task. As the complexity, speed, and output precision of a task increase, the rate of information processing increases, and so does the power consumption of the devices implementing that task.

The problem of low-power design may be formulated as follows: Given an input X, an output function Y = f(X, t), basis functions {b1, b2, b3, ...} formed by a set of technological devices, and noise-resource equations for devices in a technology that describe how their noise or error is reduced by an increase in their power dissipation for a given bandwidth [1], find a topological implementation of the desired function in terms of these devices that maximizes the mutual information rate between the actual output Y(t) and the desired output f(X, t), subject to a fixed power-consumption constraint or per unit of system power consumption. Area may or may not be a simultaneous constraint in this optimization problem. A high value of mutual information, measured in bits per second, implies that the output encodes a significant amount of the desired information about the input; higher mutual information values typically require more power. Hence, low-power design is, in essence, an information-encoding problem. How do you encode the function you want to compute, whether it is just a simple linear amplification of a sensed signal or a complex function of its input, into transistors and other devices that have particular basis functions given, for example, by their I–V curves? Note that this formulation also holds if one is trying to minimize the power of an actuator or sensor, since information is represented by physical state variables in both, and we would like to sense or transform these state variables in a fashion that extracts or conveys information at a given speed and precision.

A default encoding for many sensory computations is a high-speed, high-precision analog-to-digital conversion followed by digital signal processing that computes f( ) with many calculations. This solution is highly flexible and robust but is rarely the most power-efficient encoding: It does not exploit the fact that the meaningful output information we are after is often orders of magnitude less than the raw information in the signal's samples, nor the fact that the technology's basis functions are more powerful than mere switches. It may be better to preprocess the information in an analog fashion before digitization and then to digitize and sample higher level information at significantly lower speed and/or precision. A radio receiver is an example: the raw RF signal is preprocessed by analog circuits with inductive, capacitive, multiplying, and filtering basis functions. Such preprocessing reduces the bandwidth and power needed for digitization and subsequent digital processing of the signal by many orders of magnitude compared with a solution employing immediate digitization. The radio example generalizes to several other computations where delaying digitization to an optimum point in the system saves power. The field of “compressed sensing” attempts to exploit similar bandwidth-reduction schemes before sampling and digitization to improve efficiency [2].
Similarly, a bioinspired asynchronous stochastic sampling scheme reduces the energy for neural stimulation by lowering the effective sampling rate while preserving signal fidelity [3].

This paper is organized as follows. Section II outlines ten key principles of low-power and energy-efficient design along with examples of their applicability in man-made and natural systems. Section III concludes by briefly outlining how their universality enables application in the design of several systems, whether they be electrical, mechanical, analog, digital, biological, electronic, small-scale, or large-scale.

II. GENERAL PRINCIPLES FOR LOW-POWER MIXED-SIGNAL DESIGN

We shall now present ten principles for low-power and energy-efficient design that apply to digital, analog, and mixed-signal systems.

A. Exploit Analog Preprocessing Before Digitization in an Optimal Way

There is an optimal amount of analog preprocessing before a signal-restoring or quantizing digitization is performed: With too little analog preprocessing, efficiency is degraded by increased power consumption in the analog-to-digital converter (ADC) and in digital processing; with too much analog preprocessing, efficiency is degraded because the costs of maintaining precision in the analog preprocessor become too high. The exact optimum depends on the task, technology, and topology. Fig. 2 illustrates the idea visually. In a practical design, other considerations of flexibility and modularity that are complementary to efficiency will also affect the location of this optimum. Examples of systems where analog preprocessing has been used to reduce power by more than an order of magnitude include a highly digitally programmable cochlear-implant processor described in [4] and an electroencephalogram processor described in [5].

Fig. 2. Optimum amount of analog preprocessing before digitization minimizes power consumption.
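The U-shaped tradeoff of Fig. 2 can be illustrated with a toy model. The Python sketch below assumes, purely for illustration, that the analog preprocessing cost grows exponentially with the fraction x of the task done in analog while the ADC-plus-DSP cost decays exponentially with x; the parameter names and values (`a`, `alpha`, `d`, `beta`) are hypothetical and do not come from [4] or [5]. A grid search finds the minimum, which for this particular model also has the closed form x* = ln(d·beta/(a·alpha))/(alpha + beta).

```python
import math


def total_power(x: float, a: float = 1.0, alpha: float = 4.0,
                d: float = 50.0, beta: float = 3.0) -> float:
    """Hypothetical total power for doing a fraction x (0..1) of the task in analog.

    The analog term rises with x (precision gets costlier to maintain);
    the ADC + DSP term falls with x (less information is left to digitize).
    """
    p_analog = a * math.exp(alpha * x)
    p_digital = d * math.exp(-beta * x)
    return p_analog + p_digital


def optimum_split(steps: int = 1000, **params: float) -> tuple[float, float]:
    """Grid-search the analog fraction that minimizes total_power."""
    grid = [i / steps for i in range(steps + 1)]
    best_x = min(grid, key=lambda x: total_power(x, **params))
    return best_x, total_power(best_x, **params)


if __name__ == "__main__":
    x_best, p_best = optimum_split()
    x_closed = math.log(50.0 * 3.0 / (1.0 * 4.0)) / (4.0 + 3.0)
    print(f"grid optimum x = {x_best:.3f}, closed form x = {x_closed:.3f}")
    print(f"P(0) = {total_power(0.0):.1f}, P(x*) = {p_best:.1f}, "
          f"P(1) = {total_power(1.0):.1f}")
```

Making digitization costlier in this model, for example with `optimum_split(d=500.0)`, moves the optimum toward more analog preprocessing, mirroring the statement that the exact optimum depends on the task, technology, and topology.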
The retina in the eye compresses nearly 36 Gb·s⁻¹ of incoming visual information into nearly 20 Mb·s⁻¹ of output information before sampling and quantization [1]. By symmetry, digital output information from a digital-to-analog converter (DAC) should be postprocessed with an optimal amount of analog processing before it interfaces with an actuator or communication link. In general, this principle illustrates that energy-efficient systems are optimally partitioned between the analog and digital domains.

B. Use Subthreshold Operation to Maximize Energy Efficiency

Subthreshold or weak-inversion transistor operation [1], [6], [7] is advantageous in low-power design for five reasons:
1) The gm/I ratio is at its maximum, so speed per watt or precision per watt is maximized. Thus, in analog design, subthreshold operation uses the least power for a given bandwidth–SNR product. It is the most energy-efficient regime in digital design, since for a given VDD, the on–off current ratio is maximized in this regime.
2) Low values of transistor saturation voltage enable VDD minimization and thus save power in both analog and digital designs.
3) Effects such as velocity saturation degrade energy efficiency by degrading the gm/I ratio and by increasing excess thermal noise. Such effects are absent in the subthreshold regime, which improves energy efficiency in both the analog and digital domains.
4) Since current levels are relatively small in the subthreshold regime, resistive and inductive drops due to parasitics that can degrade gm are minimized, as is power-supply noise.
5) “Leakage” current, as it were, can actually be used profitably in the analog domain to compute rather than being “wasted.”

Subthreshold operation has three primary disadvantages:
1) The high gm/I ratio and exponential sensitivity to voltage and temperature make this regime highly sensitive to transistor mismatch, power-supply noise, and temperature. Thus, biasing circuits such as those in [4] are essential for robust operation in analog design, as are feedback and calibration architectures. Similarly, VDD has to be sufficiently high in digital designs [1] to ensure robust operation across all process corners; certain topologies involving stacking, feedback, and parallel devices must be avoided in digital designs.
2) The fT, which is a measure of the maximal frequency of operation of the transistor, is more susceptible to degradation by parasitics, which can reduce energy efficiency by about a factor of two.
3) Linearization is more difficult than in other regimes. Reference [8] shows how to architect linear topologies through the use of negative feedback in the subthreshold regime, and [9] provides useful topologies for low-voltage designs.

C. Encode the Computation in the Technology Efficiently

Fig. 3. Collective analog or hybrid computation.

The exponential basis function is a very powerful universal basis function with polynomial terms of all orders in its Taylor-series expansion. Thus, polynomially linear [10] and nonlinear current-mode dynamical systems of any order [1] can be implemented with single-transistor exponential basis functions in a highly energy-efficient fashion in bipolar and subthreshold technologies. Biology, too, utilizes exponential basis functions to compute in a highly energy-efficient fashion. Simple, highly temperature-invariant logarithmic ADCs are efficiently implemented in the subthreshold regime [11], as are complex ear-inspired active partial differential equations for efficient broad-band RF spectrum analysis [12]. In general, efficient computational encodings in the analog domain enhance signal gain over noise gain by using very few transistors to encode relatively complex functions at the needed (but not excessively high) precision in any one output channel [13].
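To make the exponential basis function concrete, the sketch below uses the idealized saturated subthreshold law I = I0·exp(κV/UT) (source at ground, Early and body effects ignored) with assumed values for κ and I0. It checks numerically that gm/I equals κ/UT at any bias, and it computes I1·I2/I3 by adding and subtracting gate voltages, which is the translinear principle behind log-domain current-mode circuits. It is an idealized device model, not a circuit taken from [10]–[12].

```python
import math

U_T = 0.0259    # thermal voltage kT/q at about 300 K, in volts
KAPPA = 0.7     # assumed gate coupling coefficient (typically about 0.6 to 0.8)
I_0 = 1e-15     # assumed pre-exponential current, in amperes


def i_sub(v_gs: float) -> float:
    """Idealized saturated subthreshold drain current: I0 * exp(kappa * V / UT)."""
    return I_0 * math.exp(KAPPA * v_gs / U_T)


def v_for(i: float) -> float:
    """Inverse of i_sub: the gate voltage that produces drain current i."""
    return (U_T / KAPPA) * math.log(i / I_0)


def gm_over_i(v_gs: float, dv: float = 1e-6) -> float:
    """Central-difference estimate of gm / I at gate voltage v_gs."""
    gm = (i_sub(v_gs + dv) - i_sub(v_gs - dv)) / (2.0 * dv)
    return gm / i_sub(v_gs)


def translinear_multiply(i1: float, i2: float, i3: float) -> float:
    """Compute i1 * i2 / i3 by summing log-domain voltages: V1 + V2 - V3."""
    return i_sub(v_for(i1) + v_for(i2) - v_for(i3))


if __name__ == "__main__":
    print(f"gm/I at 0.3 V: {gm_over_i(0.3):.2f} 1/V (kappa/UT = {KAPPA / U_T:.2f})")
    print(f"gm/I at 0.5 V: {gm_over_i(0.5):.2f} 1/V")
    print(f"2 nA * 3 nA / 1 nA = {translinear_multiply(2e-9, 3e-9, 1e-9):.3e} A")
```

With κ = 0.7, gm/I is about 27 V⁻¹ regardless of bias; for comparison, the square-law strong-inversion model gives gm/I = 2/(VGS − VT), or 10 V⁻¹ at a 0.2-V overdrive, which is one way to see why subthreshold operation maximizes gm/I.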
In the digital domain, efficient encodings minimize switching and leakage power [14].

D. Use Parallel Architectures

Parallel architectures utilize many parallel units to implement a complex computation through a divide-and-conquer approach. The speed, complexity, or precision of each parallel unit can be significantly less than that needed in the overall computation. Since power costs are typically nonlinear, expansive functions of complexity, speed, or precision, the sum of the power of all N parallel units is significantly less than the power of a single complex, high-speed, precise unit doing all of the computation:

$$N \times P_{\mathrm{smpl}}\bigl(f_{\mathrm{lo}}(N), \mathrm{SNR}_{\mathrm{low}}(N)\bigr) < P_{\mathrm{cmplx}}\bigl(f_{\mathrm{hi}}, \mathrm{SNR}_{\mathrm{hi}}\bigr). \tag{1}$$

The use of energy-efficient parallel architectures for digital processing is discussed in [15]. In either the analog or the digital domain, if the input is not inherently parallel (unlike, e.g., an image), then an encoder and/or a decoder is needed to perform one-to-N encoding and N-to-one decoding. The costs of these encoders and decoders affect the optimum number of parallel units. In parallel digital designs, power is saved because $N f_{\mathrm{lo}} C V_{DD,\mathrm{lo}}^2$ is less than $C f_{\mathrm{hi}} V_{DD,\mathrm{hi}}^2$ in the above-threshold regime. In analog designs that employ several slow parallel ADCs rather than one high-speed serial ADC, power is saved because $N f_{\mathrm{lo}} 2^{n_{\mathrm{lo}}} < f_{\mathrm{hi}} 2^{n_{\mathrm{hi}}}$, where $n_{\mathrm{lo}}$ and $n_{\mathrm{hi}}$ denote precision in bits; i.e., bandwidth and precision are reduced in the parallel units. Transmission-line architectures are exploited in nature to share computational hardware amongst parallel inputs in the dendrites of neurons and to implement a filter bank in the inner ear or cochlea [1], [16]. The biochemical reaction networks in the cell are also highly parallel, energy-efficient networks [1], [17]. Parallel architectures are capable of simultaneously high-speed and low-power operation but frequently trade power for area [1].

E. Balance Computation and Communication Costs

A frequent tradeoff in many portable systems is the balance between computation and communication power costs. If one computes too little, there is too much information to transmit, which increases communication costs. If one computes too much, there is little information to transmit, which reduces communication costs but increases computation costs. The optimum depends on the relative costs of each. Such tradeoffs affect cell-phone–base-station communication, the digitization of bits that need to be transmitted wirelessly in brain–machine interfaces and neural prosthetics [18], and the general design of complex systems composed of parts that need to interact with each other [1]. For example, a cell phone with a compression algorithm in its DSP trades increased computation power in its DSP for reduced communication power in its power amplifier.

F. Exploit Collective Analog or Hybrid Computation

Fig. 3(a) illustrates the idea behind collective analog or hybrid computation, which combines the best of the analog and digital worlds to compute as mixed-signal systems in nature do: Rather than compute with weak logic basis functions and low 1-bit precision per channel, as in traditional digital computation, or with powerful high-precision analog basis functions on one channel, as in traditional analog computation, we compute with several moderate-precision analog units that collectively interact to perform high-precision or complex computation. Fig. 3(b) shows that, depending on computation-versus-interaction costs (the w/c ratio in Fig. 3), the optimum energy efficiency is attained at low or moderate computing precision per analog channel [13]. The collective analog principle is likely the most important principle utilized by cells and neurons to save power [1] and is an important and relatively unexplored frontier in ultra low power mixed-signal design today.
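The qualitative trend in Fig. 3(b) can be reproduced with a deliberately simple toy model, which is an assumption made here and not the model of [13]. Suppose a task needs `total_bits` of overall precision and is split across k interacting channels carrying total_bits/k bits each; assume each channel's computation cost grows like compute_cost·4^bits, as for a thermal-noise-limited analog channel whose power scales with SNR, and that each channel also pays a fixed, hypothetical interaction cost. The ratio interaction_cost/compute_cost then plays a role loosely analogous to the computation-versus-interaction ratio in Fig. 3(b).

```python
def total_cost(k: int, total_bits: int, compute_cost: float,
               interaction_cost: float) -> float:
    """Toy cost of splitting total_bits of precision across k channels.

    Assumptions (illustrative only): each channel carries total_bits / k bits,
    its computation cost scales as 4**bits (power proportional to SNR), and
    each channel adds a fixed interaction cost.
    """
    bits_per_channel = total_bits / k
    return k * (compute_cost * 4.0 ** bits_per_channel + interaction_cost)


def best_bits_per_channel(total_bits: int = 16, compute_cost: float = 1.0,
                          interaction_cost: float = 1.0) -> float:
    """Bits per channel at the k (1..total_bits) that minimizes total_cost."""
    best_k = min(
        range(1, total_bits + 1),
        key=lambda k: total_cost(k, total_bits, compute_cost, interaction_cost),
    )
    return total_bits / best_k


if __name__ == "__main__":
    for ratio in (0.0, 0.1, 10.0, 1e3, 1e5):
        bits = best_bits_per_channel(interaction_cost=ratio)
        print(f"interaction/compute = {ratio:>8g}: "
              f"optimum = {bits:.2f} bits per channel")
```

In this toy model the optimum moves from 1 bit per channel (digital-like) toward moderate precision per channel as interaction becomes relatively costlier, yet it does not reach a single 16-bit channel for the ratios shown, which echoes the low-to-moderate precision optimum described above.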
The work described in [12] outlines an example of collective analog computation in which the interaction between analog units is purely analog: an ear-inspired broad-band RF spectrum analyzer chip termed an “RF cochlea.” The interaction between analog units can be both digital and analog in event-based and cellular systems [19]. The “hybrid state machine” architecture discussed in [1] and [19] comprises analog dynamical systems that incorporate event-based feedback from discrete digital dynamical systems. Such architectures are a more general version of architectures such as ΣΔ ADCs, which are usually very energy efficient. They generalize arbitrarily complex sequential synchronous digital computation in finite-state machines to a framework for arbitrarily complex asynchronous sequential hybrid analog–digital computation.

G. Reduce the Amount of Information That Needs to Be Processed

Since energy and information are intimately linked, reducing the amount of information that needs to be processed saves energy. Examples of this principle abound, including the use of automatic gain control (AGC) circuits in analog systems [20], the use of efficient algorithms or clock gating in digital systems [14], the use of analog preprocessing to reduce information [4], and the use of learning to accumulate knowledge that reduces the amount of information that needs to be processed [21]. The overarching principle of information reduction could encompass all principles in low-power design. We have listed the other principles separately because this overarching principle is rather abstract: its concrete application requires knowledge of algorithms, signal processing, architectures, circuit topologies, and device physics.

H. Use Feedback and Feedforward Architectures for Improving Robustness and Energy Efficiency

Feedback is important in improving both the efficiency and robustness of a low-power mixed-signal system. The feedback benefits for energy efficiency occur through three primary mechanisms:
1) SNR improvement via feedback attenuation of undesirable signals. For example, we can remove the dc bias current of a microphone preamplifier [22] via feedback, allowing the information-bearing ac sound signal to be amplified in a more energy-efficient fashion. The gain–bandwidth product of the feedback loop is typically small in such cases, since we usually only need to reject slowly varying signals; thus, the energy overhead of the added feedback circuitry is usually small.
2) Feedback adaptation to signal statistics. As signal statistics change, the parameters of the system must adapt such that informative regions of the input space are always mapped to the output with relatively constant SNR, or such that the system does not needlessly waste resources. AGC circuits in analog systems are examples of the operation of this principle: The effective overall dynamic range of operation is large in an AGC system, while the instantaneous dynamic range and output SNR are much smaller. Thus, for example, we can pay the power costs of an 8-bit computation while having a 16-bit dynamic range. The adaptation of the power supply to the activity factor in digital systems is another example of this principle.
3) Feedback attenuation of device noise statistics. The use of calibration and auto-zeroing feedback loops to attenuate offset and 1/f noise, and the use of digital feedback calibration to improve the performance of analog systems, are examples of this principle.

The improvement in energy efficiency results because the feedback path is usually slow and precise, simple and precise, or infrequently used, so that it adds little power overhead. Often, the same hardware is simply reconfigured into a temporary feedback loop with switches, such that there is little area cost other than the need to minimize capacitive charge-injection errors. A fast and complex feedforward path can thus attain precision cheaply via a slow and/or simple, well-controlled feedback path. Since high gain–bandwidth products in a feedback loop cost power and can lead to instability and ringing, it is advantageous to combine feedforward techniques with feedback techniques such that a feedforward path removes a large fraction of the predictable error or unwanted signal while a feedback path removes the residual unpredictable error or unwanted signal. Since the error that the feedback loop must correct is then reduced, its loop gain can be much lower, improving stability and energy efficiency [1], [29]. Indeed, if we incorporate learning in both the feedforward and feedback pathways, the removal of unwanted signals and undesirable errors can improve over time as the system becomes more familiar with the statistics of the signal and of the noise. That is exactly what biological systems do when they learn to perform a task better [1], [21]. Clock gating is an example of a feedforward, information-reducing, energy-saving principle in digital systems. Wakeup circuits that activate energy-consuming circuits only when needed are other examples of feedforward architectures for power reduction.

I. Separate Speed and Precision in the Architecture if Possible

It costs power to be simultaneously fast and accurate, whether we are performing analog processing, digital processing, sensing, or actuating. Thus, it is advantageous as far as possible to design architectures where speed and precision are not simultaneously needed. Digital systems, in general, already incorporate this principle since they can be fast, but they are only 1-bit precise. Analog comparators also embody this principle very well, which is why they are significantly more energy efficient than amplifiers; they have also scaled well with technology.
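A back-of-the-envelope version of this argument can be written under an assumed cost model in which power is proportional to bandwidth times linear SNR, with a hypothetical constant `k`. The Python sketch compares one stage that must be both fast and precise against a split into a fast, low-precision stage plus a slow, high-precision stage; whether a given task can actually be split this way is an assumption, and the numbers are illustrative only.

```python
def stage_power_w(bandwidth_hz: float, snr: float, k: float = 1e-12) -> float:
    """Assumed cost model: power proportional to bandwidth x linear SNR."""
    return k * bandwidth_hz * snr


def combined_vs_split(f_hi: float, f_lo: float, snr_hi: float,
                      snr_lo: float) -> tuple[float, float]:
    """Power of one fast-and-precise stage vs. fast/imprecise + slow/precise stages."""
    combined = stage_power_w(f_hi, snr_hi)
    split = stage_power_w(f_hi, snr_lo) + stage_power_w(f_lo, snr_hi)
    return combined, split


if __name__ == "__main__":
    # Hypothetical numbers: 1 MHz vs. 1 kHz, and linear SNRs of 1e4 (40 dB) vs. 10 (10 dB).
    combined, split = combined_vs_split(f_hi=1e6, f_lo=1e3, snr_hi=1e4, snr_lo=10.0)
    print(f"combined: {combined:.1e} W, split: {split:.1e} W, "
          f"ratio: {combined / split:.0f}x")
```

The saving in this sketch comes entirely from the fact that neither stage needs both the high bandwidth and the high SNR; if both stages had to be fast and precise, splitting would double the power instead.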
Comparator-based ADC topologies are much more energy efficient than topologies that utilize amplifiers [23], [24]. A comparator is typically implemented with a low-gain open-loop preamplifier followed by a positive-feedback latch. The preamplifier has a relatively low power cost: The gain–bandwidth product of the open-loop amplifier, which is also proportional to its SNR–bandwidth product, is modest, so the power consumption of the preamplifier is relatively low. The positive-feedback latch achieves high gain through unstable nonlinear amplification. It is therefore also highly power efficient, consuming little power for a given speed of operation. In the cascade of the preamplifier and the latch, the preamplifier needs to be somewhat precise as far as its input-referred minimum-detectable signal goes; the relative precision required of the preamplifier decreases with increases in its common-mode voltage. The latch need only be fast. Thus, speed and precision are well separated in the two halves of the comparator, since neither circuit, preamplifier nor latch, needs to have extreme speed and extreme precision at the same time. It is interesting that neuronal and genetic circuits in nature also use “comparators” extensively, and neurons and cells are highly energy efficient. In fact, a single human cell operates at ∼1 pW of power consumption and is a 30 000-node custom nanotechnology gene-protein supercomputer that dissipates only ∼20 kT per energy-consuming biochemical operation, orders of magnitude less than even advanced transistors of the future [1]. The parameter kT is a unit of thermal energy that is approximately equal to 4 × 10⁻²¹ J at 300 K.

J. Operate Slowly and Adiabatically if Possible

Operating well below the fT of a technology is power efficient since it allows for adiabatic digital operation by minimizing voltage drops across energy-dissipating resistors.
In such operation, the clock frequency fclk is well below the inverse of the RC charging times of the switches, which reduces switching power dissipation by a factor proportional to fclk·RC. In [1], we discuss why such energy-efficient adiabatic operation in digital circuits is just a manifestation of high-Q energy-efficient operation with passive devices in analog circuits, e.g., as in tuned LCR oscillators. In analog circuits, operating well below fT minimizes gate-capacitance switching losses and switch resistance losses, e.g., as in well-designed switching Class-E amplifiers. Operating adiabatically is also advantageous in nerve-stimulation circuits [25]. In RF circuits, operating too close to fT increases amplifier input-referred noise due to current and voltage gain reductions and makes it necessary to account for noise correlations in transistors via induced gate noise [26]. Thus, in general, low-power circuits should operate at least a factor of five below fT if possible. Fortunately, fT values in subthreshold and moderate inversion are already a few gigahertz in several commercially available processes.

Before we end this section, it is worth pointing out that robustness and flexibility always trade off with energy efficiency: The extra degrees of freedom necessary to maintain robustness or flexibility invariably hurt efficiency. This principle is true in all systems, whether digital, analog, or mixed-signal.

Fig. 4 summarizes the pros and cons of the general-purpose ADC-then-DSP architecture versus those of a significantly more energy-efficient architecture designed in accord with the low-power principles that we have outlined. Both architectures can coexist in advanced systems, with the general-purpose architecture providing periodic calibration and tuning functions for the more efficient architecture.
The low-power design typically evolves from the general-purpose architecture toward the energy-efficient architecture with time and learning: The general-purpose architecture makes sense in initial design iterations, when flexibility is important because one's ignorance of the environment is high. Once knowledge of the environment has been acquired over time and complete flexibility is less important, the design should evolve toward the more energy-efficient architecture.

Fig. 4. Evolution of low-power and energy-efficient design.

III. UNIVERSALITY

Information-based principles are also useful in the design of energy-efficient systems that are nonelectrical or much bigger in scale. For example, [1] shows how they may be applied to the design of low-power cars: Cars exhibit power–speed tradeoffs set by static frictional power consumption and dynamic braking power consumption, closely analogous to static and dynamic power consumption in digital designs. A circuit model of car power consumption that exploits astounding parallels between energy-efficient electrical and mechanical system designs sheds light on why electric cars are more energy efficient than gasoline cars. The tradeoffs seen in energy-harvesting RF power links also apply exactly to electric motors in cars and to piezoelectric energy harvesters [1], [27]. Not surprisingly, the deep universal connections between energy and information [28] reveal that there is a fundamental Shannon limit of kT ln 2 on the energy cost per bit in all finite-time computing systems, as we have discussed [1]. A more quantitative and deeper discussion of the concepts reviewed in this brief may be found in [1].

REFERENCES

[1] R. Sarpeshkar, Ultra Low Power Bioelectronics: Fundamentals, Biomedical Applications, and Bio-Inspired Systems. Cambridge, U.K.: Cambridge Univ. Press, 2010.
[2] D. L. Donoho, “Compressed sensing,” IEEE Trans. Inf. Theory, vol. 52, no. 4, pp. 1289–1306, Apr. 2006.
[3] J. J. Sit and R. Sarpeshkar, “A cochlear-implant processor for encoding music and lowering stimulation power,” IEEE Pervasive Comput., vol. 7, no. 1, pp. 40–48, Jan./Mar. 2008, special issue on implantable systems.
[4] R. Sarpeshkar, C. D. Salthouse, J. J. Sit, M. W. Baker, S. M. Zhak, T. K. T. Lu, L. Turicchia, and S. Balster, “An ultra-low-power programmable analog bionic ear processor,” IEEE Trans. Biomed. Eng., vol. 52, no. 4, pp. 711–727, Apr. 2005.
[5] A. T. Avestruz, W. Santa, D. Carlson, R. Jensen, S. Stanslaski, A. Helfenstine, and T. Denison, “A 5 μW/channel spectral analysis IC for chronic bidirectional brain–machine interfaces,” IEEE J. Solid-State Circuits, vol. 43, no. 12, pp. 3006–3024, Dec. 2008.
[6] C. Mead, Analog VLSI and Neural Systems. Reading, MA: Addison-Wesley, 1989.
[7] C. Enz and E. A. Vittoz, Charge-Based MOS Transistor Modeling: The EKV Model for Low-Power and RF IC Design. Chichester, U.K.: Wiley, 2006.
[8] R. Sarpeshkar, R. F. Lyon, and C. A. Mead, “A low-power wide-linear-range transconductance amplifier,” Analog Integr. Circuits Signal Process., vol. 13, pp. 123–151, 1997.
[9] S. Chatterjee, Y. Tsividis, and P. Kinget, “0.5-V analog circuit techniques and their application in OTA and filter design,” IEEE J. Solid-State Circuits, vol. 40, no. 12, pp. 2373–2387, Dec. 2005.
[10] Y. P. Tsividis, V. Gopinathan, and L. Toth, “Companding in signal processing,” Electron. Lett., vol. 26, no. 17, pp. 1331–1332, Aug. 1990.
[11] J. J. Sit and R. Sarpeshkar, “A micropower logarithmic A/D with offset and temperature compensation,” IEEE J. Solid-State Circuits, vol. 39, no. 2, pp. 308–319, Feb. 2004.
[12] S. Mandal, S. Zhak, and R. Sarpeshkar, “A bio-inspired active radio-frequency silicon cochlea,” IEEE J. Solid-State Circuits, vol. 44, no. 6, pp. 1814–1828, Jun. 2009.
[13] R. Sarpeshkar, “Analog versus digital: Extrapolating from electronics to neurobiology,” Neural Comput., vol. 10, no. 7, pp. 1601–1638, Oct. 1998.
[14] J. Rabaey, Low Power Design Essentials. Upper Saddle River, NJ: Prentice-Hall, 2009.
[15] A. P. Chandrakasan and R. W. Brodersen, “Minimizing power consumption in digital CMOS circuits,” Proc. IEEE, vol. 83, no. 4, pp. 498–523, Apr. 1995.
[16] T. Lu, S. Zhak, P. Dallos, and R. Sarpeshkar, “Fast cochlear amplification with slow outer hair cells,” Hearing Res., vol. 214, no. 1/2, pp. 45–67, Apr. 2006.
[17] U. Alon, An Introduction to Systems Biology: Design Principles of Biological Circuits. Boca Raton, FL: CRC Press, 2007.
[18] R. Sarpeshkar, W. Wattanapanitch, S. K. Arfin, B. I. Rapoport, S. Mandal, M. Baker, M. Fee, S. Musallam, and R. A. Andersen, “Low-power circuits for brain–machine interfaces,” IEEE Trans. Biomed. Circuits Syst., vol. 2, no. 3, pp. 173–183, Sep. 2008.
[19] R. Sarpeshkar and M. O’Halloran, “Scalable hybrid computation with spikes,” Neural Comput., vol. 14, no. 9, pp. 2003–2038, Sep. 2002.
[20] M. Baker and R. Sarpeshkar, “Low-power single loop and dual-loop AGCs for bionic ears,” IEEE J. Solid-State Circuits, vol. 41, no. 9, pp. 1983–1996, Sep. 2006.
[21] G. Cauwenberghs and M. A. Bayoumi, Learning on Silicon: Adaptive VLSI Neural Systems. Boston, MA: Kluwer, 1999.
[22] M. Baker and R. Sarpeshkar, “A low-power high-PSRR current-mode microphone preamplifier,” IEEE J. Solid-State Circuits, vol. 38, no. 10, pp. 1671–1678, Oct. 2003.
[23] H. Yang and R. Sarpeshkar, “A bio-inspired ultra-energy-efficient analog-to-digital converter for biomedical applications,” IEEE Trans. Circuits Syst. I, Reg. Papers, vol. 53, no. 11, pp. 2349–2356, Nov. 2006, Special Issue on Life Sciences and System Applications.
[24] J. K. Fiorenza, T. Sepke, P. Holloway, C. G. Sodini, and H.-S. Lee, “Comparator-based switched-capacitor circuits for scaled CMOS technologies,” IEEE J. Solid-State Circuits, vol. 41, no. 12, pp. 2658–2668, Dec. 2006.
[25] S. K. Arfin and R. Sarpeshkar, “An energy-efficient, adiabatic electrode stimulator with inductive energy recycling and feedback current regulation,” IEEE Trans. Biomed. Circuits Syst., vol. 6, no. 1, pp. 1–14, Feb. 2012.
[26] T. H. Lee, The Design of CMOS Radio-Frequency Integrated Circuits, 2nd ed. Cambridge, U.K.: Cambridge Univ. Press, 2004.
[27] S. Roundy, “On the effectiveness of vibration-based energy harvesting,” J. Intell. Mater. Syst. Struct., vol. 16, no. 10, pp. 809–823, 2005.
[28] C. H. Bennett and R. Landauer, “The fundamental physical limits of computation,” Sci. American, vol. 253, pp. 48–56, 1985.
[29] S. Guo and H. Lee, “Single-capacitor active-feedback compensation for small-capacitive-load three-stage amplifiers,” IEEE Trans. Circuits Syst. II, Exp. Briefs, vol. 56, no. 10, pp. 758–762, Oct. 2009.