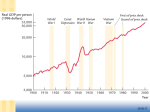

What Explains the Great Recession and the Slow Recovery?

Economic growth

Fear of floating

Monetary policy

Fiscal multiplier

Nominal rigidity

Okishio's theorem

Economic calculation problem

Interest rate

Rostow's stages of growth