
Transport Layer - Overview

Understanding:
• Transport layer services
• Multiplexing/demultiplexing
• Connectionless transport: UDP
• Principles of reliable data transfer
• Connection-oriented transport: TCP

Goals:
• Understand the principles behind transport layer services
• Their instantiation and implementation in the Internet:
  - reliable transfer
  - flow control
  - connection management
  - TCP congestion control

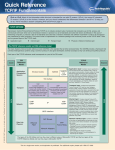

TLP

Transport Layer protocols

Transport Services and Protocols

• Provide logical communication between application processes running on different hosts
• Transport protocols run in end systems (only)

[Figure: protocol stacks (application/transport/network/data link/physical) of two end hosts and the network/data link/physical stacks of the routers in between; the transport layer provides logical end-end transport between the two hosts]

Internet transport services:
• Reliable, in-order unicast delivery: TCP
  - congestion control
  - flow control
  - connection setup
• Unreliable ("best-effort"), unordered unicast or multicast delivery: UDP
• Services not provided by TCP (nor by UDP):
  - real-time (needs RTP, RTCP)
  - bandwidth guarantees
  - reliable multicast

Transport vs network layer services:
• network layer: data transfer between nodes/end systems
• transport layer: data transfer between processes at end systems
  - relies on, but enhances, the network layer's service capability

Transport Layer Protocols

• Two transport protocols in the TCP/IP (Internet) architecture:
  - UDP (User Datagram Protocol) (RFC 768)
    ~ provides connectionless, unreliable service without flow control
  - TCP (Transmission Control Protocol) (RFC 793, 1122, 1323, 2018, 2581)
    ~ provides connection-oriented, reliable, byte-stream-oriented service with flow control

UDP
• Delivers packets without guarantees (neither of arrival nor of order)
• No handshaking and no ACKnowledgements ⇒ fast response
• Reliability of delivery is the application's responsibility

TCP
• Needs connection setup before transmission
• Guarantees delivery (no duplication) and in-order reception; byte-stream oriented
• Reliability of delivery is TCP's responsibility

Encapsulation of the TLP's PDU in an IP packet:
IP header (20 B) | TCP header (20 B) / UDP header (8 B) | data
(UDP datagram or TCP segment ⇒ IP packet; ACK packets exist in TCP only)

Multiplexing/Demultiplexing

• Multiplexing/Demultiplexing:
  – based on IP addresses and the sender's and receiver's port numbers
  ~ demultiplexing delivers received segments to the correct application-layer processes via sockets

[Figure: application-layer data M is wrapped by the transport header Ht into a segment (Ht M), and the segment by the network header Hn; processes P1 to P4 (~ process) attach to sockets (~ socket) in three hosts, each with application/transport/network layers]

Segment ~ unit of data exchanged between transport layer entities, aka TPDU (Transport Protocol Data Unit)

• TCP header (leading fields): Source port | Destination port | Sequence number | Acknowledgment number | ...
• UDP header: Source port | Destination port | Message length | Checksum, followed by Data

Multiplexing/Demultiplexing (cont'd)

• Multiplexing (sender):
  ~ gathering data from multiple application processes (sockets) and enveloping the data with a header (later used for demultiplexing)
• Demultiplexing (receiver): uses that header to hand the TCP/UDP data to the right process

Encapsulation in a frame:
DA | SA | TF | IP header | TCP/UDP header | Data | CRC
(DA/SA: destination/source address; TF: type field; IP packet: 65535 B max; B: bytes)

TCP header (remaining fields): Data offset | Reserved | URG ACK PSH RST SYN FIN | Window | Checksum | Urgent pointer | Options | Padding, followed by TCP data

TCP Well-known Port Numbers

• Source port numbers
  ~ randomly assigned by the sending host (1024 < # < 65536)
• Destination port numbers
  ~ the well-known port of the service (# < 1024), or, in a reply, the source port # of the incoming segment

Port Number   Description
0             Reserved
1             TCP Multiplexer
5             Remote Job Entry
7             Echo
9             Discard
11            Active Users
13            Daytime
15            Network status program
17            Quote of the day
19            Character generator
20            FTP (data)
21            FTP (command)
23            Terminal Connection
25            SMTP
37            Time
42            Host Name Server
43            Who is
53            Domain Name Server
77            Private RJE service
79            Finger
80            Http protocol
93            Device Control Protocol
95            SUPDUP Protocol
101           NIC host name server
102           ISO-TSAP
103           X.400 mail service
104           X.400 mail sending
111           SUN RPC
113           Authentication Service
117           UUCP-path service
119           USENET news Transfer Protocol
129           Password Generator Protocol
139           NETBIOS Session Service

UDP Well-known Port Numbers

Port Number   Description
0             Reserved
7             Echo
9             Discard
11            Active Users
13            Daytime
15            Network status program
17            Quote of the day
19            Character Generator
37            Time
42            Host Name Server
43            Who is
53            Domain Name Server
67            Bootstrap Protocol Server
68            Bootstrap Protocol Client
69            Trivial File Transfer (TFTP)
111           Sun Microsystems RPC
123           Network Time Protocol (NTP)
161           SNMP net monitor
162           SNMP traps
512           UNIX comsat
513           UNIX rwho daemon
514           System log
525           Time daemon

Assigned, Registered and Dynamic Port Numbers

• Assigned port numbers range from 0 - 1023.
  – Assigned numbers are reserved by IANA and cannot be used by other applications
  – Used for TCP, IP, UDP and various applications such as TELNET
  – Up-to-date assignments of numbers: RFC 1700; FTP site: ftp://isi.edu./in-notes/iana/assignments. (RFC 1700 has since been replaced by the online IANA registry, RFC 3232.)
• Registered port numbers are in the range 1024 - 65535; these belong to companies that have registered their applications.
• Dynamic port numbers are also in the range 1024 - 65535.
  (Current IANA practice, RFC 6335, splits this range into registered ports 1024 - 49151 and dynamic/private ports 49152 - 65535.)
[ check with Unix/Linux files: /etc/services ]

How does demultiplexing work?

• When a host receives IP datagrams . . .
  – each datagram has a source IP address and a destination IP address
  – each datagram carries 1 transport-layer segment
  – each segment has a source and a destination port number (recall: well-known port numbers for specific applications)
• The host uses IP addresses & port numbers to direct the segment to the appropriate socket (w.r.t. a process)

[Figure: TCP/UDP segment format, 32 bits wide: source port # | dest port #; other header fields; application data (message)]

Mux/DeMux (TCP): Example I

• One process to one connection
  host A (Telnet client, process P) → server B (Telnet server, process P'):
    src port: 5678, dest. port: 23
  server B → host A:
    source port: 23, dest. port: 5678

Mux/DeMux (TCP): Example II

• Multiple connections to multiple processes
  server B (Web server): B's IP = 140.124.13.3, well-known port = 80
  host A (Web client), Src IP: 140.124.70.13 → Dest IP: 140.124.13.3
    src port: 8879, dest. port: 80
  host C (Web client), C's IP: 140.112.234.2 → Dest IP: B
    src port: 7976, dest. port: 80
  (host A also runs a Telnet client)
  ~ both segments carry dest. port 80, so server B tells the two connections apart by their source IP address and source port

MUX/DeMUX Happens Everywhere

AP layer:        PING, TELNET, SMTP, FTP, TRACEROUTE, SNMP, BOOTP, NTP, DNS
                 ↓ multiplexing / ↑ demultiplexing based on port #
TP layer:        TCP, UDP (segment or datagram)
                 ↓ multiplexing / ↑ demultiplexing based on protocol type
Internet layer:  IP, ICMP, IGMP, ARP, RARP (S/W modules; packet (datagram))
                 ↓ multiplexing / ↑ demultiplexing based on the frame's L/T (length/type) field
Ntwk access layer (interface-SAP): DATA LINK (e.g., Ethernet) (frame)
Medium:          bits (from physical link)
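A minimal Python sketch of the demultiplexing rule, using server B's addresses from Example II. The function `demultiplex`, the dictionary-based socket tables and the socket labels (including a DNS socket on port 53) are hypothetical. The sketch assumes a UDP socket is found by (dest IP, dest port) alone, while a TCP connection socket is found by the full (src IP, src port, dest IP, dest port) 4-tuple, which is why the two Web connections to port 80 land in different sockets.

```python
from typing import Dict, Optional, Tuple

Segment = Tuple[str, str, int, str, int]  # (proto, src IP, src port, dest IP, dest port)

def demultiplex(segment: Segment,
                udp_table: Dict[Tuple[str, int], str],
                tcp_table: Dict[Tuple[str, int, str, int], str]) -> Optional[str]:
    """Return the socket that should receive the segment, or None if no socket matches."""
    proto, src_ip, src_port, dst_ip, dst_port = segment
    if proto == "UDP":
        # A UDP socket is identified by (dest IP, dest port) only
        return udp_table.get((dst_ip, dst_port))
    # A TCP connection socket is identified by the full 4-tuple
    return tcp_table.get((src_ip, src_port, dst_ip, dst_port))

# Hypothetical socket tables on server B (labels are illustrative only)
B = "140.124.13.3"
tcp_table = {
    ("140.124.70.13", 8879, B, 80): "web-conn-from-A",
    ("140.112.234.2", 7976, B, 80): "web-conn-from-C",
}
udp_table = {(B, 53): "dns-socket"}

print(demultiplex(("TCP", "140.124.70.13", 8879, B, 80), udp_table, tcp_table))
print(demultiplex(("TCP", "140.112.234.2", 7976, B, 80), udp_table, tcp_table))
```

Note that any UDP segment addressed to (B, 53) reaches the same socket regardless of its source, whereas a TCP segment must match an existing connection exactly.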

UDP: User Datagram Protocol [RFC 768]

• "no frills," "bare bones" Internet transport protocol
• "best effort" service; UDP segments may be:
  - lost
  - delivered out of order to applications
• connectionless:
  - no handshaking between UDP sender and receiver
  - each UDP segment handled independently of others

Why is there a UDP?
• no connection establishment (which can add delay)
• simple: no connection state at sender and receiver
• small segment header ⇒ low overhead
• no congestion control: UDP can blast away as fast as desired (unregulated sending rate)
(pp. 200-201)

UDP Header and Segment Format

32 bits:
source port #  |  dest port #
length         |  checksum
application data (message)

• length: in bytes of UDP segment, including header
• Often used for streaming multimedia apps:
  - loss tolerant
  - rate sensitive
• Other UDP uses:
  - DNS
  - SNMP
• Reliable transfer over UDP: add reliability at the application layer
  - application-specific error recovery!

UDP Checksum

Goal: detect "errors" (e.g., flipped bits) in transmitted segment
Sender:
• treat segment contents as a sequence of 16-bit integers
• checksum: 1's complement of the (1's complement) sum of the segment contents
• sender puts the checksum value into the UDP checksum field
Receiver:
• compute checksum of received segment
• check if computed checksum equals checksum field value:
  - NO: error detected
  - YES: no error detected. But maybe errors nonetheless? See next slide for implementation details.

Checksum in the UDP Header

• ChecKSum (CKS) (similar to IP's CKS, with the following differences)
  1. Allows an odd number of data bytes (by padding one byte of "0", which is not transmitted)
  2. Includes a pseudo-header from the IP header (12 bytes in total):
     SRC IP (4B), DEST IP (4B), 00 + Protocol (2B), UDP length (2B)
• Goal of the pseudo-header: to verify that the UDP datagram has reached its correct destination
• No CKS is used if a CKS of all 0's is transmitted.
• Transmit 65535 (all 1's) if the computed CKS = all 0's (one's complement)
• CKS ⇒ covers pseudo-header, UDP header and UDP data (plus 8 bits of 0's if necessary)
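The checksum rules above can be sketched in Python as follows, assuming IPv4 addresses and protocol number 17 (UDP) in the pseudo-header. The helper names are hypothetical and the code only illustrates the arithmetic (it does not send anything). The first test uses three sample 16-bit words whose 1's complement sum is 0x4AC2, giving a checksum of 0xB53D.

```python
import socket
import struct

def ones_complement_sum16(data: bytes) -> int:
    """1's complement sum of big-endian 16-bit words; an odd trailing byte is padded with 0."""
    if len(data) % 2:
        data += b"\x00"  # pad byte is only used for the sum, never transmitted
    total = 0
    for (word,) in struct.iter_unpack("!H", data):
        total += word
        total = (total & 0xFFFF) + (total >> 16)  # wrap the carry around
    return total

def pseudo_header(src_ip: str, dst_ip: str, udp_length: int) -> bytes:
    # 12 bytes: SRC IP (4B), DEST IP (4B), 00 + Protocol (17 = UDP), UDP length (2B)
    return (socket.inet_aton(src_ip) + socket.inet_aton(dst_ip)
            + struct.pack("!BBH", 0, 17, udp_length))

def udp_checksum(src_ip: str, dst_ip: str, src_port: int, dst_port: int, payload: bytes) -> int:
    length = 8 + len(payload)  # UDP length includes the 8-byte header
    header = struct.pack("!HHHH", src_port, dst_port, length, 0)  # checksum field = 0 while computing
    cks = ~ones_complement_sum16(pseudo_header(src_ip, dst_ip, length) + header + payload) & 0xFFFF
    return cks or 0xFFFF  # a computed 0 is sent as all 1's, since all 0's means "no checksum"

def build_udp_segment(src_ip: str, dst_ip: str, src_port: int, dst_port: int, payload: bytes) -> bytes:
    cks = udp_checksum(src_ip, dst_ip, src_port, dst_port, payload)
    return struct.pack("!HHHH", src_port, dst_port, 8 + len(payload), cks) + payload

def udp_verify(src_ip: str, dst_ip: str, segment: bytes) -> bool:
    """Receiver check: the 1's complement sum over pseudo-header + segment must be all 1's."""
    if segment[6:8] == b"\x00\x00":
        return True  # all 0's transmitted: the sender did not use a checksum
    return ones_complement_sum16(pseudo_header(src_ip, dst_ip, len(segment)) + segment) == 0xFFFF

seg = build_udp_segment("10.0.0.1", "10.0.0.2", 5000, 53, b"hello")  # odd-length payload
print(udp_verify("10.0.0.1", "10.0.0.2", seg))
```

Because the pseudo-header enters the sum, a segment checked against the wrong destination address fails verification even if its own bytes are intact.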

TCP: Overview (RFCs: 793, 1122, 1323, 2018, 2581)

• point-to-point:
  - one sender, one receiver
• reliable, in-order byte stream:
  - no "message boundaries"
• pipelined:
  - TCP congestion and flow control set the window size
• send & receive buffers
• full duplex data:
  - bi-directional data flow in the same connection
  - MSS: maximum segment size
• connection-oriented:
  - handshaking (exchange of control msgs) initializes sender and receiver state before data exchange
• flow controlled:
  - sender will not overwhelm receiver

[Figure: the application writes data through its socket "door" into the TCP send buffer; segments travel to the TCP receive buffer, from which the receiving application reads data through its socket "door"]

Principles of Reliable data transfer

• Important issue in application, transport, and link layers
• Top of important networking topics!
• The characteristics of the unreliable channel determine the complexity of the reliable data transfer (rdt) protocol.

Reliable data transfer: getting started

send side:
• rdt_send(): called from above (e.g., by app.) when data arrives. Passed data to deliver to receiver upper layer
• udt_send(): called by rdt, to transfer packet over unreliable channel to receiver
  (udt ~ unreliable data transfer protocol; IP, here)
receive side:
• rdt_rcv(): called when a packet arrives on the rcv-side of the channel
• deliver_data(): called by rdt to deliver data to upper layer
(details coming next)

IP contradicts TCP?

• Recall:
  - TCP provides completely reliable transfer (C.O.: connection-oriented)
  - (But) IP offers best-effort (unreliable) delivery (C.L.: connectionless)
  - Does TCP use IP? (YES) How is it done?
⇒ Reliable data transmission relies on . . .
  - Positive acknowledgment
    ~ Receiver returns a short message (called ACK, acknowledgement) to the sender when data arrives
  - Retransmission (upon timeout)
    ~ Sender starts a timer whenever a segment is transmitted
    ~ If the timer expires before the acknowledgment arrives, the sender retransmits THE message
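To see how positive acknowledgment plus retransmission turns a lossy channel into a reliable one, here is a toy Python simulation. It assumes a 1-bit alternating sequence number (as in the rdt3.0/alternating-bit protocol that appears later) so that the receiver can recognize a retransmitted duplicate. The timer is abstracted as "no ACK, so resend", and `stop_and_wait`, `lost_data` and `lost_acks` are hypothetical names.

```python
def stop_and_wait(messages, lost_data=(), lost_acks=()):
    """Toy positive-ACK/retransmission protocol with a 1-bit (alternating) sequence number.

    lost_data / lost_acks: indices of transmissions (0, 1, 2, ...) whose data packet
    or whose ACK the channel drops.
    Returns (data delivered to the receiver's upper layer, number of transmissions)."""
    delivered = []
    transmissions = 0
    send_bit = 0      # sequence bit of the packet being sent
    expected_bit = 0  # sequence bit the receiver expects next
    for msg in messages:
        while True:
            n = transmissions
            transmissions += 1
            if n in lost_data:
                continue  # packet lost: no ACK, timer expires, sender retransmits
            if send_bit == expected_bit:
                delivered.append(msg)  # new packet: pass it up
                expected_bit ^= 1
            # otherwise it is a duplicate (an earlier ACK was lost): discard it but ACK again
            if n in lost_acks:
                continue  # ACK lost: timer expires, sender retransmits
            break  # ACK received before the timeout: move on to the next message
        send_bit ^= 1
    return delivered, transmissions

print(stop_and_wait(["a", "b", "c"], lost_data={1}, lost_acks={3}))
```

Whatever is lost, each message is delivered exactly once; losses only cost extra transmissions.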

TCP Header - I

[Figure: TCP segment structure; labels include the header length and the receiver window size]
• TCP packs data into "segments" but counts/tracks by bytes.
• Seq# and Ack#: counting by bytes of data (not segments)!

TCP Header - II

• Sequence number (SEQ #):
  - identifies each byte in the stream of data from the sending TCP to the receiving TCP (byte streams)
  - numbering ranges from 0 to 2^32 - 1 and wraps back around to 0
  - SEQ # = the (so-called) initial SEQ # (ISN) when the SYN flag = 1 (the first data byte is then numbered ISN + 1)
  - Sequence numbers are bound to octets (bytes) rather than to entire segments.
• Acknowledgment number:
  - the next sequence number that the receiver expects to receive (i.e., the piggybacked ACK)
  = the SEQ # of the last successfully received in-order data byte + 1
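A small sketch of byte-based numbering: given a hypothetical ISN and a list of segment payload sizes, it returns each segment's SEQ # and the ACK # the receiver would send back, with wrap-around modulo 2^32. It assumes in-order delivery and that the SYN consumed one sequence number; `seq_ack_pairs` is a hypothetical name.

```python
SEQ_SPACE = 2 ** 32  # sequence numbers wrap around after 2^32 - 1

def seq_ack_pairs(isn, segment_sizes):
    """For each data segment, return (SEQ # of its first byte, ACK # the receiver returns).

    The SYN consumes one sequence number, so the first data byte is ISN + 1."""
    pairs = []
    seq = (isn + 1) % SEQ_SPACE
    for size in segment_sizes:
        ack = (seq + size) % SEQ_SPACE  # next byte the receiver expects
        pairs.append((seq, ack))
        seq = ack  # the next segment starts where this one ended
    return pairs

print(seq_ack_pairs(0, [100, 200]))
```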

TCP Header - III

• Data Offset = header length (HL) in 32-bit words (60 bytes max)
• Code bits:
  - URG ⇒ the "urgent pointer" field is valid (when it is set to 1)
  - ACK ⇒ the ACK number is valid (when it is set to 1); ACK = 1 from the moment the connection is established
  - PSH ⇒ the sender should send out all data in the sending buffer; the receiver should pass this data to the application ASAP
  - RST ⇒ reset the connection (e.g., port unreachable)
  - SYN ⇒ synchronize sequence numbers to initiate a connection
  - FIN ⇒ the sender is finished sending data (asks to close the connection)
• Window (for credit allocation flow control):
  ⇒ the number of bytes the sender of this segment is willing to accept

TCP Connection Establishment (conn. management)

• Establish a connection between the two ends before exchanging data
• Connection establishing protocol ~ a three-way handshake

client (active open: open a conn. || open a socket)        server (listen: passive open)
SYN_SENT    -- SYN = j = ISN (SYN = 1, Seq# = j) -->
            <-- SYN = k, ACK = j+1 (k ~ Rxer's ISN) --      SYN_RCVD
            -- ACK = k+1 -->
Connection Established                                      Established

• Initialize TCP variables: seq. #s, buffers, flow control info (e.g., RcvWindow)
• SYN consumes one sequence number
• ISN should change over time (differs from connection to connection)
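The header layout and code bits above can be checked with a short Python decoder built on the standard `struct` module. The field order follows the TCP header figure; the function name and the sample SYN (src port 1059 to Telnet port 23, ISN j = 1000) are illustrative assumptions, and the reserved bits are simply ignored.

```python
import struct

# Code bits occupy the low 6 bits of the 16-bit word that also holds Data Offset
CODE_BITS = ["FIN", "SYN", "RST", "PSH", "ACK", "URG"]  # bit 0 ... bit 5

def parse_tcp_header(raw: bytes) -> dict:
    """Decode the fixed 20-byte part of a TCP header (options, if any, are not parsed)."""
    src, dst, seq, ack, off_flags, window, checksum, urgent = struct.unpack("!HHIIHHHH", raw[:20])
    return {
        "src_port": src,
        "dst_port": dst,
        "seq": seq,
        "ack": ack,
        "header_len": (off_flags >> 12) * 4,  # Data Offset counts 32-bit words
        "flags": {name for bit, name in enumerate(CODE_BITS) if off_flags & (1 << bit)},
        "window": window,
        "checksum": checksum,
        "urgent_ptr": urgent,
    }

# Hypothetical first handshake segment: SYN = 1, Seq# = j = 1000, no options (Data Offset = 5)
syn = struct.pack("!HHIIHHHH", 1059, 23, 1000, 0, (5 << 12) | 0x02, 8192, 0, 0)
print(parse_tcp_header(syn))
```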

Decompose PDUs in a TCP/IP Scenario

Windows> telnet 140.124.70.26
(showing the first two packets sent by the client)
[Figure: the captured packets decomposed layer by layer (PDU, cont'd)]
• Protocol #: Network--Transport layer
• Port #: Transport--Application layer
  - Src port # (randomly generated by the src PC: 1059, here)
  - Dest port # (a well-known one for a well-known application)
• Sequence/acknowledgment numbers (for reliable, in-order reception)
• SACK (Selective ACKnowledgment) - see next pages

Stop-and-Wait Protocol (rdt3.0 – Alternating-bit protocol, textbook)

• Sends one segment and waits for the ACK to return before continuing to send the next segment

[Figure: sender/receiver timeline: first packet bit transmitted, t = 0; last packet bit transmitted, t = L / R; first packet bit arrives; last packet bit arrives, send ACK; ACK arrives, send next packet, t = RTT + L / R]

Performance of Stop-and-Wait Protocol

rdt3.0 works, but performance stinks
Example: 1 Gbps link, 15 ms end-to-end prop. delay, 1 KB packet:

T_transmit = L (packet size) / R (channel capacity) = 8 kb/pkt / 10^9 b/sec = 8 microsec

• Sender/channel utilization (performance): fraction of time the sender is busy sending bits into the channel
  U_sender = (L/R) / (RTT + L/R) = 0.008 ms / 30.008 ms = 0.00027 (or 0.027%)
  (RTT + L/R = 2 × 15 ms + 0.008 ms, ignoring the ACK's transmission time)
• Sends a 1 KB pkt every 30.008 msec ⇒ effective throughput of only 267 kbps over a 1 Gbps link
  - the network protocol limits the use of physical resources a lot!
(assuming no error; ref. p. 214)
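The utilization arithmetic can be reproduced with a short function. `sender_utilization` is a hypothetical helper and, like the slide, it ignores the ACK's transmission time and processing delays.

```python
def sender_utilization(L_bits, R_bps, rtt_s, pkts_per_rtt=1):
    """Fraction of time the sender is busy: pkts_per_rtt transmissions per (RTT + L/R) cycle."""
    t_transmit = L_bits / R_bps
    return min(1.0, pkts_per_rtt * t_transmit / (rtt_s + t_transmit))

# Slide example: 1 KB (8,000-bit) packets, 1 Gbps link, 15 ms one-way delay => RTT = 30 ms
u = sender_utilization(8_000, 1e9, 0.030)
throughput_kbps = u * 1e9 / 1e3
print(f"U = {u:.5f}, throughput = {throughput_kbps:.0f} kbps")  # U = 0.00027, throughput = 267 kbps
```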

Pipelined protocols (Why needed?)

Pipelining: allowing the sender to send multiple "in-flight", yet-to-be-acknowledged pkts w/o waiting for ACKs
For reliable data transfer:
• the range of sequence numbers must be increased (so that new pkts are not confused with retx.)
• need to buffer more than one packet at the sender and/or receiver
• Seq.# range and buffering depend on the manner in which a data transfer protocol responds to lost, corrupted, and overly delayed packets.
Two generic forms of pipelined protocols (pipelined with error recovery): Go-Back-N and Selective Repeat

Pipelining: increasing utilization

[Figure: filling a pipeline: first packet bit transmitted, t = 0; last bit transmitted, t = L / R; the last bits of the 1st, 2nd and 3rd packets arrive, each triggering an ACK; ACK arrives, send next packet, t = RTT + L / R (next cycle begins)]

U_sender = (3 * L/R) / (RTT + L/R) = 0.024 / 30.008 = 0.0008 (0.08%, vs. 0.00027 for stop-and-wait)
• Increases utilization by a factor of 3! (assuming no error)
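Extending the same arithmetic: a sketch, assuming error-free transmission and ignoring ACK size, that computes U_sender for a window of N in-flight packets and the smallest N that keeps the sender busy for the whole RTT + L/R cycle ("filling the pipeline"). Exact fractions avoid rounding surprises; the function names are hypothetical.

```python
import math
from fractions import Fraction

def pipelined_utilization(window, L_bits, R_bps, rtt_s):
    """U_sender = window * (L/R) / (RTT + L/R), capped at 1 (exact rational arithmetic)."""
    t = Fraction(L_bits, R_bps)
    return min(Fraction(1), window * t / (rtt_s + t))

def window_to_fill_pipe(L_bits, R_bps, rtt_s):
    """Smallest number of in-flight packets that keeps the sender busy for a whole RTT + L/R cycle."""
    t = Fraction(L_bits, R_bps)
    return math.ceil((rtt_s + t) / t)

rtt = Fraction(30, 1000)  # 30 ms, as in the slide example
print(float(pipelined_utilization(3, 8_000, 10**9, rtt)))
print(window_to_fill_pipe(8_000, 10**9, rtt))  # 3751
```

With a 1 Gbps link and a 30 ms RTT, roughly 3751 packets of 1 KB would have to be in flight, which is why the sequence-number range and the buffers must grow.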

Go-Back-N

Preview: sliding window

Sender:
• k-bit seq # in pkt header
• "window" of up to N consecutive unACK'ed pkts allowed (the window size)
• ACK(n): ACKs all pkts up to and including seq # n ~ "cumulative ACK" (Advantage: see Fig. 3.34)
  - may receive duplicate ACKs (see receiver). Why? You find it out.
• Set a timer for each in-flight pkt
• timeout(n): retransmit pkt n and all higher seq # pkts in the window

GBN (Cont'd)

Receiver:
• ACK-only: always send an ACK for the correctly-received pkt with the highest in-order seq #
  - may generate duplicate ACKs
  - need only remember the expected seqnum
• out-of-order packet:
  - discard (don't buffer) ⇒ no receiver buffering
  - ACK the pkt with the highest in-order seq #

GBN in action
[Figure: GBN sender/receiver timeline; after a loss, the receiver discards the out-of-order packets and the sender retransmits (reTx) them]
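A toy Go-Back-N run in Python, to make the sender and receiver rules concrete. Assumptions not taken from the slides: time advances in rounds (the sender emits what its window allows, then all cumulative ACKs for that burst arrive), the ACK path never loses anything, sequence numbers are plain integers rather than k-bit values, and `lost` lists the indices of transmissions the channel drops. The name `go_back_n` is hypothetical.

```python
def go_back_n(num_pkts, window, lost):
    """Toy Go-Back-N run.

    Each loop iteration the sender transmits what its window allows, then receives the
    receiver's cumulative ACKs (ACK path assumed lossless).
    Returns (seq #s in transmission order, seq #s delivered in order)."""
    base = next_seq = 0   # sender: oldest unACKed pkt, next pkt to send
    expected = 0          # receiver: the only state it keeps
    sent, delivered = [], []
    while base < num_pkts:
        while next_seq < min(base + window, num_pkts):
            tx = len(sent)
            sent.append(next_seq)
            if tx not in lost and next_seq == expected:
                delivered.append(next_seq)  # in order: deliver and advance
                expected += 1
            # lost or out-of-order pkts are discarded (no receiver buffering)
            next_seq += 1
        if expected > base:
            base = expected   # cumulative ACK(expected - 1) slides the window
        else:
            next_seq = base   # timeout(base): resend base and all later pkts in the window
    return sent, delivered

print(go_back_n(5, 3, lost={1}))
```

With one loss (the second transmission), packets 2 and 3 reach the receiver out of order, are discarded, and are sent again after the timeout: `[0, 1, 2, 3, 1, 2, 3, 4]`.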

Selective Repeat/Reject

• Receiver individually acknowledges all correctly received pkts
  - buffers pkts, as needed, for eventual in-order delivery to the upper layer
• Sender only resends pkts for which an ACK was not received
  - sender timer for each unACKed pkt
• Sender window
  - N consecutive seq #'s
  - again limits the seq #s of sent, unACKed pkts
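A sketch of the Selective Repeat receiver rules above, with sequence numbers taken modulo a hypothetical `seq_space`. The class name and interface are assumptions. The constructor check encodes the answer given in the "Selective Repeat: a dilemma" slide (sequence # space >= 2 * window), and already-delivered packets in [rcv_base - N, rcv_base - 1] are ACKed again so that the sender can advance its window.

```python
class SelectiveRepeatReceiver:
    """Toy Selective Repeat receiver with sequence numbers taken modulo seq_space."""

    def __init__(self, window, seq_space):
        # A window larger than half the seq # space makes new and duplicate pkts ambiguous
        if 2 * window > seq_space:
            raise ValueError("need seq_space >= 2 * window")
        self.window, self.seq_space = window, seq_space
        self.rcv_base = 0    # seq # of the oldest pkt not yet delivered
        self.buffer = {}     # out-of-order pkts held for in-order delivery
        self.delivered = []

    def receive(self, seq, data):
        """Handle an arriving pkt; return the seq # to ACK individually, or None to ignore it."""
        offset = (seq - self.rcv_base) % self.seq_space
        if offset < self.window:                    # in [rcv_base, rcv_base + N - 1]
            self.buffer[seq] = data
            while self.rcv_base in self.buffer:     # deliver the in-order run, slide the window
                self.delivered.append(self.buffer.pop(self.rcv_base))
                self.rcv_base = (self.rcv_base + 1) % self.seq_space
            return seq
        if offset >= self.seq_space - self.window:  # in [rcv_base - N, rcv_base - 1]
            return seq                              # already delivered: ACK again
        return None

r = SelectiveRepeatReceiver(window=4, seq_space=8)
for seq, data in [(0, "a"), (2, "c"), (3, "d"), (1, "b")]:
    print(seq, "->", r.receive(seq, data), r.delivered)
```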

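Selective repeat: sender, receiver windows
[Figure: sender and receiver windows over the sequence-number space]

Selective Repeat in action
[Figure: window size = 4; a lost packet is recovered by retransmitting only that packet]
(Read: Fig. 3.23-25 for the sender's and receiver's events and actions)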

Selective Repeat: a dilemma

Example:
• seq #'s: 0, 1, 2, 3 (size = 4)
• window size = 3 < Max seq #
• The receiver sees no difference between scenarios (a) and (b): what happens at the sender is behind an "invisible curtain"
  - (a): the sender ReTx the 1st pkt (duplicate pkt 0)
  - (b): the sender Tx the 5th pkt (new pkt 0)
• It incorrectly passes duplicate data as new in case (a)
Q: To prevent this ambiguity, what should be the relationship between the seq # size and the window size? (Problem 3.18)
A: sequence # space >= 2*window (Sec. 3.4.4)

Connection Maintenance (Ex: Telnet Scenario)

Example: an interactive application
• "echo back": ensures that the characters seen by the Telnet user have already been received and processed at the remote site.
• Each character traverses the network twice
Segment exchange between Host A and Host B (over the Internet):
  User types 'C':                                  A → B: Seq=42, ACK=79, data = 'C'
  host B ACKs receipt of 'C', echoes back 'C':     B → A: Seq=79, ACK=43, data = 'C'
  host A ACKs receipt of echoed 'C':               A → B: Seq=43, ACK=80
Q: How does the receiver handle out-of-order segments?
A: The TCP spec doesn't say ~ it is up to the implementor (go-back-N or Selective Repeat)

TCP Connection Management: TCP Connection Termination

• Connection termination protocol ⇒ 3-way, but taking four segments
client (active close: close a conn. || close a socket)       server (listen: passive close)
FIN_WAIT_1   -- FIN = M (FIN = 1 & Seq = M) -->
             <-- ACK = M+1 (ACK = 1 & ack # = M+1) --        CLOSE_WAIT
FIN_WAIT_2
             <-- FIN = N --                                  LAST_ACK (closing)
TIME_WAIT    -- ACK = N+1 -->                                CLOSED (if ACK rxed)
(2 MSL wait state: timed wait; resend the ACK in case it is lost)
CLOSED
Resources at both C and S are deallocated.
(left: TCP client lifecycle; right: TCP server lifecycle)
• MSL = Max Segment Lifetime; MSL in RFC 793 = 2 min, max.

TCP: retransmission scenarios

lost ACK scenario:
• Host A sends Seq=92, 8 bytes data; Host B's ACK=100 is lost (X)
• The Seq=92 timeout expires and A retransmits Seq=92, 8 bytes data; B replies ACK=100 again
  - B receives a duplicate. Host B's action?
premature timeout, cumulative ACKs:
• Host A sends Seq=92, 8 bytes data and Seq=100, 20 bytes data
• Host B replies ACK=100 and ACK=120, but the Seq=92 timeout expires before they arrive
• A retransmits Seq=92, 8 bytes data (new timeout for seq.=92); B replies ACK=120 (cumulative)
[Figures: Host A / Host B timelines over time, one per scenario]

TCP Flow Control

• flow control: the sender won't overrun the receiver's buffers by transmitting too much, too fast
• RcvBuffer = size of TCP Receive Buffer
• RcvWindow = amount of spare room in Buffer
• receiver: explicitly informs the sender of the (dynamically changing) amount of free buffer space
  - RcvWindow field in TCP segment
• sender: limits the amount of transmitted, unACKed data to at most the most recently received RcvWindow
  - guarantees the receive buffer doesn't overflow

spare room in buffer = RcvWindow = RcvBuffer - [LastByteRcvd - LastByteRead]

Flow Control - Sliding Window

Example
[Figure: sliding-window flow control example]
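The RcvWindow formula and the sender's limit can be written as two small functions. The names and the buffer sizes in the example are hypothetical.

```python
def rcv_window(rcv_buffer, last_byte_rcvd, last_byte_read):
    """Spare room the receiver advertises: RcvBuffer - [LastByteRcvd - LastByteRead]."""
    return rcv_buffer - (last_byte_rcvd - last_byte_read)

def may_send(last_byte_sent, last_byte_acked, advertised_window, nbytes):
    """Sender check: unACKed data after sending nbytes must not exceed the advertised RcvWindow."""
    return (last_byte_sent - last_byte_acked) + nbytes <= advertised_window

# Hypothetical state: 4096-byte buffer, 2000 bytes received but not yet read by the application
win = rcv_window(4096, last_byte_rcvd=3000, last_byte_read=1000)
print(win)  # 2096
print(may_send(5000, 4000, win, 1000), may_send(5000, 4000, win, 1200))  # True False
```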

Sliding window flow control (cont'd)

• To improve the utilization of the channel when Tprop > Tframe, by allowing multiple frames to be transmitted before receiving ACK(s) (to improve on the performance of the stop-and-wait mechanism)
• To keep track of which frames have been sent without waiting for their ACKs, each frame is labeled with a sequence number.
• Rule of sliding window:
  - Txer maintains a list of SEQ numbers that it is allowed to send
  - Rxer maintains a list of SEQ numbers that it is prepared to receive
  - Frames are numbered (0 ~ 2^k - 1) modulo 2^k, k = # of bits in SEQ #
  - The SEQ # has a bounded size since it occupies a field in the frame, so the window size ≤ 2^k - 1 (Go-back-N; 2^(k-1) for Selective-reject)
  - The sender must buffer these frames in case they need to be retransmitted
• Applied to Go-back-N and Selective-reject ARQ, and to LLC, HDLC, and X.25
[Figure: a window of frames advanced by ACKs; RR ~ Receiver Ready (in HDLC)]

TCP Flow Control - Credit Allocation

• Operation:
  - The sending TCP includes in each segment the SEQ # of the first byte in its data field
  - The receiving TCP ACKs an incoming segment with (A=i, W=j), where
    A=i ⇒ expecting SEQ = i; all SEQ prior to i are ACKed
    W=j ⇒ granting permission to send an additional j (window) bytes, i.e., those corresponding to SEQ #s i ~ (i+j-1)
• Some examples of granting credit (assuming the Rxer just issued (A=i, W=j)):
  - Rxer issues (A=i, W=k) to increase the credit to k (k > j) when no additional data have arrived
  - Rxer issues (A=i+m, W=j-m) without granting additional credit, to ACK an incoming segment containing m bytes (m < j)

Example
[Figure: credit allocation example starting from A=1001, W=1400; labels include granted permission, remaining credits, + 600]

(Credit allocation example, cont'd)
  - sending 200 bytes/segment; the sending and receiving SEQ #s are synchronized through connection establishment
  - initial credit = 1400 bytes, and SEQ # = 1001

TCP Round Trip Time and Timeout

Q: How to set the TCP timeout value?
• longer than RTT
  - note: RTT will vary
• too short: premature timeout
  - unnecessary retransmissions
• too long: slow reaction to segment loss

Q: How to estimate RTT?
• SampleRTT: measured time from segment transmission until ACK receipt
  - ignore retransmissions and cumulatively ACKed segments
• SampleRTT will vary; we want the estimated RTT "smoother"
  - average several recent measurements, not just the current SampleRTT

Estimation of RTT

EstimatedRTT = (1 - α)*EstimatedRTT + α*SampleRTT
• Exponential weighted moving average (why?)
• the influence of a given sample decreases exponentially fast
• typical value of α = 0.125 (RFC 2988)

[Figure: SampleRTT and EstimatedRTT, RTT (milliseconds) vs. time (seconds), for RTT from gaia.cs.umass.edu to fantasia.eurecom.fr]
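A sketch of the estimator in Python, together with the DevRTT and timeout rule given on the next slide (RTO = EstimatedRTT + 4*DevRTT). The class name is hypothetical. The first-sample initialization (DevRTT = SampleRTT/2) follows RFC 2988, while the clock-granularity term and the minimum RTO that RFC 2988 also specifies are left out.

```python
class RttEstimator:
    """EstimatedRTT / DevRTT / RTO bookkeeping with alpha = 0.125 and beta = 0.25."""

    def __init__(self, alpha=0.125, beta=0.25):
        self.alpha, self.beta = alpha, beta
        self.estimated_rtt = None
        self.dev_rtt = None

    def update(self, sample_rtt):
        """Fold in one SampleRTT (never from a retransmitted segment) and return the new RTO."""
        if self.estimated_rtt is None:
            # First measurement, initialized as RFC 2988 does: SRTT = R, RTTVAR = R/2
            self.estimated_rtt = sample_rtt
            self.dev_rtt = sample_rtt / 2
        else:
            # DevRTT is updated first, using the previous EstimatedRTT
            self.dev_rtt = (1 - self.beta) * self.dev_rtt + self.beta * abs(sample_rtt - self.estimated_rtt)
            self.estimated_rtt = (1 - self.alpha) * self.estimated_rtt + self.alpha * sample_rtt
        return self.timeout_interval()

    def timeout_interval(self):
        return self.estimated_rtt + 4 * self.dev_rtt

est = RttEstimator()
for sample_ms in (100, 100, 200):
    print(est.update(sample_ms))  # 300.0, then 250.0, then 325.0
```

A single 200 ms sample moves EstimatedRTT only from 100 to 112.5 ms, but the deviation term reacts more strongly, which is what widens the safety margin.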

RTO (Retransmission Time Out): Setting the timeout

• EstimatedRTT plus a "safety margin"
  - large variation in EstimatedRTT -> larger safety margin
• First estimate how much SampleRTT deviates from EstimatedRTT:
  DevRTT = (1 - β)*DevRTT + β*|SampleRTT - EstimatedRTT|
  (typically, β = 0.25)
• Then set the timeout interval:
  TimeoutInterval (RTO) = EstimatedRTT + 4*DevRTT

Principles of Congestion Control

Congestion:
• informally: "too many sources sending too much data too fast for the network to handle"
• different from flow control (w.r.t. the receiver)
• Manifestations:
  - lost packets (buffer overflow at routers)
  - long delays (queueing in router buffers)
• a top-10 problem!

Approaches towards congestion control

Two broad approaches towards congestion control:
1. End-end congestion control:
   • no explicit feedback from the network
   • congestion inferred from end-system observed loss, delay
   • approach taken by TCP
2. Network-assisted congestion control:
   • routers provide feedback to end systems
     - single bit indicating congestion (SNA, DECbit, TCP/IP ECN (RFC 2481), ATM)
     - explicit rate the sender should send at
   (Ex: choke packet in PSN)
Ref: Sec 3.6.2~3.6.3

Case study: ATM ABR congestion control

ABR: available bit rate:
• "elastic service"
• if the sender's path is "underloaded":
  - the sender should use the available bandwidth
• if the sender's path is congested:
  - the sender is throttled to the minimum guaranteed rate
RM (resource management) cells:
• sent by the sender, interspersed with data cells
• two bits in the RM cell are set by switches ("network-assisted")
  - NI bit: No Increase in rate (mild congestion)
  - CI bit: Congestion Indication
• RM cells are returned to the sender by the receiver, with bits intact

TCP Congestion Control: Overview

• end-end control (no network assistance)
• the sender limits transmission:
  LastByteSent - LastByteAcked ≤ CongWin
• Roughly,
  rate = CongWin / RTT  Bytes/sec
• CongWin is dynamic, a function of perceived network congestion
How does the sender perceive congestion?
• loss event = timeout or 3 duplicate ACKs
• the TCP sender reduces its rate (CongWin) after a loss event

TCP Congestion Control (cont'd)

• "probing" for usable bandwidth:
  - ideally: transmit as fast as possible (Congwin as large as possible) without loss
  - increase Congwin until loss (congestion)
  - loss happened: decrease Congwin, then begin probing (increasing) again
• Important variables:
  - Congwin ~ congestion window size
  - threshold ~ defines the threshold between the slow start phase and the congestion avoidance phase
Abbreviations:
• Congwin ↔ cwnd
• threshold ↔ ssthresh

TCP Congestion Control: three mechanisms
• AIMD
• Slow start
• Congestion Avoidance

I. TCP AIMD Congestion Control

• Additive Increase:
  ~ increase CongWin by 1 MSS every RTT in the absence of loss events: probing
• Multiplicative Decrease:
  ~ cut CongWin in half after a loss event
[Figure: congestion window (8, 16, 24 Kbytes) vs. time, showing the AIMD sawtooth]

II. Slow Start

• When the connection begins, increase the rate exponentially fast until the first loss event.
• Operation:
  - Initialize cwnd = 1 (1 MSS) whenever opening a new connection
  - Increase cwnd by 1 (up to a Max) every time an ACK is received
  - At any time, TCP measures the congestion window in segments and restrains the transmission by
    awnd = Min { credit, cwnd }
    where awnd = allowed window (currently allowed to send w/o receiving ACKs)
          cwnd = congestion window (used at startup and reduced during congestion)
          credit = receiver advertised window (used to calculate window/segment size)
• Slow start probes the internet to make sure not to send too many segments into an already congested network
• The connection's data flow is controlled by the incoming ACKs (not cwnd)

Slow Start Operation

Initialization ⇒ a new connection
• A is sending 100-byte segments:
  1st RTT: SN = 1 → ACK = 101
  2nd RTT: SN = 101, 201 → ACK = 201, ...
  3rd RTT: SN = 301 ... 601
  4th RTT: SN = 701 ... 1401 → ACK = 801 ... 1501
• A can fill the pipe with a continuous flow of segments after approximately FOUR RTTs
• Really slow? "Slow start" may be a misnomer, since cwnd grows (pretty much close to) exponentially

III. Congestion Avoidance

• Also, Dynamic Window sizing on Congestion (Jacobson [88/95])
  ~ modified the growth of cwnd from exponential to linear
  ~ a way to deal with segment loss: a timeout occurring and the receipt of duplicate ACKs

Congestion avoidance (cont'd)

• Operation:
  - Begin with the slow start algorithm until a congestion occurs:
  - Set ssthresh (a slow start threshold) = cwnd/2
  - Set cwnd = 1 and perform the slow start process (i.e., increase cwnd by 1 for every ACK received) until cwnd = ssthresh
  - For cwnd ≥ ssthresh, increase cwnd by one for each round-trip time (RTT)
• Example
  - check how long it would take to recover the cwnd level reached before congestion?

Comparison of Slow Start and Congestion Avoidance
[Figure: cwnd vs. time (1st to 6th RTT) for two runs, each a slow start ending with a timeout; labels: cwnd = 9, ssthresh = 8, exponential growth of cwnd, then linear growth of cwnd, "(counted as ONE more RTT)"]
(See what the textbook says.)
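The "Slow Start Operation" numbers can be regenerated with a few lines of Python. This hypothetical helper assumes no losses, no receiver-window limit, and that all ACKs for one round arrive before the next round starts; it lists the SN of every 100-byte segment sent in each RTT.

```python
def slow_start_trace(rounds, seg_size=100, first_sn=1):
    """SN of every segment sent in each RTT when cwnd starts at 1 and grows by 1 per ACK
    (so it doubles every RTT). Assumes no loss and no receiver-window limit."""
    cwnd, sn, trace = 1, first_sn, []
    for _ in range(rounds):
        trace.append([sn + i * seg_size for i in range(cwnd)])
        sn += cwnd * seg_size
        cwnd += cwnd  # one increment per ACK, and cwnd ACKs come back this RTT
    return trace

for rtt, sns in enumerate(slow_start_trace(4), start=1):
    print(f"RTT {rtt}: SN = {sns[0]} ... {sns[-1]}, last ACK = {sns[-1] + 100}")
```

The 4th RTT starts at SN = 701 and ends at SN = 1401 (ACK = 1501), matching the slide.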

TCP Slow Start Algorithm

Slowstart algorithm (Host A → Host B: one segment, then two segments, then four segments in successive RTTs):

  initialize: Congwin = 1
  for (each segment ACKed)
      Congwin++
  until (loss event OR CongWin > threshold)

• exponential increase (per RTT) in window size (not so slow!)
• loss event: timeout (Tahoe TCP) and/or three duplicate ACKs (Reno TCP)

TCP Congestion Avoidance: Tahoe

TCP Tahoe Congestion avoidance:

  /* slowstart is over */
  /* Congwin > threshold */
  Until (loss event) {
      every w segments ACKed:    /* w = Congwin */
          Congwin++
  }
  threshold = Congwin/2
  Congwin = 1
  perform slowstart
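A runnable version of the Tahoe rules, tracking cwnd once per transmission round. Assumptions beyond the pseudocode: integer halving (ssthresh = cwnd // 2), slow start stops exactly at ssthresh, and a loss in a round is detected at the end of that round. `tahoe_cwnd` is a hypothetical name.

```python
def tahoe_cwnd(rounds, ssthresh, loss_rounds=()):
    """cwnd (in segments) at the start of each transmission round for TCP Tahoe.

    Simplifications (assumptions): slow start doubles cwnd per round but stops at ssthresh;
    congestion avoidance adds 1 per round; any loss sets ssthresh = cwnd // 2 and cwnd = 1."""
    cwnd, history = 1, []
    for r in range(1, rounds + 1):
        history.append(cwnd)
        if r in loss_rounds:
            ssthresh = max(cwnd // 2, 1)    # threshold = Congwin/2
            cwnd = 1                        # Congwin = 1, perform slowstart
        elif cwnd < ssthresh:
            cwnd = min(2 * cwnd, ssthresh)  # slow start (exponential)
        else:
            cwnd += 1                       # congestion avoidance (linear)
    return history

print(tahoe_cwnd(15, ssthresh=8, loss_rounds={8}))
```

With ssthresh = 8 and a loss in round 8, the trace goes 1, 2, 4, 8, then linearly to 12, back to 1, and then climbs again toward the new threshold of 6.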

TCP Congestion Avoidance: Reno

TCP Reno Congestion avoidance:

  /* slowstart is over */
  /* Congwin > threshold */
  Until (loss event) {
      every w segments ACKed:    /* w = Congwin */
          Congwin++
  }
  threshold = Congwin/2
  If (loss detected by timeout) {
      Congwin = 1
      perform slowstart
  }
  If (loss detected by triple duplicate ACK) {
      Congwin = Congwin/2
      Congwin increases linearly
  }

TCP Reno versus TCP Tahoe:
• Three duplicate ACKs (Reno TCP):
  - some segments are getting through correctly! 3 dup ACKs indicate that the network is capable of delivering some segments
  - don't "overreact" by decreasing the window to 1 as in Tahoe
  - decrease the window size by half

[Figure: Fig. 3-51 Evolution of TCP Congestion window (Tahoe and Reno): congestion window size (segments, 0-14) vs. transmission round (1-15), with the (variable) threshold]
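The same rules for Reno, written as event handlers so that the timeout and triple-duplicate-ACK branches stay separate. The threshold is computed before cwnd is reset (computing it after `Congwin = 1` would give 1/2). Fast-recovery window inflation is left out, and the class and method names are hypothetical.

```python
class RenoSender:
    """Per-event congestion window updates (cwnd in segments) following the Reno slide:
    timeout -> cwnd = 1 and slow start; triple duplicate ACK -> cwnd halved, then linear growth.
    Fast-recovery window inflation is deliberately left out."""

    def __init__(self, ssthresh=8.0):
        self.cwnd = 1.0
        self.ssthresh = ssthresh

    def on_new_ack(self):
        if self.cwnd < self.ssthresh:
            self.cwnd += 1                # slow start: +1 per ACK (doubles per RTT)
        else:
            self.cwnd += 1 / self.cwnd    # congestion avoidance: about +1 per RTT

    def on_triple_dup_ack(self):
        self.ssthresh = self.cwnd / 2     # threshold = Congwin/2
        self.cwnd = self.ssthresh         # Congwin = Congwin/2, then keep growing linearly

    def on_timeout(self):
        self.ssthresh = self.cwnd / 2     # threshold computed BEFORE cwnd is reset
        self.cwnd = 1.0                   # Congwin = 1, perform slowstart

s = RenoSender(ssthresh=8.0)
for _ in range(7):
    s.on_new_ack()        # slow start: cwnd 1 -> 8
s.on_triple_dup_ack()     # cwnd 8 -> 4, ssthresh = 4
s.on_timeout()            # ssthresh = 2, cwnd = 1
print(s.cwnd, s.ssthresh)
```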

TCP Fairness

• TCP fairness goal:
  ~ if K TCP connections pass through a router (share the same bottleneck link), each TCP should get R/K of the link capacity
[Figure: TCP connection 1 and TCP connection 2 sharing a bottleneck router of capacity R]
• Example: a link of rate R supporting 9 connections:
  - a new app asks for 1 TCP, gets rate R/10
  - What if the new app asks for 11 TCPs? What does it get? (A: R/2)

(Joined) Throughput Realized by Two TCPs: How does TCP approach fairness?

• Two competing sessions:
  – Additive increase gives a slope of 1 as throughput increases
  – Multiplicative decrease decreases throughput proportionally
• Goal: the achieved throughput should fall somewhere around the intersection with the equal-bandwidth-share line
[Figure: connection 2 throughput vs. connection 1 throughput (each up to R), from an assumed starting point: congestion avoidance (additive increase) alternates with loss (decrease window by a factor of 2), converging toward the equal-bandwidth-share line]
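A toy model of the two-connection picture: both flows lose (and halve) in the same round whenever their total exceeds the bottleneck capacity, and otherwise each adds 1 per round. Synchronized losses and the round structure are simplifying assumptions, and the function name is hypothetical. Each halving halves the gap between the flows while additive increase leaves it unchanged, so the rates converge toward the equal-share line.

```python
def aimd_two_flows(x1, x2, capacity, rounds):
    """Two flows share a bottleneck: when their total exceeds the capacity, both see loss
    and halve (multiplicative decrease); otherwise both add 1 (additive increase)."""
    for _ in range(rounds):
        if x1 + x2 > capacity:
            x1, x2 = x1 / 2, x2 / 2
        else:
            x1, x2 = x1 + 1, x2 + 1
    return x1, x2

a, b = aimd_two_flows(1.0, 50.0, capacity=60, rounds=500)
print(round(a, 3), round(b, 3))
```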

The End