
Information Integration Solutions

IBM InfoSphere DataStage

Configuring Parallel DB2 Remote Connectivity

August 9, 2008


1 Preface

This document is intended for those who are planning for and implementing IBM InfoSphere® DataStage™ requiring connectivity to DB2 Enterprise Server Edition (DB2). It is not intended to replace the product documentation; rather, it provides guidance for implementing systems in which the database server is distinct from the engine tier server. Such an implementation is referred to as a remote server implementation. The following sections discuss the issues that should be resolved before installation, the installation requirements, and the configuration.

To demonstrate the processes discussed in this document, we implement the DB2 Enterprise stage and use screenshots and actual files from this implementation.

1.1 Organization

This document contains the following sections:

1 Preface
  1.1 Organization
  1.2 Documentation Conventions
  1.3 Goals and Target Audience
2 Background
  2.1 DB2 Stage Types within DataStage
  2.2 DB2 Enterprise Stage Architecture
3 Prerequisites
4 How-To Set Up DB2 Connectivity for Remote Servers
5 Using the DB2 Enterprise Stage
6 Configuring Multiple Instances in One Job
7 Troubleshooting
8 Performance Notes
9 Summary of Settings

1.2 Documentation Conventions

This document uses the following conventions:

- Bold: In syntax, bold indicates commands, function names, keywords, and options that must be input exactly as shown. In text, bold indicates keys to press, function names, and menu selections.
- Italic: In syntax, italic indicates information that you supply. In text, italic also indicates UNIX commands and options, file names, and pathnames.
- Plain: In text, plain indicates prompts, commands and options, file names, and pathnames.
- Bold Italic: Indicates important information.
- Courier: Indicates examples of source code, system output, and prompts.
- Tahoma Bold: In examples, Tahoma bold indicates characters that the user types or keys the user presses (for example, <Return>).
- Right arrow (→): A right arrow between menu commands indicates that you should choose each command in sequence. For example, "Choose File → Exit" means you should choose File from the menu bar, and then choose Exit from the File pull-down menu.
- Continuation character: Used in source code examples to indicate a line that is too long to fit on the page but must be entered as a single line on screen.

Version 3.0 DataStage Enterprise Edition DB2 Configuration

The following are also used:

- Syntax definitions and examples are indented for ease in reading.
- All punctuation marks included in the syntax (for example, commas, parentheses, or quotation marks) are required unless otherwise indicated.
- Syntax lines that do not fit on one line in this manual are continued on subsequent lines. The continuation lines are indented. When entering syntax, type the entire syntax entry, including the continuation lines, on the same input line.
- Text enclosed in parentheses and underlined (like this) following the first use of a proper term is used in place of that term from then on.
- Interaction with our example system usually includes the system prompt and the command, most often on two or more lines. For example:

/home/dsadm @ database_server >
/bin/tar -cvf /dev/rmt0 /usr/dsadm/Ascential/DataStage/Projects

1.3 Goals and Target Audience

This document presents a detailed set of instructions for configuring connectivity from the engine tier server to a remote DB2 instance using the native parallel DB2 Enterprise stage. The primary audience for this document is DataStage administrators and DB2 DBAs. Information in certain sections may also be relevant for technical architects and system administrators.


2 Background

2.1 DB2 Stage Types within DataStage EE

In addition to the ODBC stages, there are five stages available on the DataStage Designer canvas that can access DB2:

- DB2 API: plugin data access for read, insert, update-insert (upsert), and delete.
- DB2 Load: plugin data access for load.
- DB2 ZLoad: plugin data access for read, insert, update, and load to DB2 on System Z.
- Dynamic RDBMS: plugin data access for read, insert, upsert, and delete.
- DB2 Enterprise: native parallel data access for read, insert, upsert, delete, and load.

The plugin stages are designed for lower-volume access to DB2 databases. These stages also provide connectivity to DB2 databases on non-UNIX platforms (such as Windows or mainframe) and to DB2 databases on UNIX platforms that differ from the platform of the DataStage ETL server.

Figure 1: DB2 stages available on the Parallel Job design palette

By facilitating flexible connectivity to multiple types of remote DB2 database servers, the DataStage plugin stages expand the range of options available to the designer. However, this flexibility limits overall performance and scalability. Furthermore, when used as data sources, plugin stages cannot read from DB2 in parallel.

When using the DB2 API stage or the Dynamic RDBMS stage, it is possible to access a DB2 database that uses the Database Partitioning Feature (DPF) in parallel by manually partitioning the data and placing a separate stage on the canvas for each partition of the database. Because each plugin invocation opens a separate connection to the same target DB2 database table, the ability to function in parallel may be limited by the table and index configuration set by the DB2 database administrator. This document does not discuss this technique further.


The capabilities of each DB2 stage are summarized in the following table. For specific details on stage capabilities, consult the DataStage documentation (the DataStage Parallel Job Developer's Guide and the DataStage plugin guides).

Stage Name | Stage Type | DB2 Requirement | Supports Partitioned DB2? | Parallel Read? | Parallel Write? | Parallel Sparse Lookup? | SQL Open/Close?
--- | --- | --- | --- | --- | --- | --- | ---
DB2 Enterprise | Native Parallel | DPF; homogeneous hardware and operating system (note 1) | Yes, directly to each DB2 node | Yes | Yes | Yes | Yes
DB2 API | Plugin | Any DB2 via DB2 Client or DB2 Connect | Yes, through DB2 node 0 | No | Possible, with limitations | No | No
Dynamic RDBMS | Plugin | Any DB2 via DB2 Client or DB2 Connect | Yes, through DB2 node 0 | No | Possible, with limitations | No | No
DB2 Z Load | Plugin | DB2 on System Z | Capabilities not fully confirmed | No | No | No | No
DB2 Load | Plugin | DB2 (subject to DB2 loader limitations) | Yes, through DB2 node 0 | No | No | No | No

Figure 2: DB2 Communication Options and Capabilities

Note 1: It is possible to connect the DB2 UDB stage to a remote database by cataloging the remote database in the local instance and then using it as if it were a local database. This works only when the authentication mode of the database on the remote instance is set to "client authentication". If you use the stage in this way, you may experience data duplication when working with partitioned instances, because the node configuration of the local instance may not be the same as that of the remote instance. For this reason, the "client authentication" configuration of a remote instance is not recommended.

2.2 DB2 Enterprise Stage Architecture

As a native, parallel component, the DB2 Enterprise stage is designed for maximum performance and scalability. These goals are achieved through tight integration with DB2, including direct communication with each DB2 database node and reading from or writing to DB2 in parallel (where appropriate), using the same data partitioning as the referenced DB2 tables.

This section outlines the high-level architecture of the native parallel DB2 Enterprise stage, providing the background needed to understand its configuration as detailed in the remaining sections of this document.


Prior to V7, DataStage required the primary DataStage ETL server (the "conductor node") to be installed on the DB2 coordinator server. Starting with V7, DataStage provides a "remote DB2" configuration that separates the primary ETL server ("conductor node") from the primary DB2 server (the coordinator node, or "node zero") when using the native parallel DB2 Enterprise stage. Because DataStage is tightly integrated with the DB2 servers and routes data to individual nodes based on DB2 table partitioning, this configuration is provided by a combination of the DB2 client and DataStage clustered processing.

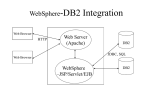

As outlined in Figure 3, the primary ETL server ("conductor node") must have the DB2 V9.x client installed and configured to connect to the remote DB2 V9.x server instance. This is the same DB2 client that DataStage uses to connect to DB2 databases through the DB2 plugin stages (DB2 API, DB2 Load, Dynamic RDBMS) for reading, writing, and importing metadata.

Figure 3: DataStage DB2 Communication Architecture (the primary "conductor node" DataStage EE server runs the 32-bit DB2 client and a DSEE engine; each DB2 DPF node, 0 through n, also runs a DSEE engine)

The native parallel DB2 Enterprise stage of DataStage uses the DB2 client connection to "pre-query" the DB2 instance and determine the partitioning of the source or target table. This partitioning information is then used to read, write, or load data directly from or to the remote DB2 nodes based on the actual table configuration. This tight integration is provided by routing data between the engine nodes configured on the DB2 instance server(s), which requires a clustered configuration of the Information Server engine.

Assumptions:

As with any clustered DataStage configuration, the engine libraries must be installed in the same location on all ETL and DB2 servers in the cluster. This is most easily achieved by creating a shared mount point on the remote DB2 nodes through NFS or a similar directory-sharing method.

The DB2 libraries do not have to be installed in the same location on all servers, as long as all locations are included in $PATH and in the shared library path variable ($LIBPATH on AIX, $SHLIB_PATH on HP-UX, or $LD_LIBRARY_PATH on Solaris and Linux).


Connectivity Scenario Description:

The connectivity scenario for a DataStage DB2 Enterprise stage is:

1) The DataStage conductor node uses the DataStage environment variable APT_DB2INSTANCE_HOME as the location, on the ETL and DB2 servers, of the remote DB2 server's db2nodes.cfg file (the file needs to be copied to the DataStage conductor).
2) DataStage reads the db2nodes.cfg file from the $APT_DB2INSTANCE_HOME/sqllib subdirectory identified for the specified DB2 instance. This file allows DataStage to determine the network node name of each DB2 node.
3) DataStage scans the configuration file specified by the environment variable $APT_CONFIG_FILE for nodes whose fastname properties match the node names listed in $APT_DB2INSTANCE_HOME/sqllib/db2nodes.cfg. DataStage must find each DB2 node name in the configuration file, or the job will fail.
4) The conductor node queries the local DB2 instance via the DB2 client to determine table partitioning information. The results of this query are then used to route data directly to or from the appropriate DB2 nodes.
5) DataStage starts processes across all ETL and DB2 nodes in the cluster. You can verify this by setting the environment variable $APT_DUMP_SCORE to TRUE and examining the corresponding score entry placed in the job log in DataStage Director.
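The host-name matching in step 3 can be checked before a job is run. The following is a minimal POSIX shell sketch, not a DataStage tool: the function names are hypothetical, and it assumes db2nodes.cfg lines of the form "node-number host logical-port" and configuration-file lines of the form fastname "host".

```shell
#!/bin/sh
# Hypothetical pre-flight check: every host listed in db2nodes.cfg must
# appear as a fastname in the DataStage configuration file.

# Print the distinct host names (field 2) from a db2nodes.cfg file.
db2_hosts() {
    awk 'NF >= 2 && $1 ~ /^[0-9]+$/ { print $2 }' "$1" | sort -u
}

# Print the distinct fastname values from a DataStage configuration file,
# assuming lines of the form:  fastname "host"
apt_fastnames() {
    sed -n 's/^[[:space:]]*fastname[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | sort -u
}

# Report each DB2 host that has no matching fastname.
# Returns 0 when all hosts are covered, 1 otherwise.
check_db2_hosts_in_config() {
    nodes_cfg=$1
    apt_cfg=$2
    missing=0
    for host in $(db2_hosts "$nodes_cfg"); do
        if ! apt_fastnames "$apt_cfg" | grep -qxF "$host"; then
            echo "missing fastname for DB2 host: $host"
            missing=1
        fi
    done
    return "$missing"
}
```

For example, `check_db2_hosts_in_config "$APT_DB2INSTANCE_HOME/sqllib/db2nodes.cfg" "$APT_CONFIG_FILE"` prints one line per uncovered DB2 host and returns a nonzero status if any are missing.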

3 Prerequisites

- The DB2 database schema to be accessed must NOT have any columns with user-defined types (UDTs). Use the "db2 describe table [table-name]" command on the DB2 client for each table to be accessed to determine whether UDTs are in use. Alternatively, examine the DDL for each schema to be accessed.
- The Engine Tier libraries must be copied or mounted to each DB2 node in the DB2 cluster. The DataStage version demonstrated in this document is 8.0.1.
- The hardware and operating system of the ETL server and the DB2 nodes must be the same. The systems demonstrated in this document were running AIX V5.3.
- A DB2 V9.x client must be installed on the primary (conductor) ETL server. If you are using DB2 for your Metadata Repository and it resides on your ETL server, you can use the DB2 V9.x client that is already installed.
- The remote database must be DB2 V9.x with the Database Partitioning Feature (DPF) option installed.
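To screen tables for UDTs, the output of "db2 describe table" can be filtered for columns whose data type schema is not SYSIBM, the schema that holds the built-in types. This is a hedged sketch: find_udt_columns is a hypothetical helper, and it assumes the default describe layout (column name, type schema, type name, ...) and column names without embedded spaces.

```shell
#!/bin/sh
# Hypothetical filter: reads "db2 describe table" output on stdin and
# prints "COLUMN TYPESCHEMA.TYPENAME" for each column whose data type
# schema is not SYSIBM (that is, a likely user-defined type).
# Assumes the default describe layout and column names without spaces.
find_udt_columns() {
    awk '
        /^-/               { in_rows = 1; next }  # dashed ruler precedes rows
        in_rows && NF == 0 { exit }               # blank line ends the rows
        in_rows && $2 != "SYSIBM" { print $1, $2 "." $3 }
    '
}
```

Usage, with a hypothetical table name: `db2 describe table dsadm.employee | find_udt_columns`. Empty output suggests the table uses only built-in types. A catalog query such as `db2 "SELECT TABSCHEMA, TABNAME, COLNAME, TYPESCHEMA, TYPENAME FROM SYSCAT.COLUMNS WHERE TYPESCHEMA <> 'SYSIBM'"` is an alternative that covers many tables at once.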


4 How-To Set Up DB2 Connectivity for Remote Servers

Our example consists of two AIX systems: one with 4 CPUs used as the DB2 UDB server, and one with 2 CPUs used as the engine tier server. In this How-To, we demonstrate the steps using the DataStage super-user, dsadm by default.

Note that dsadm does NOT have to be the local database instance owner.

Figure 4: DataStage DB2 Example System (host etl_server; host db2_server with DB2 database db2_dpf1_db)

1) Perform the following on ALL members of the cluster BEFORE installing DataStage on the ETL server:
   a. Create the primary group to which the DataStage users will belong (in this document, the recommended default, dstage) and ensure that this group has the same UNIX group ID (for example, 127) on all the systems.
   b. Create the DataStage users on all members of the cluster. Make sure that each user has the same user ID (for example, 204) on all the systems, and that every user has the correct group memberships: at minimum, dstage as the primary group and the DB2 group in the list of secondary groups.
   c. Add these users to the DB2 database and ensure that they can log in to DB2 on db2_server. At this step, we are on the DB2 server, NOT the ETL server. If you fail here, contact your DB2 DBA for support; this is NOT a DataStage issue.

/db2home/db2inst1@db2_server> . /db2home/db2inst1/sqllib/db2profile
/db2home/db2inst1@db2_server> db2 connect to db2_dpf1_db user dsadm using db2_psword

   Database Connection Information

 Database server        = DB2/AIX64 9.1.0
 SQL authorization ID   = DSADM
 Local database alias   = SAMPLE
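Step 1 requires identical numeric user and group IDs on every host. One way to check this is to compare the output of the standard id command collected from each host. The helper names below are hypothetical, and the sed pattern assumes id output that begins "uid=N(name) gid=N(name)", as on AIX and Linux.

```shell
#!/bin/sh
# Extract "uid gid" (numeric) from lines of `id` output such as
#   uid=204(dsadm) gid=127(dstage) groups=...
# (format assumed; lines that do not match are ignored)
uid_gid() {
    sed -n 's/^uid=\([0-9]*\)([^)]*) gid=\([0-9]*\).*/\1 \2/p'
}

# Read `id` output (one line per host) on stdin. Succeeds only if at least
# one line was parsed and every line reports the same uid and gid.
same_uid_gid() {
    uid_gid | sort -u | awk 'END { exit (NR == 1 ? 0 : 1) }'
}
```

Once remote shell access is enabled (step 2), the check could be run as `for h in etl_server db2_server; do rsh "$h" id dsadm; done | same_uid_gid && echo "IDs match"`. Before then, run `id dsadm` on each host and pipe the collected lines into same_uid_gid.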


2) Enable the rsh command on all servers in the cluster (you may also use ssh, but those configuration details are not provided here). The simplest way to do this is to create a .rhosts file in the home directory of each DataStage user that lists the host name or IP address of every member of the cluster, and then set the permissions on this file to 600. This must be done for each user on all members of the cluster. Note that modern security policies may prohibit this method, but it serves as an adequate example of the requirement; contact the system administrators for the cluster for assistance. Here are the commands to run on each node of our example system to implement the .rhosts method:

echo "etl_server dsadm" > ~/.rhosts
echo "db2_server dsadm" >> ~/.rhosts
chmod 600 ~/.rhosts

To validate the setup from etl_server:

/home/dsadm@etl_server> rsh db2_server date
Wed Jan 18 15:40:51 CST 2006
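The echo commands in this step overwrite and then append to ~/.rhosts. Where the file may already hold entries, an idempotent variant that appends only missing lines can be safer. This is a sketch with a hypothetical function name, assuming the "host user" line format used in this step.

```shell
#!/bin/sh
# Hypothetical helper: append "host user" lines to a .rhosts file only if
# they are not already present, then restrict the file to mode 600.
# Usage: add_rhosts_entries FILE USER HOST...
add_rhosts_entries() {
    rh_file=$1
    rh_user=$2
    shift 2
    touch "$rh_file" || return 1
    for rh_host in "$@"; do
        if ! grep -qxF "$rh_host $rh_user" "$rh_file"; then
            printf '%s %s\n' "$rh_host" "$rh_user" >> "$rh_file"
        fi
    done
    chmod 600 "$rh_file"
}
```

On each node of the example system this would be `add_rhosts_entries "$HOME/.rhosts" dsadm etl_server db2_server`; running it twice leaves the file unchanged.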

3) If a DB2 V9.x client is not already installed on the primary ETL server, install it and create a DB2 V9.x client instance (for example, db2inst1). NOTE: If you are using DB2 for the Metadata Repository and it resides on the ETL server, you can use that DB2 V9 instance as your client instance; its default instance name is usually db2inst1.

4) The DB2 DBA must now catalog all the databases you wish to access on the DB2 server into this DB2 client instance.
   a. Ensure that dsadm can log in to DB2 on db2_server. At this step, we are on the ETL server, NOT the DB2 server. If you fail here, contact your DB2 DBA for support; this is NOT a DataStage issue.

/home/dsadm@etl_server> . /home/db2inst1/sqllib/db2profile
/home/dsadm@etl_server> db2 "LIST DATABASE DIRECTORY"

 Database alias                       = db2dev1
 Database name                        = db2_dpf1_db
 Node name                            = db2_server
 Database release level               = a.00
 Comment                              =
 Directory entry type                 = Remote
 Authentication                       = SERVER
 Catalog database partition number    = -1

/home/dsadm@etl_server> db2 connect to db2dev1 user dsadm using db2_psword

   Database Connection Information

 Database server        = DB2/AIX64 9.1.0
 SQL authorization ID   = DSADM
 Local database alias   = SAMPLE
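Step 4 asks the DBA to catalog the remote databases but does not show the commands. As a hedged sketch, this is typically done with the DB2 CLP CATALOG TCPIP NODE and CATALOG DATABASE commands, followed by db2 terminate so the CLP picks up the new directory entries. The function is hypothetical; note that DB2 node names are limited to eight characters.

```shell
#!/bin/sh
# Hypothetical wrapper around the DB2 CLP catalog commands.
#   $1 node name (at most 8 characters)   $2 DB2 server host name
#   $3 port number or service name        $4 database name on the server
#   $5 alias to use on the ETL server
catalog_remote_db() {
    db2 catalog tcpip node "$1" remote "$2" server "$3" &&
        db2 catalog database "$4" as "$5" at node "$1" &&
        db2 terminate  # refresh the CLP's cached copy of the directories
}
```

For the example system this might be `catalog_remote_db db2srv db2_server 50000 db2_dpf1_db db2dev1`, where the node name db2srv and port 50000 are assumptions; use the service port configured for your DB2 server instance.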

5) Log out of the ETL server and log back in to reset all the environment variables to their original state. Edit $DSHOME/dsenv to include the following information, substituting values appropriate for your configuration for the paths and instance names shown. We assume that the $DB2DIR directory is the same on all nodes in our cluster. This ensures that $PATH and $LIBPATH are set correctly for the remote sessions as well as the local session, without resorting to individual files on each member of the cluster.

Note that on operating systems other than AIX (our example system), $LIBPATH may be $SHLIB_PATH or $LD_LIBRARY_PATH.

################################################
# DB2 Setup section of dsenv
################################################
# DB2DIR is where the DB2 home is located
DB2DIR=/opt/IBM/db2/V9.1; export DB2DIR
# DB2INSTANCE is the name of the DB2 client instance where the databases are cataloged
DB2INSTANCE=db2inst1; export DB2INSTANCE
# INSTHOME is the path where the client instance is located, usually the
# home directory of the instance owner
INSTHOME=/home/db2inst1; export INSTHOME
# Append the DB2 directories to the PATH
PATH=$PATH:$DB2DIR/bin; export PATH
THREADS_FLAG=native; export THREADS_FLAG
# Add the DB2 libraries to the END of LIBPATH on AIX (LD_LIBRARY_PATH on Solaris and Linux)
LIBPATH=$LIBPATH:$DB2DIR/lib32; export LIBPATH

IMPORTANT: The DataStage libraries MUST be placed BEFORE the DB2 entries in $LIBPATH ($SHLIB_PATH or $LD_LIBRARY_PATH), because DataStage and DB2 both use the same library name, "librwtool".
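The ordering requirement above can be checked after sourcing dsenv. A minimal sketch follows; path_precedes is a hypothetical helper, and the DataStage library directory used in the usage line ($DSHOME/lib) is an assumption, so substitute the directory that holds the DataStage copy of librwtool on your system.

```shell
#!/bin/sh
# Hypothetical helper: succeed if directory $2 appears before directory $3
# in the colon-separated path list $1; fail if either one is missing.
path_precedes() {
    printf '%s\n' "$1" | tr ':' '\n' | awk -v first="$2" -v second="$3" '
        $0 == first  && !f { f = NR }   # first position of $2
        $0 == second && !s { s = NR }   # first position of $3
        END { exit ((f && s && f < s) ? 0 : 1) }
    '
}
```

For example: `. "$DSHOME/dsenv"; path_precedes "$LIBPATH" "$DSHOME/lib" "$DB2DIR/lib32" || echo "DataStage libraries must precede DB2 libraries in LIBPATH"`.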

6) Copy the db2nodes.cfg file from the remote instance to the DataStage server. Create a user-defined environment variable APT_DB2INSTANCE_HOME in the DataStage Administrator, add it to a test job, and set it to the directory that contains the sqllib subdirectory in which db2nodes.cfg has been placed. Avoid setting this at the project level, so that other DB2 jobs that connect locally do not pick up this value.

In our example, the DB2 server has four processing nodes (logical nodes), the instance owner is db2inst1, the db2nodes.cfg file on the DB2 server is /home/db2inst1/sqllib/db2nodes.cfg, and this file has these contents:

0 db2_server 0
1 db2_server 1
2 db2_server 2
3 db2_server 3

In our example, the ETL server client is owned by dsadm, the APT_DB2INSTANCE_HOME environment variable has been set to "/home/dsadm/remote_db2config", and this file was copied to /home/dsadm/remote_db2config/sqllib/db2nodes.cfg on the ETL server.
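One way to carry out this copy is to fetch the file with rcp (which relies on the .rhosts setup from step 2) and check its format before placing it under the APT_DB2INSTANCE_HOME directory. The helper below is hypothetical and validates only the leading fields of each line (node number, host name, logical port).

```shell
#!/bin/sh
# Hypothetical helper: check a db2nodes.cfg copied from the DB2 server and
# install it as DEST_HOME/sqllib/db2nodes.cfg, where DEST_HOME is the
# directory you will use as APT_DB2INSTANCE_HOME.
# Usage: install_db2nodes_cfg SOURCE_FILE DEST_HOME
install_db2nodes_cfg() {
    src=$1
    dest_home=$2
    # Every non-blank line must start with: node-number host logical-port
    if ! awk 'NF == 0 { next }
              NF < 3 || $1 !~ /^[0-9]+$/ || $3 !~ /^[0-9]+$/ { bad = 1 }
              END { exit bad + 0 }' "$src"; then
        echo "unexpected db2nodes.cfg format in $src" >&2
        return 1
    fi
    mkdir -p "$dest_home/sqllib" &&
        cp "$src" "$dest_home/sqllib/db2nodes.cfg"
}
```

For the example system: `rcp db2_server:/home/db2inst1/sqllib/db2nodes.cfg /tmp/db2nodes.cfg` followed by `install_db2nodes_cfg /tmp/db2nodes.cfg /home/dsadm/remote_db2config`.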

7) Ensure that dsadm can connect to the remote DB2 database using the values in $DSHOME/dsenv instead of ~/sqllib/db2profile. Before running the following commands, log out of the ETL server and log back in to reset all the environment variables to their original state.

/home/dsadm@etl_server> cd `cat /.dshome`
/home/dsadm@etl_server> . ./dsenv
/home/dsadm@etl_server> db2 connect to db2dev1 user dsadm using db2_psword

   Database Connection Information

 Database server        = DB2/AIX64 9.1.0
 SQL authorization ID   = DSADM
 Local database alias   = SAMPLE

8) Implement a DataStage cluster (refer to the Install and Upgrade guide for more details). In this example, /etl/Ascential is the file system that contains the DataStage software; it is NFS-exported from the ETL server to the DB2 server and NFS-mounted at exactly /etl/Ascential, a file system owned by dsadm, on the DB2 server.

9) Verify that the DB2 operator library has been properly configured by making sure that the link "orchdb2op" exists in the DSComponents/bin directory. This link is normally configured during installation; if it does not exist, run the script DSComponents/install/install.liborchdb2op. When prompted to specify DB2 version 7 or 8, select version 8 (which means version 8 or higher).
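The check in step 9 can be scripted. In this sketch, has_orchdb2op is hypothetical, DSCOMPONENTS is a hypothetical variable that you set to the path of your DSComponents directory, and the glob is an assumption about naming that also matches platform-specific names such as liborchdb2op.so.

```shell
#!/bin/sh
# Hypothetical check: succeed if an entry whose name contains "orchdb2op"
# exists in the given directory (for example, DSComponents/bin). The glob
# is an assumption about naming; it also matches names like liborchdb2op.so.
has_orchdb2op() {
    for entry in "$1"/*orchdb2op*; do
        # -e follows symbolic links, so a dangling link does not count.
        # With no match the glob stays literal and -e is false.
        if [ -e "$entry" ]; then
            return 0
        fi
    done
    return 1
}
```

Usage: `has_orchdb2op "$DSCOMPONENTS/bin" || echo "run $DSCOMPONENTS/install/install.liborchdb2op"`.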

10) The db2setup.sh script in $PXHOME/bin can fail without reporting errors, and if errors do occur, DataStage will not be able to connect to the database(s). Run the following commands manually and ensure that no errors occur.

/home/dsadm@etl_server> db2 connect reset
/home/dsadm@etl_server> db2 connect terminate
/home/dsadm@etl_server> db2 connect to db2dev1 user dsadm using db2_psword
/home/dsadm@etl_server> db2 bind DSComponents/bin/db2esql80.bnd datetime ISO blocking all grant public
/home/dsadm@etl_server> cd ${INSTHOME}/sqllib/bnd
/home/dsadm@etl_server> db2 bind @db2ubind.lst datetime ISO blocking all grant public
/home/dsadm@etl_server> db2 bind @db2cli.lst datetime ISO blocking all grant public
/home/dsadm@etl_server> db2 connect reset
/home/dsadm@etl_server> db2 connect terminate
/home/dsadm@etl_server> db2 connect to db2dev1 user dsadm using db2_psword
/home/dsadm@etl_server> db2 grant bind, execute on package dsadm.db2esql8 to group dstage
/home/dsadm@etl_server> db2 connect reset
/home/dsadm@etl_server> db2 connect terminate

Note: The datetime ISO option currently prevents the db2ubind.lst and db2cli.lst binds from succeeding. Omit this option when issuing those two binds until this issue has been resolved by development.
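Because db2setup.sh can hide failures, it helps to stop at the first failing command when running this sequence by hand. The DB2 CLP exits with 0 on success, 1 when a query returns no rows, 2 on a warning, 4 on a DB2 or SQL error, and 8 on a CLP system error. The sketch below treats 4 or higher as fatal and wraps the bind sequence from this step; the function names and argument order are hypothetical, and it omits datetime ISO for the two .lst binds, following the advice given for this step.

```shell
#!/bin/sh
# Run one DB2 CLP command and treat CLP exit status 4 (DB2/SQL error) or
# 8 (CLP system error) as failure; 0, 1 (no rows) and 2 (warning) pass.
run_db2() {
    db2 "$@"
    rc=$?
    if [ "$rc" -ge 4 ]; then
        # Print only the command verb so passwords are not echoed.
        echo "db2 $1 command failed with exit status $rc" >&2
        return "$rc"
    fi
    return 0
}

# Hypothetical wrapper for the bind sequence in this step.
# Usage: bind_datastage_packages ALIAS USER PASSWORD DSCOMPONENTS_DIR INSTHOME
bind_datastage_packages() {
    run_db2 connect to "$1" user "$2" using "$3" &&
        run_db2 bind "$4/bin/db2esql80.bnd" datetime ISO blocking all grant public &&
        (
            # Subshell keeps the directory change local to the .lst binds.
            cd "$5/sqllib/bnd" &&
                run_db2 bind @db2ubind.lst blocking all grant public &&
                run_db2 bind @db2cli.lst blocking all grant public
        )
    bind_rc=$?
    db2 connect reset >/dev/null 2>&1
    db2 terminate >/dev/null 2>&1
    return "$bind_rc"
}
```

For example, with hypothetical variables holding the password and the DSComponents path: `bind_datastage_packages db2dev1 dsadm "$DB2_PASSWORD" "$DSCOMPONENTS" "$INSTHOME"`. A nonzero return identifies the first failing bind.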

11) The db2grant.sh script in $PXHOME/bin can also fail without reporting errors, and if errors occur, DataStage will not operate correctly. Run the following commands and ensure that no errors occur. Grant bind and execute privileges to every member of the primary DataStage group, in our case dstage.

/home/dsadm@etl_server> db2 connect to db2dev1 user dsadm using dsadm_db2_psword
/home/dsadm@etl_server> db2 grant bind, execute on package dsadm.db2esql8 to group dstage
/home/dsadm@etl_server> db2 connect reset
/home/dsadm@etl_server> db2 connect terminate

12) Create a DataStage configuration file that includes the nodes to be used for ETL processing and a node entry for each physical server in the remote DB2 instance. Unless other non-DB2 ETL processing is to be performed on the remote DB2 instance nodes, these entries should be removed from the default node pool (pools ""). Each node in the DB2 instance should be part of the same node pool (for example, pools "db2"). An example configuration file is shown below:

{
    node "node1"
    {
        fastname "etl_server"
        pools ""
        resource disk "/worknode1/datasets" {pools ""}
        resource scratchdisk "/worknode1/scratch" {pools ""}
    }
    node "db2node1"
    {
        fastname "db2_server"
        pools "db2"
        resource disk "/tmp" {pools ""}
        resource scratchdisk "/tmp" {pools ""}
    }
}
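For clusters with several DB2 hosts, the configuration file can be generated from db2nodes.cfg so that each DB2 host gets exactly one node in the "db2" pool. This is a hypothetical sketch, not a DataStage utility: the ETL dataset and scratch layout (WORK_BASE/nodeN/...) is an assumption, and the DB2 nodes reuse /tmp for disk and scratch as in the example configuration file.

```shell
#!/bin/sh
# Hypothetical generator: writes a DataStage configuration file to stdout
# with COUNT ETL nodes on ETL_HOST (default pool "") plus one node per
# distinct host in db2nodes.cfg (pool "db2"). Host names are emitted in
# sorted order; DB2 nodes use /tmp for disk and scratch as in the example.
# Usage: make_apt_config ETL_HOST COUNT DB2NODES_CFG WORK_BASE
make_apt_config() {
    etl_host=$1
    etl_count=$2
    nodes_cfg=$3
    work_base=$4
    echo "{"
    i=1
    while [ "$i" -le "$etl_count" ]; do
        cat <<EOF
    node "node$i"
    {
        fastname "$etl_host"
        pools ""
        resource disk "$work_base/node$i/datasets" {pools ""}
        resource scratchdisk "$work_base/node$i/scratch" {pools ""}
    }
EOF
        i=$((i + 1))
    done
    i=1
    for db2_host in $(awk 'NF >= 2 && $1 ~ /^[0-9]+$/ { print $2 }' "$nodes_cfg" | sort -u); do
        cat <<EOF
    node "db2node$i"
    {
        fastname "$db2_host"
        pools "db2"
        resource disk "/tmp" {pools ""}
        resource scratchdisk "/tmp" {pools ""}
    }
EOF
        i=$((i + 1))
    done
    echo "}"
}
```

For example, `make_apt_config etl_server 1 /home/dsadm/remote_db2config/sqllib/db2nodes.cfg /worknode > remote_db2.apt` (the output file name is hypothetical) yields one ETL node and a single db2_server node, because all four logical DB2 nodes in the example share one host.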

13) Restart the DataStage server.

14) Test server connectivity by importing a table definition in DataStage Designer using the DB2 API plugin (a server plugin). If this fails, you do not have connectivity to the DB2 server and need to revisit the previous steps until the import succeeds.

If the import succeeds, check the imported table definitions to be sure the data types are correct.

15) Create a user-defined variable APT_DB2INSTANCE_HOME in the DataStage project using the DataStage Administrator client, for use in jobs that access DB2. Avoid setting its value at the project level, so that other DB2 jobs that connect locally do not pick up this value. Instead, set this variable in each job to the directory that contains sqllib/db2nodes.cfg, in our case /home/dsadm/remote_db2config.


5 Using the DB2 Enterprise Stage

Create a parallel job and add a DB2 Enterprise stage and a Sequential File stage. Set the file path in the Sequential File stage to /dev/null. Set or add the following properties on the DB2 Enterprise stage (see Figure 5).

Figure 5: DataStage DB2 Enterprise Stage Properties

For a connection to a remote DB2 instance, set the following properties on the DB2 Enterprise stage in your parallel job:

- Client Instance Name. Set this to the DB2 client instance name. If you set this property, DataStage assumes that you require a remote connection.
- Server. Set this to the name of the DB2 server, OR use the DB2 environment variable DB2INSTANCE to identify the name of the DB2 server.
- Client Alias DB Name. Set this to the DB2 client's alias database name for the remote DB2 server database. This is required only if the client's alias differs from the actual name of the remote server database.
- Database. Set this to the remote server database name, OR use the environment variable APT_DBNAME or APT_DB2DBDFT to identify the database.
- User. Enter the user name for connecting to DB2. This is required for a remote connection in order to retrieve the catalog information from the local instance of DB2, so the user must have privileges on that local instance.
- Password. Enter the password for connecting to DB2. This is required for a remote connection in order to retrieve the catalog information from the local instance of DB2, so the user must have privileges on that local instance.

This stage has been parameterized in the following example:


Figure 6: DataStage Parallel Job Properties Tab

Figure 7: DataStage DB2 Enterprise Stage Properties Using Job Parameters

Set the APT_DB2INSTANCE_HOME variable in the Parameters panel to /home/dsadm/remote_db2config.


Figure 8: Sample Job Properties Panel

Test the connection using View Data on the Output / Properties panel:

Figure 9: Sample View Data Output


6 Configuring Multiple Instances in One Job

Although it is not officially supported, it is possible to connect to more than one DB2 instance within a single job. Your job must match one of the following configurations (here, "stream" refers to a contiguous flow from one stage to another within a single job):

1. Single stream, two instances only: reading from one instance and writing to another instance, with no other DB2 instances. (How many stages for these two instances can be added to the canvas for lookups in this configuration has not been determined.)
2. Two streams, one instance per stream: reading from instance A and writing to instance A, and reading from instance B and writing to instance B. (How many stages for these two instances can be added to the canvas for lookups in this configuration has not been determined.)
3. Multiple streams with N DB2 sources and no DB2 targets: reading from 1 to N DB2 instances in separate source stages, with no other DB2 stages downstream.

To get these configurations to work correctly, you must follow all of the directions for connecting to a remote instance AND the following:

- You must not set the APT_DB2INSTANCE_HOME environment variable. Once this variable is set, DataStage tries to use it for every connection in the job. Because a db2nodes.cfg file can contain information for only one instance, this causes problems.
- For DataStage to locate each db2nodes.cfg, you must create a user on the DataStage server with the same name as each instance you are connecting to (by default, the DB2 Enterprise stage uses the home directory of the UNIX user with the same name as the DB2 instance as the instance home). In that user's UNIX home directory, create a sqllib subdirectory and place the remote instance's db2nodes.cfg there. Because APT_DB2INSTANCE_HOME is not set, DataStage defaults to this directory to find the configuration file for the remote instance.
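A hedged pre-flight sketch for these two requirements follows. check_multi_instance_setup is hypothetical and inspects only the current shell environment, not job-level settings in DataStage; db2instA and db2instB in the usage line are placeholder instance names whose home directories the shell resolves through ~user expansion.

```shell
#!/bin/sh
# Hypothetical pre-flight check for a multi-instance job. Arguments are the
# home directories of the instance-named users on the DataStage server.
# Only the current shell environment is inspected, not job-level settings.
check_multi_instance_setup() {
    status=0
    if [ -n "${APT_DB2INSTANCE_HOME:-}" ]; then
        echo "APT_DB2INSTANCE_HOME is set; leave it unset for this job" >&2
        status=1
    fi
    for inst_home in "$@"; do
        if [ ! -r "$inst_home/sqllib/db2nodes.cfg" ]; then
            echo "missing $inst_home/sqllib/db2nodes.cfg" >&2
            status=1
        fi
    done
    return "$status"
}
```

Usage: `check_multi_instance_setup ~db2instA ~db2instB` reports each instance home that still lacks its sqllib/db2nodes.cfg.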

7 Troubleshooting

1) If you get an error while performing the binds and grants, make sure dsadm has privileges to create a schema, to select from the sysibm.sysdummy1 table, and to bind packages (see the installation documentation for the DB2 grants needed to run the scripts).

2) Several errors that can occur while viewing data from the DB2 Enterprise stage do not reflect the actual issue:
   - If you log in to DataStage with one user name (for example, dsadm) and try to view data with a different user specified in the stage (the user name and password properties inside the stage), the connection may fail. This is because the user name and password inside the stage are used only to create a connection to DB2 via the client; the job itself runs as the DataStage user (the user name used to log in to DataStage from the Designer or the Director).
   - The user does not have permission to read the catalog tables.

3) The user ID used to access the remote DB2 servers must be set up on each of the servers. For example, the dsadm user must exist as a UNIX user on the ETL server and on all of the DB2 nodes. Also make sure the groups are set correctly, because the db2grant.sh script grants permission only to the group (in our example, dstage, or something like db2group).

4) The DB2 databases on the remote DB2 server must be cataloged in the DB2 client on the ETL server before you can connect to any of them.

5) The permissions on the resource disk or scratch disk are not set correctly (mainly when performing a load). When performing a load, make sure the resource disk and scratch disk are readable and writable by the dstage group as well as by the owner of the DB2 instance where the data is being loaded. Usually the groups are different, so the permissions need to be set to 777.

8 Performance Notes

In some cases, when using user-defined SQL without partitioning against large volumes of DB2 data, the overhead of routing information through a remote DB2 coordinator may be significant. In these instances, it may be beneficial to have the DB2 DBA configure separate DB2 coordinator nodes (with no local data) on each ETL server (in clustered ETL configurations). In this configuration, the DB2 Enterprise stage should not include the Client Instance Name property, which forces the DB2 Enterprise stages on each ETL server to communicate directly with their local DB2 coordinator.

9 Summary of Settings

The DB2 environment variables must be set after the native DataStage environment variables. This is done in the dsenv file for the DataStage server. Here are the last lines of the dsenv file with the DB2 setup information added:

/etl/Ascential/DataStage/DSEngine @ etl_server >> tail -8 dsenv
# DB2 setup section
DB2DIR=/opt/IBM/db2/V9.1; export DB2DIR
DB2INSTANCE=db2inst1; export DB2INSTANCE
INSTHOME=/home/db2inst1; export INSTHOME
PATH=$PATH:$DB2DIR/bin; export PATH
THREADS_FLAG=native; export THREADS_FLAG
LIBPATH=$LIBPATH:$DB2DIR/lib32; export LIBPATH

Here are the contents of the db2nodes.cfg file located in /home/dsadm/remote_db2config/sqllib:

/home/dsadm/remote_db2config/sqllib @ etl_server >> cat db2nodes.cfg
0 db2_server 0
1 db2_server 1
