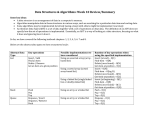

O() Analysis of Methods and Data Structures
Reasonable vs. Unreasonable Algorithms
Using O() Analysis in Design

Now Available Online! http://www.coursesurvey.gatech.edu

O() Analysis of Methods and Data Structures

The Scenario
• We’ve talked about data structures and methods to act on these structures…
– Linked lists, arrays, trees
– Inserting, Deleting, Searching, Traversal, Sorting, etc.
• Now that we know about O() notation, let’s discuss how each of these methods performs on these data structures!

Recipe for Determining O()
• Break algorithm down into “known” pieces
– We’ll learn the Big-Os in this section
• Identify relationships between pieces
– Sequential is additive
– Nested (loop / recursion) is multiplicative
• Drop constants
• Keep only dominant factor for each variable

Array Size and Complexity
How can an array change in size?
    MAX is 30
    ArrayType definesa array[1..MAX] of Num
We need to know the maximum size in advance to declare an array, but for analysis of complexity, we can still use N as a variable for input size.

Traversals
• Traversals involve visiting every element in a collection.
• Because we must visit every node, a traversal must be O(N) for any data structure.
– If we visit fewer than N elements, then it is not a traversal.

Comparing Data Structures and Methods

| Data Structure | Unsorted L List | Sorted L List | Unsorted Array | Sorted Array | Binary Tree | BST | F&B BST |
|---|---|---|---|---|---|---|---|
| Traverse | N | N | N | N | N | N | N |

Searching for an Element
Searching involves determining if an element is a member of the collection.
• Simple/Linear Search:
– If there is no ordering in the data structure
– If the ordering is not applicable
• Binary Search:
– If the data is ordered or sorted
– Requires non-linear (random) access to the elements

Simple Search
• Worst case: the element to be found is the Nth element examined.
• Simple search must be used for:
– Sorted or unsorted linked lists
– Unsorted array
– Binary tree
– Binary Search Tree if it is not full and balanced

Comparing Data Structures and Methods

| Data Structure | Unsorted L List | Sorted L List | Unsorted Array | Sorted Array | Binary Tree | BST | F&B BST |
|---|---|---|---|---|---|---|---|
| Traverse | N | N | N | N | N | N | N |
| Search | N | N | N | | N | N | ? |

Balanced Binary Search Trees
If a binary search tree is not full, then in the worst case it takes on the structure and characteristics of a sorted linked list with N/2 elements.
[Figure: example BST containing 14, 11, 7, 42, 58]

Binary Search Trees
If a binary search tree is not full or balanced, then in the worst case it takes on the structure and characteristics of a sorted linked list.
[Figure: example BST containing 7, 11, 14, 42, 58]

Example: Linked List
• Let’s determine if the value 83 is in the collection:
    Head -> 5 -> 19 -> 35 -> 42 -> NIL
    83: Not Found!

Simple/Linear Search Algorithm
    cur <- head
    loop
      exitif((cur = NIL) OR (cur^.data = target))
      cur <- cur^.next
    endloop
    if(cur <> NIL) then
      print(“Yes, target is there”)
    else
      print(“No, target isn’t there”)
    endif

Pre-Order Search Traversal Algorithm
• As soon as we get to a node, check to see if we have a match
• Otherwise, look for the element in the left sub-tree
• Otherwise, look for the element in the right sub-tree
[Figure: pre-order search for 9 in a binary tree rooted at 14, with the cur pointer stepping through the left and right sub-trees]

If I have to watch one more of these I think I’m going to die.

Big-O of Simple Search
• The algorithm has to examine every element in the collection
– To return a false
– If the element to be found is the Nth element
• Thus, simple search is O(N).

Binary Search
• We may perform binary search on
– Sorted arrays
– Full and balanced binary search trees
• Tosses out ½ the elements at each comparison.

Full and Balanced Binary Search Trees
Contains approximately the same number of elements in all left and right sub-trees (recursively) and is fully populated.
[Figure: full and balanced BST with root 34, children 25 and 45, and leaves 21, 29, 41, 52]

Binary Search Example
Looking for 89 in the sorted array:
    7 12 42 59 71 86 104 212
89 not found – 3 comparisons
3 = Log2(8)

Binary Search Big-O
• An element can be found by comparing and cutting the work in half.
– We cut work in ½ each time
– How many times can we cut in half?
– Log2 N
• Thus binary search is O(Log N).

What?

| N | 2 | 4 | 8 | 16 | 32 | 64 | 128 | 256 | 512 |
|---|---|---|---|---|---|---|---|---|---|
| Searches = log2(N) | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |

CS Notation
lg(N) means log2(N), so lg(2) = 1, lg(4) = 2, lg(8) = 3, and so on up to lg(512) = 9.

Recall
log10 N = k • log2 N, where k = log10 2 = 0.30103...
The two logarithms differ only by a constant factor, and constants are dropped.
So: O(lg N) = O(log N)

Comparing Data Structures and Methods

| Data Structure | Unsorted L List | Sorted L List | Unsorted Array | Sorted Array | Binary Tree | BST | F&B BST |
|---|---|---|---|---|---|---|---|
| Traverse | N | N | N | N | N | N | N |
| Search | N | N | N | Log N | N | N | Log N |

Insertion
• Inserting an element requires two steps:
– Find the right location
– Perform the instructions to insert
• If the data structure in question is unsorted, then it is O(1)
– Simply insert to the front
– Simply insert to end in the case of an array
– There is no work to find the right spot and only constant work to actually insert.
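Returning to the binary search example earlier in this section (looking for 89 among eight sorted values), the halving argument can be checked with a short sketch. This is a minimal Python illustration rather than the course pseudocode; the function name `binary_search` and the probe counter are assumptions added here for demonstration.

```python
def binary_search(sorted_items, target):
    """Search an ascending list; return (found, probes).

    probes counts how many elements were examined, which is the
    quantity the slides call "comparisons".
    """
    low, high = 0, len(sorted_items) - 1
    probes = 0
    while low <= high:
        mid = (low + high) // 2      # middle of the remaining range
        probes += 1
        if sorted_items[mid] == target:
            return True, probes
        if sorted_items[mid] < target:
            low = mid + 1            # discard the lower half
        else:
            high = mid - 1           # discard the upper half
    return False, probes


data = [7, 12, 42, 59, 71, 86, 104, 212]
print(binary_search(data, 89))       # (False, 3): three probes, as in the slide
```

Each pass discards half of the remaining range, so the probe count grows with Log2 N rather than N.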
Comparing Data Structures and Methods

| Data Structure | Unsorted L List | Sorted L List | Unsorted Array | Sorted Array | Binary Tree | BST | F&B BST |
|---|---|---|---|---|---|---|---|
| Traverse | N | N | N | N | N | N | N |
| Search | N | N | N | Log N | N | N | Log N |
| Insert | 1 | | 1 | | 1 | | |

Insert into a Sorted Linked List
Finding the right spot is O(N)
– Recurse/iterate until found
Performing the insertion is O(1)
– 4-5 instructions
Total work is O(N + 1) = O(N)

Inserting into a Sorted Array
Finding the right spot is O(Log N)
– Binary search on the element to insert
Performing the insertion
– Shuffle the existing elements to make room for the new item

Shuffling Elements
Note – we must have at least one empty cell
    5 12 35 77 101 _
    Insert 29 between 12 and 35; 35, 77 and 101 each move one cell to the right

Big-O of Shuffle
Worst case: inserting the smallest number
    5 12 35 77 101
Would require moving N elements…
Thus shuffle is O(N)

Big-O of Inserting into Sorted Array
Finding the right spot is O(Log N)
Performing the insertion (shuffle) is O(N)
Sequential steps, so add:
Total work is O(Log N + N) = O(N)

Comparing Data Structures and Methods

| Data Structure | Unsorted L List | Sorted L List | Unsorted Array | Sorted Array | Binary Tree | BST | F&B BST |
|---|---|---|---|---|---|---|---|
| Traverse | N | N | N | N | N | N | N |
| Search | N | N | N | Log N | N | N | Log N |
| Insert | 1 | N | 1 | N | 1 | | |

Inserting into a Full and Balanced BST
Always insert when current = NIL.
Find the right spot
– Binary search on the element to insert
Perform the insertion
– 4-5 instructions to create & add node

Full and Balanced BST Insert
[Figure: BST with root 12, children 3 and 41, and leaves 2, 7, 35, 98; the new value 4 is added as the left child of 7]

Big-O of Full & Balanced BST Insert
Finding the right spot is O(Log N)
Performing the insertion is O(1)
Sequential, so add:
Total work is O(Log N + 1) = O(Log N)

Comparing Data Structures and Methods

| Data Structure | Unsorted L List | Sorted L List | Unsorted Array | Sorted Array | Binary Tree | BST | F&B BST |
|---|---|---|---|---|---|---|---|
| Traverse | N | N | N | N | N | N | N |
| Search | N | N | N | Log N | N | N | Log N |
| Insert | 1 | N | 1 | N | 1 | | Log N |

What about a BST that is not full & balanced?
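One way to explore that question is to count how many nodes an insert has to walk past in a plain BST that never rebalances. The sketch below is a minimal Python illustration, not the course pseudocode; `Node`, `insert`, and `build` are hypothetical names introduced here, and the two key orderings are chosen only to contrast a balanced shape with a chain.

```python
class Node:
    def __init__(self, key):
        self.key = key
        self.left = None
        self.right = None


def insert(root, key):
    """Insert key into a non-rebalancing BST; return (root, steps).

    steps is the number of existing nodes compared against before
    the new node is attached where the search reaches an empty link.
    """
    if root is None:
        return Node(key), 0
    cur, steps = root, 0
    while True:
        steps += 1
        if key < cur.key:
            if cur.left is None:
                cur.left = Node(key)
                return root, steps
            cur = cur.left
        else:
            if cur.right is None:
                cur.right = Node(key)
                return root, steps
            cur = cur.right


def build(keys):
    """Insert keys in order; return (root, steps taken by the last insert)."""
    root, last_steps = None, 0
    for key in keys:
        root, last_steps = insert(root, key)
    return root, last_steps


# Keys arriving in sorted order produce a chain, like a sorted linked list.
_, chain_steps = build(range(1, 16))
# The same 15 keys in a level-by-level order produce a full, balanced tree.
_, balanced_steps = build([8, 4, 12, 2, 6, 10, 14, 1, 3, 5, 7, 9, 11, 13, 15])
print(chain_steps, balanced_steps)   # 14 versus 3 for the final insert of 15
```

With 15 keys, the last insert walks past 14 nodes in the chain but only 3 in the balanced tree, which is the N versus Log N gap in miniature.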
Comparing Data Structures and Methods

| Data Structure | Unsorted L List | Sorted L List | Unsorted Array | Sorted Array | Binary Tree | BST | F&B BST |
|---|---|---|---|---|---|---|---|
| Traverse | N | N | N | N | N | N | N |
| Search | N | N | N | Log N | N | N | Log N |
| Insert | 1 | N | 1 | N | 1 | N | Log N |

Two Sorting Algorithms
• Bubblesort
– Brute-force method of sorting
– Loop inside of a loop
• Mergesort
– Divide and conquer approach
– Recursively call, splitting in half
– Merge sorted halves together

Bubblesort Review
Bubblesort works by comparing and swapping values in a list

| Index | 1 | 2 | 3 | 4 | 5 | 6 |
|---|---|---|---|---|---|---|
| Before first pass | 77 | 42 | 35 | 12 | 101 | 5 |
| After first pass | 42 | 35 | 12 | 77 | 5 | 101 |

Largest value correctly placed

    procedure Bubblesort(A isoftype in/out Arr_Type)
      to_do, index isoftype Num
      to_do <- N - 1
      loop
        exitif(to_do = 0)
        index <- 1
        loop
          exitif(index > to_do)
          if(A[index] > A[index + 1]) then
            Swap(A[index], A[index + 1])
          endif
          index <- index + 1
        endloop
        to_do <- to_do - 1
      endloop
    endprocedure // Bubblesort

Analysis of Bubblesort
• How many comparisons in the inner loop?
– to_do goes from N-1 down to 1, thus
– (N-1) + (N-2) + (N-3) + ... + 2 + 1
– Average: N/2 for each “pass” of the outer loop.
• How many “passes” of the outer loop?
– N - 1

Bubblesort Complexity
Look at the relationship between the two loops:
– Inner is nested inside outer
– Inner will be executed for each iteration of outer
Therefore the complexity is:
O((N-1)*(N/2)) = O(N^2/2 - N/2) = O(N^2)

Graphically
[Figure: graphical view of the comparison count, with sides labelled N-1, illustrating O(N^2)]

O(N^2) Runtime Example
Assume you are sorting 250,000,000 items
N = 250,000,000
N^2 = 6.25 x 10^16
If you can do one operation per nanosecond (10^-9 sec) which is fast!
It will take 6.25 x 10^7 seconds
So (6.25 x 10^7) / (60 x 60 x 24 x 365) = 1.98 years

Mergesort
[Figure: mergesort of 98 23 45 14 6 67 33 42; Log N levels of splitting into halves, then Log N levels of merging, ending with 6 14 23 33 42 45 67 98]

Analysis of Mergesort
Phase I
– Divide the list of N numbers into two lists of N/2 numbers
– Divide those lists in half until each list is size 1
Log N steps for this stage.
Phase II
– Build sorted lists from the decomposed lists
– Merge pairs of lists, doubling the size of the sorted lists each time
Log N steps for this stage.

Analysis of the Merging
    98 23 45 14 6 67 33 42
    (four merges)
    [23 98] [14 45] [6 67] [33 42]
    (two merges)
    [14 23 45 98] [6 33 42 67]
    (one merge)
    [6 14 23 33 42 45 67 98]

Mergesort Complexity
Each of the N numerical values is compared or copied during each pass
– The total work for each pass is O(N).
– There are a total of Log N passes
Therefore the complexity is:
O(Log N + N * Log N) = O(N * Log N)
– Log N: break apart
– N * Log N: merging

O(N Log N) Runtime Example
Assume same 250,000,000 items
N * Log(N) = 250,000,000 x 8.4 = 2,099,485,002 (taking Log base 10)
With the same processor as before: about 2 seconds
(With Log base 2 the factor is about 27.9, giving roughly 7 seconds.)

Summary
• You now have the O() for basic methods on varied data structures.
• You can combine these in more complex situations.
• Break algorithm down into “known” pieces
• Identify relationships between pieces
– Sequential is additive
– Nested (loop/recursion) is multiplicative
• Drop constants
• Keep only dominant factor for each variable

Questions?

Reasonable vs. Unreasonable Algorithms

Reasonable vs. Unreasonable
Reasonable algorithms have polynomial factors
– O(Log N)
– O(N)
– O(N^K) where K is a constant
Unreasonable algorithms have exponential factors
– O(2^N)
– O(N!)
– O(N^N)

Algorithmic Performance Thus Far
• Some examples thus far:
– O(1) Insert to front of linked list
– O(N) Simple/Linear Search
– O(N Log N) MergeSort
– O(N^2) BubbleSort
• But it could get worse:
– O(N^5), O(N^2000), etc.
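The gap between the O(N Log N) and O(N^2) entries in that list can be made concrete by counting comparisons. The following is a minimal Python sketch, assuming a 0-indexed list instead of the 1-indexed array of the course pseudocode; the function names and the comparison counters are additions for illustration only.

```python
def bubblesort(values):
    """Return (sorted copy, comparisons), mirroring the to_do / index loops."""
    a = list(values)
    comparisons = 0
    for to_do in range(len(a) - 1, 0, -1):   # to_do runs N-1 down to 1
        for i in range(to_do):               # one pass of the inner loop
            comparisons += 1
            if a[i] > a[i + 1]:
                a[i], a[i + 1] = a[i + 1], a[i]
    return a, comparisons


def mergesort(values):
    """Return (sorted copy, comparisons) using split-in-half and merge."""
    if len(values) <= 1:
        return list(values), 0
    mid = len(values) // 2
    left, left_count = mergesort(values[:mid])
    right, right_count = mergesort(values[mid:])
    merged, comparisons = [], left_count + right_count
    i = j = 0
    while i < len(left) and j < len(right):
        comparisons += 1
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])                  # copy whichever half remains
    merged.extend(right[j:])
    return merged, comparisons


data = [98, 23, 45, 14, 6, 67, 33, 42]
print(bubblesort(data))   # 28 comparisons, i.e. N(N-1)/2 for N = 8
print(mergesort(data))    # at most N * Log2(N) = 24 comparisons
```

For eight items the difference is small; the runtime examples above show how quickly it widens as N grows.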
An O(N^5) Example
For N = 256
N^5 = 256^5 = 1,100,000,000,000
If we had a computer that could execute a million instructions per second…
• 1,100,000 seconds = 12.7 days to complete
But it could get worse…

The Power of Exponents
A rich king and a wise peasant…

The Wise Peasant’s Pay

| Day (N) | Pieces of Grain |
|---|---|
| 1 | 2 |
| 2 | 4 |
| 3 | 8 |
| 4 | 16 |
| ... | 2^N |
| 63 | 9,223,000,000,000,000,000 |
| 64 | 18,450,000,000,000,000,000 |

How Bad is 2^N?
• Imagine being able to grow a billion (1,000,000,000) pieces of grain a second…
• It would take
– 585 years to grow enough grain just for the 64th day
– Over a thousand years to fulfill the peasant’s request!

So the King cut off the peasant’s head.

The Towers of Hanoi
[Figure: three pegs labelled A, B, C with a stack of rings on one peg]
Goal: Move stack of rings to another peg
– Rule 1: May move only 1 ring at a time
– Rule 2: May never have larger ring on top of smaller ring

Towers of Hanoi: Solution
[Figure: original state followed by Move 1 through Move 7 for 3 rings]

Towers of Hanoi - Complexity
For 3 rings we have 7 operations.
In general, the cost is 2^N - 1 = O(2^N)
Each time we increment N, we double the amount of work.
This grows incredibly fast!

Towers of Hanoi (2^N) Runtime
For N = 64
2^N = 2^64 = 18,450,000,000,000,000,000
If we had a computer that could execute a million instructions per second…
• It would take 584,000 years to complete
But it could get worse…

The Bounded Tile Problem
Match up the patterns in the tiles.
Can it be done, yes or no?
[Figure: matching tiles]

Tiling a 5x5 Area
25 available tiles remaining, then 24, then 23, then 22, and so on down to 2.

Analysis of the Bounded Tiling Problem
Tile a 5 by 5 area (N = 25 tiles)
1st location: 25 choices
2nd location: 24 choices
And so on…
Total number of arrangements:
– 25 * 24 * 23 * 22 * 21 * .... * 3 * 2 * 1
– 25! (Factorial) = 15,500,000,000,000,000,000,000,000
Bounded Tiling Problem is O(N!)

Tiling (N!) Runtime
For N = 25
25! = 15,500,000,000,000,000,000,000,000
If we could “place” a million tiles per second…
• It would take about 490 billion years to complete
Why not a faster computer?

A Faster Computer
• If we had a computer that could execute a trillion instructions per second (a million times faster than our MIPS computer)…
• 5x5 tiling problem would take about 490,000 years
• 64-ring Tower of Hanoi problem would take 213 days
Why not an even faster computer!

The Fastest Computer Possible?
• What if:
– Instructions took ZERO time to execute
– CPU registers could be loaded at the speed of light
• These algorithms are still unreasonable!
• The speed of light is only so fast!

Where Does this Leave Us?
• Clearly algorithms have varying runtimes.
• We’d like a way to categorize them:
– Reasonable, so it may be useful
– Unreasonable, so why bother running

Performance Categories of Algorithms

| Category | Name | O() |
|---|---|---|
| Polynomial | Sub-linear | O(Log N) |
| Polynomial | Linear | O(N) |
| Polynomial | Nearly linear | O(N Log N) |
| Polynomial | Quadratic | O(N^2) |
| Exponential | | O(2^N) |
| Exponential | | O(N!) |
| Exponential | | O(N^N) |

Reasonable vs. Unreasonable
Reasonable algorithms
• May be usable depending upon the input size
Unreasonable algorithms
• Are impractical, though useful to theorists
• Demonstrate need for approximate solutions
Remember we’re dealing with large N (input size)

Two Categories of Algorithms
[Figure: runtime (log scale, 10 up to 10^35) against size of input N (2 to 1024); N^N and 2^N fall in the Unreasonable region, N^5 and N in the Reasonable region, with a “Don’t Care!” region at small N]

Summary
• Reasonable algorithms feature polynomial factors in their O() and may be usable depending upon input size.
• Unreasonable algorithms feature exponential factors in their O() and have no practical utility.

Questions?
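The 2^N - 1 move count for the Towers of Hanoi, and the runtime estimate that follows from it, can be reproduced with a short recursive sketch. This is an illustrative Python version under stated assumptions: the peg labels A, B, C follow the slide, while the function name `hanoi`, its parameters, and the 365-day year are choices made here.

```python
def hanoi(n, source="A", target="C", spare="B", moves=None):
    """Return the list of (from_peg, to_peg) moves for n rings."""
    if moves is None:
        moves = []
    if n == 0:
        return moves
    hanoi(n - 1, source, spare, target, moves)   # clear the n-1 smaller rings
    moves.append((source, target))               # move the largest ring
    hanoi(n - 1, spare, target, source, moves)   # restack the smaller rings
    return moves


for rings in range(1, 6):
    print(rings, len(hanoi(rings)))              # 1, 3, 7, 15, 31 moves

# Runtime estimate for 64 rings at a million moves per second.
SECONDS_PER_YEAR = 60 * 60 * 24 * 365
years = (2**64 - 1) / 1_000_000 / SECONDS_PER_YEAR
print(round(years))                              # roughly 585,000 years
```

Each extra ring adds two recursive calls on n - 1 rings plus one move, which is where the doubling comes from.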
Using O() Analysis in Design

Air Traffic Control
[Figure: air traffic control display, with labels for conflict alert and for coast, add, delete]

Problem Statement
• What data structure should be used to store the aircraft records for this system?
• Normal operations conducted are:
– Data Entry: adding new aircraft entering the area
– Radar Update: input from the antenna
– Coast: global traversal to verify that all aircraft have been updated [coast for 5 cycles, then drop]
– Query: controller requesting data about a specific aircraft by location
– Conflict Analysis: make sure no two aircraft are too close together

Air Traffic Control System

| # | Program | Algorithm | Freq |
|---|---|---|---|
| 1 | Data Entry / Exit | Insert | 15 |
| 2 | Radar Data Update | N*Search | 12 |
| 3 | Coast / Drop | Traverse | 60 |
| 4 | Query | Search | 1 |
| 5 | Conflict Analysis | Traverse*Search | 12 |

| Data Structure | #1 | #2 | #3 | #4 | #5 |
|---|---|---|---|---|---|
| LLU | 1 | N^2 | N | N | N^2 |
| LLS | N | N^2 | N | N | N^2 |
| AU | 1 | N^2 | N | N | N^2 |
| AS | N | N Log N | N | Log N | N Log N |
| BT | 1 | N^2 | N | N | N^2 |
| F/B BST | Log N | N Log N | N | Log N | N Log N |

LLU = unsorted linked list, LLS = sorted linked list, AU = unsorted array, AS = sorted array, BT = binary tree, F/B BST = full and balanced BST.

Questions?
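One possible way to put the two tables together is to weight each operation's cost by its frequency and compare the totals. The slides do not spell out this calculation, so the weighting scheme, the sample size N = 100, the use of Log base 2, and every name in the sketch below are assumptions made for illustration.

```python
import math


def const(n):
    return 1


def linear(n):
    return n


def log(n):
    return math.log2(n)


def n_log_n(n):
    return n * math.log2(n)


def square(n):
    return n * n


# Frequencies for programs #1..#5, taken from the Freq column above.
FREQ = [15, 12, 60, 1, 12]

# Cost functions for programs #1..#5, one row per data structure.
COSTS = {
    "LLU": [const, square, linear, linear, square],
    "LLS": [linear, square, linear, linear, square],
    "AU": [const, square, linear, linear, square],
    "AS": [linear, n_log_n, linear, log, n_log_n],
    "BT": [const, square, linear, linear, square],
    "F/B BST": [log, n_log_n, linear, log, n_log_n],
}


def weighted_cost(name, n):
    """Sum of frequency x cost over the five programs (assumed weighting)."""
    return sum(freq * cost(n) for freq, cost in zip(FREQ, COSTS[name]))


def ranking(n):
    """Data structures ordered from cheapest to most expensive at size n."""
    return sorted(COSTS, key=lambda name: weighted_cost(name, n))


for name in ranking(100):                 # N = 100 aircraft is an assumed size
    print(f"{name:8s} {weighted_cost(name, 100):12.0f}")
```

Under these assumptions the structures with Log N search come out cheapest, because the N*Search and Traverse*Search programs dominate the total.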