Structure from Motion

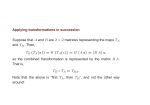

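Eigen Decomposition

Based on the slides by Mani Thomas. Modified and extended by Longin Jan Latecki.

Introduction: eigenvalue decomposition; physical interpretation of eigenvalues/eigenvectors.

What are eigenvalues?

Given a matrix $A$, a nonzero vector $x$ is an eigenvector and $\lambda$ is the corresponding eigenvalue if $Ax = \lambda x$. $A$ must be square, and the determinant of $A - \lambda I$ must be equal to zero:

$$Ax - \lambda x = 0 \iff (A - \lambda I)x = 0$$

The trivial solution is $x = 0$. A nontrivial solution exists only when $\det(A - \lambda I) = 0$.

Are eigenvectors unique?

No. If $x$ is an eigenvector with eigenvalue $\lambda$, then $\alpha x$ (for any scalar $\alpha \neq 0$) is also an eigenvector with the same eigenvalue $\lambda$:

$$A(\alpha x) = \alpha(Ax) = \alpha(\lambda x) = \lambda(\alpha x)$$

Calculating the eigenvectors/values

Expand $\det(A - \lambda I) = 0$ for a 2 × 2 matrix:

$$\det(A - \lambda I) = \det\left(\begin{bmatrix} a_{11} & a_{12} \\ a_{21} & a_{22} \end{bmatrix} - \lambda \begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix}\right) = \det\begin{bmatrix} a_{11} - \lambda & a_{12} \\ a_{21} & a_{22} - \lambda \end{bmatrix} = 0$$

$$(a_{11} - \lambda)(a_{22} - \lambda) - a_{12}a_{21} = 0$$

$$\lambda^2 - (a_{11} + a_{22})\lambda + (a_{11}a_{22} - a_{12}a_{21}) = 0$$

For a 2 × 2 matrix, this is a simple quadratic equation with two solutions (possibly complex):

$$\lambda = \frac{(a_{11} + a_{22}) \pm \sqrt{(a_{11} + a_{22})^2 - 4(a_{11}a_{22} - a_{12}a_{21})}}{2}$$

This "characteristic equation" is solved for $\lambda$; each eigenvalue is then substituted back into $(A - \lambda I)x = 0$ to solve for the eigenvector $x$.

Eigenvalue example

Consider

$$A = \begin{bmatrix} 1 & 2 \\ 2 & 4 \end{bmatrix}$$

$$\lambda^2 - (a_{11} + a_{22})\lambda + (a_{11}a_{22} - a_{12}a_{21}) = 0$$

$$\lambda^2 - (1 + 4)\lambda + (1 \cdot 4 - 2 \cdot 2) = 0 \implies \lambda^2 - 5\lambda = 0 \implies \lambda = 0,\ 5$$

The corresponding eigenvectors can be computed as

$$\lambda = 0: \quad \begin{bmatrix} 1 - 0 & 2 \\ 2 & 4 - 0 \end{bmatrix}\begin{bmatrix} x \\ y \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \end{bmatrix} \implies x + 2y = 0, \quad 2x + 4y = 0$$

$$\lambda = 5: \quad \begin{bmatrix} 1 - 5 & 2 \\ 2 & 4 - 5 \end{bmatrix}\begin{bmatrix} x \\ y \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \end{bmatrix} \implies -4x + 2y = 0, \quad 2x - y = 0$$

For $\lambda = 0$, one possible solution is $x = (2, -1)$. For $\lambda = 5$, one possible solution is $x = (1, 2)$.

For more information: Demos in Linear Algebra by G. Strang, http://web.mit.edu/18.06/www/

Let $\sigma(A)$ be the set of all eigenvalues of $A$. Then $\sigma(A) = \sigma(A^T)$, where $A^T$ is the transpose of $A$.

Proof: The matrix $(A - \lambda I)^T$ is the same as the matrix $A^T - \lambda I$, since the identity matrix is symmetric. Thus:

$$\det(A^T - \lambda I) = \det\left((A - \lambda I)^T\right) = \det(A - \lambda I)$$

The last equality follows from the fact that a matrix and its transpose have the same determinant. Hence $A$ and $A^T$ have the same characteristic polynomial, and therefore the same eigenvalues.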
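
As a quick check of the 2 × 2 derivation and the worked example, the characteristic-equation route can be sketched in plain Python. This is a minimal illustration, not a general eigensolver: the helper names `eigenvalues_2x2`, `eigenvector_2x2` and `matvec` are hypothetical, the matrix is passed as its four entries `a11, a12, a21, a22`, and the `TOL` zero threshold is an arbitrary assumption.

```python
import cmath
import math

TOL = 1e-12  # assumed threshold for treating a value as zero


def eigenvalues_2x2(a11, a12, a21, a22):
    """Solve lambda^2 - (a11 + a22) lambda + (a11 a22 - a12 a21) = 0."""
    trace = a11 + a22
    det = a11 * a22 - a12 * a21
    disc = trace * trace - 4 * det
    # A negative discriminant gives a complex-conjugate pair of eigenvalues
    root = math.sqrt(disc) if disc >= 0 else cmath.sqrt(disc)
    return (trace + root) / 2, (trace - root) / 2


def eigenvector_2x2(a11, a12, a21, a22, lam):
    """Return one nonzero solution of (A - lam I) x = 0."""
    # Row 1: (a11 - lam) x + a12 y = 0 is satisfied by x = a12, y = lam - a11
    if abs(a12) > TOL or abs(lam - a11) > TOL:
        return (a12, lam - a11)
    # Row 1 vanished; row 2: a21 x + (a22 - lam) y = 0 gives x = lam - a22, y = a21
    if abs(a21) > TOL or abs(a22 - lam) > TOL:
        return (lam - a22, a21)
    # A - lam I is the zero matrix, so every nonzero vector is an eigenvector
    return (1.0, 0.0)


def matvec(a11, a12, a21, a22, v):
    """Compute A v for a 2 x 2 matrix A."""
    return (a11 * v[0] + a12 * v[1], a21 * v[0] + a22 * v[1])


if __name__ == "__main__":
    A = (1, 2, 2, 4)  # a11, a12, a21, a22 from the eigenvalue example
    for lam in eigenvalues_2x2(*A):
        v = eigenvector_2x2(*A, lam)
        print("lambda =", lam, "x =", v, "Ax =", matvec(*A, v))
```

For the example matrix this sketch returns the eigenvector (2, 4) for λ = 5 rather than (1, 2); the two differ only by a scalar factor, which is the non-uniqueness described earlier. Passing the entries in transposed order, `eigenvalues_2x2(a11, a21, a12, a22)`, gives the same eigenvalues, since the trace and determinant do not change.
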

Physical interpretation

Consider a covariance matrix $A$, i.e., $A = \frac{1}{n} S S^T$ for some mean-centered data matrix $S$:

$$A = \begin{bmatrix} 1 & 0.75 \\ 0.75 & 1 \end{bmatrix}, \qquad \lambda_1 = 1.75, \quad \lambda_2 = 0.25$$

The error ellipse has its major axis along the eigenvector of the larger eigenvalue and its minor axis along the eigenvector of the smaller eigenvalue.

[Figure: data plotted on Original Variable A and Original Variable B, with principal component axes PC 1 and PC 2 overlaid]

The principal components are the orthogonal directions of greatest variance in the data. Projections along PC 1 (the first principal component) discriminate the data most along any one axis.

The first principal component is the direction of greatest variability (covariance) in the data. The second is the next orthogonal (uncorrelated) direction of greatest variability. So first remove all the variability along the first component, then find the next direction of greatest variability, and so on. Thus the eigenvectors give the directions of data variance in decreasing order of their eigenvalues.

For more information: See Gram-Schmidt Orthogonalization in G. Strang's lectures.
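
The covariance-and-eigenvector picture can be sketched for two variables in plain Python (no NumPy). This is an illustrative sketch under stated assumptions: the function names are hypothetical, the data are two equal-length lists of samples for Original Variable A and Original Variable B, and the covariance uses the 1/n normalization of $A = \frac{1}{n} S S^T$. Because a symmetric 2 × 2 matrix has orthogonal eigenvectors, PC 2 is taken as the unit vector perpendicular to PC 1; in higher dimensions a general symmetric eigensolver would be needed instead.

```python
import math


def covariance_2d(xs, ys):
    """A = (1/n) S S^T, with S the mean-centered samples of two variables."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sx = [x - mx for x in xs]
    sy = [y - my for y in ys]
    sxx = sum(a * a for a in sx) / n
    syy = sum(b * b for b in sy) / n
    sxy = sum(a * b for a, b in zip(sx, sy)) / n
    return sxx, sxy, syy


def principal_components_2d(sxx, sxy, syy):
    """Eigen-decompose the symmetric matrix [[sxx, sxy], [sxy, syy]].

    Returns [(lambda_1, pc_1), (lambda_2, pc_2)] with lambda_1 >= lambda_2
    and unit-length, mutually orthogonal eigenvectors.
    """
    mean = (sxx + syy) / 2
    radius = math.hypot((sxx - syy) / 2, sxy)
    lam1, lam2 = mean + radius, mean - radius
    if abs(sxy) > 1e-12:
        v = (sxy, lam1 - sxx)  # solves the first row of (A - lam1 I) v = 0
    elif sxx >= syy:
        v = (1.0, 0.0)  # already diagonal: the coordinate axes are eigenvectors
    else:
        v = (0.0, 1.0)
    norm = math.hypot(v[0], v[1])
    pc1 = (v[0] / norm, v[1] / norm)
    # For a symmetric 2 x 2 matrix the second eigenvector is orthogonal to the first
    pc2 = (-pc1[1], pc1[0])
    return [(lam1, pc1), (lam2, pc2)]


def variance_along(xs, ys, u):
    """Variance (1/n normalization) of the data projected onto unit vector u."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    proj = [(x - mx) * u[0] + (y - my) * u[1] for x, y in zip(xs, ys)]
    return sum(p * p for p in proj) / n


if __name__ == "__main__":
    # The covariance matrix from the slide: [[1, 0.75], [0.75, 1]]
    for lam, pc in principal_components_2d(1.0, 0.75, 1.0):
        print("eigenvalue", lam, "direction", pc)
```

On the slide's matrix this returns eigenvalues 1.75 and 0.25, with PC 1 along (1, 1)/√2 and PC 2 along (−1, 1)/√2. For any data set, `variance_along` can be used to confirm that the variance of the data projected onto each principal component equals its eigenvalue.
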