* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download DOE06Proposal25Sep2006CMS

Data analysis wikipedia , lookup

Super-Kamiokande wikipedia , lookup

Business intelligence wikipedia , lookup

Strangeness production wikipedia , lookup

Future Circular Collider wikipedia , lookup

Large Hadron Collider wikipedia , lookup

ATLAS experiment wikipedia , lookup

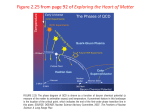

DOE/ER/40712

1. INTRODUCTION

1.1. OVERVIEW

We propose to investigate the hot and extraordinarily dense new form of matter recently observed in high-energy nuclear collisions at the Relativistic Heavy Ion Collider (RHIC) at Brookhaven National Laboratory. We will do this using the PHENIX detector, with which we study the production and properties of this partonic state of matter. Additionally, we will look for evidence of chiral symmetry restoration in these collisions. We also propose to carry these investigations to the next level using the Compact Muon Solenoid (CMS) detector at the Large Hadron Collider (LHC) at CERN, which we anticipate will enable us to study quark-gluon plasma (QGP) production in a new regime. With a factor of 30 increase in the center-of-mass energy from RHIC to the LHC, the system should reach an energy density about 20 times higher and should be longer lived.

The faculty members of the Relativistic Heavy Ion group at Vanderbilt are Professor Charles Maguire, Professor Victoria Greene (promoted to full professor in spring 2006), and Assistant Professor Julia Velkovska. The group currently also includes postdoctoral fellows Shengli Huang, Ivan Danchev, and Michael Issah and graduate students Ron Belmont, Brian Love, Dillon Roach, and Hugo Valle. We also work with undergraduate students, advising them in directed or independent study or as summer students. We typically have three undergraduates during each semester and during the summer. During the last three years we have worked with undergraduates Brian Love (now a graduate student with the group), Judson Wallace, Alexander Khasumski, Michael Mendenhall (now a graduate student at MIT), Robele Bekele, Evan Leitner, William Brown, Jeff Garcia, and Theodore Brasoveanu (now a graduate student at Princeton). Currently, Evan Leitner and Jeff Garcia are working with the group.
The Vanderbilt group is a charter member of the PHENIX collaboration, having constructed major components of the baseline hardware and software systems. Members of the group were active in the analysis teams that produced the first PHENIX research publications indicating the production of a new state of matter at RHIC, and in the continuing study of its properties. In the past three years, the present group at Vanderbilt has nearly completed an upgrade to the PHENIX detector and its data analysis capabilities. This addition to PHENIX will provide crucial measurements of identified charged particles, allowing further detailed studies of the matter produced in relativistic heavy ion collisions.

In 2006, the Vanderbilt group formally joined the CMS heavy ion collaboration. We expect to contribute to developing analyses and triggers specific to the heavy ion program and to the simulations needed for these analyses. The current accelerator schedule includes a pilot low-luminosity Pb+Pb run at √sNN = 5.5 TeV by 2008, and a full-luminosity Pb+Pb run is expected in 2009. Thus, the first heavy ion data from CMS will be available on the time scale of this proposal. The Vanderbilt group is preparing to take an active part in all aspects of the exploration of this new regime of heavy ion collisions at ultra-relativistic energies.

1.2. SOFTWARE AND COMPUTING

Since the beginning of RHIC data acquisition, the Vanderbilt group has had management responsibility in the PHENIX collaboration for coordinating the major simulation projects carried out in support of all the real data analyses. Hundreds of terabytes of simulated data have been generated using several large computer farms at PHENIX institutions, including the one at Vanderbilt. In 2005 and 2006, the majority of such projects were carried out by Vanderbilt personnel, primarily graduate students supported on the grant.
Automated database software has been written by these students to keep track of, and retrieve, the tens of thousands of files generated thus far. We intend to continue and expand this work on behalf of the PHENIX collaboration.

For RHIC Run 6, the Vanderbilt group assumed responsibility for the real-time analysis of PHENIX Level2 triggered data. These triggered data contain the events with J/ψ and light vector mesons recorded by the PHENIX detector. By reconstructing the events in real time, we can provide immediate feedback to the PHENIX management and the RHIC accelerator group on the quality and physical significance of the data. This work had previously been carried out at ORNL but was halted because of resource limitations. Before the start of Run 6, the Vanderbilt group assembled the personnel, hardware and software infrastructure needed to continue this vital effort. We were fortunate that the management of ACCRE, which is eager to have its farm involved in the reconstruction of data from large physics experiments such as PHENIX, donated its services. The ACCRE staff put in place the Grid software and server that received the data from the PHENIX counting house just after they were acquired at RHIC, and the data were then reconstructed within hours of being taken. As an example of the quality of this process, Figure 1 shows the reconstructed π0 and η mesons in different bins of transverse momentum. A clear η peak is observed in the γγ invariant mass distributions out to very high pT ~ 20 GeV/c. The Run 6 Level2 reconstruction effort for PHENIX at Vanderbilt was an unqualified success. The work even attracted the notice of the Science Grid This Week newsletter (www.interactions.org/sgtw/2006/0503/phenix_more.html, May 3, 2006), which highlighted the use of Grid software in a RHIC experiment at the DOE's Brookhaven National Laboratory.
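For readers outside the field, the π0 and η peaks in Figure 1 come from pairing photon clusters and computing the invariant mass of each pair. The following is a minimal sketch, assuming massless photons described by their measured energies and direction vectors; the function names are hypothetical and this is not the PHENIX reconstruction code.

```python
import math

def cos_angle(u, v):
    """Cosine of the opening angle between two 3-vectors (e.g. cluster directions)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm

def diphoton_mass(e1, e2, cos_opening):
    """Invariant mass (GeV/c^2) of two massless photons with energies e1, e2 (GeV).

    For massless photons m^2 = 2 * E1 * E2 * (1 - cos(theta_12)),
    where theta_12 is the opening angle between the two photons.
    """
    m2 = 2.0 * e1 * e2 * (1.0 - cos_opening)
    return math.sqrt(max(m2, 0.0))  # guard against tiny negative rounding errors

# Example: two 0.0675 GeV photons emitted back to back (a pi0 decaying at rest)
m = diphoton_mass(0.0675, 0.0675, cos_angle((0.0, 0.0, 1.0), (0.0, 0.0, -1.0)))
```

In an analysis, all photon pairs in an event are histogrammed in bins of pair pT; the π0 (about 0.135 GeV/c²) and η (about 0.548 GeV/c²) then appear as peaks above a combinatorial background.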
As with the simulation projects, we intend to expand the real data reconstruction work for PHENIX in the coming three-year cycle of our group's research program.

Figure 1: π0→γγ and η→γγ real-time reconstruction of Level2 filtered data at the Vanderbilt farm. A clear η peak is observed out to pT ~ 20 GeV/c.

2. GOALS FOR THE NEXT THREE YEARS

The goal of the RHI group at Vanderbilt is to continue the investigation of the sQGP (strongly interacting quark-gluon plasma) at RHIC energies via precise measurements using rare probes, together with systematic studies of phenomena accessible with more abundant probes such as light hadrons. We also plan to extend the investigation of the QGP to higher energies through our participation in CMS. Our proposed physics program is described in detail elsewhere in this proposal. We will continue the extensive service work for PHENIX, which entails the maintenance and operation of the PHENIX pad chambers, the commissioning, maintenance and operation of the PHENIX TOF.W system, and the real-time reconstruction of Level2 filtered data (Section 1.2). Service work for CMS will include the development of triggers, data analyses and simulations specific to the heavy ion program, as well as data reconstruction at the Vanderbilt ACCRE farm (Section 3.2). To carry out the proposed research program, we expect to continue at our present strength of three faculty members, three research associates, four graduate students and two undergraduate students.

2.1. CMS

The CMS detector¹ will provide unique capabilities for focused measurements that exploit the new opportunities unfolding at the LHC. These measurements will directly address the fundamental scientific questions in the field of high-density QCD.
The detector provides unparalleled coverage for tracking and for electromagnetic and hadronic calorimetry, combined with precise muon identification. The CMS detector was designed to provide tracking and calorimetry with high resolution and granularity over the full azimuthal angle and over a very large range in rapidity. The primary emphasis is on detecting muons, electrons, photons, and jets, but significant capability exists for other heavy ion reaction products. The various detector elements can be used to identify a large array of particle species. The electronics and data acquisition systems allow a very fast initial readout as well as complex multilevel triggering.

¹ Ballintijn et al., Heavy Ion Physics at the LHC with the Compact Muon Solenoid Detector, proposal to the U.S. Department of Energy, 2 July 2006.

Figure 2: A cutaway view of the CMS detector.

A three-dimensional cutaway view of the CMS detector is shown in Figure 2. The most prominent element is the superconducting solenoidal coil, which is roughly 13 m long and 6 m in diameter and provides a 4 T field throughout the inner portion of the detector. The inner region holds the silicon tracking system and the electromagnetic and hadronic calorimeters. Additional information about particles at high pseudorapidity is provided by a calorimeter located near the beam line about 11 m from the interaction point. The regions outside the coil, as well as in the forward and backward directions, are filled with tracking detectors and absorbers for detecting muons. The central tracking covers |η| < 2.5, the central calorimeters cover |η| < 3, and the forward calorimeters extend the coverage to 3 < |η| < 5. Muons can be tracked and identified within |η| < 2.4, roughly the same region covered by the inner Si tracker.
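Pseudorapidity is defined from the polar angle θ relative to the beam axis as η = −ln tan(θ/2). The short sketch below converts between η and θ and reports which subsystems nominally cover a given η. It uses the rounded tracker, central calorimeter and muon ranges quoted above and assumes roughly 3 < |η| < 5 for the forward calorimeters; the dictionary and function names are illustrative only.

```python
import math

# Approximate |eta| ranges (rounded); the forward-calorimeter range is an assumption.
COVERAGE = {
    "silicon tracker": (0.0, 2.5),
    "central calorimeters": (0.0, 3.0),
    "forward calorimeters": (3.0, 5.0),
    "muon system": (0.0, 2.4),
}

def eta_from_theta(theta):
    """Pseudorapidity for polar angle theta (radians) measured from the beam axis."""
    return -math.log(math.tan(theta / 2.0))

def theta_from_eta(eta):
    """Inverse relation: theta = 2 * atan(exp(-eta))."""
    return 2.0 * math.atan(math.exp(-eta))

def covering(eta):
    """Names of subsystems whose nominal range lo <= |eta| < hi contains eta."""
    a = abs(eta)
    return [name for name, (lo, hi) in COVERAGE.items() if lo <= a < hi]
```

For example, the tracker edge at |η| = 2.5 corresponds to a polar angle of roughly 9.4° from the beam line, which shows how strongly forward the quoted coverage extends.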
Very far from the interaction point (not shown in the figure), the experiment includes a suite of detectors designed to study particles emitted at very high pseudorapidity. The CASTOR calorimeter covers 5.2 < |η| < 6.6, and the TOTEM Roman pots extend this to 7 < |η| < 10. Finally, the Zero Degree Calorimeter sits 140 m from the interaction point, behind the first accelerator magnet. This detector is primarily sensitive to spectator neutrons from the colliding ions and is one of the major hardware contributions of the US heavy ion group.

2.2. PHYSICS WITH CMS

At the LHC, the energy densities of the thermalized matter are predicted to be 20 times higher than at RHIC, implying a doubling of the initial temperature². The higher densities of the produced partons result in more rapid thermalization and, consequently, the time spent in the quark-gluon plasma phase increases by almost a factor of three². These dramatically different conditions may allow the hot, dense system to reach the weakly interacting, ideal-gas quark-gluon plasma, or some altogether different state, in contrast to the strongly interacting plasma believed to be created at RHIC³. The CMS detector (see Section 2.1) is well suited to studying the properties of the produced matter via a variety of probes. Through our participation in PHENIX, the Vanderbilt group has developed expertise in the analysis of identified particle production, flow and jet correlations. These topics are of interest at the LHC too, and they can be studied with the CMS detector. When we joined the CMS collaboration, we specifically expressed interest in analyzing data on global observables, tagged jets and quarkonium production. The first heavy ion data from CMS will be available on the time scale of this proposal. For reference, Table 1 gives the projected run schedule at the LHC and the expected data samples. The physics goals of the Heavy Ion CMS collaboration are listed in Table 2.
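The link between a 20-fold increase in energy density and a doubling of the initial temperature can be seen from the scaling of the energy density of a thermalized, massless (ideal-gas) medium, ε ∝ T⁴. Treating the medium at both energies as such a gas is a simplifying assumption, but it reproduces the stated factor:

```latex
\varepsilon \propto T^{4}
\quad\Longrightarrow\quad
\frac{T_{0}^{\mathrm{LHC}}}{T_{0}^{\mathrm{RHIC}}}
  = \left(\frac{\varepsilon^{\mathrm{LHC}}}{\varepsilon^{\mathrm{RHIC}}}\right)^{1/4}
  = 20^{1/4} \approx 2.1
```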
Table 1: The projected run schedule at the LHC¹. Note that only the minimum bias events of interest to the heavy ion program are counted for the p+p runs.

² I. Vitev and M. Gyulassy, Phys. Rev. Lett. 89, 252301 (2002); I. Vitev, nucl-th/0308028.
³ T.D. Lee and M. Gyulassy, nucl-th/0403032.

Table 2: A preliminary schedule for the physics goals of the heavy ion program at the LHC¹ for the calendar years relevant to this proposal.

The Vanderbilt group has the potential to make significant contributions to the heavy ion physics program at CMS. In addition to the computing projects (simulations and data reconstruction) described in Section 3.2, we will develop analysis techniques relevant to our physics interests. Dr. Issah will devote half of his effort in 2007 to developing analysis tools and simulations for the study of identified particle flow (v1, v2 and v4) via the reaction plane and cumulant methods. Later he will work primarily on CMS, while still supervising the physics analyses of graduate students working on RHIC data. We expect that the new graduate student who replaces Mr. Valle after he completes his Ph.D. (expected in 2008) will work on CMS data.

One of the key questions for understanding the connection between heavy ion collisions and equilibrated QCD matter, as described by lattice QCD calculations, concerns the approach to thermal equilibrium in the early stages of heavy ion collisions. At RHIC, studies of elliptic flow have become the main experimental tool for addressing this question. Comparisons to hydrodynamic calculations suggest that at the highest energies, except in the most peripheral collisions, approximate thermal equilibrium is achieved and, correspondingly, that the produced medium is characterized by a very small shear viscosity. Theoretical efforts are underway to understand how equilibration is achieved and to quantify the connection between medium properties such as the viscosity and the experimental observables.
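As an illustration of the two flow methods named above, the sketch below computes, for a single event, the second-order event-plane angle and the observed v2 relative to it, plus a two-particle cumulant estimate built from the flow vector Q_n = Σ exp(inφ). It is a minimal sketch under stated assumptions: azimuthal angles are in radians, each event has at least two particles, no acceptance or event-plane resolution corrections are applied, and the function names are hypothetical rather than taken from PHENIX or CMS software.

```python
import cmath
import math

def q_vector(phis, n):
    """Flow vector Q_n = sum_j exp(i * n * phi_j) for one event."""
    return sum(cmath.exp(1j * n * phi) for phi in phis)

def event_plane(phis, n=2):
    """Event-plane angle Psi_n (between -pi/n and pi/n) estimated from Q_n."""
    return cmath.phase(q_vector(phis, n)) / n

def v_obs_event_plane(phis, psi, n=2):
    """Observed v_n = <cos n(phi - Psi_n)>, not corrected for EP resolution.

    In a real analysis Psi_n comes from a separate detector or subevent
    to avoid autocorrelation with the particles being averaged.
    """
    return sum(math.cos(n * (phi - psi)) for phi in phis) / len(phis)

def two_particle_correlation(phis, n=2):
    """Single-event <2> = (|Q_n|^2 - M) / (M (M - 1)), self-pairs removed."""
    m = len(phis)
    q = q_vector(phis, n)
    return (abs(q) ** 2 - m) / (m * (m - 1))

def vn_two_particle_cumulant(events, n=2):
    """v_n{2} = sqrt(<<2>>), with <<2>> weighted by the number of pairs per event."""
    num = den = 0.0
    for phis in events:
        m = len(phis)
        w = m * (m - 1)  # number of distinct ordered pairs in the event
        num += w * two_particle_correlation(phis, n)
        den += w
    c2 = num / den
    return math.sqrt(c2) if c2 > 0 else float("nan")
```

Because Q_n is summed once per event, the cumulant estimate costs O(M) per event instead of an explicit O(M²) loop over pairs; subtracting M removes each particle's correlation with itself.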
Measurements at the LHC will add crucial new information to the existing studies by measuring flow at significantly higher initial densities. This is particularly important because the elliptic flow data so far exhibit a steady rise with √sNN continuing up to the highest RHIC energies. As discussed elsewhere in this proposal, the flow of heavy quark flavors is the most promising tool for these studies, and CMS will be able to perform these measurements with high precision. The highly segmented, large-acceptance calorimeters will allow a very accurate determination of the reaction plane in each event. Measurements sensitive to heavy quark flavors, e.g. based on single muons not originating from the primary vertex, will be performed over a large rapidity range and out to higher pT than is accessible at RHIC. Probing the effect of the increased initial density will provide qualitatively new information and thereby clear tests of our understanding of the approach to equilibrium and of the properties of the QCD medium.

Another topic of interest to our group is the flavor dependence of jet quenching. For heavy quarks, gluon bremsstrahlung at small angles is predicted to be suppressed. This so-called dead-cone effect leads to a considerably smaller energy loss for heavy quarks than for light quarks. Experimentally, heavy quark jets are tagged by reconstructing the secondary vertices of the leading D or B mesons. In CMS, B meson decays can be tagged either in the semi-leptonic decay channel, by looking for high transverse momentum muons with displaced vertices, or in the hadronic decay channel, by reconstructing high-multiplicity secondary vertices. A cut on the lifetime of the secondary decay will be used to vary the relative contribution of charm and bottom quark jets in the sample. The high-precision muon and charged particle tracking of CMS will provide good tagging efficiency with low contamination from light quark and gluon jets.
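The idea behind the lifetime cut can be made concrete with the exponential decay law: a hadron of mass m, momentum p and proper decay length cτ travels on average L = (p/m)·cτ before decaying, and the probability that it decays beyond a cut L_cut is exp(−L_cut/L). The sketch below uses approximate PDG values for the D0 and B0 mesons and ignores detector resolution, the transverse projection of the decay length and the spread of hadron momenta, so it illustrates only the trend, not the CMS tagging performance; the names are hypothetical.

```python
import math

# Approximate PDG values: mass in GeV/c^2, proper decay length c*tau in micrometres.
HADRONS = {
    "D0": {"mass": 1.865, "ctau_um": 122.9},
    "B0": {"mass": 5.280, "ctau_um": 455.4},
}

def mean_decay_length_um(name, p_gev):
    """Mean lab-frame decay length L = beta*gamma*c*tau = (p/m)*c*tau, in micrometres."""
    h = HADRONS[name]
    return (p_gev / h["mass"]) * h["ctau_um"]

def survival_fraction(name, p_gev, cut_um):
    """Probability that the hadron decays beyond cut_um (exponential decay law)."""
    return math.exp(-cut_um / mean_decay_length_um(name, p_gev))

def b_to_d_survival_ratio(p_gev, cut_um):
    """How much more often a B0 than a D0 of equal momentum passes the cut."""
    return survival_fraction("B0", p_gev, cut_um) / survival_fraction("D0", p_gev, cut_um)
```

At p = 10 GeV/c the mean decay lengths come out at roughly 0.66 mm (D0) and 0.86 mm (B0). Because the B0 travels farther at equal momentum, tightening the cut raises the B/D ratio, which is how a lifetime cut shifts the sample toward bottom jets.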
Related measurements are the single D and B meson yields. These analyses require sophisticated triggering because of the high-multiplicity environment at the LHC. Prof. Velkovska plans to spend her sabbatical leave in 2008-2009 at CERN working on the triggering and analysis tools needed for these data. This grant proposal includes a request for one semester of support for Prof. Velkovska during this time, while Vanderbilt will contribute one semester of salary. Baryon/meson effects and hadronization can be studied at CMS using identified π0, η, φ, Λ, Ω, D and B hadrons and jet correlations. Prof. Velkovska will assess the feasibility of these studies at the pT relevant for hadronization physics in the coming year, and specific analyses will then be developed during her sabbatical leave.

CMS and the LHC environment present exciting opportunities for studying charmonium (J/ψ) and bottomonium (Υ) production and thus for revealing crucial information on the many-body dynamics of high-density QCD matter. Sequential suppression of heavy quarkonium production is generally agreed to be one of the most direct probes of quark-gluon plasma formation. Lattice QCD calculations of the heavy-quark potential indicate that color screening dissolves the ground-state charmonium and bottomonium states at temperatures of about 2Tc and 4Tc, respectively. Because of the enhanced yield of charm quarks, J/ψ formation by recombination may also become significant. While charmonium has been studied in heavy ion collisions at the SPS and at RHIC, bottomonium studies are only feasible at LHC energies. For these measurements, CMS has unique capabilities in terms of acceptance, resolution, and statistical power compared with any existing or planned heavy ion detector. Prof. Greene is interested in these measurements and plans to spend her sabbatical leave in 2009-2010 working on this subject. This grant proposal includes a request for one semester of support for Prof.
Greene during this time, while Vanderbilt will contribute one semester of salary.

3. COMPUTING PROJECTS FOR PHENIX AND CMS

3.1. PHENIX

As described in Section 1.2, the Vanderbilt group enlisted the assistance of the staff of the large computer farm at Vanderbilt (ACCRE) to process in near real time the PHENIX Level2 data acquired during the Run 6 p+p beam time in spring 2006. This effort was so successful that we are encouraged to expand upon it during the next three running periods at RHIC, and eventually to process data from the CMS experiment at the LHC.

Near-real-time reconstruction is the most critical aspect of these operations. Raw data are acquired into six buffer disk areas in the PHENIX counting house. In normal running, the data can remain in those buffer areas for only a few days, after which they must be sent to the High Performance Storage System (HPSS), a tape archiving system. Retrieving the data from the tape archives concurrently with data taking is neither feasible nor desirable, since the bandwidth of the HPSS should be dedicated to archiving newly arriving data from RHIC; doing otherwise would compromise the investment in the luminosity capability of the PHENIX detector. Hence, the data must be copied from the PHENIX counting house buffer disks to external resources where they can be reconstructed and evaluated as quickly as possible. We established before Run 6 that the data transfer rates from RHIC to Vanderbilt exceeded 50 Megabits/second, which made our reconstruction plans viable.

The Vanderbilt ACCRE⁴ (Advanced Computing Center for Research and Education) computer farm is operated by a consortium of biomedical, engineering, and physics groups. At present this farm offers 1500 CPUs, front-ended by 32 gateway nodes through which users submit jobs via the well-known PBS queuing system.

⁴ http://www.accre.vanderbilt.edu
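Whether transfers can keep pace with data taking is simple arithmetic on the link rate. The sketch below converts a sustained rate in Megabits/second into the volume that can be moved per day and the time needed to drain a buffer of a given size. It assumes decimal units (1 GB = 10⁹ bytes) and a perfectly sustained rate with no protocol overhead, so real throughput will be lower; the function names and the buffer size in the example are hypothetical.

```python
SECONDS_PER_DAY = 86_400

def gigabytes_per_day(rate_mbit_s):
    """Decimal gigabytes moved per day at a sustained rate given in Mbit/s."""
    bits = rate_mbit_s * 1e6 * SECONDS_PER_DAY
    return bits / 8 / 1e9

def hours_to_drain(buffer_gb, rate_mbit_s):
    """Hours needed to copy buffer_gb decimal gigabytes at rate_mbit_s."""
    seconds = buffer_gb * 1e9 * 8 / (rate_mbit_s * 1e6)
    return seconds / 3600

daily_volume = gigabytes_per_day(50)      # about 540 GB per day at 50 Mbit/s
drain_time = hours_to_drain(270, 50)      # a hypothetical 270 GB buffer takes 12 hours
```

At the quoted minimum of 50 Megabits/second, roughly 540 GB can be moved per day, which indicates the scale of buffer that a twice-daily transfer cycle can keep drained.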
The ACCRE facility was funded in 2004 with an $8.7M capital grant from the Vanderbilt University Provost's office as a means of ensuring the continued international prominence of the university's research programs. Since then, the NIH has provided $1.5M in additional funding for School of Medicine research programs that use ACCRE. Vanderbilt is an Internet2 member and currently has an OC12 (622 Megabits/second) external network connection. In late 2006, the University will add three 10 Gigabit/second connections, one of which will terminate at the Starlight hub in Chicago. This hub in turn will permit high-speed connectivity with Brookhaven National Laboratory, Fermilab, CERN, and other high-performance networks.

In the Run 6 data processing, more than 1,250 jobs were run over three months, with an average wait of less than 16 minutes before each job started. Effectively, the raw data files could be reconstructed as soon as they were transferred from the PHENIX counting house. In practice, the accumulated raw data buffers at RHIC were drained twice daily, followed by a corresponding twice-daily reconstruction cycle at ACCRE. There were sustained periods when 50 nodes were in continuous use. Since this was a proof-of-principle project, no extra cost was incurred by the grant. However, if this work is to continue in the next three RHIC running periods, in addition to our continuing simulation service work for PHENIX, new funding will be required. We asked the ACCRE staff to estimate the cost of doubling our Run 6 effort for RHIC Run 7 and continuing at that level for Run 8 and Run 9. This study determined that the total cost would be $49,800 per year for three years, split between hardware ($33,800) and operating costs ($16,000).
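The cost figures from the ACCRE study can be checked with a few lines of arithmetic. The sketch simply encodes the annual hardware and operating costs quoted above for the three running periods; the variable and function names are illustrative.

```python
# Annual costs from the ACCRE study quoted in the text (US dollars).
ANNUAL_COSTS = {"hardware": 33_800, "operations": 16_000}
YEARS = 3  # RHIC Runs 7, 8 and 9

def annual_total(costs):
    """Total cost per year across all categories."""
    return sum(costs.values())

def program_total(costs, years):
    """Total cost over the full multi-year request."""
    return annual_total(costs) * years

annual = annual_total(ANNUAL_COSTS)          # 49800 per year
total = program_total(ANNUAL_COSTS, YEARS)   # 149400 over three years
hardware_share = ANNUAL_COSTS["hardware"] / annual  # about two thirds of each year
```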
Operating costs at ACCRE are pro-rated according to the number of nodes allocated to a group and, in this case, include the dedicated use of 10 Terabytes of disk space during the RHIC running periods. Around-the-clock ACCRE staffing (24 hours/day, 7 days/week) is also included in the operating costs; during the Run 6 PHENIX operations, the ACCRE staff service was indeed superb. For this enhanced level of data reconstruction in PHENIX, we would need to allocate an additional 56 nodes in installments of 26, 13, and 13 during the next three years. As part of its growth plan, ACCRE will soon purchase these nodes and many more. With the additional $49,800 per year requested in this proposal, we guarantee that the nodes will be available for the analysis of PHENIX data in real time. No other computing resource available to PHENIX will be in a position to do this work. The RHIC Computing Facility (RCF) is already overburdened by the off-line analysis of prior-year data. In fact, to relieve the burden at RCF, major fractions of the PHENIX prior-year data volume are shipped to the PHENIX Computer Center in Japan (CCJ), with the rest of the CCJ facility devoted to simulation production. While the ACCRE staff would ensure that the computer farm operates full time, the actual monitoring of the data reconstruction would be done, as in Run 6, by the group's members. In Run 6 this included Mr. Hugo Valle and Prof. Charles Maguire, assisted by Dr. David Silvermyr of ORNL. Because Dr. Silvermyr will be assuming new responsibilities related to LHC involvement, we do not anticipate that he will be as involved in this work in the future, nor that he will still be needed.

3.2. CMS

We are closely aligned with the work of the High Energy experimental group at Vanderbilt, which joined CMS in 2005. This group has proposed that the ACCRE system become a Tier 2 facility for CMS (see their Letter of Intent in the Appendix).
Thus our High Energy colleagues were quite happy to see our corresponding efforts for PHENIX succeed in Run 6. In the same vein, we will propose that Vanderbilt's ACCRE system become one of the four major computing resources in the U.S. heavy ion program at CMS. In fact, the ACCRE system is the most advanced of any candidate in this respect. We have begun initial discussions about this proposal with other CMS HI members. Lastly, we will bring to CMS our long experience with simulation processing. We understand that the simulation effort in the heavy ion program for CMS is currently limited. One of us (Prof. Maguire) plans to spend at least 30% of his sabbatical year in calendar 2007 at CERN so that the Vanderbilt group can become a major contributor to CMS simulation. Our budget includes salary support for Prof. Maguire for the fall 2007 semester for this purpose, while his spring 2007 sabbatical semester is already funded by Vanderbilt.