* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download POSITIVE DEFINITE RANDOM MATRICES

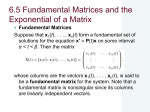

Eigenvalues and eigenvectors wikipedia , lookup

System of linear equations wikipedia , lookup

Determinant wikipedia , lookup

Fundamental theorem of algebra wikipedia , lookup

Jordan normal form wikipedia , lookup

Singular-value decomposition wikipedia , lookup

Quadratic form wikipedia , lookup

Non-negative matrix factorization wikipedia , lookup

Gaussian elimination wikipedia , lookup

Orthogonal matrix wikipedia , lookup

Fisher–Yates shuffle wikipedia , lookup

Matrix calculus wikipedia , lookup

Perron–Frobenius theorem wikipedia , lookup

Comptes rendus de l’Academie bulgare des Sciences, Tome 59, № 4, 2006, p. 353 – 360.

POSITIVE DEFINITE RANDOM MATRICES

Evelina Veleva

Abstract: The paper begins with necessary and sufficient conditions for positive definiteness of a matrix. These are used to obtain a representation, equivalent in distribution, of the elements of a Wishart matrix as algebraic functions of independent random variables. The next section studies when sets of sample correlation coefficients of the multivariate normal (Gaussian) distribution are dependent, independent or conditionally independent. Some joint densities are obtained. The paper ends with an algorithm for generating uniformly distributed positive definite matrices with diagonal elements fixed in advance.

Key words: positive definite matrix, Wishart distribution, multivariate normal (Gaussian) distribution, sample correlation coefficients, generating random matrices

2000 Mathematics Subject Classification: 62H10

1. Necessary and sufficient conditions for positive definiteness of a matrix

Definition. Suppose $A$ is a real symmetric matrix. If, for any non-zero vector $x$, we have that

$$x'Ax > 0,$$

then $A$ is a positive definite matrix (here and subsequently $x'$ is the transpose of $x$).

Let $A = \{a_{ij}\}_{i,j=1}^{n}$ be a real matrix, and let $N = \{1, 2, \dots, n\}$. For any nonempty $\alpha, \beta \subset N$ let $A(\alpha, \beta)$ be the submatrix of $A$ obtained by deleting from $A$ the rows indexed by $\alpha$ and the columns indexed by $\beta$. To simplify notation we use $A(\alpha)$ instead of $A(\alpha, \alpha)$. We will write the complement of $\alpha$ in $N$ as $\alpha^{c}$. For any integers $i$ and $j$, $1 \le i \le j \le n$, we will denote by $A(\alpha)_{ij}$ and $A(\alpha)^{0}_{ij}$ the block matrices

$$A(\alpha)_{ij} = \begin{pmatrix} A(\alpha) & A(\alpha, \{j\}^{c}) \\ A'(\alpha, \{i\}^{c}) & a_{ij} \end{pmatrix}, \qquad A(\alpha)^{0}_{ij} = \begin{pmatrix} A(\alpha) & A(\alpha, \{j\}^{c}) \\ A'(\alpha, \{i\}^{c}) & 0 \end{pmatrix}.$$

Theorem 1. Let $A$ be a real symmetric matrix of size $n$, and let $i, j$ be fixed integers such that $1 \le i < j \le n$. The matrix $A$ is positive definite if and only if the matrices $A(\{i\})$ and $A(\{j\})$ are both positive definite and the element $a_{ij}$ satisfies the inequalities

$$\frac{-|A(\{i,j\})^{0}_{ij}| - \sqrt{|A(\{i\})|\,|A(\{j\})|}}{|A(\{i,j\})|} < a_{ij} < \frac{-|A(\{i,j\})^{0}_{ij}| + \sqrt{|A(\{i\})|\,|A(\{j\})|}}{|A(\{i,j\})|},$$

where $|\cdot|$ denotes the determinant of a matrix.
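The bounds of Theorem 1 can be checked numerically. The following is a minimal sketch, not the author's code: it reads the bounds as $\bigl(-|A(\{i,j\})^{0}_{ij}| \pm \sqrt{|A(\{i\})|\,|A(\{j\})|}\bigr) / |A(\{i,j\})|$, assumes that the determinant of an empty matrix equals 1 (needed for $n = 2$), and uses 0-based indices. The function names `det` and `offdiag_bounds` are hypothetical.

```python
import numpy as np

def det(m):
    # Assumed convention: the determinant of an empty (0 x 0) matrix is 1.
    return 1.0 if m.size == 0 else float(np.linalg.det(m))

def offdiag_bounds(A, i, j):
    """Open interval for a_ij (0-based, i < j) read off Theorem 1.

    The current value of A[i, j] is not used; A({i}) and A({j}) are
    assumed to be positive definite.
    """
    A = np.asarray(A, dtype=float)
    n = A.shape[0]
    rest = np.array([k for k in range(n) if k not in (i, j)], dtype=int)
    keep_i = np.array([k for k in range(n) if k != i], dtype=int)
    keep_j = np.array([k for k in range(n) if k != j], dtype=int)
    core = A[np.ix_(rest, rest)]                 # A({i, j})
    # Bordered matrix A({i, j})^0_ij: column j, row i, zero in the corner.
    bordered = np.zeros((rest.size + 1, rest.size + 1))
    bordered[:-1, :-1] = core
    bordered[:-1, -1] = A[rest, j]
    bordered[-1, :-1] = A[rest, i]
    root = np.sqrt(det(A[np.ix_(keep_i, keep_i)]) * det(A[np.ix_(keep_j, keep_j)]))
    centre = -det(bordered)
    return (centre - root) / det(core), (centre + root) / det(core)

A = np.array([[2.0, 0.0, 0.5],
              [0.0, 3.0, 1.0],
              [0.5, 1.0, 4.0]])
lo, hi = offdiag_bounds(A, 0, 1)
print(lo, hi)  # admissible open interval for a_12
```

Any value of $a_{12}$ strictly inside the printed interval keeps the matrix positive definite, while a value outside it does not.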

Theorem 2. A real symmetric matrix $A = \{a_{ij}\}_{i,j=1}^{n}$ is positive definite if and only if its elements satisfy the following conditions:

$$a_{ii} > 0, \quad i = 1, \dots, n;$$

$$-\sqrt{a_{11} a_{ii}} < a_{1i} < \sqrt{a_{11} a_{ii}}, \quad i = 2, \dots, n;$$

$$\frac{-|A(\{i,\dots,n\})^{0}_{ij}| - \sqrt{|A(\{i,\dots,n\})_{ii}|\,|A(\{i,\dots,n\})_{jj}|}}{|A(\{i,\dots,n\})|} < a_{ij} < \frac{-|A(\{i,\dots,n\})^{0}_{ij}| + \sqrt{|A(\{i,\dots,n\})_{ii}|\,|A(\{i,\dots,n\})_{jj}|}}{|A(\{i,\dots,n\})|},$$

$$i = 2, \dots, n-1, \quad j = i+1, \dots, n.$$

The proofs of Theorems 1 and 2 will appear in [1].
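As an illustration that is not part of the original text, consider $n = 3$. Reading the bounds of Theorem 2 with a square root over the product of the two determinants, the conditions become

$$a_{11} > 0, \quad a_{22} > 0, \quad a_{33} > 0,$$

$$-\sqrt{a_{11} a_{22}} < a_{12} < \sqrt{a_{11} a_{22}}, \qquad -\sqrt{a_{11} a_{33}} < a_{13} < \sqrt{a_{11} a_{33}},$$

$$\frac{a_{12} a_{13} - \sqrt{(a_{11} a_{22} - a_{12}^{2})(a_{11} a_{33} - a_{13}^{2})}}{a_{11}} < a_{23} < \frac{a_{12} a_{13} + \sqrt{(a_{11} a_{22} - a_{12}^{2})(a_{11} a_{33} - a_{13}^{2})}}{a_{11}}.$$

Here $A(\{2,3\}) = (a_{11})$ and $|A(\{2,3\})^{0}_{23}| = -a_{12} a_{13}$, while $A(\{2,3\})_{22}$ and $A(\{2,3\})_{33}$ are the $2 \times 2$ matrices formed from the variables $\{1, 2\}$ and $\{1, 3\}$, respectively.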

2. Wishart random matrices

Let $X_1, \dots, X_n$ be independent, $X_i \sim N_p(0, \Sigma)$, with $n \ge p$, i.e., $X_i$ has a $p$-dimensional multivariate normal distribution with zero mean vector $0$ and covariance matrix $\Sigma$, $\Sigma > 0$, and let $W = \sum_{i=1}^{n} X_i X_i'$. We say that $W$ has a central Wishart distribution with $n$ degrees of freedom and covariance matrix $\Sigma$, and write $W \sim W_p(n, \Sigma)$. It is easy to see that $W$ is a $p \times p$ matrix and that $W > 0$. From this definition, it is apparent that $\mathrm{E}\,W = n\Sigma$ and

$$AWA' \sim W_q(n, A \Sigma A'), \tag{1}$$

where $A$ is $q \times p$ of rank $q$.

It is known that, for an arbitrary positive definite $p \times p$ matrix $\Sigma$, there exists a $p \times p$ matrix $B$ such that $\Sigma = BB'$. Consequently, from (1) it follows that if $W \sim W_p(n, I)$, where $I$ is the identity matrix of size $p$, then $BWB' \sim W_p(n, \Sigma)$. Therefore from now on we consider the case $\Sigma = I$.

Ignatov and Nikolova in [2] consider the distribution $\Psi(n, p)$ with joint density

$$C\,|D|^{(n-p-1)/2}\, I_E, \tag{2}$$

where

- $C$ is a constant;

- $D$ is the matrix

$$D = \begin{pmatrix} 1 & x_{12} & \dots & x_{1p} \\ x_{12} & 1 & \dots & x_{2p} \\ \vdots & \vdots & \ddots & \vdots \\ x_{1p} & x_{2p} & \dots & 1 \end{pmatrix};$$

- $I_E$ is the indicator of the set $E$, containing all points $(x_{ij},\ 1 \le i < j \le p)$ in the real space $R^{p(p-1)/2}$, such that the matrix $D$ is positive definite.

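They have proved the following Proposition:

Proposition. Let $\tau_1, \tau_2, \dots, \tau_p$ be independent and identically $\chi^{2}$-distributed random variables with $n$ degrees of freedom and let the random variables $\nu_{ij}$, $1 \le i < j \le p$, have distribution $\Psi(n, p)$. Let us assume that the set $\{\tau_1, \tau_2, \dots, \tau_p\}$ is independent of the set $\{\nu_{ij},\ 1 \le i < j \le p\}$. Then the matrix $V$ below has the Wishart distribution $W_p(n, I)$:

$$V = \begin{pmatrix} \sqrt{\tau_1} & \dots & 0 \\ \vdots & \ddots & \vdots \\ 0 & \dots & \sqrt{\tau_p} \end{pmatrix} \begin{pmatrix} 1 & \nu_{12} & \dots & \nu_{1p} \\ \nu_{12} & 1 & \dots & \nu_{2p} \\ \vdots & \vdots & \ddots & \vdots \\ \nu_{1p} & \nu_{2p} & \dots & 1 \end{pmatrix} \begin{pmatrix} \sqrt{\tau_1} & \dots & 0 \\ \vdots & \ddots & \vdots \\ 0 & \dots & \sqrt{\tau_p} \end{pmatrix}.$$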

Using the same variable transformation as in the proof of this Proposition, it can be shown that the converse statement is also true. So, the density (2) actually gives the joint density of the entries of the sample correlation matrix in the case $\Sigma = I$.

The next Theorem gives a representation, equivalent in distribution, of the random variables $\nu_{ij}$, $1 \le i < j \le p$, having distribution $\Psi(n, p)$, as functions of independent random variables. This can be used for generating $\Psi(n, p)$ distributed random matrices and consequently Wishart ones (see [3]).

Theorem 3. Let the random variables $\eta_{ij}$, $1 \le i < j \le p$, be mutually independent. Suppose that $\eta_{i\,i+1}, \dots, \eta_{ip}$ are identically distributed $\Psi(n - i + 1, 2)$ for $i = 1, \dots, p-1$. The random variables $\nu_{ij}$, $1 \le i < j \le p$, defined by

$$\nu_{1i} = \eta_{1i}, \quad i = 2, \dots, p,$$

$$\nu_{2i} = \eta_{12}\eta_{1i} + \eta_{2i}\sqrt{(1 - \eta_{12}^{2})(1 - \eta_{1i}^{2})}, \quad i = 3, \dots, p,$$

$$\nu_{ij} = \eta_{ij} \prod_{q=1}^{i-1} \sqrt{(1 - \eta_{qi}^{2})(1 - \eta_{qj}^{2})} + \sum_{t=1}^{i-1} \left( \eta_{ti}\eta_{tj} \prod_{q=1}^{t-1} \sqrt{(1 - \eta_{qi}^{2})(1 - \eta_{qj}^{2})} \right), \quad 3 \le i < j \le p,$$

have distribution $\Psi(n, p)$.

To generate random $\Psi(n, p)$ matrices using Theorem 3, we have to know how to generate random variables with distribution $\Psi(k, 2)$ for $k \ge 2$. For $p = 2$ the density (2) of the distribution $\Psi(n, p)$ has the form

$$C\,(1 - y^{2})^{(n-3)/2}, \quad y \in (-1, 1),$$

and it is known as the Pearson type II distribution. Random variables with this distribution can be easily generated (see [4], p. 481) using the quotient of the difference and the sum of two gamma-distributed random variables.
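A minimal sketch of the gamma-quotient recipe follows. The text does not state the gamma shape parameter; the value $(k-1)/2$ used here is an assumption derived from the density $C(1-y^{2})^{(k-3)/2}$: if $G_1, G_2$ are independent Gamma$(a)$ variables, then $(G_1 - G_2)/(G_1 + G_2)$ has density proportional to $(1-y^{2})^{a-1}$ on $(-1, 1)$. The function name `sample_psi2` is hypothetical.

```python
import numpy as np

def sample_psi2(k, size, rng):
    """Draw `size` values from Psi(k, 2), density ~ (1 - y^2)^((k - 3) / 2)."""
    shape = (k - 1) / 2.0            # assumed shape, derived from the density
    g1 = rng.gamma(shape, size=size)
    g2 = rng.gamma(shape, size=size)
    return (g1 - g2) / (g1 + g2)     # quotient of the difference and the sum

rng = np.random.default_rng(0)
y = sample_psi2(5, 100_000, rng)
# For this density the variance equals 1/k, so it should be close to 0.2 here.
print(y.min(), y.max(), y.var())
```

Under the same assumption, $k = 3$ gives the uniform distribution on $(-1, 1)$.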

3. Marginal densities of the distribution Ψ (n, p)

In recent years the Wishart distribution and distributions derived from the Wishart

one have received a lot of attention because of their use in graphical Gaussian models. The

essence of graphical models in multivariate analysis is to identify independences and

conditional independences between various groups of variables.

Empirical correlation matrices are of great importance for risk management and asset allocation. The study of correlation matrices is one of the cornerstones of Markowitz's theory of optimal portfolios.

Using Theorems 1 and 2 it is possible to get some marginal densities of the

distribution Ψ (n, p ) and to investigate when sets of sample correlation coefficients of the

multivariate normal (Gaussian) distribution are dependent, independent or conditionally

independent.

Let $\nu_{ij}$, $1 \le i < j \le p$, be random variables with distribution $\Psi(n, p)$. Denote by $V$ the set of random variables $V = \{\nu_{ij},\ 1 \le i < j \le p\}$. In [5] we have proved the following Theorem:

Theorem 4. Every $p$ random variables from the set $V$ are dependent.

Let us introduce the notation $f_S$ for the joint density of the random variables from a set $S$; if $S = \emptyset$, i.e. the set $S$ is empty, we define $f_S = 1$.

Theorem 5. Let $r$ and $q$ be arbitrary integers, such that $2 \le r \le p - 1$ and $1 \le q \le p - 2$. Let us denote by $S_1$ and $S_2$ the sets $S_1 = \{\nu_{ij} \mid 1 \le i < j \le r\}$ and $S_2 = \{\nu_{ij} \mid q + 1 \le i < j \le p\}$. Then

$$f_{S_1 \cup S_2} = \frac{f_{S_1} f_{S_2}}{f_{S_1 \cap S_2}}. \tag{3}$$

The proof will appear in [6].

We denote by $f_{S_1 \cup S_2 / S_1 \cap S_2}$, $f_{S_1 / S_1 \cap S_2}$ and $f_{S_2 / S_1 \cap S_2}$ the densities of $S_1 \cup S_2$, $S_1$ and $S_2$, conditioned on the random variables from the set $S_1 \cap S_2$. If we divide the two sides of equality (3) by $f_{S_1 \cap S_2}$ we get the relation

$$f_{S_1 \cup S_2 / S_1 \cap S_2} = f_{S_1 / S_1 \cap S_2}\, f_{S_2 / S_1 \cap S_2}. \tag{4}$$

According to the definition of conditional independence given in [7], equality (4) shows that the random variables from the sets $S_1$ and $S_2$ are independent conditionally on the random variables from the set $S_1 \cap S_2$.

Theorem 6. Let $q_1, \dots, q_k, r_1, \dots, r_k$ be integers, such that $1 = q_1 \le q_2 \le \dots \le q_k \le p$ and $1 \le r_1 \le r_2 \le \dots \le r_k = p$. Let us denote by $S_l$ the set $S_l = \{\nu_{ij} \mid q_l \le i < j \le r_l\}$, $l = 1, \dots, k$. Then

$$f_{S_1 \cup \dots \cup S_k} = \frac{f_{S_1} f_{S_2} \cdots f_{S_k}}{f_{S_1 \cap S_2}\, f_{S_2 \cap S_3} \cdots f_{S_{k-1} \cap S_k}}.$$

Let $S = \{\nu_{i_s j_s},\ s = 1, \dots, k\}$ be an arbitrary subset of the set of random variables $V$. To this subset $S$ we can attach a graph $G(S)$ with nodes $\{1, 2, \dots, p\}$ and $k$ undirected edges $\{\{i_s, j_s\},\ s = 1, \dots, k\}$.

Theorem 7. Let $S_1$ and $S_2$ be two subsets of the set $V$ and let $G_1$ and $G_2$ be their corresponding graphs. Let us denote by $K_1$ the set of nodes that are endpoints of at least one edge of $G_1$. Analogously, for the graph $G_2$ let us denote the corresponding set by $K_2$, and let $K = K_1 \cap K_2$. If the set $K$ contains at most one element then

$$f_{S_1 \cup S_2} = f_{S_1} f_{S_2},$$

i.e. the sets $S_1$ and $S_2$ are independent. If the set $K$ contains at least two elements and for every two elements $r$ and $q$ from $K$, $r < q$, the random variable $\nu_{rq}$ belongs to both $S_1$ and $S_2$, then

$$f_{S_1 \cup S_2} = \frac{f_{S_1} f_{S_2}}{f_{S_1 \cap S_2}},$$

i.e. the sets $S_1$ and $S_2$ are independent conditionally on the set $S_1 \cap S_2$.
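The combinatorial conditions of Theorem 7 are easy to check mechanically. The sketch below is only an illustration: each set of variables is represented by its set of index pairs $(i, j)$, and the function name `theorem7_relation` and the returned labels are hypothetical.

```python
def theorem7_relation(s1, s2):
    """Classify two sets of index pairs (i, j) according to Theorem 7."""
    s1 = {tuple(sorted(e)) for e in s1}
    s2 = {tuple(sorted(e)) for e in s2}
    k1 = {v for e in s1 for v in e}      # nodes touched by edges of G1
    k2 = {v for e in s2 for v in e}      # nodes touched by edges of G2
    k = sorted(k1 & k2)                  # common nodes K
    if len(k) <= 1:
        return "independent"
    # Every pair r < q of common nodes must be an edge of both graphs.
    pairs = {(r, q) for a, r in enumerate(k) for q in k[a + 1:]}
    if pairs <= (s1 & s2):
        return "conditionally independent"
    return "not covered by Theorem 7"

print(theorem7_relation({(1, 2), (2, 3)}, {(3, 4), (4, 5)}))
print(theorem7_relation({(1, 2), (1, 3), (2, 3)}, {(2, 3), (2, 4), (3, 4)}))
print(theorem7_relation({(1, 2), (2, 3)}, {(2, 4), (3, 4)}))
```

In the first call the graphs share only node 3, in the second they share nodes 2 and 3 together with the edge between them, and in the third they share nodes 2 and 3 but $\nu_{23}$ is missing from the second set, so the theorem gives no conclusion.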

Theorem 8. Let $S$ be a subset of the set $V$ and let $G$ be the corresponding graph. Let us denote by $S_+$ the subset of $S$ containing all variables whose edges in the graph $G$ are part of a closed path (circuit). If $S_-$ is the set $S_- = S \setminus S_+$, then the sets $S_+$ and $S_-$ are independent.

The proofs of Theorems 6 - 8 will appear in [8].
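The split of $S$ into $S_+$ and $S_-$ in Theorem 8 can be computed directly from the graph. The sketch below assumes that an edge is part of a circuit exactly when its endpoints stay connected after the edge is removed; the function name `split_by_circuits` is hypothetical and the code is an illustration, not the author's procedure.

```python
from collections import defaultdict, deque

def split_by_circuits(edges):
    """Return (S_plus, S_minus): edges lying on a circuit, and the rest."""
    edges = [tuple(sorted(e)) for e in edges]
    s_plus, s_minus = set(), set()
    for e in edges:
        u, v = e
        # Adjacency lists of the graph with edge e removed.
        adj = defaultdict(set)
        for a, b in edges:
            if (a, b) != e:
                adj[a].add(b)
                adj[b].add(a)
        # Breadth-first search from u; e is on a circuit iff v is reached.
        seen, queue = {u}, deque([u])
        while queue:
            x = queue.popleft()
            for y in adj[x] - seen:
                seen.add(y)
                queue.append(y)
        (s_plus if v in seen else s_minus).add(e)
    return s_plus, s_minus

s_plus, s_minus = split_by_circuits([(1, 2), (2, 3), (1, 3), (3, 4)])
print(sorted(s_plus), sorted(s_minus))
```

For this example the triangle on nodes 1, 2, 3 forms $S_+$ and the pendant edge $\{3, 4\}$ forms $S_-$, so by Theorem 8 the variable $\nu_{34}$ is independent of $\{\nu_{12}, \nu_{13}, \nu_{23}\}$.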

In [9] we have proved the following two Theorems:

Theorem 9. Let $r$ and $q$ be arbitrary integers, such that $1 \le r < q \le p$. The random variables from the set $S = V \setminus \{\nu_{rq}\}$ have joint density function of the form

$$C\, \frac{\Gamma\!\left(\frac{n-p+1}{2}\right) \Gamma\!\left(\frac{1}{2}\right)}{\Gamma\!\left(\frac{n-p+2}{2}\right)}\, \frac{\left(|D_{r;r}|\,|D_{q;q}|\right)^{(n-p)/2}}{|D_{r,q;r,q}|^{(n-p+1)/2}}\, I_E,$$

where

- $I_E$ is the indicator of the set $E$, containing all points in $R^{p(p-1)/2-1}$, such that the matrices $D_{r;r}$ and $D_{q;q}$ are both positive definite;

- $\Gamma(\cdot)$ is the well-known Gamma function.

Theorem 10. Let $r$ be an arbitrary integer, such that $1 \le r \le p$. The random variables from the set $S = \{\nu_{ij} \mid 1 \le i < j \le p,\ i \ne r,\ j \ne r\}$ have joint density function of the form

$$f_S = \frac{\left[\Gamma\!\left(\frac{n}{2}\right)\right]^{p-2}}{\Gamma\!\left(\frac{n-1}{2}\right) \Gamma\!\left(\frac{n-2}{2}\right) \cdots \Gamma\!\left(\frac{n-p+2}{2}\right) \left[\Gamma\!\left(\frac{1}{2}\right)\right]^{(p-1)(p-2)/2}}\, |D_{r;r}|^{(n-p)/2}$$

for all points in $R^{(p-1)(p-2)/2}$ for which the matrix $D_{r;r}$ is positive definite.

4. Uniformly distributed positive definite matrices

The positive definiteness of a matrix is a necessary condition for applying many numerical algorithms, and the diagonal elements of the matrix often have a specified meaning. The correctness of such a numerical algorithm can be verified if we are able to choose a positive definite matrix at random with uniform distribution. The space of all positive definite matrices is, however, a cone of infinite volume, and consequently a uniform distribution cannot be defined over the whole cone. This forces us to introduce additional restrictions on the matrices which, together with positive definiteness, confine the choice to a set of finite volume. An algorithm for generating uniformly distributed positive definite matrices with fixed diagonal elements is given below. A user who does not want to fix concrete diagonal elements of the matrix in advance has to assign bounds for each diagonal element instead; for instance, all diagonal elements can be chosen to lie in the interval (0, 100). A uniform random number within the chosen bounds is then generated for every diagonal element. The algorithm described below thus allows a random choice among all positive definite matrices whose diagonal elements are either fixed in advance or randomly generated within the chosen bounds.

From now on we assume that $a_{11}, \dots, a_{nn}$ are the diagonal elements of the matrix, chosen in accordance with the user's preferences. They have to be positive, since otherwise no positive definite matrix with such diagonal elements exists (see Theorem 1).

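In the rest of this section the order of the matrix is denoted by both $n$ and $p$, i.e. $n = p$.

Theorem 11. Let the random variables $\eta_{ij}$, $1 \le i < j \le p$, be mutually independent. Suppose that $\eta_{i\,i+1}, \dots, \eta_{ip}$ are identically distributed $\Psi(n - i + 2, 2)$ for $i = 1, \dots, p-1$. Consider the random variables $\nu_{ij}$, $1 \le i < j \le p$, defined by

$$\nu_{1i} = \eta_{1i} \sqrt{a_{11} a_{ii}}, \quad i = 2, \dots, p,$$

$$\nu_{ij} = \sqrt{a_{ii} a_{jj}} \left[ \sum_{r=1}^{i-1} \left( \eta_{ri}\eta_{rj} \prod_{q=1}^{r-1} \sqrt{(1 - \eta_{qi}^{2})(1 - \eta_{qj}^{2})} \right) + \eta_{ij} \prod_{q=1}^{i-1} \sqrt{(1 - \eta_{qi}^{2})(1 - \eta_{qj}^{2})} \right],$$

$$i = 2, \dots, p-1, \quad j = i+1, \dots, p.$$

The joint density function of the random variables $\nu_{ij}$, $1 \le i < j \le p$, is of the form

$$f_{\nu_{ij},\,1 \le i < j \le p}\,(x_{ij},\ 1 \le i < j \le p) = C\, I_E,$$

where

- $C$ is the constant

$$C = \frac{\left[\Gamma\!\left(\frac{p+1}{2}\right)\right]^{p-1}}{\Gamma\!\left(\frac{p}{2}\right) \Gamma\!\left(\frac{p-1}{2}\right) \cdots \Gamma\!\left(\frac{3}{2}\right) \left[\Gamma\!\left(\frac{1}{2}\right)\right]^{p(p-1)/2} (a_{11} a_{22} \cdots a_{pp})^{(p-1)/2}};$$

- $I_E$ is the indicator of the set $E$, consisting of all points $(x_{ij},\ 1 \le i < j \le p)$ in $R^{p(p-1)/2}$ for which the matrix

$$A = \begin{pmatrix} a_{11} & x_{12} & \dots & x_{1n} \\ x_{12} & a_{22} & \dots & x_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ x_{1n} & x_{2n} & \dots & a_{nn} \end{pmatrix} \tag{5}$$

is positive definite.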

Theorem 11 gives the following algorithm for generating uniformly distributed positive definite matrices:

1) Generate $p(p-1)/2$ random numbers $y_{ij}$, $1 \le i < j \le p$, so that $y_{ij}$ comes from the distribution $\Psi(n - i + 2, 2)$.

2) In order to reduce calculations, compute the auxiliary quantities $z_{ij}$, $1 \le i \le j \le p$, such that

$$z_{ij} = y_{ij} \sqrt{(1 - y_{1j}^{2}) \cdots (1 - y_{i-1\,j}^{2})} \quad \text{with } y_{ii} = 1.$$

3) Calculate the desired matrix (5) with

$$x_{ij} = \sqrt{a_{ii} a_{jj}}\,\left( z_{1i} z_{1j} + z_{2i} z_{2j} + \dots + z_{ii} z_{ij} \right).$$

The obtained formulas show that it is possible to create a program which generates, without any interaction with the user, a matrix $U$ with units on the main diagonal. This is done by steps 1)-3), substituting $a_{11} = \dots = a_{nn} = 1$. Then the user, according to their preferences, forms a diagonal matrix $D = \mathrm{diag}(\sqrt{a_{11}}, \dots, \sqrt{a_{nn}})$ and the desired matrix $A$ is $A = DUD$.

The details will appear in [10].
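Steps 1)-3) can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the program from [10]: the order of the matrix is $p$ (so $n = p$ in $\Psi(n - i + 2, 2)$), step 2 is read with a square root over the product, $\Psi(k, 2)$ is sampled by the gamma quotient with the assumed shape $(k-1)/2$, and the scaling uses $\sqrt{a_{ii} a_{jj}}$. The names `sample_psi2` and `uniform_pd_matrix` are hypothetical.

```python
import numpy as np

def sample_psi2(k, rng):
    # Psi(k, 2): density ~ (1 - y^2)^((k - 3) / 2) on (-1, 1).
    # Gamma quotient; the shape (k - 1) / 2 is an assumption derived from it.
    g1, g2 = rng.gamma((k - 1) / 2.0, size=2)
    return (g1 - g2) / (g1 + g2)

def uniform_pd_matrix(diag, rng):
    """Random positive definite matrix with the prescribed positive diagonal."""
    a = np.asarray(diag, dtype=float)
    p = a.size
    # Step 1: y_ij ~ Psi(p - i + 2, 2) for 1 <= i < j <= p, and y_ii = 1.
    y = np.eye(p)
    for i in range(1, p):
        for j in range(i + 1, p + 1):
            y[i - 1, j - 1] = sample_psi2(p - i + 2, rng)
    # Step 2: z_ij = y_ij * sqrt((1 - y_1j^2) ... (1 - y_{i-1,j}^2)), i <= j.
    z = np.zeros((p, p))
    for j in range(p):
        remaining = 1.0
        for i in range(j + 1):
            z[i, j] = y[i, j] * np.sqrt(remaining)
            remaining *= 1.0 - y[i, j] ** 2
    # Step 3 with unit diagonal: u_ij = z_1i z_1j + ... + z_ii z_ij.
    u = z.T @ z                      # z is upper triangular
    d = np.sqrt(a)                   # A = D U D with D = diag(sqrt(a_ii))
    return d[:, None] * u * d[None, :]

rng = np.random.default_rng(1)
A = uniform_pd_matrix([1.0, 4.0, 9.0, 2.0], rng)
print(np.round(A, 3))
print(np.linalg.eigvalsh(A))  # all eigenvalues should be positive
```

Because $z$ is upper triangular with positive diagonal, $U = z'z$ is a Cholesky-type factorization, so positive definiteness of the output holds by construction rather than by rejection.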

References:

[1] VELEVA E. Linear Algebra and Appl. (submitted).

[2] IGNATOV T. G., A. D. NIKOLOVA. Annuaire Univ. Sofia, Fac. of Econ. & Buss. Admin., 2004, v. 3, 79–94.

[3] VELEVA E. Annuaire Univ. Sofia, Fac. of Econ. & Buss. Admin., 2007, v. 6, 57–66.

[4] DEVROYE L. Non-Uniform Random Variate Generation, New York, Springer-Verlag, 1986, 843 p.

[5] VELEVA E., T. IGNATOV. Advanced Studies in Contemporary Mathematics, 12 (2), 2006, 247–254.

[6] VELEVA E. Math. and Education in Math., 35 (2006), 315–320.

[7] DAWID A. P. J. Roy. Stat. Soc., Ser. B, 1979, 41 (1), 1–31.

[8] VELEVA E. Pliska Stud. Math. Bulgar., 18 (2007), 379–386.

[9] VELEVA E. Annuaire Univ. Sofia, Fac. of Econ. & Buss. Admin., 2006, v. 5, 353–369.

[10] VELEVA E. Math. and Education in Math., 35 (2006), 321–326.

Evelina Ilieva Veleva

Department of Numerical Methods and Statistics,

Rouse University, Rouse, 7017, Studentska 8, Bulgaria

E-mail address: [email protected]
