* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download [5pt] Fixed-point Convergence

Survey

Document related concepts

Transcript

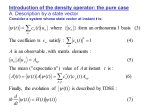

Convergence of Fixed-Point Iterations

Instructor: Wotao Yin (UCLA Math)

July 2016


Why study fixed-point iterations?

• Abstract many existing algorithms in optimization, numerical linear algebra, and differential equations

• Often require only minimal conditions

• Simplify complicated convergence proofs


Notation

• space: Hilbert space $H$ equipped with inner product $\langle \cdot, \cdot \rangle$ and norm $\|\cdot\|$

• Fine to think in $\mathbb{R}^2$ (though not always)

• An operator $T : H \to H$ (or $C \to C$, where $C$ is a closed subset of $H$)

• our focus:

  • when $\mathrm{Fix}\,T := \{x \in H : x = T(x)\}$ is nonempty

  • the convergence of $x^{k+1} \leftarrow T(x^k)$

• simplification: $T(x)$ is often written as $Tx$


Examples

unconstrained $C^1$ minimization:

minimize $f(x)$

• $x^*$ is a stationary point if $\nabla f(x^*) = 0$

• gradient descent operator: for $\gamma > 0$,

$T := I - \gamma \nabla f$

• the gradient descent iteration

$x^{k+1} \leftarrow T x^k$

• lemma: $x^*$ is a stationary point if, and only if, $x^* \in \mathrm{Fix}\,T$
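As a small illustration of the lemma, the sketch below runs the fixed-point iteration for the gradient-descent operator. The objective $f(x) = \frac{1}{2}(x - 3)^2$, the step size, and the names `grad_f` and `T` are assumptions chosen for illustration; they are not from the slides.

```python
def grad_f(x):
    # Gradient of the illustrative objective f(x) = 0.5 * (x - 3)**2.
    return x - 3.0


def T(x, gamma=0.5):
    # Gradient-descent operator T = I - gamma * grad f.
    return x - gamma * grad_f(x)


x = 0.0
for _ in range(60):
    x = T(x)  # fixed-point iteration x^{k+1} <- T x^k

# Fixed-point residual |T x - x| and gradient size |grad f(x)| at the final iterate.
fixed_point_residual = abs(T(x) - x)
gradient_size = abs(grad_f(x))
print(x, fixed_point_residual, gradient_size)
```

The two printed residuals vanish together, since $Tx - x = -\gamma \nabla f(x)$.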


Examples

constrained $C^1$ minimization:

minimize $f(x)$ subject to $x \in C$

• assume: $f$ is proper closed convex, $C$ is nonempty closed convex

• projected-gradient operator: for $\gamma > 0$,

$T := \mathrm{proj}_C \circ (I - \gamma \nabla f)$

• $x^{k+1} \leftarrow T x^k$ is the projected-gradient iteration

$x^{k+1} \leftarrow \mathrm{proj}_C\big(x^k - \gamma \nabla f(x^k)\big)$

• $x^* \in C$ is optimal if

$\langle \nabla f(x^*), x - x^* \rangle \ge 0, \quad \forall x \in C$

• lemma: $x^*$ is optimal if, and only if, $x^* \in \mathrm{Fix}\,T$
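A minimal sketch of the projected-gradient iteration, assuming a hypothetical one-dimensional problem: $f(x) = \frac{1}{2}(x - 3)^2$ and $C = [0, 1]$. The names `proj_C`, `grad_f`, and `T` are illustrative. The final iterate is checked against the optimality condition on a grid of points in $C$.

```python
def grad_f(x):
    # Gradient of the illustrative objective f(x) = 0.5 * (x - 3)**2.
    return x - 3.0


def proj_C(x, lo=0.0, hi=1.0):
    # Projection onto the interval C = [lo, hi].
    return min(max(x, lo), hi)


def T(x, gamma=0.5):
    # Projected-gradient operator T = proj_C o (I - gamma * grad f).
    return proj_C(x - gamma * grad_f(x))


x = 0.0
for _ in range(50):
    x = T(x)

# Optimality condition <grad f(x*), y - x*> >= 0, tested on a grid of y in C.
grid = [i / 10 for i in range(11)]
vi_ok = all(grad_f(x) * (y - x) >= 0 for y in grid)
print(x, T(x) == x, vi_ok)
```

Here the unconstrained minimizer 3 lies outside $C$, so the iteration settles on the boundary point of $C$ closest to it.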


Lipschitz operator

• definition: an operator $T$ is $L$-Lipschitz, $L \in [0, \infty)$, if

$\|Tx - Ty\| \le L \|x - y\|, \quad \forall x, y \in H$

• definition: an operator $T$ is $L$-quasi-Lipschitz, $L \in [0, \infty)$, if for any $x^* \in \mathrm{Fix}\,T$ (assumed to exist),

$\|Tx - x^*\| \le L \|x - x^*\|, \quad \forall x \in H$


Contractive operator

• definition: T is contractive if it is L-Lipschitz for L ∈ [0, 1)

• definition: T is quasi-contractive if it is L-quasi-Lipschitz for L ∈ [0, 1)


Banach fixed-point theorem

• Theorem: If $T$ is contractive, then

  • $T$ admits a unique fixed point $x^*$ (existence and uniqueness)

  • $x^k \to x^*$ (convergence)

  • $\|x^k - x^*\| \le L^k \|x^0 - x^*\|$ (speed)

• Holds in a Banach space

• Also known as the contraction mapping theorem; it is the key tool in the proof of the Picard-Lindelöf theorem
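The speed estimate can be checked numerically. The sketch below assumes the hypothetical map $T(x) = \frac{1}{2}\cos x$ on $\mathbb{R}$, which is $L$-Lipschitz with $L = \frac{1}{2}$ because $|T'(x)| \le \frac{1}{2}$. The reference fixed point is itself computed by iteration, so a small floating-point tolerance is used.

```python
import math

L = 0.5  # Lipschitz constant of the illustrative map below


def T(x):
    # T(x) = 0.5 * cos(x) satisfies |T'(x)| <= 0.5, so it is contractive.
    return 0.5 * math.cos(x)


# Reference fixed point, obtained by running the iteration for a long time.
x_star = 0.0
for _ in range(200):
    x_star = T(x_star)

# Check |x^k - x*| <= L^k |x^0 - x*| along the iteration.
x0 = 2.0
x = x0
bound_holds = True
for k in range(1, 31):
    x = T(x)
    if abs(x - x_star) > L**k * abs(x0 - x_star) + 1e-12:
        bound_holds = False

print(x_star, bound_holds)
```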


Examples

minimize a Lipschitz-differentiable strongly-convex function:

minimize f (x)

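• definition: a convex $f$ is $L$-Lipschitz-differentiable if

$\|\nabla f(x) - \nabla f(y)\|^2 \le L \langle x - y, \nabla f(x) - \nabla f(y) \rangle, \quad \forall x, y \in \mathrm{dom} f$

• definition: a convex $f$ is $\mu$-strongly convex if, elementwise (that is, for every choice of subgradients in $\partial f(x)$ and $\partial f(y)$),

$\langle \partial f(x) - \partial f(y), x - y \rangle \ge \mu \|x - y\|^2, \quad \forall x, y \in \mathrm{dom} f$

• lemma: Gradient descent operator $T := I - \gamma \nabla f$ is $C$-contractive for all $\gamma$ in a certain interval.

exercise: find the interval of $\gamma$ and the formula of $C$ in $\gamma$, $L$, $\mu$

• Also true for a projected-gradient operator if the constraint set $C$ is closed convex and $C \cap \mathrm{dom} f \ne \emptyset$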
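A numerical probe, not a solution to the exercise: the sketch below estimates the Lipschitz constant of $T = I - \gamma \nabla f$ from random pairs of points for a single hypothetical quadratic, $f(x) = \frac{1}{2}(\mu x_1^2 + L x_2^2)$ with $\mu = 1$ and $L = 4$. The function `empirical_lipschitz` and the sampled step sizes are assumptions for illustration, and a sampled estimate is only a lower bound on the true constant.

```python
import random

MU, L = 1.0, 4.0  # illustrative strong-convexity and smoothness constants


def T(x, gamma):
    # Gradient-descent operator for f(x) = 0.5 * (MU * x1**2 + L * x2**2).
    return (x[0] - gamma * MU * x[0], x[1] - gamma * L * x[1])


def dist(a, b):
    return ((a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2) ** 0.5


def empirical_lipschitz(gamma, trials=2000, seed=0):
    # Largest observed ratio ||Tx - Ty|| / ||x - y|| over random pairs.
    rng = random.Random(seed)
    worst = 0.0
    for _ in range(trials):
        x = (rng.uniform(-1, 1), rng.uniform(-1, 1))
        y = (rng.uniform(-1, 1), rng.uniform(-1, 1))
        d = dist(x, y)
        if d > 1e-12:
            worst = max(worst, dist(T(x, gamma), T(y, gamma)) / d)
    return worst


for gamma in (0.1, 0.3, 0.6):
    print(gamma, empirical_lipschitz(gamma))
```

For this example the estimate stays below 1 for the smaller step sizes and exceeds 1 for the largest one, which hints that the admissible interval is bounded.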


Nonexpansive operator

• definition: an operator is nonexpansive if it is 1-Lipschitz, i.e.,

$\|Tx - Ty\| \le \|x - y\|, \quad \forall x, y \in H$

• properties:

  • $T$ may not have a fixed point $x^*$

  • if $x^*$ exists, the sequence $x^{k+1} = T x^k$ is bounded

  • the sequence may diverge

• examples: rotation, alt. reflection

[Figure: rotation by angle $\theta$, showing the iterates $x$, $Tx$, $T^2 x$, $T^3 x$]
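The rotation example can be sketched as follows, assuming a rotation of $\mathbb{R}^2$ by $\theta = \pi/3$ (the angle and starting point are arbitrary choices). The origin is the only fixed point; the iterates stay bounded but the fixed-point residual never shrinks.

```python
import math

THETA = math.pi / 3  # illustrative rotation angle


def T(x):
    # Rotation by THETA: an isometry, hence nonexpansive.
    c, s = math.cos(THETA), math.sin(THETA)
    return (c * x[0] - s * x[1], s * x[0] + c * x[1])


def norm(x):
    return math.hypot(x[0], x[1])


x = (1.0, 0.0)
norms, residuals = [], []
for _ in range(12):
    tx = T(x)
    residuals.append(norm((x[0] - tx[0], x[1] - tx[1])))  # ||x - T x||
    x = tx
    norms.append(norm(x))  # distance to the fixed point at the origin

print(norms[-1], residuals[-1])
```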


Between L = 1 and L < 1

• L < 1: linear (or geometric) convergence

• L = 1: bounded, may diverge

• A vast set of algorithms (often with sublinear convergence) cannot be characterized by $L$:

  • Alternating projection (von Neumann)

  • Gradient descent without strong convexity

  • Proximal-point algorithm without strong convexity

  • Operator splitting algorithms


Averaged operator

• fixed-point residual operator: $R := I - T$

• $R x^* = 0 \Leftrightarrow x^* = T x^*$

• averaged operator: for some $\eta > 0$,

$\|Tx - Ty\|^2 \le \|x - y\|^2 - \eta \|Rx - Ry\|^2, \quad \forall x, y \in H$

• quasi-averaged operator: for some $\eta > 0$ and any $x^* \in \mathrm{Fix}\,T$,

$\|Tx - x^*\|^2 \le \|x - x^*\|^2 - \eta \|Rx\|^2, \quad \forall x \in H$

• interpretation: each step improves by the amount of fixed-point violation

• speed: may become slower as $x^k$ gets closer to the minimizer


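• convention: use $\alpha$ instead of $\eta$, following

$\eta := \frac{1 - \alpha}{\alpha}$

• $\eta > 0 \Leftrightarrow \alpha \in (0, 1)$

• $\alpha$-averaged operator: for some $\alpha \in (0, 1)$,

$\|Tx - Ty\|^2 \le \|x - y\|^2 - \frac{1 - \alpha}{\alpha} \|Rx - Ry\|^2, \quad \forall x, y \in H$

• special cases:

  • $\alpha = \frac{1}{2}$: $T$ is called firmly nonexpansive

  • $\alpha = 1$ (violating $\alpha \in (0, 1)$): $T$ is called nonexpansive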
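To make the case $\alpha = \frac{1}{2}$ concrete, the sketch below tests the firm-nonexpansiveness inequality $\|Tx - Ty\|^2 \le \|x - y\|^2 - \|Rx - Ry\|^2$ on random pairs. It assumes $T$ is the projection onto the unit ball of $\mathbb{R}^2$, using the standard fact that projections onto closed convex sets are firmly nonexpansive; the helper names are illustrative.

```python
import random


def proj_ball(x):
    # Projection onto the closed unit ball of R^2.
    n = (x[0] ** 2 + x[1] ** 2) ** 0.5
    return x if n <= 1.0 else (x[0] / n, x[1] / n)


def sq(a, b):
    # Squared Euclidean distance ||a - b||^2.
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def firm_gap(x, y):
    # ||x - y||^2 - ||Rx - Ry||^2 - ||Tx - Ty||^2, nonnegative when T is
    # firmly nonexpansive (alpha = 1/2, i.e. eta = 1).
    tx, ty = proj_ball(x), proj_ball(y)
    rx = (x[0] - tx[0], x[1] - tx[1])
    ry = (y[0] - ty[0], y[1] - ty[1])
    return sq(x, y) - sq(rx, ry) - sq(tx, ty)


rng = random.Random(0)
gaps = []
for _ in range(5000):
    x = (rng.uniform(-3, 3), rng.uniform(-3, 3))
    y = (rng.uniform(-3, 3), rng.uniform(-3, 3))
    gaps.append(firm_gap(x, y))

min_gap = min(gaps)
print(min_gap)
```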


Why called “averaged”?

Lemma

$T$ is $\alpha$-averaged if, and only if, there exists a nonexpansive map $T'$ so that

$T = (1 - \alpha) I + \alpha T'$,

or equivalently,

$T' := \left(1 - \frac{1}{\alpha}\right) I + \frac{1}{\alpha} T$

is nonexpansive.

Proof. From $T' := \left(1 - \frac{1}{\alpha}\right) I + \frac{1}{\alpha} T = I - \frac{1}{\alpha} R$, basic algebraic manipulation gives us: for any $x$ and $y$,

$\alpha \left( \|x - y\|^2 - \|T'x - T'y\|^2 \right) = \|x - y\|^2 - \|Tx - Ty\|^2 - \frac{1 - \alpha}{\alpha} \|Rx - Ry\|^2$.

Therefore, $T'$ is nonexpansive $\Leftrightarrow$ $T$ is $\alpha$-averaged.
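The identity in the proof is purely algebraic, so it holds for any map $T$, nonexpansive or not. The sketch below checks it numerically for an arbitrary hypothetical $T$ on $\mathbb{R}^2$ with $\alpha = 0.3$; both choices are assumptions made only for illustration.

```python
import math
import random

ALPHA = 0.3  # illustrative averagedness parameter


def T(x):
    # An arbitrary map on R^2; the identity does not need nonexpansiveness.
    return (0.5 * x[0] - 0.2 * x[1] + 1.0, math.sin(x[0]))


def T_prime(x):
    # T' = (1 - 1/alpha) I + (1/alpha) T.
    tx = T(x)
    a = 1.0 - 1.0 / ALPHA
    return (a * x[0] + tx[0] / ALPHA, a * x[1] + tx[1] / ALPHA)


def R(x):
    # Fixed-point residual operator R = I - T.
    tx = T(x)
    return (x[0] - tx[0], x[1] - tx[1])


def sq(a, b):
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def identity_gap(x, y):
    lhs = ALPHA * (sq(x, y) - sq(T_prime(x), T_prime(y)))
    rhs = sq(x, y) - sq(T(x), T(y)) - (1 - ALPHA) / ALPHA * sq(R(x), R(y))
    return abs(lhs - rhs)


rng = random.Random(1)
max_gap = 0.0
for _ in range(1000):
    x = (rng.uniform(-2, 2), rng.uniform(-2, 2))
    y = (rng.uniform(-2, 2), rng.uniform(-2, 2))
    max_gap = max(max_gap, identity_gap(x, y))

print(max_gap)
```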


Properties

• assume:

  • $T$ is $\alpha$-averaged

  • $T$ has a fixed point $x^*$

• iteration: $x^{k+1} \leftarrow T x^k$

• claims about the iteration: step-by-step,

(a) $\|x^{k+1} - x^*\|^2 \le \|x^k - x^*\|^2 - \frac{1 - \alpha}{\alpha} \|R x^k - R x^*\|^2$, where $R x^* = 0$

(b) by telescopic sum on (a),

$\|x^{k+1} - x^*\|^2 \le \|x^0 - x^*\|^2 - \frac{1 - \alpha}{\alpha} \sum_{j=0}^{k} \|R x^j\|^2$.

(c) $\{\|R x^k\|^2\}$ is summable and $\|R x^k\| \to 0$
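Claims (a) to (c) can be observed on a small example. The sketch below assumes $T = \frac{1}{2} I + \frac{1}{2} J$, where $J$ is the rotation of $\mathbb{R}^2$ by 90 degrees, so $T$ is $\frac{1}{2}$-averaged with fixed point $x^* = 0$. The starting point and tolerances are arbitrary choices.

```python
ALPHA = 0.5
C = (1 - ALPHA) / ALPHA  # the constant (1 - alpha) / alpha


def T(x):
    # T = 0.5 * I + 0.5 * J with J the rotation by 90 degrees (nonexpansive).
    jx = (-x[1], x[0])
    return (0.5 * (x[0] + jx[0]), 0.5 * (x[1] + jx[1]))


def sq_norm(x):
    return x[0] ** 2 + x[1] ** 2


x0 = (3.0, 4.0)
x = x0
step_ok = True
sq_residuals = []
for _ in range(40):
    tx = T(x)
    res = sq_norm((x[0] - tx[0], x[1] - tx[1]))  # ||R x^k||^2
    # Claim (a) with x* = 0, up to floating-point tolerance.
    if sq_norm(tx) > sq_norm(x) - C * res + 1e-12:
        step_ok = False
    sq_residuals.append(res)
    x = tx

# Claim (b): the weighted sum of squared residuals is bounded by ||x^0 - x*||^2.
total = C * sum(sq_residuals)
print(step_ok, total, sq_residuals[-1])
```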


• claims (cont.):

(d) by (a), $\{\|x^k - x^*\|^2\}$ is monotonically decreasing until $x^k \in \mathrm{Fix}\,T$

(e) by (d), $\lim_k \|x^k - x^*\|^2$ exists (but is not necessarily zero)

(f) by (d), $\{x^k\}$ is bounded and thus has a weak cluster point $\bar{x}$

(note: every bounded sequence in $H$ has a weakly convergent subsequence)

Next: we will show that $\bar{x} \in \mathrm{Fix}\,T$ and then $x^k \rightharpoonup \bar{x}$.


• claims (cont.):

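(h) demiclosedness principle: Let $T$ be nonexpansive and $R := I - T$. If $x^j \rightharpoonup x^0$ and $\lim \|R x^j\| = 0$, then $R x^0 = 0$ (here $x^0$ denotes the weak limit).

Proof. Goal is to expand $\|R x^0\|^2$ into convergent terms as $j \to \infty$.

$\|R x^0\|^2 = \|R x^j\|^2 + 2 \langle R x^j, T x^j - T x^0 \rangle + \|T x^j - T x^0\|^2 - \|x^j - x^0\|^2 - 2 \langle R x^0, x^j - x^0 \rangle$

$\le \|R x^j\|^2 + 2 \langle R x^j, T x^j - T x^0 \rangle - 2 \langle R x^0, x^j - x^0 \rangle$.

The inequality uses nonexpansiveness, $\|T x^j - T x^0\|^2 \le \|x^j - x^0\|^2$. Each term on the RHS $\to 0$ as $j \to \infty$. Therefore, $\|R x^0\|^2 = 0$.

(i) by applying (h) to any converging subsequence, each cluster point $\bar{x}$ of $\{x^k\}$ is a fixed point.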


• claims (cont.):

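(j) By (e) and (i), $\bar{x}$ is the unique cluster point.

Proof. Let $\bar{y}$ also be a cluster point.

• $\bar{y} \in \mathrm{Fix}\,T$, just like $\bar{x}$.

• by (e), both $\lim_k \|x^k - \bar{x}\|^2$ and $\lim_k \|x^k - \bar{y}\|^2$ exist.

• algebraically,

$2 \langle x^k, \bar{x} - \bar{y} \rangle = \|x^k - \bar{y}\|^2 - \|x^k - \bar{x}\|^2 + \|\bar{x}\|^2 - \|\bar{y}\|^2$,

whose RHS converges to a constant, say $c$.

• passing to the limits along the two subsequences, one converging weakly to $\bar{x}$ and one to $\bar{y}$,

$2 \langle \bar{x}, \bar{x} - \bar{y} \rangle = 2 \langle \bar{y}, \bar{x} - \bar{y} \rangle = c$.

• hence, subtracting the two expressions, $\|\bar{x} - \bar{y}\|^2 = 0$.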


Theorem (Krasnosel’skiĭ)

Let T be an averaged operator with a fixed point. Then, the iteration

$x^{k+1} \leftarrow T x^k$

converges weakly to a fixed point of T .


Mann’s version

• Let $T$ be a nonexpansive operator with a fixed point. Then, the iteration

$x^{k+1} \leftarrow (1 - \lambda_k) x^k + \lambda_k T x^k$

(known as the KM iteration) converges weakly to a fixed point of $T$ as long as

$\lambda_k > 0, \quad \sum_k \lambda_k (1 - \lambda_k) = \infty$.

• The $\lambda_k$ condition is ensured if

$\lambda_k \in [\epsilon, 1 - \epsilon]$ (bounded away from 0 and 1)
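A minimal sketch of the KM iteration, assuming $T$ is the rotation of $\mathbb{R}^2$ by 90 degrees (nonexpansive, with the origin as its only fixed point) and a hypothetical schedule $\lambda_k$ alternating between 0.3 and 0.7. The plain iteration $x^{k+1} \leftarrow T x^k$ is run alongside for contrast.

```python
import math


def T(x):
    # Rotation by 90 degrees: nonexpansive, Fix T = {0}.
    return (-x[1], x[0])


def km_step(x, lam):
    # One KM step: (1 - lam) * x + lam * T x.
    tx = T(x)
    return ((1 - lam) * x[0] + lam * tx[0], (1 - lam) * x[1] + lam * tx[1])


x_plain = (1.0, 0.0)
x_km = (1.0, 0.0)
for k in range(60):
    lam = 0.3 if k % 2 == 0 else 0.7  # stays inside [0.3, 0.7]
    x_plain = T(x_plain)
    x_km = km_step(x_km, lam)

plain_norm = math.hypot(x_plain[0], x_plain[1])  # distance to the fixed point
km_norm = math.hypot(x_km[0], x_km[1])
print(plain_norm, km_norm)
```

The plain iterates keep circling at distance 1 from the fixed point, while the KM iterates approach it.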


Remarks

• Can be relaxed to quasi-averagedness

• Summable errors can be added to the iteration

• In finite dimension, demiclosedness principle is not needed

• This fundamental result is largely ignored, yet often reproved in $\mathbb{R}^n$

• Browder-Göhde-Kirk fixed-point theorem: If $T$ has no fixed point and $\lambda_k$ is bounded away from 0 and 1, the sequence $\{x^k\}$ is unbounded.

• Speed: $\|R x^k\|^2 = o(1/k)$; no rate for $x^k \rightharpoonup x^*$

• Many more applications than Banach's fixed-point theorem


Special cases

proximal-point algorithm

• problem:

minimize f (x)

• proximal operator: let $\lambda > 0$,

$T := \mathrm{prox}_{\lambda f}$

• Since $T$ is firmly nonexpansive,

$x^{k+1} \leftarrow \mathrm{prox}_{\lambda f}(x^k)$

converges weakly to a minimizer of $f$, if one exists
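As an illustration, assume $f(x) = |x|$ on $\mathbb{R}$, whose proximal operator is the soft-thresholding map. The sketch below runs the proximal-point iteration with the hypothetical choice $\lambda = 0.5$; the iterates reach the minimizer $0$ after finitely many steps.

```python
def prox_abs(x, lam):
    # Proximal operator of lam * |x| (soft-thresholding).
    if x > lam:
        return x - lam
    if x < -lam:
        return x + lam
    return 0.0


LAM = 0.5
x = 2.3
iterates = [x]
for _ in range(10):
    x = prox_abs(x, LAM)  # x^{k+1} <- prox_{lam f}(x^k)
    iterates.append(x)

print(iterates)
```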


Special cases

gradient descent:

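• Define the gradient-descent operator: for $\gamma > 0$,

$T := I - \gamma \nabla f$

• iteration:

$x^{k+1} \leftarrow T x^k = x^k - \gamma \nabla f(x^k)$

• Baillon-Haddad theorem: if $f$ is convex and $\nabla f$ is $L$-Lipschitz, then

$\|\nabla f(x) - \nabla f(y)\|^2 \le L \langle x - y, \nabla f(x) - \nabla f(y) \rangle$

• If $f$ has a minimizer $x^*$, then taking $y = x^*$ (so $\nabla f(x^*) = 0$) and multiplying by $2\gamma / L$ gives

$\frac{2}{L\gamma} \|\gamma \nabla f(x^k)\|^2 \le 2 \langle x^k - x^*, \gamma \nabla f(x^k) \rangle$

• Directly expand $\|x^{k+1} - x^*\|^2$:

$\|x^{k+1} - x^*\|^2 = \|x^k - \gamma \nabla f(x^k) - x^*\|^2$

$= \|x^k - x^*\|^2 - 2 \langle x^k - x^*, \gamma \nabla f(x^k) \rangle + \|\gamma \nabla f(x^k)\|^2$

$\le \|x^k - x^*\|^2 - \left( \frac{2}{L\gamma} - 1 \right) \|\gamma \nabla f(x^k)\|^2$.

Therefore, $T$ is quasi-averaged if

$\gamma \in \left(0, \frac{2}{L}\right)$.

• In fact, it is easy to show that $T$ is averaged.

• The convergence result applies to gradient descent.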
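The final inequality can be checked on a convex function that is not strongly convex. The sketch below assumes $f(x) = \frac{1}{2}(x_1 + x_2 - 2)^2$ on $\mathbb{R}^2$, for which $\nabla f$ is $L$-Lipschitz with $L = 2$ and $x^* = (1, 1)$ is one of many minimizers; the step size 0.8 lies in $(0, 2/L)$. For this particular quadratic the bound happens to hold with equality, so a small tolerance is used.

```python
L_CONST = 2.0   # Lipschitz constant of grad f for this example
GAMMA = 0.8     # step size inside (0, 2 / L_CONST)
X_STAR = (1.0, 1.0)  # one minimizer of f


def grad_f(x):
    # Gradient of f(x) = 0.5 * (x1 + x2 - 2)**2.
    s = x[0] + x[1] - 2.0
    return (s, s)


def sq_dist(a, b):
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


x = (3.0, -4.0)
bound_ok = True
for _ in range(100):
    g = grad_f(x)
    x_next = (x[0] - GAMMA * g[0], x[1] - GAMMA * g[1])
    step_sq = GAMMA ** 2 * (g[0] ** 2 + g[1] ** 2)  # ||gamma * grad f(x^k)||^2
    rhs = sq_dist(x, X_STAR) - (2.0 / (L_CONST * GAMMA) - 1.0) * step_sq
    if sq_dist(x_next, X_STAR) > rhs + 1e-9:
        bound_ok = False
    x = x_next

final_grad = grad_f(x)
print(x, bound_ok, final_grad)
```

The limit need not equal the chosen $x^*$; it is some minimizer on the line $x_1 + x_2 = 2$.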


Composition of operators

• If $T_1, \dots, T_m : H \to H$ are nonexpansive, then $T_1 \circ \cdots \circ T_m$ is nonexpansive.

• If $T_1, \dots, T_m : H \to H$ are averaged, then $T_1 \circ \cdots \circ T_m$ is averaged.

• The averagedness constants get worse: let $T_i$ be $\alpha_i$-averaged (allowing $\alpha_i = 1$), then $T = T_1 \circ \cdots \circ T_m$ is $\alpha$-averaged where

$\alpha = \frac{m}{m - 1 + \frac{1}{\max_i \alpha_i}}$

• In addition, if any $T_i$ is contractive, $T_1 \circ \cdots \circ T_m$ is contractive.
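A composition of two averaged operators can be sketched with alternating projections. The example assumes two lines through the origin in $\mathbb{R}^2$, the $x_1$-axis and the diagonal $x_1 = x_2$; each projection is firmly nonexpansive, and the composed iteration approaches the intersection point $0$. The set choice and names are illustrative.

```python
import math


def proj_A(x):
    # Projection onto the x1-axis.
    return (x[0], 0.0)


def proj_B(x):
    # Projection onto the diagonal line x1 = x2.
    m = 0.5 * (x[0] + x[1])
    return (m, m)


def T(x):
    # Composition of the two projections.
    return proj_A(proj_B(x))


x = (4.0, 2.0)
dists = []
for _ in range(60):
    x = T(x)
    dists.append(math.hypot(x[0], x[1]))  # distance to the intersection {0}

print(dists[0], dists[-1])
```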


Special cases

projected-gradient method:

• convex problem:

$\text{minimize}_x \; f(x) \quad \text{subject to } x \in C$

• assume sufficient intersection between $\mathrm{dom} f$ and $C$

• define:

$T := \mathrm{proj}_C \circ (I - \lambda \nabla f)$

• assume $\nabla f$ is $L$-Lipschitz, let $\lambda \in (0, 2/L)$

• since both $\mathrm{proj}_C$ and $(I - \lambda \nabla f)$ are averaged, $T$ is averaged

• therefore, the following sequence weakly converges to a minimizer, if one exists:

$x^{k+1} \leftarrow T x^k = \mathrm{proj}_C\big(x^k - \lambda \nabla f(x^k)\big)$


Special cases

prox-gradient method:

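• convex problem:

$\text{minimize}_x \; f(x) + h(x)$

• assume sufficient intersection between $\mathrm{dom} f$ and $\mathrm{dom}\, h$

• define:

$T := \mathrm{prox}_{\lambda h} \circ (I - \lambda \nabla f)$

• assume $\nabla f$ is $L$-Lipschitz, let $\lambda \in (0, 2/L)$

• since both $\mathrm{prox}_{\lambda h}$ and $(I - \lambda \nabla f)$ are averaged, $T$ is averaged

• therefore, the following sequence weakly converges to a minimizer, if one exists:

$x^{k+1} \leftarrow T x^k = \mathrm{prox}_{\lambda h}\big(x^k - \lambda \nabla f(x^k)\big)$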
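A minimal sketch of the prox-gradient iteration, assuming the hypothetical problem $f(x) = \frac{1}{2}\|x - b\|^2$ with $b = (3, 0.5)$ and $h(x) = \|x\|_1$ on $\mathbb{R}^2$. Here $L = 1$, the step $\lambda = 0.5$ lies in $(0, 2/L)$, and $\mathrm{prox}_{\lambda h}$ is componentwise soft-thresholding. The minimizer of this problem is $(2, 0)$.

```python
B = (3.0, 0.5)  # illustrative data vector
LAM = 0.5       # step size inside (0, 2 / L) with L = 1


def soft(v, t):
    # Soft-thresholding: proximal operator of t * |v|.
    if v > t:
        return v - t
    if v < -t:
        return v + t
    return 0.0


def T(x):
    # Prox-gradient operator: prox_{LAM * h} applied to x - LAM * grad f(x),
    # where grad f(x) = x - B and h is the l1 norm.
    forward = tuple(xi - LAM * (xi - bi) for xi, bi in zip(x, B))
    return tuple(soft(v, LAM) for v in forward)


x = (0.0, 5.0)
for _ in range(100):
    x = T(x)

print(x)
```

The second component is driven exactly to zero, which is the usual sparsity effect of the $\ell_1$ term.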


Special cases

Later in this course, we will see more special cases

• forward-backward iteration

• Douglas-Rachford and Peaceman-Rachford iteration

• ADMM

• Tseng’s forward-backward-forward iteration

• Davis-Yin iteration

• primal-dual iteration

• ···


Summary

• Fixed-point iteration and analysis are powerful tools

• Contractive T : fixed-point exists, is unique, iteration strongly converges

• Nonexpansive T : bounded, if fixed-point exists

• Averaged T : weakly converges, if fixed-point exists

• More power: closedness under composition
