* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download (3) 2013 test

Survey

Document related concepts

Transcript

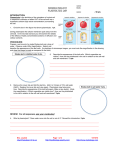

Assessment 2, Data Mining IAA, Jan. 2013 1. (8 pts.) Terminology: How many “nodes” __5__ and how many “leaves” (terminal nodes) __3___ are in this decision tree? ___ root node|___| _________|__________ _|_ _|_ node |___| node |___| leaf ________|_______ _|_ _|_ node |___|leaf node|___|leaf 2. (10) In our neural network example with the veterans data, our first attempt at fitting a model using all of the features (inputs) failed to converge. What did we do to remedy that situation? Explain briefly. We preceded the neural network with a logistic regression using stepwise to select a model. Inputs not selected are automatically eliminated from the neural network node. 3. (10 pts.) What Chi-Square p-value _0.001_gives logworth 3? -3 =log10(p) 10**(-3)= p 0.001=p 4. (12 pts.) I have a neural network for predicting the probability p that someone will be a good saleswoman. For a particular applicant for a sales job, my neural network gives estimated probability p=0.3 that she will be a good saleswoman. If she is not good, she will cost me $4,000 per month in the long run because her sales are less than her salary. On the other hand, if she is good, she will generate $20,000 in profit (after adjusting for her salary) per month. Based on this information, should I hire the applicant? Explain using numbers. Expected profit = -4000(.7)+20000(.3) = -2800+6000 >0 so I would hire her 5. (10 pts.) A fire alarm company runs a logistic regression to estimate the probability p of someone waking from sleep as a function of the alarm noise level X. Their regression suggests using p = eL/(1+eL) to estimate the probability where L = -10 +0.5X. For what value of X is there a 50% chance of awakening? L=0 is the 50-50 cutoff (because p=1/(1+1) at L=0). Setting L=-10+.5X = 0 implies X=20. 6. (20 pts.) In a group of 10,000 people, there are 5,000 that bought a smart phone and 2,000 that bought a Kindle reader. Both of these counts include 1,500 people that bought both. The other 4,500 people bought neither. Assume no priors or decisions (i.e. profits & losses) were specified. For the “rule” Phone=>Kindle, compute the Support: 1500/10000=15% Confidence: 1500/5000=30%_ Expected Confidence: 2000/10000=20% and Lift: 30/20=1.5 7. (30 pts.) A tree for a binary response (0 or 1) has just two leaves. No decisions (i.e. profits & losses) or priors were specified. Here are the counts of 0s and 1s in the two leaves. Leaf 1 400 1s 100 0s Leaf 2 200 1s 300 0s I can call everything a one which gives the point __(1,1)__ (I misclassify every 0) on the ROC curve or I can call everything in leaf 1 a one and everything in leaf 2 a zero which gives the point ___ (1/4, 2/3) __ or I can call everything a zero which gives the point ____(0,0)____ (I misclassify every 1). For the second of the three decision rules above what, are the sensitivity 2/3 and specificity 3/4 of the decision rule.