EIGENVECTOR CALCULATION

Let $A$ have an approximate eigenvalue $\lambda$, so that $A - \lambda I$ is almost singular. How do we find a corresponding eigenvector? If the eigenvalue is of multiplicity 1, then in linear algebra courses we usually just try to solve the linear system

$$(A - \lambda I)\, x = 0$$

Oversimplifying, we usually drop one of the equations, arbitrarily assign one of the components of $x$, and then solve for the remaining components.

In numerical computations, this almost always leads to at least one of the eigenvectors being obtained inaccurately, because the linear system being solved is ill-conditioned. Consequently, another approach is usually used to find the eigenvector $x$.
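To put a number on "almost singular", the following minimal sketch computes the 2-norm condition number of $A - \lambda I$ as the shift $\lambda$ moves toward an eigenvalue. It borrows the 3×3 symmetric matrix from the EXAMPLE section of these notes. The shift values and the helper name `shifted_condition_number` are assumptions chosen for illustration, not part of the original notes.

```python
import numpy as np

# Symmetric 3x3 matrix taken from the EXAMPLE section of these notes.
A = np.array([[6.0, 4.0, 3.0],
              [4.0, 3.0, 2.0],
              [3.0, 2.0, 1.0]])


def shifted_condition_number(A, lam):
    """Return the 2-norm condition number of A - lam*I (hypothetical helper)."""
    return np.linalg.cond(A - lam * np.eye(A.shape[0]))


# Illustrative shifts (assumed values) moving toward the eigenvalue near 0.23358.
shifts = [0.2, 0.23, 0.2336, 0.233578]
conds = [shifted_condition_number(A, lam) for lam in shifts]
for lam, c in zip(shifts, conds):
    print(f"lambda = {lam:<9} cond(A - lambda*I) = {c:.3e}")
```

The condition number grows as the shift approaches the eigenvalue, which is the sense in which the shifted system becomes harder to solve accurately.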

INVERSE ITERATION

Let $\lambda$ be an approximate eigenvalue of $A$, corresponding to some true eigenvalue $\lambda_k$ of $A$. Let $x_k$ denote the associated eigenvector. Choose an initial estimate $z^{(0)} \approx x_k$, often using a random number generator. The inverse iteration method is defined by

$$(A - \lambda I)\, w^{(m+1)} = z^{(m)}, \qquad z^{(m+1)} = \frac{w^{(m+1)}}{\|w^{(m+1)}\|_\infty}$$

for $m = 0, 1, 2, \ldots$ It is important that $\lambda$ not be exactly a true eigenvalue, or else the matrix $A - \lambda I$ will be singular.
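The two-step recurrence above can be sketched in a few lines of NumPy. This is a minimal illustration, not a production routine: the function name `inverse_iteration`, the fixed iteration count, and the 2×2 test matrix are assumptions introduced here.

```python
import numpy as np


def inverse_iteration(A, lam, z0, num_iters=5):
    """Run num_iters steps of inverse iteration with a fixed shift lam.

    Each step solves (A - lam*I) w = z and rescales w to max-norm 1.
    """
    shifted = A - lam * np.eye(A.shape[0])
    z = np.asarray(z0, dtype=float)
    for _ in range(num_iters):
        w = np.linalg.solve(shifted, z)        # (A - lam*I) w^(m+1) = z^(m)
        z = w / np.linalg.norm(w, ord=np.inf)  # z^(m+1) = w^(m+1) / ||w^(m+1)||_inf
    return z


# Small illustrative case (assumed): eigenvalues are 1 and 3,
# with eigenvectors (1, -1) and (1, 1).
A_demo = np.array([[2.0, 1.0],
                   [1.0, 2.0]])
z_demo = inverse_iteration(A_demo, lam=2.9, z0=[1.0, 0.0], num_iters=10)
print(z_demo)  # close to a multiple of (1, 1)
```

Because the matrix $A - \lambda I$ does not change between steps, a larger problem would normally factor it once and reuse the factors, for example with `scipy.linalg.lu_factor` and `scipy.linalg.lu_solve`. The sketch calls `np.linalg.solve` on every step only to stay short.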

EXAMPLE

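Let

$$A = \begin{bmatrix} 6 & 4 & 3 \\ 4 & 3 & 2 \\ 3 & 2 & 1 \end{bmatrix}$$

The eigenvalues are

$$\lambda_1 \doteq 0.23357813629678, \qquad \lambda_2 \doteq -0.42027581011042, \qquad \lambda_3 \doteq 10.18669767381363$$

The corresponding normalized eigenvectors are

$$x_1 \doteq \begin{bmatrix} -0.64061130860597 \\ 1.00000000000000 \\ -0.10198831463966 \end{bmatrix}, \quad x_2 \doteq \begin{bmatrix} 0.37938985766816 \\ 0.14105311855297 \\ -1.00000000000000 \end{bmatrix}, \quad x_3 \doteq \begin{bmatrix} 1.00000000000000 \\ 0.68921959524450 \\ 0.47660643094521 \end{bmatrix}$$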

In all cases, starting from a random $z^{(0)}$, the first iterate $z^{(1)}$ was already the correct answer to the digits shown.
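A rough way to reproduce this experiment is sketched below. The notes do not say how accurate the eigenvalue estimates were, so the sketch assumes the quoted eigenvalues rounded to six decimals and runs three iterations rather than one. The seed, the iteration count, and the variable names are likewise assumptions.

```python
import numpy as np

A = np.array([[6.0, 4.0, 3.0],
              [4.0, 3.0, 2.0],
              [3.0, 2.0, 1.0]])

# Eigenvalue estimates: the quoted values rounded to six decimals
# (the rounding is an assumption made for this sketch).
approx_eigenvalues = [0.233578, -0.420276, 10.186698]

# Normalized eigenvectors as quoted in the example (max-norm 1).
quoted_eigenvectors = [
    np.array([-0.64061130860597, 1.00000000000000, -0.10198831463966]),
    np.array([0.37938985766816, 0.14105311855297, -1.00000000000000]),
    np.array([1.00000000000000, 0.68921959524450, 0.47660643094521]),
]

rng = np.random.default_rng(0)  # fixed seed so the run is repeatable
deviations = []
for lam, x_quoted in zip(approx_eigenvalues, quoted_eigenvectors):
    z = rng.standard_normal(3)  # random starting vector z^(0)
    shifted = A - lam * np.eye(3)
    for _ in range(3):
        w = np.linalg.solve(shifted, z)
        z = w / np.linalg.norm(w, ord=np.inf)
    # An eigenvector is only determined up to sign, so compare both signs.
    dev = min(np.max(np.abs(z - x_quoted)), np.max(np.abs(z + x_quoted)))
    deviations.append(dev)
    print(f"lambda ~ {lam:>10}: max deviation from quoted vector = {dev:.2e}")
```

The comparison is made up to sign because the normalization fixes only the magnitude of the largest component, not its sign.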

CONVERGENCE

Let $A$ have a diagonal Jordan canonical form,

$$P^{-1} A P = D = \operatorname{diag}[\lambda_1, \ldots, \lambda_n]$$

with $P = [x_1, \ldots, x_n]$ the matrix of eigenvectors (assumed to be normalized with $\|x_i\|_\infty = 1$).

Expand the given $z^{(0)}$ in the basis of eigenvectors $\{x_1, \ldots, x_n\}$:

$$z^{(0)} = \sum_{j=1}^{n} \alpha_j x_j$$

Let $\lambda \approx \lambda_k$ for some $k$, and for simplicity assume $\lambda_k$ is a simple eigenvalue of $A$. Moreover, assume $\alpha_k \neq 0$. This is generally assured by using a random number generator to define $z^{(0)}$.

What are the eigenvalues and eigenvectors of $A - \lambda I$?

$$(A - \lambda I)\, x_i = (\lambda_i - \lambda)\, x_i, \qquad i = 1, \ldots, n$$

Also, this implies

$$(A - \lambda I)^{-1} x_i = \frac{1}{\lambda_i - \lambda}\, x_i, \qquad i = 1, \ldots, n$$
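The second relation can be checked numerically. The sketch below uses the symmetric 3×3 matrix from the example and an arbitrary shift of 0.2 (an assumed value), and compares the eigenvalues of $(A - \lambda I)^{-1}$ with the predicted values $1/(\lambda_i - \lambda)$.

```python
import numpy as np

A = np.array([[6.0, 4.0, 3.0],
              [4.0, 3.0, 2.0],
              [3.0, 2.0, 1.0]])
lam = 0.2  # arbitrary shift, assumed for illustration

# Eigenvalues of A (ascending order; A is symmetric, so eigvalsh applies).
eigvals_A = np.linalg.eigvalsh(A)

# Eigenvalues of the shifted inverse, which is also symmetric.
shifted_inverse = np.linalg.inv(A - lam * np.eye(3))
eigvals_inverse = np.linalg.eigvalsh(shifted_inverse)

# Prediction from the relation above, sorted to match eigvalsh's ordering.
predicted = np.sort(1.0 / (eigvals_A - lam))

print("computed :", eigvals_inverse)
print("predicted:", predicted)
```

The eigenvalue of $A$ closest to the shift becomes the eigenvalue of largest magnitude for the shifted inverse.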

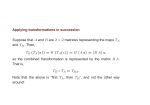

Our inverse iteration method

$$(A - \lambda I)\, w^{(m+1)} = z^{(m)}, \qquad z^{(m+1)} = \frac{w^{(m+1)}}{\|w^{(m+1)}\|_\infty}$$

is a power method. Simply write it in the form

$$w^{(m+1)} = (A - \lambda I)^{-1} z^{(m)}$$

In §9.2 on the power method, replace the role of $A$ by $(A - \lambda I)^{-1}$.

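Then from (9.2.4) of that section,

$$z^{(m)} = \sigma_m \frac{(A - \lambda I)^{-m} z^{(0)}}{\left\| (A - \lambda I)^{-m} z^{(0)} \right\|_\infty}, \qquad m \geq 0$$

with $|\sigma_m| = 1$. Using this formula and the earlier

$$(A - \lambda I)^{-1} x_i = \frac{1}{\lambda_i - \lambda}\, x_i, \qquad i = 1, \ldots, n$$

we have

$$(A - \lambda I)^{-m} z^{(0)} = \sum_{j=1}^{n} \left( \frac{1}{\lambda_j - \lambda} \right)^{m} \alpha_j x_j = \left( \frac{1}{\lambda_k - \lambda} \right)^{m} \left[ \alpha_k x_k + \sum_{\substack{j=1 \\ j \neq k}}^{n} \left( \frac{\lambda_k - \lambda}{\lambda_j - \lambda} \right)^{m} \alpha_j x_j \right]$$

Then

$$z^{(m)} = \sigma_m \frac{\alpha_k x_k + \displaystyle\sum_{\substack{j=1 \\ j \neq k}}^{n} \left( \frac{\lambda_k - \lambda}{\lambda_j - \lambda} \right)^{m} \alpha_j x_j}{\left\| \alpha_k x_k + \displaystyle\sum_{\substack{j=1 \\ j \neq k}}^{n} \left( \frac{\lambda_k - \lambda}{\lambda_j - \lambda} \right)^{m} \alpha_j x_j \right\|_\infty}$$

where the unit-modulus factor coming from $(\lambda_k - \lambda)^{-m}$ has been absorbed into $\sigma_m$.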

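If

$$|\lambda_k - \lambda| \ll |\lambda_j - \lambda|, \qquad j \neq k,$$

then

$$z^{(m)} \approx \sigma_m x_k$$

with only a small value of $m$. With each iteration, the error decreases by a factor of

$$\max_{j \neq k} \left| \frac{\lambda_k - \lambda}{\lambda_j - \lambda} \right|$$

This explains the rapid convergence of our numerical example.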
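The error-reduction factor can be observed directly. The sketch below uses the example matrix with a deliberately crude shift of 0.25 (an assumed value, chosen so the decay is slow enough to watch), takes reference eigenpairs from `np.linalg.eigh`, and compares the ratio of successive errors with the predicted factor. The starting vector and the helper `scale_to_unit_max` are also assumptions.

```python
import numpy as np

A = np.array([[6.0, 4.0, 3.0],
              [4.0, 3.0, 2.0],
              [3.0, 2.0, 1.0]])
lam = 0.25  # deliberately crude shift (assumed) so the decay is visible

# Reference eigenpairs from NumPy's symmetric eigensolver.
eigvals, eigvecs = np.linalg.eigh(A)
k = int(np.argmin(np.abs(eigvals - lam)))  # index of the eigenvalue closest to lam
others = np.delete(eigvals, k)
predicted_factor = np.max(np.abs(eigvals[k] - lam) / np.abs(others - lam))


def scale_to_unit_max(v):
    """Scale v so its largest-magnitude component equals +1 (this also fixes the sign)."""
    return v / v[np.argmax(np.abs(v))]


x_ref = scale_to_unit_max(eigvecs[:, k])

z = np.array([1.0, 1.0, 1.0])  # starting vector (assumed)
shifted = A - lam * np.eye(3)
errors = []
for m in range(6):
    w = np.linalg.solve(shifted, z)
    z = w / np.linalg.norm(w, ord=np.inf)
    errors.append(np.max(np.abs(scale_to_unit_max(z) - x_ref)))

observed_ratios = [errors[i + 1] / errors[i] for i in range(len(errors) - 1)]
print("predicted factor:", predicted_factor)
print("observed ratios: ", observed_ratios)
```

After the first step or two, the observed ratios should settle near the predicted factor. With a far more accurate shift, as in the numerical example, the factor is so small that a single iteration is enough.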