Making Data Warehouse Easy

Conor Cunningham – Principal Architect

Thomas Kejser – Principal PM

Introduction

• We build and implement Data Warehouses (and the engines that run them)

• We also fix DWs that others build

• This talk covers the key patterns we use

• We will also show you how you can make your life easier with Microsoft’s SQL technologies

What World do you Live in?

Hardware should be bought when I know the details

I can’t wait for you to figure all that out. Do it, NOW!

I need to know my hardware CAPEX before I decide to invest

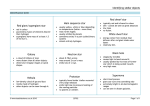

Sketch a Rough Model

1. Roughly define the business problem

2. Decide on dimensions

3. Build the dimension/fact matrix (dimension columns can wait)

Fact/Dim     Sales   Inventory   Purchases
Customer       X
Product        X         X           X
Time           X         X           X
Date           X         X           X
Store          X
Warehouse      X         X           X
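While prototyping, a dimension/fact matrix can be kept as a small data structure. The Python sketch below is illustrative only. The matrix contents are a hypothetical reading of the example above, and the helper name `shared_dimensions` is ours. The helper lists the dimensions used by more than one fact. These shared (conformed) dimensions are usually worth modelling first, because several facts depend on them.

```python
from collections import Counter

# Hypothetical matrix modelled on the slide's example: fact -> dimensions it uses.
BUS_MATRIX = {
    "Sales": {"Customer", "Product", "Time", "Date", "Store", "Warehouse"},
    "Inventory": {"Product", "Time", "Date", "Warehouse"},
    "Purchases": {"Product", "Time", "Date", "Warehouse"},
}


def shared_dimensions(matrix):
    """Return the dimensions referenced by more than one fact, sorted by name."""
    usage = Counter(dim for dims in matrix.values() for dim in dims)
    return sorted(dim for dim, count in usage.items() if count > 1)


print(shared_dimensions(BUS_MATRIX))
```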

Estimate Storage

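$$\mathit{Factrow}_n = \sum_{M_i \in \mathit{Fact}_n} |M_i| + \sum_{\mathit{Dim}_i \in \mathit{Fact}_n} \mathrm{Lg}\big(\mathrm{Rows}(\mathit{Dim}_i)\big)\,\mathrm{B}$$

where each measure $|M_i| \approx 8\,\mathrm{B}$ and each dimension key $\mathrm{Lg}(\mathrm{Rows}(\mathit{Dim}_i))\,\mathrm{B} \approx 4\,\mathrm{B}$.

$$\mathit{Fact}_n = \mathit{Factrow}_n \cdot \mathit{Rows/day}_n \cdot [\mathit{days\ stored}_n]$$

$$\mathit{DW} = [\mathit{Compression}] \cdot \frac{1\,\mathrm{TB}}{10^{12}\,\mathrm{B}} \cdot \sum_{i \in \mathit{Facts}} |\mathit{Fact}_i|$$

where $[\mathit{Compression}] \approx 1/3$, or measure it with sp_estimate_data_compression_savings.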
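The storage formulas can be turned into a quick calculator. This is a minimal sketch that assumes the slide's rules of thumb: 8 B per measure, 4 B per dimension key and a compression factor of 1/3. The Sales figures (4 measures, 5 dimensions, 10 million rows/day, 3 years kept) are hypothetical example inputs, not data from the talk.

```python
# Rules of thumb from the slide: ~8 B per measure, ~4 B per dimension key.
MEASURE_BYTES = 8
KEY_BYTES = 4


def fact_row_bytes(num_measures, num_dim_keys):
    """Factrow_n: the measures plus one integer key per dimension."""
    return num_measures * MEASURE_BYTES + num_dim_keys * KEY_BYTES


def fact_bytes(row_bytes, rows_per_day, days_stored):
    """Fact_n = Factrow_n * Rows/day_n * days stored_n."""
    return row_bytes * rows_per_day * days_stored


def dw_terabytes(fact_sizes, compression=1 / 3):
    """DW = Compression * (1 TB / 10^12 B) * sum of fact sizes."""
    return compression * sum(fact_sizes) / 10**12


# Hypothetical Sales fact: 4 measures, 5 dimensions, 10 million rows/day, 3 years kept.
sales_row = fact_row_bytes(4, 5)
sales = fact_bytes(sales_row, 10_000_000, 3 * 365)
print(sales_row, sales, round(dw_terabytes([sales]), 4))
```

For real tables, replace the 1/3 default with a ratio measured via sp_estimate_data_compression_savings.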

Why Integer Keys are Cheaper

• Smaller row sizes

• More rows/page = more compression

• Faster to join

• Faster in column stores
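A back-of-the-envelope check of the "more rows/page" point follows. The model is deliberately simplified. It takes the 8192-byte SQL Server page minus its 96-byte header and ignores per-row overhead and the slot array. The fact row has 5 dimension keys plus 4 eight-byte measures, and the 40-byte string key (roughly a 20-character NVARCHAR) is a hypothetical comparison point.

```python
# Simplified model: an 8 KB page minus its 96-byte header; per-row overhead
# and the slot array are ignored, so real numbers will be somewhat lower.
PAGE_DATA_BYTES = 8192 - 96


def rows_per_page(num_keys, key_bytes, num_measures=4, measure_bytes=8):
    """How many fixed-size fact rows fit in one page under this model."""
    row = num_keys * key_bytes + num_measures * measure_bytes
    return PAGE_DATA_BYTES // row


int_keys = rows_per_page(num_keys=5, key_bytes=4)      # INT keys
string_keys = rows_per_page(num_keys=5, key_bytes=40)  # e.g. 20-char NVARCHAR keys
print(int_keys, string_keys)
```

Under these assumptions the INT-keyed rows pack roughly 4.5 times more densely. That also means fewer pages to read per scan.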

Pick Standard HW Configuration

• Small (GB to low TB): Business Decision Appliance

• Medium (up to 80 TB): Fast Track

• Large (100s of TB): PDW

– Note: elastic scale, and an option for lower sizes too!

• Be careful with sizes: some are listed pre-compression

Server Config / File Layout

1. Follow the Fast Track (FT) guidance!

2. You probably don’t need to do anything else

Why does Fast Track/PDW Work?

• Warehouses are I/O hungry

– GB/sec

– This is high (in SAN terms)

• We did the HW testing for you

• Guidance on data layout
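The arithmetic below shows why sustained GB/sec throughput matters for warehouse scans. This is a minimal sketch. The 200 MB/s and 2 GB/s rates and the 1 TB table size are hypothetical, and caching and compression are ignored.

```python
def scan_seconds(data_bytes, bytes_per_second):
    """Time to read data_bytes sequentially at a sustained throughput."""
    return data_bytes / bytes_per_second


ONE_TB = 10**12
# Hypothetical throughputs: a modest SAN path vs. a GB/sec-class configuration.
for label, rate in [("200 MB/s", 200 * 10**6), ("2 GB/s", 2 * 10**9)]:
    print(label, scan_seconds(ONE_TB, rate), "seconds")
```

At these rates a full 1 TB scan drops from well over an hour to under ten minutes.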

Implement Prototype Model

• Design schema

• Analyse data quality with DQS/Excel

– Probably not what you expected to find!

• Start with small data samples!
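Before reaching for DQS or Excel, a quick column profile of a small sample can already reveal surprises. The Python sketch below is a stand-in for that first look and does not reproduce how DQS works. The function names and the sample rows are hypothetical.

```python
from collections import Counter


def _is_missing(value):
    """Treat None and blank/whitespace-only strings as missing."""
    return value is None or (isinstance(value, str) and not value.strip())


def profile(rows):
    """Per column: missing/blank count, distinct count, most common value."""
    columns = sorted({col for row in rows for col in row})
    report = {}
    for col in columns:
        present = [row.get(col) for row in rows if not _is_missing(row.get(col))]
        report[col] = {
            "missing": len(rows) - len(present),
            "distinct": len(set(present)),
            "most_common": Counter(present).most_common(1),
        }
    return report


# Hypothetical sample: one city spelled three ways, plus blanks.
sample = [{"City": "London"}, {"City": "london "}, {"City": "LONDON"},
          {"City": ""}, {"City": None}]
print(profile(sample))
```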

Schema Tool Discussion!

SSMS with Schema Designer

SQL Server Data Tools

Prototyping Hints

• Generate INTEGER keys out of string keys with a hash

• Focus on Type 1 dimensions

• Use PowerPivot/Excel to show data fast

• Drive the conversation with end users!
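The hash-key hint can be prototyped as below. This sketch assumes MD5 truncated to a signed 32-bit integer. The slide does not name a hash; on SQL Server, HASHBYTES is the analogous built-in. With only 32 bits, collisions are possible, so the sketch checks for them instead of assuming uniqueness. `int_key`, `build_key_map` and the sample keys are hypothetical names.

```python
import hashlib


def int_key(business_key: str) -> int:
    """Map a string key to a signed 32-bit integer (first 4 bytes of its MD5 digest)."""
    digest = hashlib.md5(business_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], byteorder="big", signed=True)


def build_key_map(business_keys):
    """Assign INT keys, failing loudly on a collision (possible with only 32 bits)."""
    mapping = {}
    for key in business_keys:
        surrogate = int_key(key)
        existing = mapping.setdefault(surrogate, key)
        if existing != key:
            raise ValueError(f"hash collision between {existing!r} and {key!r}")
    return mapping


keys = build_key_map(["CUST-0001", "CUST-0002", "CUST-0001"])
print(keys)
```

This suits prototyping. A production load would typically assign IDENTITY or SEQUENCE surrogate keys instead.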

Customer Type 1

Key   Name     City
1     Thomas   London

Customer Type 2

Key   Name     City     From   To
1     Thomas   Malmo    2006   2011
2     Thomas   London   2012   9999
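The difference between the two customer tables can be sketched as two update rules. A Type 1 change overwrites the row. A Type 2 change closes the current row and appends a new version with a new key and an open-ended "To" of 9999. This is a minimal sketch under stated assumptions: `name` stands in for the business key, the function and class names are hypothetical, and the validity columns are simply unused for Type 1.

```python
from dataclasses import dataclass

OPEN_END = 9999  # "To" value marking the current row, as in the Type 2 table


@dataclass
class CustomerRow:
    key: int
    name: str  # stands in for the business key in this sketch
    city: str
    valid_from: int = 0  # unused for Type 1 rows
    valid_to: int = OPEN_END


def apply_type1(rows, name, new_city):
    """Type 1: overwrite in place; no history is kept."""
    for row in rows:
        if row.name == name:
            row.city = new_city


def apply_type2(rows, name, new_city, change_year):
    """Type 2: close the current row, then append a new version with a new key."""
    current = next(r for r in rows if r.name == name and r.valid_to == OPEN_END)
    if current.city == new_city:
        return
    current.valid_to = change_year - 1
    next_key = max(r.key for r in rows) + 1
    rows.append(CustomerRow(next_key, name, new_city, change_year))


type1 = [CustomerRow(1, "Thomas", "Malmo")]
apply_type1(type1, "Thomas", "London")

type2 = [CustomerRow(1, "Thomas", "Malmo", 2006)]
apply_type2(type2, "Thomas", "London", 2012)
for row in type2:
    print(row)
```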

Prototype: What users will teach you

• They will change or refocus their minds when they see the actual data

• You have probably forgotten some dimension data

• You may have misestimated data sizes

Schema Design Hints

• Build a star schema

• Beginners may want to avoid snowflakes (most of our users just use a star)

• Implement a Date table (use an INT key in YYYYMMDD format)

– Fact.MyDate BETWEEN 20000000 AND 20009999

– Fact.MyDate BETWEEN '2000-01-01' AND '2000-12-31'

– YEAR(Fact.MyDate) = 2000

• Surrogate keys: IDENTITY columns, SEQUENCE objects

• Usually you can validate PK/FK constraints during load and avoid declaring them in the model

• Fact table: fixed-size columns, declared NOT NULL (if possible)

• For ColumnStore, data types need to be the basic ones…
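The INT YYYYMMDD date key can be tried out with a tiny star in SQLite, used here only as a stand-in for SQL Server. Table and column names other than MyDate are hypothetical. The sketch answers "sales in 2000" two ways: with the slide's range predicate on the INT key, and through the Date table's Year attribute.

```python
import sqlite3
from datetime import date, timedelta


def yyyymmdd(d: date) -> int:
    """INT date key in YYYYMMDD format, e.g. 2000-12-31 -> 20001231."""
    return d.year * 10000 + d.month * 100 + d.day


conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE DimDate (DateKey INTEGER PRIMARY KEY, FullDate TEXT NOT NULL,
                          Year INTEGER NOT NULL, Month INTEGER NOT NULL);
    CREATE TABLE FactSales (MyDate INTEGER NOT NULL, Amount REAL NOT NULL);
    """
)

day = date(1999, 12, 30)
while day <= date(2001, 1, 2):
    conn.execute("INSERT INTO DimDate VALUES (?, ?, ?, ?)",
                 (yyyymmdd(day), day.isoformat(), day.year, day.month))
    day += timedelta(days=1)

conn.executemany("INSERT INTO FactSales VALUES (?, ?)",
                 [(19991231, 5.0), (20000101, 10.0), (20001231, 20.0), (20010101, 40.0)])

# Range predicate directly on the INT key, as on the slide.
by_range = conn.execute(
    "SELECT SUM(Amount) FROM FactSales WHERE MyDate BETWEEN 20000000 AND 20009999"
).fetchone()[0]
# The same question answered through the Date table's attributes.
by_join = conn.execute(
    "SELECT SUM(f.Amount) FROM FactSales f JOIN DimDate d ON f.MyDate = d.DateKey "
    "WHERE d.Year = 2000"
).fetchone()[0]
print(by_range, by_join)
```

Because YYYYMMDD keys sort in date order, a plain range on the key selects a whole year without applying a function to the column.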

Why Facts/Dimensions?

• Optimizers have a tough job

• Our query optimizer (QO) generates star joins early in its search

• We look for the star join pattern to do this

– 1 big table, with dimensions joined to it…

• Following this pattern will help you

– Reduced compilation time

– Better plan quality (on average)

• You can look at the plans and see whether the optimizer got the “right” shape

– Wrong plan shape: either your query is non-standard, OR perhaps the QO messed up!
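As a rough self-check on a query's join graph, the toy function below asks whether it has the "1 big table, dimensions joined to it" shape. This is not how SQL Server's optimizer detects star joins. It is a hypothetical heuristic: treat the largest table as the fact, then require every join to touch it and every other table to be joined. The table names and row counts are made up.

```python
def looks_like_star(table_rows, joins):
    """Toy check: the largest table is the fact, every join touches it,
    and every table takes part in some join."""
    fact = max(table_rows, key=table_rows.get)
    joined = {t for edge in joins for t in edge}
    return all(fact in edge for edge in joins) and joined == set(table_rows)


tables = {"FactSales": 10**9, "DimDate": 3650, "DimProduct": 10**5}
star = [("FactSales", "DimDate"), ("FactSales", "DimProduct")]
snowflake_tables = dict(tables, DimCategory=50)
snowflake = star + [("DimProduct", "DimCategory")]

print(looks_like_star(tables, star))
print(looks_like_star(snowflake_tables, snowflake))
```

The snowflaked version fails because DimCategory joins to a dimension rather than to the fact.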

Partition/Index the Model

• Partition the fact by load window

• Fact: clustered index or heap?

– Cluster the fact on the seek key

– Cluster the fact on the date column (if its cardinality > number of partitions)

– Leave it as a heap

• Column Store index on

– All columns of the fact

– Columns of large dimensions

• Cluster the dimension on its key
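Partitioning by load window can be scripted rather than hand-typed. This sketch assumes monthly load windows over an INT YYYYMMDD date key. With RANGE RIGHT, each boundary value is the first day of its partition. It only builds the T-SQL text. The function name `pfFactDate` and the start month are hypothetical.

```python
from datetime import date


def month_starts(first: date, count: int):
    """Yield the first day of `count` consecutive months, starting at `first`."""
    year, month = first.year, first.month
    for _ in range(count):
        yield date(year, month, 1)
        month += 1
        if month == 13:
            year, month = year + 1, 1


def partition_function_sql(name: str, first: date, months: int) -> str:
    """T-SQL text for a RANGE RIGHT partition function on an INT YYYYMMDD key."""
    bounds = ", ".join(
        str(d.year * 10000 + d.month * 100 + d.day) for d in month_starts(first, months)
    )
    return f"CREATE PARTITION FUNCTION {name} (int) AS RANGE RIGHT FOR VALUES ({bounds});"


print(partition_function_sql("pfFactDate", date(2012, 11, 1), 3))
```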

If (followed pattern) {expect …}

• Star join shape

• Properties:

– Usually all hash joins

– Parallelism

– Bitmaps

– Join dimensions together, then scan the fact

– Indexes on filtered dimension columns are helpful if they are covering

The Approximate Plan

Stream Aggregate
  Hash Partial Aggregate
    Hash Join
      Batch Build
        Dim Seek
      Hash Join
        Batch Build
          Dim Scan
        Fact CSI Scan

Column Store Plan Shapes

• For ColumnStore, it’s the same shape

• Minor differences

– Batch mode (not row mode)

– Parallelism works differently

– Converts to row mode above the star join shape

• If you don’t get a batch mode plan, performance is likely to be much slower (usually this implies a schema design issue or a plan costing issue)

• The partitioning sliding window works well with ColumnStore (especially since the table must be read-only)

Data Maintenance

• Statistics

– Add manually on correlated columns

– Update fact statistics after the ETL load

– Leave dimensions to auto-update

• Rebuilding indexes?

– Probably not needed

– If needed, make it part of the ETL load

• Switch out old partitions and drop the switch target

– Automate this
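One way to automate the switch-out is to generate the statements for each cycle. The sketch below rests on several assumptions. The fact uses a RANGE RIGHT partition function, and partition 1 holds the oldest load window. The switch target is an empty table with matching structure on the same filegroup, created beforehand; this sketch does not create it. The table and function names are hypothetical.

```python
def sliding_window_sql(fact: str, switch_target: str, function: str, oldest_boundary: int):
    """T-SQL statements to age out the oldest partition of a RANGE RIGHT partitioned fact.

    Assumes partition 1 holds the oldest load window and that `switch_target`
    already exists, is empty, and matches the fact's structure and filegroup.
    """
    return [
        # Metadata-only move of the oldest partition into the switch target.
        f"ALTER TABLE {fact} SWITCH PARTITION 1 TO {switch_target};",
        # Discard the aged-out data.
        f"DROP TABLE {switch_target};",
        # Remove the now-empty partition by merging away its boundary.
        f"ALTER PARTITION FUNCTION {function}() MERGE RANGE ({oldest_boundary});",
    ]


for statement in sliding_window_sql("dbo.FactSales", "dbo.FactSales_Old", "pfFactDate", 20121101):
    print(statement)
```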

Serve the Data

• Self Service

– Tabular / Dimensional Cubes

– Excel / PowerPivot / PowerView

• Fixed Reports

– Reporting Services

– PowerView

• Don’t clean data in “serving engines”

– Materialise post-cleaned data as a column in the relational source
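Materialising cleaned data might look like the following, with SQLite standing in for the relational source. The cleaning rule (trim, then capitalise the first letter) and the names `DimCustomer` and `CityClean` are hypothetical. The point is that the cleaned value is stored once as a column, so every cube, report or workbook reads the same result.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE DimCustomer (CustomerKey INTEGER PRIMARY KEY, City TEXT)")
conn.executemany("INSERT INTO DimCustomer (City) VALUES (?)",
                 [("london ",), ("LONDON",), ("Malmo",)])

# Materialise the cleaned value once, in the relational source, instead of
# re-deriving it in each serving engine.
conn.execute("ALTER TABLE DimCustomer ADD COLUMN CityClean TEXT")
conn.execute(
    "UPDATE DimCustomer SET CityClean = "
    "upper(substr(trim(City), 1, 1)) || lower(substr(trim(City), 2))"
)
cleaned = [row[0] for row in conn.execute(
    "SELECT CityClean FROM DimCustomer ORDER BY CustomerKey")]
print(cleaned)
```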
