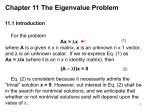

Eigenvalues and Eigenvectors

Definition: The real number $\lambda$ is said to be an eigenvalue of the $n \times n$ matrix $A$ provided that there exists a nonzero vector $v$ such that $Av = \lambda v$. The vector $v$ is called an eigenvector of the matrix $A$ associated with the eigenvalue $\lambda$. Eigenvalues and eigenvectors are also called characteristic values and characteristic vectors.

The equation $|A - \lambda I| = 0$, where $I$ is the $n \times n$ identity matrix, is called the characteristic equation of the square matrix $A$.

Theorem: The real number $\lambda$ is an eigenvalue of the $n \times n$ matrix $A$ if and only if $\lambda$ satisfies the characteristic equation.

The eigenspace associated with a fixed eigenvalue $\lambda$ is the solution space of the homogeneous system $(A - \lambda I)v = 0$.

Definition: The $n \times n$ matrices $A$ and $B$ are similar provided that there exists an invertible matrix $P$ such that $B = P^{-1}AP$.

Definition: The $n \times n$ matrix $A$ is called diagonalizable if it is similar to a diagonal matrix $D$, that is, if there exist a diagonal matrix $D$ and an invertible matrix $P$ such that $D = P^{-1}AP$.

Theorem: The $n \times n$ matrix $A$ is diagonalizable if and only if it has $n$ linearly independent eigenvectors.

Theorem: The $k$ eigenvectors $v_1, v_2, \ldots, v_k$ associated with the distinct eigenvalues $\lambda_1, \lambda_2, \ldots, \lambda_k$ of a matrix $A$ are linearly independent.

Theorem: If the $n \times n$ matrix $A$ has $n$ distinct eigenvalues, then it is diagonalizable.

Theorem: Let $\lambda_1, \lambda_2, \ldots, \lambda_k$ be the distinct eigenvalues of the $n \times n$ matrix $A$. For each $i = 1, 2, \ldots, k$, let $S_i$ be a basis for the eigenspace associated with $\lambda_i$. Then the union $S$ of the bases $S_i$ is a linearly independent set of eigenvectors of $A$.

Theorem: Eigenvectors associated with distinct eigenvalues of a symmetric matrix are orthogonal.

Theorem: The following properties of the square matrix $A$ are equivalent:
(a) $A$ is orthogonal.
(b) $A^T$ is orthogonal.
(c) The column vectors of $A$ are orthonormal.
(d) The row vectors of $A$ are orthonormal.
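For a real $2 \times 2$ matrix $A = \begin{pmatrix} a & b \\ c & d \end{pmatrix}$ the characteristic equation $|A - \lambda I| = 0$ reduces to $\lambda^2 - (a + d)\lambda + (ad - bc) = 0$, so the definitions above can be checked by hand. The following is a minimal Python sketch under that $2 \times 2$ assumption: it finds the real eigenvalues, one eigenvector per eigenvalue from $(A - \lambda I)v = 0$, verifies $Av = \lambda v$, and forms $D = P^{-1}AP$ with the eigenvectors as the columns of $P$. The helper names (`eigenvalues_2x2`, `eigenvector_2x2`, `inverse_2x2`, `matmul`) are hypothetical, chosen for illustration only.

```python
import math

def matmul(X, Y):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]

def eigenvalues_2x2(A):
    """Real roots of det(A - lam I) = lam^2 - (a + d) lam + (ad - bc) = 0."""
    (a, b), (c, d) = A
    trace, det = a + d, a * d - b * c
    disc = trace * trace - 4 * det
    if disc < 0:
        return []                      # complex roots: no real eigenvalues
    root = math.sqrt(disc)
    return sorted({(trace - root) / 2, (trace + root) / 2})  # a repeated root appears once

def eigenvector_2x2(A, lam):
    """One nonzero solution v of the homogeneous system (A - lam I) v = 0."""
    (a, b), (c, d) = A
    if b != 0:
        return [b, lam - a]
    if c != 0:
        return [lam - d, c]
    # A is diagonal, so a standard basis vector is an eigenvector
    return [1.0, 0.0] if math.isclose(lam, a) else [0.0, 1.0]

def inverse_2x2(P):
    (p, q), (r, s) = P
    det = p * s - q * r
    if det == 0:
        raise ValueError("P is not invertible")
    return [[s / det, -q / det], [-r / det, p / det]]

A = [[4.0, 1.0], [2.0, 3.0]]
lams = eigenvalues_2x2(A)              # roots of lam^2 - 7 lam + 10 = 0
vecs = [eigenvector_2x2(A, lam) for lam in lams]
for lam, v in zip(lams, vecs):
    Av = matmul(A, [[x] for x in v])   # v treated as a column vector
    assert all(math.isclose(Av[i][0], lam * v[i], abs_tol=1e-9) for i in range(2))

# The eigenvectors become the columns of P; then D = P^{-1} A P is diagonal.
P = [[vecs[0][0], vecs[1][0]], [vecs[0][1], vecs[1][1]]]
D = matmul(matmul(inverse_2x2(P), A), P)
print(lams)  # [2.0, 5.0]
```

Here $D$ comes out as $\begin{pmatrix} 2 & 0 \\ 0 & 5 \end{pmatrix}$ up to floating-point rounding. Because the two eigenvalues are distinct, their eigenvectors are linearly independent and $P$ is invertible, matching the theorems above. For a repeated eigenvalue this sketch returns a single eigenvector and cannot build $P$.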
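The four equivalent properties of an orthogonal matrix can be checked numerically. This sketch assumes the standard definition that $A$ is orthogonal when $A^{-1} = A^T$, i.e. $A^TA = I$, and tests (a) through (d) on a rotation matrix. The helper names are hypothetical, and a small tolerance stands in for exact equality because of floating-point rounding.

```python
import math

def transpose(M):
    return [list(col) for col in zip(*M)]

def dot(x, y):
    return sum(a * b for a, b in zip(x, y))

def matmul(X, Y):
    return [[dot(row, col) for col in zip(*Y)] for row in X]

def is_identity(M, tol=1e-9):
    n = len(M)
    return all(abs(M[i][j] - (1.0 if i == j else 0.0)) <= tol
               for i in range(n) for j in range(n))

def is_orthogonal(M, tol=1e-9):
    """Assumed definition: M is orthogonal when M^{-1} = M^T, i.e. M^T M = I."""
    return is_identity(matmul(transpose(M), M), tol)

def is_orthonormal(vectors, tol=1e-9):
    """Each vector has unit length and each distinct pair has zero dot product."""
    return all(abs(dot(u, w) - (1.0 if i == j else 0.0)) <= tol
               for i, u in enumerate(vectors) for j, w in enumerate(vectors))

theta = math.pi / 6                    # rotation by 30 degrees
R = [[math.cos(theta), -math.sin(theta)],
     [math.sin(theta),  math.cos(theta)]]

checks = {
    "(a) R orthogonal": is_orthogonal(R),
    "(b) R^T orthogonal": is_orthogonal(transpose(R)),
    "(c) columns orthonormal": is_orthonormal(transpose(R)),
    "(d) rows orthonormal": is_orthonormal(R),
}
print(checks)  # all four values are True
```

Replacing `R` with a shear such as `[[1.0, 1.0], [0.0, 1.0]]` makes all four checks fail together, as the equivalence predicts.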
Definition: The square matrix $A$ is called orthogonally diagonalizable provided there exists an orthogonal matrix $P$ such that $D = P^{-1}AP$ is diagonal, in which case $D = P^TAP$ and $A = PDP^T$, because $P^{-1} = P^T$.

Theorem: The $n \times n$ matrix $A$ is orthogonally diagonalizable if and only if it has $n$ mutually orthogonal eigenvectors.

Theorem: A real square matrix is orthogonally diagonalizable if and only if it is symmetric.

Theorem: The characteristic equation of a real symmetric matrix has only real solutions.

Gram-Schmidt Orthogonalization: To replace the linearly independent vectors $v_1, v_2, \ldots, v_n$ one by one with mutually orthogonal vectors $u_1, u_2, \ldots, u_n$ that span the same subspace, begin with $u_1 = v_1$. For $k = 1, 2, \ldots, n-1$ in turn, take

$$u_{k+1} = v_{k+1} - \frac{u_1 \cdot v_{k+1}}{u_1 \cdot u_1}\,u_1 - \frac{u_2 \cdot v_{k+1}}{u_2 \cdot u_2}\,u_2 - \cdots - \frac{u_k \cdot v_{k+1}}{u_k \cdot u_k}\,u_k.$$
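The theorems on symmetric matrices can be seen concretely for a $2 \times 2$ symmetric matrix $A = \begin{pmatrix} a & b \\ b & d \end{pmatrix}$: the discriminant of its characteristic equation is $(a - d)^2 + 4b^2 \ge 0$, so the eigenvalues are real, and the normalized eigenvectors form an orthogonal $P$ with $P^TAP = D$. The following is a minimal sketch restricted to that $2 \times 2$ case; `orth_diag_sym_2x2` and the other helpers are hypothetical names, not a library API.

```python
import math

def transpose(M):
    return [list(col) for col in zip(*M)]

def matmul(X, Y):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*Y)] for row in X]

def orth_diag_sym_2x2(a, b, d):
    """Return (P, D) with P orthogonal and D = P^T A P diagonal, for A = [[a, b], [b, d]]."""
    if b == 0:
        # A is already diagonal; the identity is an orthogonal P.
        return [[1.0, 0.0], [0.0, 1.0]], [[a, 0.0], [0.0, d]]
    # Discriminant (a - d)^2 + 4b^2 > 0: two distinct real eigenvalues.
    root = math.sqrt((a - d) ** 2 + 4 * b * b)
    lams = [(a + d - root) / 2, (a + d + root) / 2]
    cols = []
    for lam in lams:
        v = [b, lam - a]               # solves (A - lam I) v = 0 since lam is a root
        norm = math.hypot(v[0], v[1])
        cols.append([v[0] / norm, v[1] / norm])   # unit eigenvector
    P = [[cols[0][0], cols[1][0]], [cols[0][1], cols[1][1]]]
    return P, [[lams[0], 0.0], [0.0, lams[1]]]

a, b, d = 2.0, 1.0, 2.0
A = [[a, b], [b, d]]
P, D = orth_diag_sym_2x2(a, b, d)
PtAP = matmul(matmul(transpose(P), A), P)   # equals D up to rounding
PtP = matmul(transpose(P), P)               # equals I: the columns of P are orthonormal
print(D)  # [[1.0, 0.0], [0.0, 3.0]]
```

The eigenvectors $(1, -1)/\sqrt{2}$ and $(1, 1)/\sqrt{2}$ found here are orthogonal, as the theorem on distinct eigenvalues of a symmetric matrix requires, so no separate orthogonalization step is needed.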
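The Gram-Schmidt formula translates almost line for line into code. This sketch uses Python's `fractions.Fraction` so that the arithmetic is exact and the orthogonality of the output can be tested with `== 0`; the function name `gram_schmidt` and the sample vectors are illustrative assumptions.

```python
from fractions import Fraction

def dot(x, y):
    return sum(a * b for a, b in zip(x, y))

def gram_schmidt(vs):
    """Classical Gram-Schmidt: u1 = v1 and
    u_{k+1} = v_{k+1} - sum over i <= k of (u_i . v_{k+1}) / (u_i . u_i) * u_i."""
    us = []
    for v in vs:
        u = list(v)
        for w in us:                   # subtract the projection of v onto each earlier u_i
            coeff = dot(w, v) / dot(w, w)
            u = [ui - coeff * wi for ui, wi in zip(u, w)]
        if all(x == 0 for x in u):     # exact test; floats would need a tolerance
            raise ValueError("the input vectors are not linearly independent")
        us.append(u)
    return us

vs = [[Fraction(x) for x in v] for v in ([1, 1, 0], [1, 0, 1], [0, 1, 1])]
us = gram_schmidt(vs)
print([[str(x) for x in u] for u in us])
# [['1', '1', '0'], ['1/2', '-1/2', '1'], ['-2/3', '2/3', '2/3']]
```

Dividing each $u_k$ by its length would give an orthonormal set, which is what is needed to build the orthogonal matrix $P$ in an orthogonal diagonalization when an eigenspace has dimension greater than one.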