
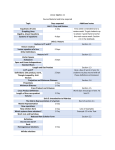

EIILM UNIVERSITY, SIKKIM

EXAMINATIONS, FEBRUARY 2012

B.A.ADD. (MATHEMATICS), YEAR-1

ALGEBRA

Time: 3 hours

Max. Marks: 60

Note: Attempt any 5 questions.

All questions carry equal marks.

Q.1

Explain vector spaces, and the dimension and coordinates of a vector space?

A vector space is a mathematical structure formed by a collection of vectors: objects that may be added together and multiplied ("scaled") by numbers, called scalars in this context. Scalars are often taken to be real numbers, but there are also vector spaces with scalar multiplication by complex numbers, rational numbers, or, more generally, elements of any field. The operations of vector addition and scalar multiplication must satisfy certain requirements, called axioms. An example of a vector space is the space of Euclidean vectors, which may be used to represent physical quantities such as forces: any two forces (of the same type) can be added to yield a third, and multiplying a force vector by a real number gives another force vector. In the same vein, but in a more geometric sense, vectors representing displacements in the plane or in three-dimensional space also form vector spaces.

Vector spaces are the subject of linear algebra and are well understood from this point of view, since a vector space over a given field is characterized, up to isomorphism, by its dimension, which, roughly speaking, specifies the number of independent directions in the space. A vector space may be endowed with additional structure, such as a norm or an inner product. Such spaces arise naturally in mathematical analysis, mainly in the guise of infinite-dimensional function spaces whose vectors are functions. Analytical problems call for the ability to decide whether a sequence of vectors converges to a given vector. This is accomplished by considering vector spaces with additional structure, mostly spaces endowed with a suitable topology, which makes it possible to address questions of proximity and continuity. These topological vector spaces, in particular Banach spaces and Hilbert spaces, have a richer theory.
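To make "number of independent directions" and "coordinates" concrete, here is a minimal Python sketch of our own (not part of the original answer). It assumes finitely many vectors with rational entries: the dimension of their span equals the number of pivots left by Gaussian elimination, and the coordinates of a vector with respect to an ordered basis of R^2 are the unique scalars expressing it as a combination of the basis vectors. The function names `rank` and `coordinates_2d` are hypothetical.

```python
from fractions import Fraction


def rank(vectors):
    """Dimension of the span of `vectors` (equal-length lists of rationals), via Gaussian elimination."""
    rows = [[Fraction(x) for x in v] for v in vectors]
    if not rows:
        return 0
    r = 0  # number of pivot rows found so far
    for c in range(len(rows[0])):
        # look for a row (at or below row r) with a nonzero entry in column c
        pivot = next((i for i in range(r, len(rows)) if rows[i][c] != 0), None)
        if pivot is None:
            continue  # no new independent direction in this column
        rows[r], rows[pivot] = rows[pivot], rows[r]
        # eliminate column c from every row below the pivot row
        for i in range(r + 1, len(rows)):
            factor = rows[i][c] / rows[r][c]
            rows[i] = [a - factor * b for a, b in zip(rows[i], rows[r])]
        r += 1
        if r == len(rows):
            break
    return r


def coordinates_2d(basis, v):
    """Coordinates (a, b) of v with respect to the ordered basis (u1, u2) of R^2: v = a*u1 + b*u2."""
    (p, q), (s, t) = basis
    det = Fraction(p * t - q * s)
    if det == 0:
        raise ValueError("u1 and u2 are linearly dependent, so they are not a basis")
    x, y = v
    # Cramer's rule for the system  p*a + s*b = x,  q*a + t*b = y
    return (x * t - s * y) / det, (p * y - q * x) / det


print(rank([[1, 0, 0], [0, 1, 0], [1, 1, 0]]))  # 2: the third vector is the sum of the first two
print(coordinates_2d([(1, 1), (1, -1)], (3, 1)))  # (Fraction(2, 1), Fraction(1, 1))
```

Exact `Fraction` arithmetic is used so that the pivot test `!= 0` is not disturbed by floating-point rounding.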

Historically, the first ideas leading to vector spaces can be traced back as far as the analytic geometry, matrices, systems of linear equations and Euclidean vectors of the 17th century. The modern, more abstract treatment, first formulated by Giuseppe Peano in the late 19th century, encompasses more general objects than Euclidean space, but much of the theory can be seen as an extension of classical geometric ideas such as lines, planes and their higher-dimensional analogs.

Today, vector spaces are applied throughout mathematics, science and engineering. They are the appropriate linear-algebraic notion for dealing with systems of linear equations, they offer a framework for Fourier expansion, which is employed in image compression routines, and they provide an environment for solution techniques for partial differential equations. Furthermore, vector spaces furnish an abstract, coordinate-free way of dealing with geometrical and physical objects such as tensors. This in turn allows the examination of local properties of manifolds by linearization techniques. Vector spaces may be generalized in several ways, leading to more advanced notions in geometry and abstract algebra.

First example: arrows in the plane

The concept of a vector space will first be explained by describing two particular examples. The first example of a vector space consists of arrows in a fixed plane, all starting at one fixed point (the origin). This is used in physics to describe forces or velocities. Given any two such arrows, v and w, the parallelogram spanned by them contains one diagonal arrow that also starts at the origin. This new arrow is called the sum of the two arrows and is denoted v + w. Another operation that can be done with arrows is scaling: given any positive real number a, the arrow that has the same direction as v but whose length is multiplied by a (so that it is dilated or shrunk) is called the multiplication of v by a. It is denoted av. When a is negative, av is defined as the arrow pointing in the opposite direction, with length |a| times the length of v; when a = 0, av is the zero arrow.

For example, if a = 2, the resulting vector aw has the same direction as w, but is stretched to twice the length of w (right image below). Equivalently, 2w is the sum w + w. Moreover, (−1)v = −v has the opposite direction and the same length as v (blue vector pointing down in the right image).

Second example: ordered pairs of numbers

A second key example of a vector space is provided by pairs of real numbers x and y. (The order of the components x and y is significant, so such a pair is also called an ordered pair.) Such a pair is written as (x, y). The sum of two such pairs and the multiplication of a pair by a number are defined as follows:

(x1, y1) + (x2, y2) = (x1 + x2, y1 + y2)

and

a (x, y) = (ax, ay).
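The two defining formulas translate directly into code. In this small Python sketch (our own illustration; the function names `add` and `scale` are hypothetical), an arrow from the origin is identified with the pair (x, y) of coordinates of its tip, which is an assumption we make to connect the two examples. With that identification the facts stated for arrows, 2w = w + w and (−1)v = −v, can be checked directly.

```python
def add(u, v):
    """(x1, y1) + (x2, y2) = (x1 + x2, y1 + y2)"""
    return (u[0] + v[0], u[1] + v[1])


def scale(a, v):
    """a (x, y) = (ax, ay)"""
    return (a * v[0], a * v[1])


v = (3, -1)
w = (1, 2)
print(add(v, w))    # (4, 1)
print(scale(2, w))  # (2, 4)

# 2w is the same vector as w + w
assert scale(2, w) == add(w, w)
# (-1)v = -v: opposite direction, same length as v
assert scale(-1, v) == (-3, 1)
```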

Definition

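A vector space over a field F is a set V together with two operations that satisfy eight axioms. Elements of V are called vectors; elements of F are called scalars. Vectors are often written in boldface to distinguish them from scalars. In the two examples above, the set consists of the planar arrows with a fixed starting point and of the pairs of real numbers, respectively, while the field is the real numbers. The first operation, vector addition, takes any two vectors v and w and assigns to them a third vector, commonly written v + w and called the sum of these two vectors. The second operation takes any scalar a and any vector v and gives another vector av. In view of the first example, where the multiplication is done by rescaling the vector v by the scalar a, this operation is called scalar multiplication of v by a. For all u, v, w in V and all a, b in F, the axioms require:

1. u + (v + w) = (u + v) + w (associativity of addition);
2. u + v = v + u (commutativity of addition);
3. there is a zero vector 0 in V with v + 0 = v for every v;
4. every v has an additive inverse −v with v + (−v) = 0;
5. a(bv) = (ab)v (compatibility with multiplication in F);
6. 1v = v, where 1 is the multiplicative identity of F;
7. a(u + v) = au + av (distributivity over vector addition);
8. (a + b)v = av + bv (distributivity over addition in F).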
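Checking the axioms on finitely many samples cannot prove them, but it is a quick way to catch a wrong definition of the operations. The Python sketch below is our own illustration: `check_axioms` and the sample vectors and scalars are hypothetical choices, and exact rational arithmetic (`Fraction`) stands in for the real numbers so that equality tests are not spoiled by rounding. The ordered-pair operations pass every test, while a deliberately wrong "scalar multiplication" fails one of the distributive laws.

```python
from fractions import Fraction
from itertools import product


def check_axioms(add, scale, zero, neg, vectors, scalars):
    """Return the set of axiom names that fail on the sample vectors and scalars."""
    failed = set()
    for u, v, w in product(vectors, repeat=3):
        if add(u, add(v, w)) != add(add(u, v), w):
            failed.add("associativity of addition")
    for u, v in product(vectors, repeat=2):
        if add(u, v) != add(v, u):
            failed.add("commutativity of addition")
    for v in vectors:
        if add(v, zero) != v:
            failed.add("zero vector")
        if add(v, neg(v)) != zero:
            failed.add("additive inverse")
        if scale(1, v) != v:
            failed.add("identity of scalar multiplication")
    for (a, b), (u, v) in product(product(scalars, repeat=2), product(vectors, repeat=2)):
        if scale(a, scale(b, v)) != scale(a * b, v):
            failed.add("compatibility with multiplication in F")
        if scale(a, add(u, v)) != add(scale(a, u), scale(a, v)):
            failed.add("distributivity over vector addition")
        if scale(a + b, v) != add(scale(a, v), scale(b, v)):
            failed.add("distributivity over addition in F")
    return failed


def pair_add(u, v):
    return (u[0] + v[0], u[1] + v[1])


def pair_scale(a, v):
    return (a * v[0], a * v[1])


def pair_neg(v):
    return (-v[0], -v[1])


ZERO = (Fraction(0), Fraction(0))
VECTORS = [(Fraction(1, 2), Fraction(3)), (Fraction(-2), Fraction(5, 3)), ZERO]
SCALARS = [Fraction(-3, 4), Fraction(0), Fraction(1), Fraction(2)]

print(check_axioms(pair_add, pair_scale, ZERO, pair_neg, VECTORS, SCALARS))  # set()


# A deliberately wrong "scalar multiplication" that ignores a in the second component
def bad_scale(a, v):
    return (a * v[0], v[1])


print(check_axioms(pair_add, bad_scale, ZERO, pair_neg, VECTORS, SCALARS))
# {'distributivity over addition in F'}
```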

Q.2

Explain the representation of transformations by matrices?

An m × n matrix is a rectangular array of numbers arranged in m rows and n columns. The following illustration shows several matrices.

You can add two matrices of the same size by adding corresponding elements. The following illustration shows two examples of matrix addition.

An m × n matrix can be multiplied by an n × p matrix, and the result is an m × p matrix. The number of columns in the first matrix must be the same as the number of rows in the second matrix. For example, a 4 × 2 matrix can be multiplied by a 2 × 3 matrix to produce a 4 × 3 matrix.

Points in the plane and rows and columns of a matrix can be thought of as vectors. For example, (2, 5) is a vector with two components, and (3, 7, 1) is a vector with three components. The dot product of two vectors is defined as follows:

(a, b) • (c, d) = ac + bd

(a, b, c) • (d, e, f) = ad + be + cf

For example, the dot product of (2, 3) and (5, 4) is (2)(5) + (3)(4) = 22. The dot product of (2, 5, 1) and (4, 3, 1) is (2)(4) + (5)(3) + (1)(1) = 24. Note that the dot product of two vectors is a number, not another vector. Also note that you can calculate the dot product only if the two vectors have the same number of components.
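The definition and both worked examples can be reproduced with a short Python function (a sketch of our own; the name `dot` is hypothetical). It refuses vectors of different lengths, matching the remark that the dot product exists only when the two vectors have the same number of components.

```python
def dot(u, v):
    """Dot product of two vectors with the same number of components."""
    if len(u) != len(v):
        raise ValueError("dot product needs vectors with the same number of components")
    return sum(a * b for a, b in zip(u, v))


print(dot((2, 3), (5, 4)))        # 22
print(dot((2, 5, 1), (4, 3, 1)))  # 24
```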

Let A(i, j) be the entry of matrix A in the ith row and the jth column. For example, A(3, 2) is the entry of A in the 3rd row and the 2nd column. Suppose A, B, and C are matrices, and AB = C. The entries of C are calculated as follows:

C(i, j) = (row i of A) • (column j of B)

The following illustration shows several examples of matrix multiplication.
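The rule C(i, j) = (row i of A) • (column j of B) gives a direct implementation. This Python sketch is our own (the name `matmul` and the example matrices are hypothetical); it multiplies a 4 × 2 matrix by a 2 × 3 matrix, the size combination mentioned above, and checks that the number of columns of A equals the number of rows of B.

```python
def matmul(A, B):
    """Product of an m x n matrix A and an n x p matrix B, both given as lists of rows."""
    n = len(B)
    if any(len(row) != n for row in A):
        raise ValueError("the number of columns of A must equal the number of rows of B")
    columns_of_B = list(zip(*B))  # column j of B as a tuple
    # C(i, j) = (row i of A) . (column j of B)
    return [[sum(a * b for a, b in zip(row, col)) for col in columns_of_B] for row in A]


A = [[1, 2], [3, 4], [5, 6], [7, 8]]  # 4 x 2
B = [[1, 0, 2], [0, 1, 3]]            # 2 x 3
C = matmul(A, B)                       # 4 x 3
print(C)  # [[1, 2, 8], [3, 4, 18], [5, 6, 28], [7, 8, 38]]
```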

If you think of a point in the plane as a 1 × 2 matrix, you can transform that point by multiplying it by a 2 × 2 matrix. The following illustration shows several transformations applied to the point (2, 1).

All the transformations shown in the previous figure are linear transformations. Certain other transformations, such as translation, are not linear and cannot be expressed as multiplication by a 2 × 2 matrix: multiplication by a matrix always sends (0, 0) to (0, 0), whereas a nonzero translation does not. Suppose you want to start with the point (2, 1), rotate it 90 degrees, translate it 3 units in the x direction, and translate it 4 units in the y direction. You can accomplish this by performing a matrix multiplication followed by a matrix addition.
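A minimal Python sketch of the two-step computation, under our own assumptions: points are 1 × 2 row matrices multiplied on the left of the 2 × 2 matrix (the convention of this answer), and the 90-degree rotation uses the entries 0, 1, −1, 0 that also appear as the first four numbers of the GDI+ statement quoted later. The helper names are hypothetical.

```python
def row_times_matrix(p, M):
    """Multiply the 1 x 2 matrix [x y] by the 2 x 2 matrix M."""
    x, y = p
    return (x * M[0][0] + y * M[1][0], x * M[0][1] + y * M[1][1])


# Row-vector convention: [x y] * ROTATE_90 = [-y x], a 90-degree rotation
# (counterclockwise when the y-axis points up).
ROTATE_90 = [[0, 1],
             [-1, 0]]


def rotate_then_translate(p, dx, dy):
    """Linear part (matrix multiplication) followed by a translation (matrix addition)."""
    x, y = row_times_matrix(p, ROTATE_90)
    return (x + dx, y + dy)


print(row_times_matrix((2, 1), ROTATE_90))  # (-1, 2)
print(rotate_then_translate((2, 1), 3, 4))  # (2, 6)
# A nonzero translation moves the origin, so the combined map is not linear.
print(rotate_then_translate((0, 0), 3, 4))  # (3, 4)
```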

A linear transformation (multiplication by a 2 × 2 matrix) followed by a translation (addition of a 1 × 2 matrix) is called an affine transformation. An alternative to storing an affine transformation in a pair of matrices (one for the linear part and one for the translation) is to store the entire transformation in a 3 × 3 matrix. To make this work, a point in the plane must be stored in a 1 × 3 matrix with a dummy 3rd coordinate. The usual technique is to make all 3rd coordinates equal to 1. For example, the point (2, 1) is represented by the matrix [2 1 1]. The following illustration shows an affine transformation (rotate 90 degrees; translate 3 units in the x direction, 4 units in the y direction) expressed as multiplication by a single 3 × 3 matrix.

In the previous example, the point (2, 1) is mapped to the point (2, 6). Note that the third column of the 3 × 3 matrix contains the numbers 0, 0, 1. This will always be the case for the 3 × 3 matrix of an affine transformation. The important numbers are the six numbers in columns 1 and 2. The upper-left 2 × 2 portion of the matrix represents the linear part of the transformation, and the first two entries in the 3rd row represent the translation.
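The same affine transformation as a single 3 × 3 matrix, sketched in Python under our own assumptions: the helper names `affine_matrix` and `transform` are hypothetical, and the argument order m11, m12, m21, m22, dx, dy simply lists the six important numbers row by row (upper-left 2 × 2 block, then the first two entries of the 3rd row). Multiplying [2 1 1] by this matrix reproduces the image point (2, 6).

```python
def affine_matrix(m11, m12, m21, m22, dx, dy):
    """3 x 3 matrix of an affine map (row-vector convention); the third column is always (0, 0, 1)."""
    return [[m11, m12, 0],
            [m21, m22, 0],
            [dx, dy, 1]]


def transform(point, M):
    """Map (x, y) by multiplying the 1 x 3 matrix [x y 1] by the 3 x 3 matrix M."""
    row = (point[0], point[1], 1)
    x, y, w = (sum(row[k] * M[k][j] for k in range(3)) for j in range(3))
    # w is always 1 here, because the third column of M is (0, 0, 1)
    return (x, y)


M = affine_matrix(0, 1, -1, 0, 3, 4)  # rotate 90 degrees, then translate 3 in x and 4 in y
print(transform((2, 1), M))  # (2, 6)
```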

In Windows GDI+ you can store an affine transformation in a Matrix object. Because the third column of a matrix that represents an affine transformation is always (0, 0, 1), you specify only the six numbers in the first two columns when you construct a Matrix object. The statement `Matrix myMatrix(0.0f, 1.0f, -1.0f, 0.0f, 3.0f, 4.0f);` constructs the matrix shown in the previous figure.

Q.3

Explain the transpose of a linear transformation with suitable examples?

Q.4

Explain characteristic values and characteristic vectors? Diagonalizable operators.

Q.5

Derive the primary decomposition theorem?

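Let T be a linear operator on the finite-dimensional vector space V over the field F. Let

$m_T = p_1^{r_1} p_2^{r_2} \cdots p_k^{r_k}$

be the factorization of $m_T$ into distinct irreducible monic polynomials over F. Let $W_i = \ker\left(p_i(T)^{r_i}\right)$, $i = 1, \dots, k$. Then

1. $V = W_1 \oplus W_2 \oplus \cdots \oplus W_k$;

2. each $W_i$ is T-invariant;

3. if $T_i$ is the operator induced on $W_i$ by T, then $m_{T_i} = p_i^{r_i}$.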
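The question asks for a derivation, so here is a proof sketch along the standard textbook lines (our own write-up, not recovered from the original answer). It introduces the projections $E_i = f_i(T)g_i(T)$ that the text refers to further on as coming from "the proof of the above theorem".

```latex
\textbf{Proof.} For $i = 1, \dots, k$ let
\[
  f_i = \frac{m_T}{p_i^{r_i}} = \prod_{j \neq i} p_j^{r_j}.
\]
Since $p_1, \dots, p_k$ are distinct irreducible monic polynomials, $f_1, \dots, f_k$
have no common factor, so there are polynomials $g_1, \dots, g_k$ over $F$ with
\[
  f_1 g_1 + f_2 g_2 + \cdots + f_k g_k = 1 .
\]
Put $E_i = f_i(T)\, g_i(T)$. Then $E_1 + \cdots + E_k = I$; for $i \neq j$ the polynomial
$m_T$ divides $f_i f_j$, so $E_i E_j = 0$; multiplying $E_1 + \cdots + E_k = I$ by $E_i$
gives $E_i^2 = E_i$. Hence $V = E_1 V \oplus \cdots \oplus E_k V$.

(a) $E_i V \subseteq W_i$, because $p_i(T)^{r_i} E_i = m_T(T)\, g_i(T) = 0$.

(b) $W_i \subseteq E_i V$: if $v \in W_i$, then $E_j v = 0$ for $j \neq i$
(as $p_i^{r_i}$ divides $f_j$), so $v = \sum_j E_j v = E_i v$.

Thus $W_i = E_i V$, which gives statement 1. Each $W_i$ is the kernel of
$p_i(T)^{r_i}$, an operator commuting with $T$, so $W_i$ is $T$-invariant (statement 2).
Finally, $p_i^{r_i}(T_i) = 0$, so $m_{T_i}$ divides $p_i^{r_i}$. Conversely, if
$g(T_i) = 0$, then $g(T) f_i(T)$ vanishes on $W_i$ (because of $g$) and on every
$W_j$ with $j \neq i$ (because $p_j^{r_j}$ divides $f_i$), so $m_T = p_i^{r_i} f_i$
divides $g f_i$, and therefore $p_i^{r_i}$ divides $g$. Hence $m_{T_i} = p_i^{r_i}$
(statement 3).
```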

Example 6.7.1 Let

operator on $V = F^3$. Then

or

and

, considered as a linear

and

. Thus A is not

diagonalizable over F. The A-invariant decomposition of V is

where

and

. Now

and its kernel is spanned by (0, 2, 1) and (1, 2, 1). Note that (1, 2, 1) is an eigenvector. The kernel of $A - I$ is generated by (0, 1, 1), which is an eigenvector. Since $A(0,2,1)^t = -2(1,2,1)^t + 1(0,2,1)^t$, we see that with respect to the ordered

basis

, A can be put in the form

. Now

consider the matrix

. We have $m_A = (x-1)^2$. Thus the primary decomposition theorem does not give any decomposition. Nevertheless, it is obvious that $V = F^3$ can be decomposed into 1-dimensional and 2-dimensional A-invariant subspaces.

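Suppose that the minimal polynomial is a product of linear factors, say $m_T = (x - c_1)^{r_1} \cdots (x - c_k)^{r_k}$ with distinct $c_1, \dots, c_k$ in F, so that $W_i = \ker (T - c_i I)^{r_i}$. As in the proof of the above theorem, let $E_i = f_i(T) g_i(T)$, where $f_i = m_T / (x - c_i)^{r_i}$ and $f_1 g_1 + \cdots + f_k g_k = 1$; the $E_i$ are the projections of V onto the $W_i$ determined by the direct sum decomposition. Choose a basis $\mathcal{B}_i$ for each $W_i$ and collect them to get a basis $\mathcal{B} = \mathcal{B}_1 \cup \cdots \cup \mathcal{B}_k$ of V. Then

$[T]_{\mathcal{B}} = \operatorname{diag}\left([T_1]_{\mathcal{B}_1}, \dots, [T_k]_{\mathcal{B}_k}\right)$.

If we let

$D = c_1 E_1 + \cdots + c_k E_k$,

then $[D]_{\mathcal{B}} = \operatorname{diag}(c_1 I_{d_1}, \dots, c_k I_{d_k})$, where $d_i = \dim W_i$. So D is diagonalizable. D is called the diagonalizable part of T. Now let $N = T - D$. Then

$N = (T - c_1 I)E_1 + \cdots + (T - c_k I)E_k$ and $N^r = (T - c_1 I)^r E_1 + \cdots + (T - c_k I)^r E_k$,

since $E_i E_j = 0$ if $i \neq j$ and $E_i^2 = E_i$. Thus for $r \ge r_i$ for all i, we have $N^r = 0$. Since D and N are polynomials in T, we have DN = ND.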

Definition 6.15 If N is an operator on an n-dimensional vector space V such that $N^r = 0$ for some positive integer r, then N is called a nilpotent operator.

Note that if N is nilpotent, then $N^r = 0$ for some $r \le n$.
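The construction of D and N can be traced on a concrete operator. The Python sketch below uses a hypothetical 3 × 3 matrix T of our own choosing with $m_T = (x-2)^2(x-3)$, so $c_1 = 2, r_1 = 2$ and $c_2 = 3, r_2 = 1$. The Bézout polynomials were found by hand from the identity $1 = (x-2)^2 - (x-3)(x-1)$; the code then forms $E_i = f_i(T)g_i(T)$, $D = c_1E_1 + c_2E_2$ and $N = T - D$, and checks $N^2 = 0$ and $DN = ND$.

```python
def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def add(A, B):
    return [[a + b for a, b in zip(row_a, row_b)] for row_a, row_b in zip(A, B)]


def scale(c, A):
    return [[c * a for a in row] for row in A]


I = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
ZERO = scale(0, I)

# Hypothetical operator with m_T = (x - 2)^2 (x - 3): c_1 = 2, r_1 = 2 and c_2 = 3, r_2 = 1
T = [[2, 1, 0],
     [0, 2, 0],
     [0, 0, 3]]


def shift(c):
    """T - cI"""
    return add(T, scale(-c, I))


# f_1 = x - 3, f_2 = (x - 2)^2, and 1 = f_1 g_1 + f_2 g_2 with g_1 = -(x - 1), g_2 = 1
E1 = scale(-1, matmul(shift(3), shift(1)))  # E_1 = f_1(T) g_1(T)
E2 = matmul(shift(2), shift(2))             # E_2 = f_2(T) g_2(T)

D = add(scale(2, E1), scale(3, E2))  # diagonalizable part D = c_1 E_1 + c_2 E_2
N = add(T, scale(-1, D))             # N = T - D

assert add(E1, E2) == I and matmul(E1, E2) == ZERO and matmul(E1, E1) == E1
print(D)  # [[2, 0, 0], [0, 2, 0], [0, 0, 3]]
print(N)  # [[0, 1, 0], [0, 0, 0], [0, 0, 0]]
print(matmul(N, N) == ZERO, matmul(D, N) == matmul(N, D))  # True True
```

Here $N^r = 0$ already for $r = 2 = \max(r_1, r_2)$, as the argument predicts.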

Q.6

Derive the spectral theorem on operators?

Q.7

Explain simultaneous diagonalization of normal operators?

In linear algebra, a square matrix A is called diagonalizable if it is similar to a diagonal matrix, i.e., if there exists an invertible matrix P such that $P^{-1}AP$ is a diagonal matrix. If V is a finite-dimensional vector space, then a linear map T : V → V is called diagonalizable if there exists a basis of V with respect to which T is represented by a diagonal matrix. Diagonalization is the process of finding a corresponding diagonal matrix for a diagonalizable matrix or linear map. [1] A square matrix which is not diagonalizable is called defective.

Diagonalizable matrices and maps are of interest because diagonal matrices are especially easy to handle: their eigenvalues and eigenvectors are known, and one can raise a diagonal matrix to a power by simply raising the diagonal entries to that same power. Geometrically, a diagonalizable matrix is an inhomogeneous dilation (or anisotropic scaling): it scales the space, as does a homogeneous dilation, but by a different factor in each direction, determined by the scale factors on each axis (diagonal entries).
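Because $A = PDP^{-1}$ implies $A^k = PD^kP^{-1}$, powers of a diagonalizable matrix reduce to powers of the diagonal entries. A Python sketch with a 2 × 2 example of our own choosing (A has eigenvalues 2 and 3 with eigenvectors (1, 0) and (1, 1); the matrices and function names are hypothetical) compares this with repeated multiplication.

```python
def matmul(A, B):
    """Product of matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


A = [[2, 1],
     [0, 3]]
P = [[1, 1],
     [0, 1]]       # columns are eigenvectors: A(1, 0) = 2(1, 0) and A(1, 1) = 3(1, 1)
P_INV = [[1, -1],
         [0, 1]]   # inverse of P


def power_by_repeated_multiplication(k):
    result = [[1, 0], [0, 1]]
    for _ in range(k):
        result = matmul(result, A)
    return result


def power_by_diagonalization(k):
    # A = P D P^{-1} with D = diag(2, 3), so A^k = P D^k P^{-1}
    d_k = [[2 ** k, 0], [0, 3 ** k]]  # raise each diagonal entry to the k-th power
    return matmul(matmul(P, d_k), P_INV)


print(power_by_diagonalization(5))  # [[32, 211], [0, 243]]
```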

The fundamental fact about diagonalizable maps and matrices is expressed by the following:

An n-by-n matrix A over the field F is diagonalizable if and only if the sum of the dimensions of its eigenspaces is equal to n, which is the case if and only if there exists a basis of $F^n$ consisting of eigenvectors of A. If such a basis has been found, one can form the matrix P having these basis vectors as columns, and $P^{-1}AP$ will be a diagonal matrix. The diagonal entries of this matrix are the eigenvalues of A.

A linear map T: V → V is diagonalizable if and only if the sum of the dimensions of its eigenspaces is equal to dim(V), which is the case if and only if there exists a basis of V consisting of eigenvectors of T. With respect to such a basis, T will be represented by a diagonal matrix. The diagonal entries of this matrix are the eigenvalues of T.

Another characterization: a matrix or linear map is diagonalizable over the field F if and only if its minimal polynomial is a product of distinct linear factors over F. (Put another way, a matrix is diagonalizable if and only if all of its elementary divisors are linear.)
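The minimal-polynomial criterion gives a simple test once the eigenvalues are known. In this Python sketch (our own; `is_diagonalizable` is a hypothetical name), we assume the caller supplies every eigenvalue of A and that the characteristic polynomial splits into linear factors over F. Then A is diagonalizable exactly when the product of $(A - cI)$ over the distinct eigenvalues c is the zero matrix, because that product vanishes precisely when $m_A$ divides $\prod (x - c)$.

```python
def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def is_diagonalizable(A, eigenvalues):
    """Minimal-polynomial test; assumes `eigenvalues` lists every eigenvalue of A
    (repeats allowed) and that the characteristic polynomial splits over F."""
    n = len(A)
    product_matrix = [[int(i == j) for j in range(n)] for i in range(n)]  # identity
    for c in set(eigenvalues):  # distinct eigenvalues only
        shifted = [[A[i][j] - (c if i == j else 0) for j in range(n)] for i in range(n)]
        product_matrix = matmul(product_matrix, shifted)
    return all(entry == 0 for row in product_matrix for entry in row)


print(is_diagonalizable([[2, 1], [0, 2]], [2, 2]))  # False: m_A = (x - 2)^2
print(is_diagonalizable([[2, 0], [0, 2]], [2, 2]))  # True:  m_A = x - 2
print(is_diagonalizable([[1, 1], [0, 2]], [1, 2]))  # True:  distinct eigenvalues
```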

The following sufficient (but not necessary) condition is often useful.

An n-by-n matrix A is diagonalizable over the field F if it has n distinct eigenvalues in F, i.e., if its characteristic polynomial has n distinct roots in F; however, the converse may be false. Let us consider a matrix A which has eigenvalues 1, 2, 2 (not all distinct) and is diagonalizable with diagonal form

$\begin{pmatrix} 1 & 0 & 0 \\ 0 & 2 & 0 \\ 0 & 0 & 2 \end{pmatrix}$

(a matrix similar to A).
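Since no specific matrix is written out above, here is one we picked ourselves to illustrate the point: $A = \begin{pmatrix} -1 & 3 & -1 \\ -3 & 5 & -1 \\ -3 & 3 & 1 \end{pmatrix}$ has eigenvalues 1, 2, 2, and the eigenvalue 2 has a 2-dimensional eigenspace. The Python sketch checks that $AP = PD$ for the eigenvector matrix P and that $\det P \neq 0$, which together mean $P^{-1}AP = D$.

```python
def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]


def det3(M):
    """Determinant of a 3 x 3 matrix by cofactor expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = M
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)


A = [[-1, 3, -1],
     [-3, 5, -1],
     [-3, 3, 1]]
P = [[1, 1, -1],
     [1, 1, 0],
     [1, 0, 3]]   # columns: (1, 1, 1) for eigenvalue 1; (1, 1, 0) and (-1, 0, 3) for eigenvalue 2
D = [[1, 0, 0],
     [0, 2, 0],
     [0, 0, 2]]

print(det3(P))                       # 1, so P is invertible
print(matmul(A, P) == matmul(P, D))  # True, hence P^{-1} A P = D
```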

A linear map T: V → V with n = dim(V) is diagonalizable if it has n distinct eigenvalues, i.e., if its characteristic polynomial has n distinct roots in F.

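Let A be a matrix over F. If A is diagonalizable, then so is any power of it. Conversely, if A is invertible, F is algebraically closed, and $A^n$ is diagonalizable for some n that is not an integer multiple of the characteristic of F, then A is diagonalizable. Proof: if $A^n$ is diagonalizable with distinct eigenvalues $\lambda_1, \dots, \lambda_k$, then A is annihilated by the polynomial

$(x^n - \lambda_1) \cdots (x^n - \lambda_k)$,

which has no multiple root (since each $\lambda_j \neq 0$ and n is not a multiple of the characteristic of F), and this polynomial is divisible by the minimal polynomial of A. Hence the minimal polynomial of A is a product of distinct linear factors, so A is diagonalizable.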

As a rule of thumb, over C almost every matrix is diagonalizable. More precisely, the set of complex n-by-n matrices that are not diagonalizable over C, considered as a subset of $\mathbb{C}^{n \times n}$, has Lebesgue measure zero. One can also say that the diagonalizable matrices form a dense subset with respect to the Zariski topology: the complement lies inside the set where the discriminant of the characteristic polynomial vanishes, which is a hypersurface. Density in the usual (strong) topology given by a norm follows from this as well. The same is not true over R.

The Jordan–Chevalley decomposition expresses an operator as the sum of its semisimple (i.e., diagonalizable) part and its nilpotent part. Hence, a matrix is diagonalizable if and only if its nilpotent part is zero. Put another way, a matrix is diagonalizable if and only if each block in its Jordan form has no nilpotent part, i.e., every Jordan block is a 1 × 1 matrix.

Q.8

Write the differences between symmetric bilinear forms and skew-symmetric bilinear forms?