* Your assessment is very important for improving the work of artificial intelligence, which forms the content of this project

Download Matrix Inverses Suppose A is an m×n matrix. We have learned that

Matrix completion wikipedia , lookup

Capelli's identity wikipedia , lookup

Symmetric cone wikipedia , lookup

Linear least squares (mathematics) wikipedia , lookup

Rotation matrix wikipedia , lookup

Eigenvalues and eigenvectors wikipedia , lookup

Principal component analysis wikipedia , lookup

System of linear equations wikipedia , lookup

Jordan normal form wikipedia , lookup

Determinant wikipedia , lookup

Four-vector wikipedia , lookup

Singular-value decomposition wikipedia , lookup

Matrix (mathematics) wikipedia , lookup

Non-negative matrix factorization wikipedia , lookup

Perron–Frobenius theorem wikipedia , lookup

Orthogonal matrix wikipedia , lookup

Matrix calculus wikipedia , lookup

Cayley–Hamilton theorem wikipedia , lookup

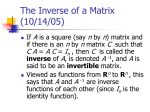

Matrix Inverses

Suppose $A$ is an $m \times n$ matrix. We have learned that matrix addition with $A$ is defined for any other $m \times n$ matrix $B$; that the $m \times n$ zero matrix (all entries equal to 0) is the identity element for this operation; and that the matrix $-1 \cdot A = -A$ is the additive inverse of $A$, that is,
$$A + (-A) = 0 = -A + A.$$
This allows us to define matrix subtraction in the natural way: $B - A = B + (-A)$.

We have also seen that matrix multiplication is defined only if a particular compatibility condition among the matrices being multiplied is met: if $AB$ makes sense, and $A$ is $m \times n$ and $B$ is $p \times q$, then necessarily $n = p$, and the product $AB$ is $m \times q$. For this and a number of other reasons (including, for instance, the fact that this operation is not commutative), matrix multiplication is a more complex operation than multiplication of real numbers. However, we know that there is a multiplicative identity element: if $I_n$ is the $n \times n$ identity matrix (1's along the diagonal, 0's elsewhere), then $AI_n = A$; similarly, if $I_m$ is the $m \times m$ identity matrix, then $I_mA = A$. Does $A$ have a multiplicative inverse akin to its additive inverse $-A$?

The results of Exercises 23 and 24, p. 117, show that
• if there were a matrix $C$ that satisfied $CA = I_n$, then $A$ could not have more columns than rows ($n \le m$); and
• if there were a matrix $D$ that satisfied $AD = I_m$, then $A$ could not have more rows than columns ($m \le n$).

So if both such matrices exist, then $m$ must equal $n$. That is, only square matrices can have multiplicative inverses. Still, even if we restrict our attention to square matrices, multiplicative inverses are not guaranteed for every matrix, as we shall see shortly.

The $n \times n$ matrix $A$ is invertible if it has a multiplicative inverse, that is, if there exist a matrix $C$ such that $CA = I_n$ and a matrix $D$ such that $AD = I_n$. However, if these matrices exist, then by the observation that
$$C = CI_n = C(AD) = (CA)D = I_nD = D,$$
these two matrices are identical.
In other words, $A$ can have only one matrix that acts as its multiplicative inverse. We typically denote the multiplicative inverse of $A$ by $A^{-1}$.

It is instructive to look at the $2 \times 2$ case in detail. If
$$A = \begin{bmatrix} a & b \\ c & d \end{bmatrix}$$
is invertible, then its multiplicative inverse is defined; denote it
$$A^{-1} = \begin{bmatrix} w & x \\ y & z \end{bmatrix}.$$
Then
$$\begin{bmatrix} 1 & 0 \\ 0 & 1 \end{bmatrix} = \begin{bmatrix} a & b \\ c & d \end{bmatrix} \begin{bmatrix} w & x \\ y & z \end{bmatrix} = \begin{bmatrix} aw + by & ax + bz \\ cw + dy & cx + dz \end{bmatrix},$$
leading to a pair of systems of linear equations, one in $w$ and $y$, the other in $x$ and $z$:
$$\begin{cases} aw + by = 1 \\ cw + dy = 0 \end{cases} \qquad \text{and} \qquad \begin{cases} ax + bz = 0 \\ cx + dz = 1 \end{cases}$$
Multiplying the equations by suitable constants and subtracting gives
$$(ad - bc)w = d, \quad (ad - bc)y = -c, \quad (ad - bc)x = -b, \quad (ad - bc)z = a.$$
If $ad - bc = 0$, these force $a = b = c = d = 0$, which contradicts $aw + by = 1$; so an inverse can exist only when $ad - bc \neq 0$. In that case, solving the two systems produces
$$w = \frac{d}{ad - bc}, \quad y = \frac{-c}{ad - bc} \quad \text{and} \quad x = \frac{-b}{ad - bc}, \quad z = \frac{a}{ad - bc},$$
and a direct multiplication confirms that the resulting matrix is an inverse on both sides, thereby proving the following

Theorem If $A = \begin{bmatrix} a & b \\ c & d \end{bmatrix}$, then $A$ is invertible, with inverse
$$A^{-1} = \frac{1}{ad - bc} \begin{bmatrix} d & -b \\ -c & a \end{bmatrix},$$
if and only if $ad - bc \neq 0$. //

The most important feature of this theorem is its assertion that not all square matrices have inverses. (See Practice Problem 1(c), p. 125.) Square matrices that have no inverse are called singular. (You will also see noninvertible as a synonym for singular, as well as nonsingular as a synonym for invertible.)

Theorem Suppose $A$ and $B$ are invertible $n \times n$ matrices. Then
1. $A^{-1}$ is invertible, with inverse $(A^{-1})^{-1} = A$;
2. $AB$ is invertible, with inverse $(AB)^{-1} = B^{-1}A^{-1}$;
3. $A^T$ is invertible, with inverse $(A^T)^{-1} = (A^{-1})^T$.

Proof In each case, just multiply the given matrix by its supposed inverse, on both sides, and check that the product is the identity matrix. For instance, $(AB)(B^{-1}A^{-1}) = A(BB^{-1})A^{-1} = AI_nA^{-1} = AA^{-1} = I_n$, and similarly $(B^{-1}A^{-1})(AB) = I_n$. //

How then do we tell whether an $n \times n$ matrix with $n > 2$ is invertible? The answer can be formulated once again in terms of row reduction.

The first step in this effort is to relate the elementary row operations to matrix multiplication. As Example 5, p. 122, indicates, performing an elementary row operation on an $n \times n$ matrix $A$ can be represented in terms of matrix multiplication: if we perform a certain row operation on the $n \times n$ identity $I_n$ and obtain the matrix $E$ (called an elementary matrix), then performing the same row operation on $A$ produces the matrix $EA$.

In particular, this means that elementary matrices are invertible, because row operations are reversible: if we perform a row operation on $I_n$ and obtain the matrix $E$, then there is a second row operation that can be performed on $E$ to return to $I_n$; since this second operation can be represented by multiplication by some elementary matrix $F$, we have $FE = I_n$. These row operations are reversible in the other order as well, so $EF = I_n$, showing that $E$ and $F$ are invertible matrices. This idea is central to the following

Theorem The $n \times n$ matrix $A$ is invertible if and only if $A$ is row equivalent to $I_n$. Furthermore, the same sequence of row operations that carries $A$ into $I_n$ will carry $I_n$ into $A^{-1}$.

Proof If $A$ is invertible, then any system of linear equations with coefficient matrix $A$, having matrix form $A\mathbf{x} = \mathbf{b}$, will have a solution obtained by multiplying through by $A^{-1}$: $\mathbf{x} = A^{-1}\mathbf{b}$. Also, this is the only possible solution, for if $\mathbf{y}$ were another solution, then $A\mathbf{y} = \mathbf{b} \Rightarrow \mathbf{y} = A^{-1}\mathbf{b} = \mathbf{x}$. So the system has a unique solution, meaning that in the row reduction process on the augmented matrix $[\,A \;\; \mathbf{b}\,]$, every column of $A$ contains a pivot entry (there are no free variables), and since $A$ is square, so does every row. Thus, $A$ will row reduce to $I_n$. Conversely, if $A$ is row equivalent to $I_n$, we can represent the row operations in the reduction of $A$ to $I_n$ in terms of elementary matrices:
$$A \sim E_1A \sim E_2(E_1A) \sim \cdots \sim E_k(E_{k-1}\cdots E_1A) = I_n.$$
Since each of these elementary matrices is invertible, so is their product $E = E_kE_{k-1}\cdots E_1$; therefore
$$EA = I_n \;\Rightarrow\; E^{-1}EA = E^{-1} \;\Rightarrow\; A = E^{-1},$$
so $A$ is invertible with inverse $E$.
In the process of this argument, we have determined that $A^{-1} = E = E_kE_{k-1}\cdots E_1$ is the matrix obtained from the identity $I_n$ by applying the same sequence of row operations that were used to reduce $A$ to $I_n$. //

It follows that to find $A^{-1}$ we can augment $A$ by $I_n$ to obtain $[\,A \;\; I_n\,]$, then apply row operations to reduce the left side to $I_n$; the matrix appearing on the right will have to be $A^{-1}$. If the left side cannot be reduced to $I_n$, the theorem tells us that $A$ is not invertible.

Bringing together a number of notions in our discussion so far, we can now state

The Invertible Matrix Theorem Suppose that $A$ is an $n \times n$ matrix. Then the statements listed below are logically equivalent (i.e., all are simultaneously true or simultaneously false):
• $A$ is invertible.
• There is an $n \times n$ matrix $C$ satisfying $CA = I_n$.
• There is an $n \times n$ matrix $D$ satisfying $AD = I_n$.
• $A$ is row equivalent to $I_n$.
• $A$ has $n$ pivot entries.
• The matrix equation $A\mathbf{x} = \mathbf{0}$ has only the trivial solution $\mathbf{x} = \mathbf{0}$.
• The columns of $A$ form a linearly independent set of vectors.
• The columns of $A$ span $\mathbb{R}^n$.
• The linear transformation $T:\mathbb{R}^n \to \mathbb{R}^n$ defined by the formula $T(\mathbf{x}) = A\mathbf{x}$ is one-to-one.
• The linear transformation $T:\mathbb{R}^n \to \mathbb{R}^n$ defined by the formula $T(\mathbf{x}) = A\mathbf{x}$ is onto.
• For every vector $\mathbf{b}$ in $\mathbb{R}^n$, the matrix equation $A\mathbf{x} = \mathbf{b}$ has at least one solution.
• For every vector $\mathbf{b}$ in $\mathbb{R}^n$, the matrix equation $A\mathbf{x} = \mathbf{b}$ has exactly one solution. //

Corollary $A$ is a noninvertible $n \times n$ matrix if and only if every one of the statements above is false. //

Corollary The linear transformation $T:\mathbb{R}^n \to \mathbb{R}^n$ with $n \times n$ standard matrix $A$ is invertible if and only if $A$ is invertible. Further, the standard matrix for the inverse function is $A^{-1}$. //
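The procedure of augmenting $A$ by $I_n$ and row reducing, together with the $2 \times 2$ formula, can be sketched in Python as below. This is a minimal illustration under stated assumptions, not a production routine: matrices are assumed to be lists of rows holding integers or fractions, entries are converted to Python's standard `fractions.Fraction` so the arithmetic is exact, and the helper names `identity`, `mat_mul`, `invert_by_row_reduction`, and `inverse_2x2` are hypothetical names chosen for this example. The reduction uses only the three elementary row operations and returns `None` when some column has no pivot, matching the theorem that $A$ is invertible exactly when it is row equivalent to $I_n$.

```python
from fractions import Fraction


def identity(n):
    """Return the n x n identity matrix I_n with Fraction entries."""
    return [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]


def mat_mul(A, B):
    """Multiply matrices stored as lists of rows; assumes len(A[0]) == len(B)."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def invert_by_row_reduction(A):
    """Row reduce [A | I_n] to [I_n | A^{-1}]; return None if A is singular."""
    n = len(A)
    # Build the augmented matrix [A | I_n] with exact rational entries.
    M = [[Fraction(x) for x in row] + id_row
         for row, id_row in zip(A, identity(n))]
    for col in range(n):
        # Find the first row at or below position `col` with a nonzero entry here.
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            return None  # no pivot in this column: A is not row equivalent to I_n
        # Row operation 1: interchange two rows.
        M[col], M[pivot] = M[pivot], M[col]
        # Row operation 2: scale the pivot row so the pivot entry becomes 1.
        p = M[col][col]
        M[col] = [entry / p for entry in M[col]]
        # Row operation 3: add multiples of the pivot row to clear the column.
        for r in range(n):
            if r != col and M[r][col] != 0:
                factor = M[r][col]
                M[r] = [a - factor * b for a, b in zip(M[r], M[col])]
    # The left half is now I_n, so the right half is A^{-1}.
    return [row[n:] for row in M]


def inverse_2x2(a, b, c, d):
    """2 x 2 formula: (1/(ad - bc)) [[d, -b], [-c, a]], or None if ad - bc = 0."""
    det = Fraction(a * d - b * c)
    if det == 0:
        return None
    return [[d / det, -b / det], [-c / det, a / det]]


if __name__ == "__main__":
    A = [[2, 1], [5, 3]]  # ad - bc = 6 - 5 = 1
    A_inv = invert_by_row_reduction(A)
    print(A_inv == inverse_2x2(2, 1, 5, 3))   # True: both methods agree
    print(mat_mul(A, A_inv) == identity(2))   # True
    print(mat_mul(A_inv, A) == identity(2))   # True

    # A singular matrix: ad - bc = 4 - 4 = 0, so no inverse is found.
    print(invert_by_row_reduction([[1, 2], [2, 4]]))  # None

    # Check (AB)^{-1} = B^{-1} A^{-1} on a small example.
    B = [[1, 1], [0, 1]]
    AB_inv = invert_by_row_reduction(mat_mul(A, B))
    print(AB_inv == mat_mul(invert_by_row_reduction(B), A_inv))  # True
```

Exact rational arithmetic keeps the test `M[r][col] != 0` meaningful. With floating-point entries, a common choice is to pick the entry of largest absolute value as the pivot (partial pivoting) and compare against a tolerance rather than against exact zero.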