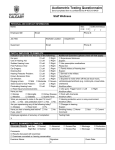

Hearing Evaluation

Auditory processing disorder

Sound localization

Olivocochlear system

Evolution of mammalian auditory ossicles

Telecommunications relay service

Auditory system

Hearing loss

Hearing aid

Noise-induced hearing loss

Sensorineural hearing loss

Audiology and hearing health professionals in developed and developing countries