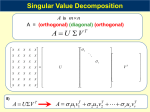

Please put away electronic devices
Take out clickers

Math Tools

A representational view of matrices
[Diagram: Output = Operations × Input; each row of the operation matrix is one operation, each column of the input matrix is one data vector]

A note on notation
• m = number of rows
• n = number of columns
• “Number”: Number of vectors
• “Size”: Dimensionality of each vector (elements)
[Operation matrix] [Input matrix]
• [number of operations x operation size] [size of input x number of inputs]
• [m1 x n1] [m2 x n2] = [m1 x n2]
• [2 x 3] [3 x 2] = [2 x 2]
• n1 and m2 (where the matrices touch) have to match – size of operations has to match size of inputs.
• The resulting matrix will have dimensionality number of operations x number of inputs.

Inner Product = Dot product
Dot product = Row Vector * Column Vector = Scalar
DP = [1xn] [mx1] = [1x1] (m=n)
[1 2 3 4] [5; 6; 7; 8] = 1*5 + 2*6 + 3*7 + 4*8 = 70

Matrix multiplication
[1 2; 3 4] [5 6; 7 8] = [(1*5 + 2*7) (1*6 + 2*8); (3*5 + 4*7) (3*6 + 4*8)] = [19 22; 43 50]

The outer product
• What if we do matrix multiplication, but when the two matrices are a single column and row vector?
OP = [mx1] [1xn] = [mxn]
• Output is a *matrix*, not a scalar.
[1; 2] [3 4] = [(1*3) (1*4); (2*3) (2*4)] = [3 4; 6 8]

The transpose = flipping the matrix
[1 2 3; 4 5 6; 7 8 9] becomes [1 4 7; 2 5 8; 3 6 9]
NOT just a rotation!

Singular Value Decomposition (SVD)
• If you really want to understand what Eigenvalues and Eigenvectors are…
• The culminating end point of a linear algebra course.
• What we will use for the class (and beyond) to use the power of linear algebra to do stuff we care about.

Remember this?

Singular Value Decomposition (SVD)
M = U S V^T
U: Orthogonal (“Rotate”)
S: Diagonal (“Stretch”)
V^T: Orthogonal (“Rotate”)

SVD
U: Output
S: Scaling
V^T: Input

A simple case
U = [û1 û2] (O), S = diag(s1, s2) (S), V^T = [v̂1^T; v̂2^T] (I), where V = [v̂1 v̂2]
Outer Product!

SVD can be interpreted as
• A sum of outer products!
• Decomposing the matrix into a sum of scaled outer products.
• Key insight: The operations on respective dimensions stay separate from each other, all the way – through v, s and u.
• They are grouped, each operating on another piece of the input.

Why does this perspective matter?
U = [û1 û2] (O), S = diag(s1, s2) (S), V^T = [v̂1^T; v̂2^T] (I)

Is the SVD unique?
• Is there only one way to decompose a matrix M into U, S and V^T?
• Is there another set of orthogonal matrices combined with a diagonal one that does the same thing?
• The SVD is *not* unique.
• But for somewhat silly reasons.
• Ways in which it might not be unique?

Sign flipping!
U = [û1 û2], S = diag(s1, s2), V^T = [v̂1^T; v̂2^T]
U = [û1 û2], S = diag(-s1, s2), V^T = [-v̂1^T; v̂2^T]

Permutations!
U = [û1 û2], S = diag(s1, s2), V^T = [v̂1^T; v̂2^T]
U = [û2 û1], S = diag(s2, s1), V^T = [v̂2^T; v̂1^T]

Given that the SVD is *not* unique, how can we make sure programs will all arrive at the exact *same* SVD?
• Conventions!
• MATLAB picks the values in the main diagonal of S to be positive – this also sets the signs in the other two matrices.
• MATLAB orders them in decreasing magnitude, with the largest one in the upper left.
• If it runs its algorithm and arrives at the wrong order, it can always sort them (and does this under the hood).

What happens if one of the entries in the main diagonal of S is zero?
U = [û1 û2], S = diag(s1, 0), V^T = [v̂1^T; v̂2^T]

The nullspace
• The set of vectors that are mapped to zero.
• Information loss.
• Always comes about via multiplication by a zero in the main diagonal of S.
• A one-dimensional black hole.
• A hydraulic press.
• “What happens to the inputs?”
• The part of the input space (V) that is lost
• Is a “subspace” of the input space.

What if S is not square?
U = [û1 û2], S = [1 0 0; 0 1 0], V^T = [v̂1^T; v̂2^T; v̂3^T]

What if the null space is multidimensional?
U = [û1 û2], S = [1 0 0; 0 0 0], V^T = [v̂1^T; v̂2^T; v̂3^T]

Nullspaces matter in cognition
• Categorization
• Attention
• Depth perception

The range space
• Conceptually, a complement to the null space.
• “What can we reach in the output space?”
• “Where can we get to?”
• Some things might be unachievable.
• Where we can get to in the output space (U)?

How might we not reach a part of the output space?
U = [û1 û2], S = diag(s1, 0), V^T = [v̂1^T; v̂2^T]

The inverse question
• If I observe the outputs of a linear system and watch what is coming out, could I figure out what the inputs were?
• Related problem: If you start with 2 things in the input space and run them through the system and compare the outputs, can we still distinguish them as different?
• So when is the linear system invertible?
• How might it not be?

Inversion of matrices
• Matrix M can be inverted by doing the operations of the SVD in inverse order.
• Peel them off one by one (last one done first):
• M = U S V^T
• Inverting M:
• (V S# U^T) (U S V^T) = V S# S V^T = V V^T = I, because Q^T Q = Q Q^T = I for an orthogonal Q, so U^T U, then S# S, then V V^T each collapse to I.

The pseudoinverse
• “The best we can do”.
• We recover the information we can.
• The non-zero dimensions in the diagonal of S.

Next stop
• Eigenvectors
• Eigenvalues
• They will pop out as a special case of the SVD.

How else might we not reach a part of the output space?
U = [û1 û2 û3], S = [1 0; 0 1; 0 0], V^T = [v̂1^T; v̂2^T]
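
A numerical check of the main claims in these slides
The slides refer to MATLAB, but the sketch below uses Python with NumPy instead, as an assumption about what is convenient to run. The matrix M is a hypothetical example (not from the slides), chosen to be 2 x 3 so that S is not square and a null space exists. The tolerance `tol` and all variable names are also assumptions made for illustration. It checks three things: that M equals a sum of scaled outer products, that the leftover rows of V^T are mapped to zero, and that V S# U^T matches a library pseudoinverse.

```python
import numpy as np

# Hypothetical example matrix (not from the slides): 2 x 3, so S is not square.
M = np.array([[3.0, 1.0, 1.0],
              [-1.0, 3.0, 1.0]])

# NumPy returns the singular values as a 1-D array s (non-negative, sorted in
# decreasing order), together with U and V^T.
U, s, Vt = np.linalg.svd(M, full_matrices=True)

# 1) M as a sum of scaled outer products: sum over i of s_i * u_i * v_i^T.
M_sum = sum(s[i] * np.outer(U[:, i], Vt[i, :]) for i in range(len(s)))

# 2) Null space: the rows of V^T that are not paired with a non-zero
#    singular value. The tolerance is an assumption for this sketch.
tol = 1e-12
rank = int(np.sum(s > tol))
null_basis = Vt[rank:, :]  # shape (n - rank, n)

# 3) Pseudoinverse: V S# U^T, inverting only the non-zero singular values.
S_sharp = np.zeros((M.shape[1], M.shape[0]))
S_sharp[:rank, :rank] = np.diag(1.0 / s[:rank])
M_pinv = Vt.T @ S_sharp @ U.T

print(np.allclose(M, M_sum))                    # sum of outer products rebuilds M
print(np.allclose(M @ null_basis.T, 0.0))       # null-space vectors map to zero
print(np.allclose(M_pinv, np.linalg.pinv(M)))   # agrees with NumPy's pinv
```

Building S# by hand, rather than calling `np.linalg.pinv` directly, keeps the "peel the operations off in inverse order" idea visible: only the non-zero entries on the diagonal of S are inverted, and the dimensions lost to the null space stay lost.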