2 Basics of Probability and Statistics

2.1 Sample Space, Events, and Probability Measure

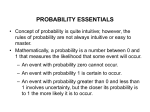

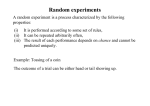

1. Random Experiment: A random experiment is a process leading to at least two possible outcomes, with uncertainty as to which will occur. Examples: tossing a coin, throwing a die, pulling the lever of a slot machine.

2. Sample Space (S): A sample space is the set of all possible outcomes of an experiment.

• Toss a coin: S = {H, T}.

• Toss two coins: S = {HH, HT, TH, TT}.

• NY Mets play a doubleheader: S = {WW, WL, LW, LL}.

Each element of the sample space is called a sample point.
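The sample spaces listed above can be enumerated mechanically. The following is a minimal sketch, assuming each trial has the same set of outcome labels; the helper name `sample_space` is hypothetical and not part of the notes.

```python
from itertools import product

def sample_space(n_trials, outcomes="HT"):
    """Return every outcome string for n_trials repeated trials.

    Hypothetical helper: each trial is assumed to draw from the same
    outcome labels, e.g. "HT" for a coin or "WL" for a ball game.
    """
    return ["".join(p) for p in product(outcomes, repeat=n_trials)]

one_coin = sample_space(1)                    # toss a coin
two_coins = sample_space(2)                   # toss two coins
doubleheader = sample_space(2, outcomes="WL") # two games, win or lose

print(one_coin)
print(two_coins)
print(doubleheader)
```

Each string in the returned list is one sample point, so the length of the list is the number of sample points.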

3. Events: An event is a subset of the sample space. For example, the subsets of S = {H, T} are ∅, {H}, {T}, and {H, T}. These are the four possible events from tossing a coin. ∅ is called the null event (it is there for mathematical completeness) and means that nothing happens; {H} is the event of a head; {T} is the event of a tail; {H, T} is the event that “anything happens”.

• Exercise: In the “toss two coins” example, express the event “one head and one tail”. Answer: {HT, TH}.

• Exercise: In the “toss two coins” example, express the event “the first coin is a head”.

4. The collection of all the events is called the σ-algebra, denoted by 𝒮 (a script letter, to distinguish it from the sample space S). For example, the σ-algebra for the “toss a coin” example is 𝒮 = {∅, {H}, {T}, {H, T}}. The σ-algebra for the “toss two coins” example is

𝒮 = {∅, {HH}, {HT}, {TH}, {TT}, {HH, HT}, {HH, TH},

{HH, TT}, {HT, TH}, {HT, TT}, {TH, TT}, {HH, HT, TH},

{HH, TH, TT}, {HH, HT, TT}, {HT, TH, TT}, {HH, HT, TH, TT}}.
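For a finite sample space, the collection of all events listed above is the set of all subsets of the sample space. A minimal sketch that enumerates it is shown here; the function name `all_events` is an assumption made for illustration.

```python
from itertools import chain, combinations

def all_events(sample_space):
    """Return every subset of a finite sample space as a frozenset.

    Hypothetical helper: it builds the subsets of size 0, 1, ..., n in turn.
    """
    points = list(sample_space)
    subsets = chain.from_iterable(
        combinations(points, r) for r in range(len(points) + 1)
    )
    return [frozenset(s) for s in subsets]

events = all_events(["HH", "HT", "TH", "TT"])
print(len(events))  # 2**4 = 16 events, including the null event
```

A sample space with n sample points yields 2^n events, which is why the one-coin example has 4 events and the two-coin example has 16.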

5. Events are said to be mutually exclusive if the occurrence of one event prevents the occurrence of the other at the same time. In the “toss two coins” example, the event “one head and one tail” {HT, TH} and the event “two heads” {HH} are mutually exclusive.
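In set terms, two events are mutually exclusive when they share no sample point. The sketch below, with the hypothetical helper `mutually_exclusive`, checks this for the two-coin events by building each event from a condition on the outcome string.

```python
S = {"HH", "HT", "TH", "TT"}

# Events written as subsets of S, selected by a condition on each outcome.
one_head_one_tail = {w for w in S if w.count("H") == 1}
two_heads = {w for w in S if w.count("H") == 2}
first_is_head = {w for w in S if w[0] == "H"}

def mutually_exclusive(a, b):
    """Two events are mutually exclusive when their intersection is empty."""
    return a.isdisjoint(b)

print(mutually_exclusive(one_head_one_tail, two_heads))  # True
print(mutually_exclusive(first_is_head, two_heads))      # False: both contain HH
```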


6. A probability measure P is a mapping from the σ-algebra 𝒮 of events to [0, 1] that satisfies:

• P(∅) = 0, P(S) = 1;

• P(A) ≥ 0 for all A ∈ 𝒮;

• If A1 ∈ 𝒮, A2 ∈ 𝒮, and A1 and A2 are mutually exclusive, then P(A1 ∪ A2) = P(A1) + P(A2).

Notation: We use A1 + A2 (or A1 ∪ A2) to denote the occurrence of either A1 or A2, and A1 A2 (or A1 ∩ A2) to denote the simultaneous occurrence of A1 and A2.
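One concrete probability measure is the equally-likely measure on a finite sample space. The sketch below assumes two fair coins, so every sample point has probability 1/4, and checks the three properties on one pair of events; the function `P` is a hypothetical illustration, not a general definition.

```python
from fractions import Fraction

S = frozenset({"HH", "HT", "TH", "TT"})

def P(event):
    """Equally-likely measure on S: P(event) = |event| / |S|.

    Assumption: the coins are fair, so all sample points are equally likely.
    """
    event = frozenset(event)
    if not event <= S:
        raise ValueError("an event must be a subset of the sample space")
    return Fraction(len(event), len(S))

A1 = {"HT", "TH"}  # one head and one tail
A2 = {"HH"}        # two heads; mutually exclusive with A1

print(P(set()), P(S))               # null event and whole sample space
print(P(A1 | A2), P(A1) + P(A2))    # additivity for mutually exclusive events
```

Exact fractions are used instead of floats so that the equalities hold without rounding error.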

7. Two events A and B are said to be statistically independent if the probability of their occurring together equals the product of their individual probabilities, that is,

P(A ∩ B) ≡ P(AB) = P(A) P(B).

• Example: “toss two fair coins”. What is the probability of obtaining a head on the first coin and a head on the second coin? Event A is the event of a head on the first coin, {HH, HT}; event B is the event of a head on the second coin, {HH, TH}. The event AB is {HH}. Common sense suggests that A and B are statistically independent, hence P({HH}) = P(A) P(B) = 1/2 × 1/2 = 1/4.
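The example above can also be checked by counting. This sketch assumes the four outcomes are equally likely (fair coins) and verifies the product rule directly rather than taking independence for granted; `prob` is a hypothetical helper.

```python
from fractions import Fraction

S = ["HH", "HT", "TH", "TT"]

def prob(event):
    """Probability under equally likely outcomes (fair-coin assumption)."""
    return Fraction(len(event), len(S))

A = {w for w in S if w[0] == "H"}  # head on the first coin
B = {w for w in S if w[1] == "H"}  # head on the second coin
AB = A & B                         # head on both coins

independent = prob(AB) == prob(A) * prob(B)
print(prob(A), prob(B), prob(AB), independent)
```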

8. If events A and B are not mutually exclusive, then [illustrate by a diagram]

P(A + B) = P(A) + P(B) − P(AB),

where P(A + B) is the probability that either A or B occurs and P(AB) is the probability that A and B both occur. If A and B are mutually exclusive, then of course P(AB) = P(A ∩ B) = P(∅) = 0 by the definition of a probability measure.

• Example: A card is drawn from a deck of 52 cards. What is the probability that it is either a heart or a queen? Event A: the card is a heart; event B: the card is a queen.

P(A + B) = P(A) + P(B) − P(AB)

= 13/52 + 4/52 − 1/52 = 16/52 = 4/13.
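The card example can be reproduced by enumerating a deck. The sketch below assumes a standard 52-card deck with every card equally likely to be drawn; the rank and suit labels are illustrative choices.

```python
from fractions import Fraction
from itertools import product

ranks = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
suits = ["hearts", "diamonds", "clubs", "spades"]
deck = list(product(ranks, suits))  # 52 (rank, suit) pairs

def prob(event):
    """Probability of an event when every card is equally likely."""
    return Fraction(len(event), len(deck))

hearts = {card for card in deck if card[1] == "hearts"}
queens = {card for card in deck if card[0] == "Q"}

direct = prob(hearts | queens)                               # count the union
by_formula = prob(hearts) + prob(queens) - prob(hearts & queens)
print(direct, by_formula)
```

Subtracting P(AB) removes the queen of hearts, which would otherwise be counted twice.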


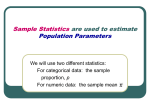

9. Conditional probability: This concept answers the following question: what is the probability that event A occurs, knowing that event B has occurred (this implies that P(B) > 0)? This is called the conditional probability of event A, conditional on event B occurring, and it is denoted by P(A|B). The formula is:

$$P(A|B) = \frac{P(AB)}{P(B)},$$

where the numerator P(AB) is called the joint probability of events A and B and the denominator is called the marginal probability of event B.

• Example 1: I randomly draw a card from a deck of cards. Suppose that I tell you the card drawn is a queen. What is the probability that the card is a heart? Event B: the card is a queen; event A: the card is a heart. The probability is

$$P(A|B) = \frac{P(AB)}{P(B)} = \frac{1/52}{4/52} = \frac{1}{4}.$$
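With equally likely outcomes, conditioning on B amounts to restricting attention to the sample points in B. A minimal sketch for Example 1 follows, assuming a standard 52-card deck; the helper `cond_prob` is hypothetical.

```python
from fractions import Fraction

ranks = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
suits = ["hearts", "diamonds", "clubs", "spades"]
deck = [(rank, suit) for rank in ranks for suit in suits]

def cond_prob(a, b):
    """P(a | b) for equally likely outcomes: |a and b| / |b|.

    This equals P(ab) / P(b) because the common factor 1/|deck| cancels.
    """
    if not b:
        raise ValueError("the conditioning event must have positive probability")
    return Fraction(len(a & b), len(b))

hearts = {card for card in deck if card[1] == "hearts"}
queens = {card for card in deck if card[0] == "Q"}

print(cond_prob(hearts, queens))  # P(heart | queen)
print(cond_prob(queens, hearts))  # P(queen | heart), a different quantity
```

The second call illustrates that P(A|B) and P(B|A) are generally not equal.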

• Example 2: In Economics 101 there are a total of 500 students, of whom 300 are males and 200 are females. We know that 100 males and 60 females plan to major in economics. A student is randomly selected from the class, and it is found that this student plans to major in economics. What is the probability that this student is a male? Answer: Event A: the student is a male; event B: the student is an economics major.

$$P(A|B) = \frac{P(AB)}{P(B)} = \frac{100/500}{160/500} = \frac{5}{8}.$$

What is the unconditional probability that a randomly drawn student is a male?

P(A) = 300/500 = 0.6.
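Example 2 can be laid out as a table of counts, from which the joint, marginal, and conditional probabilities follow. In this sketch the two "other" counts are derived from the totals given above (300 − 100 and 200 − 60), and the dictionary layout is an illustrative choice.

```python
from fractions import Fraction

# Counts by (sex, intended major); "other" entries are derived from the totals.
counts = {
    ("male", "econ"): 100,
    ("male", "other"): 200,
    ("female", "econ"): 60,
    ("female", "other"): 140,
}
total = sum(counts.values())  # 500 students

# Joint probability P(AB): male and economics major.
p_male_and_econ = Fraction(counts[("male", "econ")], total)

# Marginal probabilities P(B) and P(A), summing over the other attribute.
p_econ = Fraction(sum(n for (sex, major), n in counts.items() if major == "econ"), total)
p_male = Fraction(sum(n for (sex, major), n in counts.items() if sex == "male"), total)

# Conditional probability P(A|B) = P(AB) / P(B).
p_male_given_econ = p_male_and_econ / p_econ

print(p_male_given_econ, p_male)
```

Since 5/8 differs from 3/5, learning that the student is an economics major changes the probability that the student is male.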

• Prove: If events A and B are statistically independent, then P(A|B) = P(A). [Interpretation: if two events are statistically independent, then knowing that one event has occurred does not reveal any information about the likelihood of the other event.]
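One way to sketch the argument, assuming P(B) > 0 so that the conditional probability is defined, is to substitute the definition of independence into the conditional probability formula:

$$P(A|B) = \frac{P(AB)}{P(B)} = \frac{P(A)\,P(B)}{P(B)} = P(A).$$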

10. Law of Total Probability: Let the sample space S be partitioned into k mutually exclusive and exhaustive events $C_1, \dots, C_k$ such that $P(C_i) > 0$ for all i. Let C be another event such that P(C) > 0. We have

$$\begin{aligned} C &= C \cap S = C \cap (C_1 \cup C_2 \cup \dots \cup C_k) \\ &= (C \cap C_1) \cup (C \cap C_2) \cup \dots \cup (C \cap C_k), \end{aligned}$$

hence, since the events $C \cap C_i$ are mutually exclusive,

$$\begin{aligned} P(C) &= P(C \cap C_1) + \dots + P(C \cap C_k) \\ &= P(C_1)\,P(C|C_1) + \dots + P(C_k)\,P(C|C_k) \\ &= \sum_{i=1}^{k} P(C_i)\,P(C|C_i). \end{aligned}$$
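As a numerical check of the sum above, the Economics 101 figures can be reused with the partition {male, female} and C the event "economics major". This is a sketch under that assumption; the dictionary names and the function `total_probability` are hypothetical.

```python
from fractions import Fraction

# P(C_i): probabilities of the partition events (male, female).
prior = {"male": Fraction(300, 500), "female": Fraction(200, 500)}

# P(C | C_i): probability of planning an economics major within each group.
likelihood = {"male": Fraction(100, 300), "female": Fraction(60, 200)}

def total_probability(prior, likelihood):
    """Return P(C) as the sum over i of P(C_i) * P(C | C_i)."""
    if sum(prior.values()) != 1:
        raise ValueError("the partition probabilities must sum to 1")
    return sum(prior[c] * likelihood[c] for c in prior)

p_econ = total_probability(prior, likelihood)
print(p_econ)  # agrees with 160/500 from the class counts
```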

11. Bayes’ Theorem:

$$P(C_j|C) = \frac{P(C \cap C_j)}{P(C)} = \frac{P(C|C_j)\,P(C_j)}{\sum_{i=1}^{k} P(C_i)\,P(C|C_i)},$$

where $C_i$, i = 1, ..., k, are mutually exclusive and exhaustive events of the sample space S. The $P(C_i)$ are called the prior probabilities of the events $C_i$, and the $P(C_i|C)$ are known as the posterior probabilities of the events $C_i$ after it is known that event C occurred.
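Bayes' theorem can be sketched as a small function that turns priors and conditional probabilities into posteriors. The example reuses the Economics 101 figures as an assumed illustration, with the partition {male, female} and C the event "economics major"; the function name `posterior` is hypothetical.

```python
from fractions import Fraction

def posterior(prior, likelihood):
    """Return P(C_j | C) for every j, given P(C_j) and P(C | C_j).

    The denominator is P(C), computed by the law of total probability.
    """
    evidence = sum(prior[c] * likelihood[c] for c in prior)
    if evidence == 0:
        raise ValueError("P(C) must be positive")
    return {c: prior[c] * likelihood[c] / evidence for c in prior}

prior = {"male": Fraction(300, 500), "female": Fraction(200, 500)}
likelihood = {"male": Fraction(100, 300), "female": Fraction(60, 200)}

post = posterior(prior, likelihood)
print(post["male"], post["female"])
```

The posterior for "male" matches the 5/8 obtained directly from the counts in Example 2, and the posteriors sum to 1 because the partition is exhaustive.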
