SQL Server 2014 and Windows Azure Blob Storage Service: Better Together

SQL Server Technical Article

Summary: SQL Server 2014 introduces significant new features for deeper integration with Microsoft Azure, unlocking new scenarios and providing more flexibility in the database space for IaaS data models. This technical article covers SQL Server 2014 Data Files on the Azure Blob storage service in depth, starting with step-by-step configuration and then providing guidance on scenarios, benefits and limitations, best practices, and lessons learned from early testing and adoption. Additionally, a fully featured example of a new feature similar to Windows Server Failover Clustering is introduced to demonstrate the power of Microsoft Azure and SQL Server 2014 when combined.

Writers: Igor Pagliai, Francesco Cogno

Technical Reviewers: Silvano Coriani, Francesco Diaz, Pradeep M.M, Luigi Delwiche, Ignacio Alonso Portillo

Published: April 2014 (revision 2, June 2014)

Applies to: SQL Server 2014 and Microsoft Azure

Copyright

This document is provided “as-is”. Information and views expressed in this document, including URL and

other Internet Web site references, may change without notice. You bear the risk of using it.

Some examples depicted herein are provided for illustration only and are fictitious. No real association

or connection is intended or should be inferred.

This document does not provide you with any legal rights to any intellectual property in any Microsoft

product. You may copy and use this document for your internal, reference purposes.

© 2014 Microsoft. All rights reserved.

Contents

Introduction

1. Architecture
    1.1 SQL Server Data Files in Windows Azure: overview
    1.2 Tools and interfaces
    1.3 Limitations
    1.4 Usage scenarios
    1.5 Pros and cons

2. Configuration
    2.1 Step-by-step procedure
    2.2 Best practices
    2.3 Operations

3. Implementing a failover cluster mechanism
    3.1 Introduction
    3.2 Shared nothing model in SQL Server Data Files in Windows Azure
    3.3 Endpoint mapping
    3.4 Polling architecture
    3.5 Limitations of this architecture
        3.5.1 No metadata sharing
        3.5.2 Failover at database level
        3.5.3 No availability group
    3.6 Implementation
        3.6.1 Requirements
        3.6.2 Metadata tables
        3.6.3 Polling stored procedures
        3.6.4 Polling job
    3.7 Step-by-step installation example

4. Monitoring and troubleshooting
    4.1 Windows Azure Management Portal
    4.2 Windows Azure Storage logs and analytics
    4.3 Performance counters
    4.4 SQL Server wait types
    4.5 SQL Server xEvents trace
    4.6 Errors

5. Conclusion

Appendix
    A. Wait stats analysis
    B. Enabling Transparent Data Encryption (TDE)
    C. Downloading Database file blobs using a Valet Key Pattern
    D. Microsoft SQL Server to Windows Azure helper library
    E. Installing SQL Management Objects
    G. Stored procedure reference
        1) [HAOnAzure].[PlaceDBOnHA]
        2) [HAOnAzure].[ScanForDBAndMountEndpoints]
        3) [HAOnAzure].[ScanForDetachedDBsAndAttach]
        4) [HAOnAzure].[UpsertAzureAccountCredentials]
        5) [HaOnAzure].[InsertOrUpdateEndpointOnHA]
        6) [HaOnAzure].[RemoveDBFromHA]
        7) [HaOnAzure].[RemoveEndpointFromHA]
        8) [HaOnAzure].[ShowEndpoints]
        9) [HAOnAzure].[ShowDatabasesOnHA]

Introduction

The goal of this white paper is to provide guidance to developers and IT professionals on how to use a new Microsoft SQL Server 2014 feature called SQL Server Data Files in Windows Azure. It explains the architecture, provides step-by-step instructions for configuration, clarifies limitations and advantages, and then gives guidance on performance, monitoring, and troubleshooting. Finally, it provides a fully functional example of how to create a custom high-availability and disaster-recovery solution that is very similar to typical on-premises failover clusters in Windows Server.

Note: In the context of this white paper, SQL Server Data Files in Windows Azure is the capability of SQL

Server 2014 to host database data and transaction log files directly on Windows Azure blob storage,

without using Windows Azure data disks as intermediate storage containers.

This white paper has an appendix that contains all the necessary code, stored procedure references, and

helper libraries. It also includes a step-by-step installation procedure for a custom cluster mechanism we

implemented based on SQL Server Data Files in Windows Azure.

This feature, which was initially released in SQL Server 2014, enables native support for SQL Server

database files stored as Windows Azure blobs. It enables you to create a database in SQL Server running

on-premises or in a virtual machine in Windows Azure with a dedicated storage location for your data in

Windows Azure blob storage. This enhancement helps make it easier to move databases between

machines through the use of detach and attach operations. In addition, it provides an alternative

storage location for your database backup files by allowing you to restore from or to Windows Azure

Storage. SQL Server Data Files in Windows Azure enables you to create low-cost, highly available, and

elastically scaling hybrid solutions that meet your organization’s requirements for data virtualization,

data movement, security, and availability.

1. Architecture

The SQL Server Data Files in Windows Azure feature is available in all editions of SQL Server 2014; it is enabled by default and provided at no additional cost.

This improvement delivers native support for SQL Server database files stored as Windows Azure blobs, making SQL Server 2014 the first truly hybrid-cloud enabled database. SQL Server instances, whether running on-premises or in virtual machines, can now interact directly with Windows Azure blobs. This improves agility in terms of attaching and moving databases and makes restore possible without data movement. It also opens the way for a highly available solution that uses Windows Azure blob storage as shared storage between multiple nodes.

This feature was first introduced in SQL Server 2014. For more information about SQL Server integration with Windows Azure Storage, see the following topic in SQL Server Books Online:

SQL Server Data Files in Windows Azure

http://msdn.microsoft.com/en-us/library/dn385720(v=sql.120).aspx

1.1 SQL Server Data Files in Windows Azure: overview

SQL Server 2014 integration with Windows Azure blob storage occurs at a deep level, directly into the

SQL Server Storage Engine; SQL Server Data Files in Windows Azure is more than a simple adapter

mechanism built on top of an existing software layer.

The Manager Layer includes a new component called XFCB Credential Manager, which

manages the security credentials necessary to access the Windows Azure blob containers

and provides the necessary security interface; secrets are maintained encrypted and

secured in the SQL Server built-in security repository in the master system database.

The File Control Layer contains a new object called XFCB, which is the Windows Azure

extension to the file control block (FCB) used to manage I/O against each single SQL Server

data or log file on the NTFS file system; it implements all the APIs that are required for I/O

against Windows Azure blob storage.

At the Storage Layer, the SQL Server I/O Manager is now able to natively generate REST API

calls to Windows Azure blob storage with minimal overhead and great efficiency; in

addition, this component can generate information about performance counters and

extended events (xEvents).

Important: The only Windows Azure blob type supported by SQL Server 2014 is page blob; any attempt

to use block blob will fail. To understand the difference between block blobs and page blobs, see

“Understanding Block Blobs and Page Blobs” in the Windows Azure documentation at

http://msdn.microsoft.com/en-us/library/windowsazure/ee691964.aspx.

The path to Windows Azure Storage is different using this new feature. If you use the Windows Azure

data disk and then create a database on it, I/O traffic passes through the Virtual Disk Driver on the

Windows Azure Host node. However, if you use SQL Server Data Files in Windows Azure, I/O traffic uses

the Virtual Network Driver.

SQL Server 2014 can run native REST API calls against Windows Azure blobs via the network directly. For

more information about SQL Server in Windows Azure Virtual Machines (IaaS) scalability target and

performance optimization, see the following white paper:

Performance Guidance for SQL Server in Windows Azure Virtual Machines

http://go.microsoft.com/fwlink/?LinkId=306266

1.2 Tools and interfaces

SQL Server 2014 comes with a series of tools and interfaces you can use to interact with the Windows

Azure blob storage integration:

Windows PowerShell cmdlets. New cmdlets in SQL Server 2014 can be used to create a SQL

Server database that references a blob storage URL path instead of a file path.

Performance counters. A new object called HTTP_STORAGE_OBJECT tracks activity in System

Monitor when you are running SQL Server with Windows Azure Storage data or log files.

SQL Server Management Studio. URL paths can be used in all the database-related dialog boxes

for creating, attaching, restoring, and reviewing database properties; you can also use Object

Explorer to browse and connect directly to Windows Azure blob storage accounts.

SQL Server Management Objects. You can specify URLs to data or log file paths in Windows

Azure Storage in SQL Server Management Objects (SMO); additionally, a new property,

HasFileInCloud, in the object Microsoft.SqlServer.Management.Smo.Database returns true if the

database has at least one file stored in Windows Azure blob storage.

Transact-SQL. Transact-SQL syntax now supports usage of URL paths in database creation and

file layout management. Also, a new column called credential_id in the sys.master_files system

view enables you to create joins with the sys.credentials system view and retrieve details about

Windows Azure blob storage access policies and containers.
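
The join between sys.master_files and sys.credentials mentioned in the Transact-SQL item can be sketched as follows. This is a minimal illustration, not a query taken from this paper; the column aliases are arbitrary, and files stored on local disks are excluded by the inner join because their credential_id does not match a credential.

```sql
-- List every database file that lives in Windows Azure blob storage,
-- together with the SQL Server credential used to reach its container.
SELECT
    DB_NAME(mf.database_id) AS database_name,
    mf.name                 AS logical_file_name,
    mf.physical_name        AS blob_url,
    c.name                  AS credential_name,      -- matches the container URL
    c.credential_identity   AS credential_identity
FROM sys.master_files AS mf
JOIN sys.credentials  AS c
    ON c.credential_id = mf.credential_id
ORDER BY database_name, logical_file_name;
```

Because the credential name is the container URL, the output also shows at a glance which container each file depends on.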

1.3 Limitations

Windows Azure blob storage integration in SQL Server 2014 comes with some limitations, mainly due to

the nature of Windows Azure blob storage:

Maximum file size and number of files. The maximum size of a single page blob in Windows Azure storage is 1 terabyte (TB). You can create any number of blobs (and containers), but the maximum total size per storage account is 200 TB.

Geo-replication. The Windows Azure blob storage geo-replication feature is not supported and must be disabled. Because geo-replication is asynchronous and there is no way to guarantee write ordering between data and log files, using this feature can cause database corruption if a failover occurs.

Filestream. Filestream is not supported on Windows Azure blob storage. Only MDF, LDF, and

NDF files can be stored in Windows Azure blob storage using SQL Server Data Files in Windows

Azure.

Hekaton in-memory database. Additional files used by the In-memory Hekaton feature are not

supported on Windows Azure blob storage. This is because Hekaton uses Filestream.

AlwaysOn. You can use AlwaysOn if you don’t need to create additional files on the primary

instance. For more information about AlwaysOn support in Windows Azure Virtual Machines

(IaaS), see the following blog post:

SQL Server 2012 AlwaysOn Availability Group and Listener in Azure VMs: Notes, Details and Recommendations

http://blogs.msdn.com/b/igorpag/archive/2013/09/02/sql-server-2012-alwayson-availability-group-and-listener-in-azure-vms-notes-details-and-recommendations.aspx

1.4 Usage scenarios

Although it is theoretically possible and officially supported, using an on-premises SQL Server 2014

installation and database files in Windows Azure blob storage is not recommended due to high network

latency, which would hurt performance; for this reason, the main target scenario for this white paper is

SQL Server 2014 installed in Windows Azure Virtual Machines (IaaS). This scenario provides immediate

benefits for performance, data movement and portability, data virtualization, high availability and

disaster recovery, and scalability limits. “Implementing a failover cluster mechanism,” later in this

document, also describes a creative example of a new low-cost mechanism that provides high

availability and disaster recovery functionalities similar to typical on-premises failover clusters in

Windows Server.

These are the biggest advantages of using SQL Server data files on Windows Azure blob storage service:

Portability: in this form (that is, with database files as blobs in Windows Azure Storage), it’s easy

to detach a database from a Windows Azure Virtual Machine (IaaS) and attach the database to a

different virtual machine in the cloud; this feature might be also suitable to implement an

efficient disaster recovery mechanism, because everything is directly accessible in Windows

Azure blob storage. To migrate or move databases, use a single CREATE DATABASE Transact-SQL

query that refers to the blob locations, with no restrictions on storage account and compute

resource location. You can also use rolling upgrade scenarios if extended downtime for

operating system and/or SQL Server maintenance is required.

Database virtualization: When combined with the contained database feature in SQL Server

2012 and SQL Server 2014, a database can now be a self-contained data repository for each

tenant and then dynamically moved to different virtual machines for workload rebalancing. For

more information about contained databases, see the following topic in SQL Server Books

Online:

Contained Databases

http://technet.microsoft.com/en-us/library/ff929071.aspx

High availability and disaster recovery: Because all database files are now externally hosted,

even if a virtual machine crashes, you can attach these files from another hot standby virtual

machine, ready to take the processing workload. A practical example of this mechanism is

provided in “Implementing a failover cluster mechanism” later in this document.

Scalability: Using SQL Server Data Files in Windows Azure, you can bypass the limitation on the maximum number of Windows Azure disks you can mount on a single virtual machine (16 for XL and A7 sizes). Each Windows Azure disk is also subject to a limit on the maximum number of I/O operations per second (IOPS); the next section discusses this limitation in greater detail.

Theoretically, you should be able to mount up to 32,767 database files on each SQL Server

instance, but it’s very important to consider the network bandwidth required to support your

workload. You should also carefully review “Pros and cons” later in this document. For more information about Windows Azure virtual machine sizes, characteristics, limitations, and performance, see the following links:

Virtual Machine and Cloud Service Sizes for Windows Azure

http://msdn.microsoft.com/library/dn197896.aspx

Performance Guidance for SQL Server in Windows Azure Virtual Machines

http://go.microsoft.com/fwlink/?LinkId=306266

Elasticity: By mixing SQL Server data files on-premises and in Windows Azure, you can expand your SQL Server instance storage space as needed. Even better, you pay only for the Azure blob pages actually in use, not the Azure blob pages allocated (note that you still pay for pages that are in use from the Azure blob perspective but unused as far as SQL Server is concerned). You can even mix the different types of storage based on cost, performance, and availability considerations, either manually or by using automatic data placement features such as partition functions along with partition schemes.

CREATE PARTITION SCHEME (Transact-SQL)

http://msdn.microsoft.com/en-us/library/ms179854.aspx
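
The portability scenario in the list above (detach on one virtual machine, attach on another) can be sketched in Transact-SQL as follows. This is an illustration under stated assumptions rather than the procedure used later in this paper: the database name MyDb, the storage account mystorageacct, the container mycontainer, and the blob names are all hypothetical, and the SECRET value is a placeholder for a real shared access signature.

```sql
-- Step 1, on the source instance: release the files by detaching the database.
USE master;
ALTER DATABASE MyDb SET SINGLE_USER WITH ROLLBACK IMMEDIATE;
EXEC sp_detach_db @dbname = N'MyDb';

-- Step 2, on the target instance: the credential for the container must exist
-- before the files can be opened. The credential name must equal the container URL.
CREATE CREDENTIAL [https://mystorageacct.blob.core.windows.net/mycontainer]
WITH IDENTITY = 'SHARED ACCESS SIGNATURE',
     SECRET = '<shared access signature for the container>';  -- placeholder

-- Step 3, on the target instance: attach the database directly from the blobs.
-- With FOR ATTACH, data and log files are all listed in the ON clause.
CREATE DATABASE MyDb
ON (FILENAME = 'https://mystorageacct.blob.core.windows.net/mycontainer/mydb_data.mdf'),
   (FILENAME = 'https://mystorageacct.blob.core.windows.net/mycontainer/mydb_log.ldf')
FOR ATTACH;
```

No data is copied in any of these steps; only the instance that owns the blobs changes.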

1.5 Pros and cons

SQL Server Data Files in Windows Azure provides several advantages and enables new usage scenarios,

but as with any SQL Server feature, it comes with pros and cons that must be carefully evaluated. The

most important consideration is related to the consumption of network: if you use this new feature, I/O

traffic now counts toward network bandwidth and not the IOPS limit per single Windows Azure disk. The

following table lists the main resource limits and thresholds for each virtual machine size.

Important: Values reported in Allocated Bandwidth (Mbps) are not officially covered or guaranteed by any Service Level Agreement (SLA) today. The information is reported only as a general estimate of what is expected for each virtual machine size. We hope in the future to enable more fine-grained control over network utilization and then to be able to provide an official SLA.

These are the main pros to consider:

It is possible to scale the number of IOPS on database data files far beyond the limit of 8,000 IOPS (500 IOPS x 16 disks) imposed by the use of additional Windows Azure disks.

Databases can be easily detached and migrated or moved to different virtual machines (that is,

portability).

Standby SQL Server virtual machines can be easily used for fast disaster recovery.

You can easily implement custom failover mechanisms. “Implementing a failover cluster

mechanism” (later in this document) demonstrates how to do this.

This mechanism is almost orthogonal to all main SQL Server 2014 features (such as AlwaysOn and backup and restore), and it is well integrated into SQL Server management tools such as SQL Server Management Studio, SMO, and SQL Server PowerShell.

You can have a fully encrypted database in which decryption occurs only on the compute instance and never on the storage instance. In other words, using this new enhancement, you can encrypt all data in the public cloud using Transparent Data Encryption (TDE) certificates, which are physically separated from the data. The TDE keys can be stored in the master database, which is stored locally on your physically secure on-premises machine and backed up locally. You can use these local keys to encrypt the data, which resides in Windows Azure Storage. If your cloud storage account credentials are stolen, your data still stays secure because the TDE certificates always reside on-premises.
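
The TDE arrangement described in the last item can be sketched with standard Transact-SQL. This is a generic outline under stated assumptions, not the procedure from the appendix: the certificate name TdeCert, the file paths under C:\Keys, and both passwords are hypothetical placeholders, and TestDBonAzure stands for any database whose files are stored as blobs.

```sql
-- The master key and certificate live in the local master database,
-- physically separate from the data blobs in Windows Azure Storage.
USE master;
CREATE MASTER KEY ENCRYPTION BY PASSWORD = '<strong password>';       -- placeholder
CREATE CERTIFICATE TdeCert WITH SUBJECT = 'TDE certificate (example)'; -- hypothetical name

-- Back up the certificate and its private key to local storage;
-- without them the encrypted database cannot be attached elsewhere.
BACKUP CERTIFICATE TdeCert
    TO FILE = 'C:\Keys\TdeCert.cer'                                    -- hypothetical path
    WITH PRIVATE KEY (
        FILE = 'C:\Keys\TdeCert.pvk',                                  -- hypothetical path
        ENCRYPTION BY PASSWORD = '<another strong password>'           -- placeholder
    );
GO

-- Create the database encryption key and turn encryption on.
USE TestDBonAzure;
CREATE DATABASE ENCRYPTION KEY
    WITH ALGORITHM = AES_256
    ENCRYPTION BY SERVER CERTIFICATE TdeCert;
ALTER DATABASE TestDBonAzure SET ENCRYPTION ON;
GO
```

Pages are encrypted before they leave the SQL Server instance, so the blobs never contain clear-text data.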

These are the main cons to consider:

I/O generated against database files using SQL Server Data Files in Windows Azure counts against the network bandwidth allocated to the virtual machine and passes through the same single network interface card used to receive client/application traffic.

It is not possible to scale the number of IOPS on the single database transaction log file. By contrast, with Windows Azure data disks you can use the Windows Server 2012 Storage Spaces feature to stripe up to four disks and improve performance. For more information about this technique, see the following white paper.

Performance Guidance for SQL Server in Windows Azure Virtual Machines

http://go.microsoft.com/fwlink/?LinkId=306266

Geo-replication for database file blobs is not supported.

Note: Even if you are using traditional Windows Azure data disks, geo-replication is not supported if more than one disk is used to contain the data files and the transaction log file.

There are some limitations, as mentioned earlier in this document in “Limitations.” For example,

the Hekaton in-memory database and Filestream features are not supported.

2. Configuration

This section provides you with a complete step-by-step procedure that creates all the necessary objects

and required configuration items to implement a fully functional scenario.

2.1 Step-by-step procedure

First of all, create a Windows Azure storage account, if one is not yet available, and then create a

container with Private security access.

Important: For performance reasons, we strongly recommend that you create the storage account in

the same data center as the virtual machine where you will install SQL Server 2014, or, even better, use

the same Windows Azure affinity group.

Because you will use at least two blobs (one for the data file and one for the log file), you should disable geo-replication, which is not supported for SQL Server in this configuration. By disabling geo-replication, you can also save around 30 percent on your storage costs. We have been asked several times by customers and partners why we recommend disabling geo-replication, and the reason is simple: because there is no ordering guaranteed in asynchronous storage replication, writes to the data file blob may occur before writes to the log file blob. This violates a fundamental principle and requirement of every transactional database system, Write-Ahead Logging (WAL), which you can read about in the following old but still valid KB article:

SQL Server 7.0, SQL Server 2000, and SQL Server 2005 logging and data storage algorithms extend data reliability

http://support.microsoft.com/default.aspx?scid=kb;en-us;230785

Next, create a container for your database with an access level of Private. The names of the storage

account and the container must be lowercase.

Note that this container has a full path equal to “http://enosg.blob.core.windows.net/sqldatacontainer”. This is important to remember when you use SQL Server Management Studio later in this paper. Although the same container can be used by multiple databases, you should create only one database per container because the security mechanism that is used in this procedure is based on the container itself.

Next, create a policy and a shared access signature (SAS) to protect and secure the container. SQL Server requires this policy in order to work correctly with Windows Azure storage. There are several ways to create the policy and the SAS. You can use C# code for this simple task or call the REST APIs directly, but this procedure uses a free tool, Azure Storage Explorer, which you can find on CodePlex:

http://azurestorageexplorer.codeplex.com

Install the tool, open it, and then insert the name and the access key of your storage account. If the

operation succeeds, the content of your storage account is displayed, along with the list of your

containers. Select the one you want to place your database in, and then click Security.

In the Blob & Container Security dialog box, click the Shared Access Policies tab, and then create a new

policy with the settings shown in the following screen shot. Click Save Policies.

In some documentation you can find on the Internet, Delete is not listed as a privilege requirement, but

if you want to be able to drop the database from SQL Server, you need to include Delete in the policy

definition. We recommend setting a fixed limit for the expiry time setting (such as one year): although it

is technically possible to have an infinite lease, it’s a good practice to use a fixed limit and then renew

periodically. For more information about blob lease times and expirations, see the following blog post:

New Blob Lease Features: Infinite Leases, Smaller Lease Times, and More

http://blogs.msdn.com/b/windowsazurestorage/archive/2012/06/12/new-blob-lease-features-infinite-leases-smaller-lease-times-and-more.aspx

For more information about shared access and blob storage and containers, see the following:

Shared Access Signatures, Part 1: Understanding the SAS Model

http://www.windowsazure.com/en-us/manage/services/storage/net/shared-access-signature-part-1

Close the dialog box and then reopen it; switching directly to the Shared Access Signatures tab for the next step will not let you see the newly created policy. After you reopen the dialog box, click the Shared Access Signatures tab. In Container name, type sqldatacontainer, and in Policy, select sqlpolicy. When you are done, click Generate Signature. A string appears in the Signature box.

Click Copy to Clipboard and then paste the string in a secure location for later reuse. Finally, close the

tool.

Now you can test integration with SQL Server Data Files in Windows Azure. Open SQL Server

Management Studio inside the Windows Azure virtual machine you created, and then create the SQL

Server credential object that you need to access the Windows Azure blob container you created before.

A SQL Server credential object stores authentication information required to connect to a resource

outside of SQL Server. The credential stores the URI path of the storage container and the SAS key

values. For each storage container used by a data or log file, you must create a SQL Server credential whose name matches the container path. That is, the credential name string must be exactly the same as the blob container path; otherwise the security information in SQL Server and Windows Azure does not match, and authentication fails. For example, with the storage container created earlier in this paper, the correct full path to use, including the HTTPS prefix, is https://enosg.blob.core.windows.net/sqldatacontainer. To create the corresponding credential object in SQL Server, use the following code in SQL Server Management Studio, enclosing the credential name in square brackets:

CREATE CREDENTIAL [https://enosg.blob.core.windows.net/sqldatacontainer]
WITH IDENTITY = 'SHARED ACCESS SIGNATURE',
SECRET = 'sr=c&si=sqlpolicy&sig=9aoywKCSbX4uQrIGEWIl%2Bfh3cMtEm5ZA3fSDxh2wskajd7';

Note: The signature in the example is for demonstration only. It is not valid.

To create the object without using Transact-SQL, use the Credentials node in SQL Server Management Studio: right-click the instance-level Security container, and then click New. In Credential name, enter the URI, and then in Identity, enter SHARED ACCESS SIGNATURE (with spaces, exactly as in the Transact-SQL example).

In Password, enter the SECRET value that you copied in the Azure Storage Tool earlier. You will need to

modify the string to get the correct value to enter. For example, the string you copied from Azure

Storage Explorer is something like this:

https://enosg.blob.core.windows.net/sqldatacontainer?sr=c&si=sqlpolicy&sig=9aoywKCSbX4uQrIGEWIl%2Bfh3cMtEm5ZA3fSDxh2wskajd7

However, the value you need to provide to SQL Server is only the substring that follows the question mark (?), up to the end of the string:

sr=c&si=sqlpolicy&sig=9aoywKCSbX4uQrIGEWIl%2Bfh3cMtEm5ZA3fSDxh2wskajd7

After the credential object is created, it is stored in encrypted form in the SQL Server master database. You do not need to maintain the object itself further, but be sure to back up the master database regularly.

You can also register and connect to blob storage accounts in Windows Azure through SQL Server

Management Studio starting with SQL Server 2012.

When you click Azure Storage, the following dialog box appears.

As with the other connection methods discussed here, the shared access key is stored securely in the

SQL Server master database.

As of this writing, you can only list, see, and in some cases delete the containers and files for storage

accounts: we hope to add enhanced signature tools in future service packs so that you can create the

connection without having to use external tools or code.

If you try to delete a file that is in use, you receive the following error.

After the connection between Windows Azure and SQL Server is established, you can create your first

database using the following Transact-SQL command.

CREATE DATABASE TestDBonAzure
ON
(NAME = file_data1, FILENAME = 'https://enosg.blob.core.windows.net/sqldatacontainer/filedata1.mdf', SIZE = 10GB),
(NAME = file_data2, FILENAME = 'https://enosg.blob.core.windows.net/sqldatacontainer/filedata2.mdf', SIZE = 10GB),
(NAME = file_data3, FILENAME = 'https://enosg.blob.core.windows.net/sqldatacontainer/filedata3.mdf', SIZE = 10GB)
LOG ON
(NAME = file_log1, FILENAME = 'https://enosg.blob.core.windows.net/sqldatacontainer/filelog1.ldf', SIZE = 1GB)

Note: In our testing, this command took 7 seconds to execute. Creating a database with a 100 GB data file and a 10 GB transaction log file completed in 17 seconds.

After you run the Transact-SQL command, the new items appear in SQL Server Management Studio.

It is worth mentioning that SQL Server uses temporary leases to reserve blobs for storage, and that each

blob lease is renewed every 45 to 60 seconds. If a server crashes and another instance of SQL Server

configured to use the same blobs is started, the new instance waits up to 60 seconds for the existing

lease on the blob to expire. To remove or break the lease manually for emergency reasons or

troubleshooting, you can use the Lease Blob REST API or the following GUI tool:

Azure Blob Lease

https://github.com/gbowerman/azurebloblease

2.2 Best practices

Depending on why you are interested in SQL Server Data Files in Windows Azure (performance, portability,

security, scalability, or high availability and disaster-recovery), different sets of best practices may apply

for specific scenarios.

When you use this feature, it’s critical to remember the following points:

If SQL Server Data Files in Windows Azure is used for certain SQL Server database files, IOPS

against those files count toward the network bandwidth and limits assigned to your virtual

machine.

Today, Windows Azure does not provide an SLA that guarantees network bandwidth for a specific virtual machine size, but we provide a general estimation in “Pros and cons” earlier in this document.

If you are using Windows Azure data disks for these database files, IOPS against those files count toward the limit of 500 IOPS per disk, with up to 16 disks, depending on the Windows Azure virtual machine size.

500 IOPS for each blob/data disk is a hard limit enforced through throttling, but it is not a

performance guarantee for each Windows Azure data disk. For more information about

performance with Windows Azure data disks, see the following white paper:

Performance Guidance for SQL Server in Windows Azure Virtual Machines

http://go.microsoft.com/fwlink/?LinkId=306266

There are many important things to consider when you plan a system that includes Windows Azure. The

following list contains information about network and storage performance targets, limits, and thresholds

that you should bear in mind:

Security

o Use a separate Windows Azure blob storage container for each database and create a

separate CREDENTIAL object, in SQL Server, for each container.

o Create Windows Azure blob storage containers with an access level of Private.

o Because Windows Azure storage does not encrypt blob data at rest, enable SQL Server Transparent Data Encryption (TDE) if you need encryption at rest. The appendix of this paper includes a sample script to enable TDE. For more information about TDE, which is fully supported in this scenario, see the following topic in SQL Server Books Online:

Transparent Data Encryption (TDE)
http://technet.microsoft.com/en-us/library/bb934049.aspx

o When you create an SAS policy, use a short-term expiration date and be sure to schedule appropriate SQL Server credential object updates to avoid blocked access. For more information about containers, policies, and SAS, see the following blog post:

About Container Shared Access Signature for SQL Server XI
http://blogs.technet.com/b/italian_premier_center_for_sql_server/archive/2013/12/05/how-to-build-your-container-shared-access-signature-for-sqlxi-fromsql-server.aspx

o All general SQL Server concepts, technologies, and features related to security, like auditing, still apply and should be adopted.

o To help ensure the highest portability and self-containment for databases, use the contained database feature in SQL Server 2014. For more information about working with contained databases, see the following topic in SQL Server Books Online:

Contained Databases
http://technet.microsoft.com/en-us/library/ff929071.aspx

Performance

o Because each Windows Azure storage account is able to provide up to 20,000 IOPS and

200 TB space for all the types (that is, blobs, tables, and queues) of accessed objects, you

should carefully monitor the total IOPS amount for all database files and ensure that you

will not be throttled. For blob objects, you can expect up to 60 MB per second, or up to

500 transactions per second.

o If your database requires higher IOPS than a single blob or file can sustain (that is, >>500

IOPS), you should not place it directly on Windows Azure blob storage using SQL Server

Data Files in Windows Azure.

Until Windows Azure Storage allows you to scale up I/O performance, the only option is to place the transaction log on a volume backed by Windows Server 2012 Storage Spaces in stripe mode, created inside the virtual machine. With this approach, you create your data files on Windows Azure blob storage using SQL Server Data Files in Windows Azure, and you leave the transaction log inside the virtual machine on traditional Windows Azure data disks. Here is an example of how to do this using Transact-SQL.

CREATE DATABASE TestDBonAzure

ON

(NAME = file_data1, FILENAME =

'https://enosg.blob.core.windows.net/sqldatacontainer/filedata1.mdf'

, SIZE = 10GB)

LOG ON

(NAME = file_log1,

FILENAME = 'Z:\DATA\file_log1.ldf', SIZE = 1GB)

For more information about Windows Server 2012 Storage Spaces and I/O scalability in

Windows Azure virtual machines, see the following white paper:

Performance Guidance for SQL Server in Windows Azure Virtual Machines

http://go.microsoft.com/fwlink/?LinkId=306266

o We do not recommend the use of SQL Server Data Files in Windows Azure for the master, model, and msdb system databases: to ensure virtual machine isolation and self-containment, store these system databases on system drive C.

o We do not recommend the use of SQL Server Data Files in Windows Azure for the tempdb system database for several reasons:

  - Because tempdb is a temporary database, there is no need to port it outside the assigned SQL Server instance.

  - Because using SQL Server Data Files in Windows Azure counts toward your assigned network bandwidth and threshold, you can save network bandwidth and use Windows Azure data disks instead.

o You should place the SQL Server virtual machine in the same Windows Azure affinity group as the storage account that you plan to use for SQL Server Data Files in Windows Azure.

o For each database, use multiple data files (blobs) until you reach the required number of IOPS: because SQL Server can use data files in parallel very efficiently, it’s very easy to scale up on storage performance, at least for data files. Be sure to check your network bandwidth consumption when using multiple data files or blobs with SQL Server Data Files in Windows Azure.

Note: Only one transaction log file can be active per database. If more than one transaction log file is active per database, SQL Server Data Files in Windows Azure cannot be used.

o Even though large blob size allocation is very efficient in Windows Azure Storage (NTFS sparse file mechanism), you should allocate, at creation time, the maximum expected database file size for SQL Server data files and avoid lengthy file expansion operations.

o For transaction log files, SQL Server normally requires the entire file content to be zeroed on the storage system. This is not required for transaction log files based on Azure page blobs: the only part that is initialized is the log tail, which is fixed in size, and this operation is a single, atomic REST call. So, regardless of the size of your transaction log, the time required to initialize it is constant. For this reason, the only constraint to evaluate when you create the transaction log is its cost. Keep in mind that the same considerations about VLF size and count apply to blob-based transaction logs as well.

o Use the SQL Server data compression feature whenever possible to reduce I/O against Windows Azure blob storage. For more information about data compression, see the following topic in SQL Server Books Online:

Data Compression
http://technet.microsoft.com/en-us/library/cc280449.aspx

o Consider using the SQL Server backup compression feature as well, to reduce data size transfer to Windows Azure blob storage during database backup and restore operations. For more information about backup compression, see the following topic in SQL Server Books Online:

Backup Compression (SQL Server)
http://technet.microsoft.com/en-us/library/bb964719.aspx

o Avoid SQL Server shrink operations on database files, because these operations are extremely slow.

Cost

o Because it is not supported, you should disable Windows Azure storage account geo-replication. Disabling this feature can save you 30 percent on usage billing.

o If Windows Azure storage monitoring and logging are required, be sure to use minimal detail levels and short information retention policies.

o If you use a second SQL Server virtual machine for hot standby and you require very quick database failover, leave the virtual machine running, but use the lowest possible Windows Azure virtual machine size: when you need the virtual machine to become active, change the virtual machine size back to the desired size. This simply triggers a virtual machine restart.

o If you use a second SQL Server virtual machine for cold standby and you do not require very quick database failover, after the virtual machine is configured, shut it down and deallocate it, because you will not pay for stopped machines, only for the space occupied by virtual hard disks. Be aware that restarting the virtual machine takes a few minutes.

o Carefully plan database file size for all your files, because you pay for each allocated gigabyte: even if the storage cost is minimal compared to compute resource usage, you may want to review the amount of space used to ensure cost efficiency.

o Avoid unnecessary index maintenance: use adaptive logic in your script to ensure you rebuild or reorganize indexes only if necessary.

Monitoring and management

o Using the Windows Azure Management Portal, add additional key metrics to monitor

performance and availability of the Windows Azure storage account (see “Windows Azure

Management Portal” later in this document).

o Using the Management Portal, set up alerts on storage metrics for KPIs such as I/O requests, availability, throttling, and errors (see “Windows Azure Management Portal” later in this document).

o Back up SQL Server databases directly to Windows Azure blob storage using the feature introduced in SQL Server 2012 SP1 CU2. This approach avoids the use of Windows Azure data disks, instead permitting remote backup to other Windows Azure data centers and helping to ensure maximum portability of backup data. For more information about this feature, see the following:

Tutorial: Getting Started with SQL Server Backup and Restore to Windows Azure Blob Storage Service
http://msdn.microsoft.com/en-us/library/jj720558.aspx

SQL Server Database Backup in Azure IaaS: performance tests
http://blogs.msdn.com/b/igorpag/archive/2013/07/30/sql-server-databasebackup-in-azure-iaas-performance-tests.aspx

o So that you always have a general overview of Windows Azure storage account health and performance, enable Windows Azure storage analytics and metrics collection, at the minimum verbosity level (see “Windows Azure storage logs and analytics” later in this document).

o Be sure to set a reminder before SAS expiration to update the CREDENTIAL object in SQL Server; otherwise access will be denied by the Windows Azure storage infrastructure.

o Be sure to save all the scripts for database and credentials creation in a secure place outside the main SQL Server virtual machine.

o Periodically review SQL Server 2014 performance counters related to SQL Server Data Files in Windows Azure (see “Performance counters” later in this document) and wait statistics (see “SQL Server wait types” later in this document).

High availability and disaster recovery

o The officially supported and recommended mechanism for high availability for SQL Server

2014 (and SQL Server 2012) in Windows Azure Virtual Machines (IaaS) is AlwaysOn

Availability Groups. For more information about AlwaysOn Availability Groups and SQL

Server IaaS, see the following blog post:

SQL Server 2012 AlwaysOn Availability Group and Listener in Azure VMs:

Notes, Details and Recommendations

http://blogs.msdn.com/b/igorpag/archive/2013/09/02/sql-server-2012alwayson-availability-group-and-listener-in-azure-vms-notes-details-andrecommendations.aspx

SQL Server Data Files in Windows Azure also makes it possible to implement different custom high availability mechanisms. A fully functional example is provided in “Implementing a failover cluster mechanism” later in this document.

o If possible, include SQL Server virtual machines and all data and log files in the same storage account. The storage account is the failover unit if a disaster occurs at the level of the Windows Azure data center or storage rack.

o Save the database creation script outside the main SQL Server virtual machine for fast database recovery in case of a virtual machine crash: this script contains the full HTTPS paths to Windows Azure blob storage necessary to reattach the database to a new virtual machine. Be sure also to save the SQL Server credential creation script for the SAS policy. SQL Server needs the credential that this script creates to mount blobs and files. Keep this script in a secure place, because it contains security information.
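The recommendation to refresh the credential before the SAS expires can be sketched as follows. This is an illustration only: the secret shown is a placeholder for a newly generated signature, and the credential name is the container URI used in this paper's examples.

```sql
-- Replace the stored SAS token before the old one expires.
-- The credential name must remain identical to the container URI.
ALTER CREDENTIAL [https://enosg.blob.core.windows.net/sqldatacontainer]
WITH IDENTITY = 'SHARED ACCESS SIGNATURE',
     SECRET = '<new SAS token, starting at sr=...>';  -- placeholder, not a real value

-- Check when the credential was last changed.
SELECT name, modify_date
FROM sys.credentials
WHERE name = N'https://enosg.blob.core.windows.net/sqldatacontainer';
```

Keeping this statement next to the saved credential creation script means the same secured location holds everything needed to restore access.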

For more information about Windows Azure storage performance targets, limits and thresholds, see the

following blog post:

Azure Storage Scalability and Performance Targets

http://msdn.microsoft.com/library/azure/dn249410.aspx
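The backup recommendations in this section (back up directly to blob storage, and use backup compression) can be combined in a single statement. The sketch below assumes the SQL Server 2014 backup-to-URL syntax, where the credential holds the storage account name and an access key rather than an SAS token. The container name backupcontainer and the credential name BackupCredential are hypothetical; enosg is the storage account name taken from the URLs used in this paper.

```sql
-- Credential for backup to URL: identity is the storage account name,
-- secret is one of the storage account access keys (placeholder below).
CREATE CREDENTIAL BackupCredential
WITH IDENTITY = 'enosg',
     SECRET = '<storage account access key>';

-- Compressed backup written directly to a blob in a separate container.
BACKUP DATABASE TestDBonAzure
TO URL = 'https://enosg.blob.core.windows.net/backupcontainer/TestDBonAzure.bak'
WITH CREDENTIAL = 'BackupCredential',
     COMPRESSION,
     STATS = 10;   -- report progress every 10 percent
```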

2.3 Operations

Now that you have your SQL Server instance in a Windows Azure virtual machine and your database is

directly on Windows Azure blob storage using SQL Server Data Files in Windows Azure, consider the

following items related to database maintenance, management, and life cycle:

If you drop the database, the blob files are removed.

If you detach the database, SQL Server releases the lease on the Windows Azure blob files

immediately. The following screen shot of the Windows Azure Management Portal shows the

blob properties after the database is detached.

If you take the database offline, as with detaching, SQL Server releases the lease on the

Windows Azure blob files immediately.

As long as SQL Server maintains a lease on the blob files, you cannot externally drop or modify the files, or mount them in another SQL Server instance. If you need to drop or modify such files, you must detach the database or stop the SQL Server service.

If you need to programmatically break the lease on database files, you can do so if you

incorporate the necessary logic inside SQL Server itself. For more information about how to

break the lease programmatically, see the following blog post:

Blob leases: how to break them from SQL Server

(using SQL CLR and Windows Azure REST API)

http://blogs.technet.com/b/italian_premier_center_for_sql_server/archive/2013/10/24

/blob-leases-how-to-break-them-from-sql-server-via-sql-clr-and-windows-azure-restapi.aspx

From a secondary SQL Server instance, if you try to attach database files that are already being

used by a primary SQL Server instance, you receive an error similar to this one.

Msg 5120, Level 16, State 140, Line 5
Unable to open the physical file "https://enosg.blob.core.windows.net/frcogno/filedata1.mdf". Operating system error 32: "32(The process cannot access the file because it is being used by another process.)".

This error appears because a lease already exists at the Windows Azure blob storage level, and

only one instance at a time can have access.

If you need to attach a previously detached database, even on a different SQL Server instance or

virtual machine, use the CREATE DATABASE Transact-SQL command with the FOR ATTACH

switch as shown here.

CREATE DATABASE [TestDBonAzure]

ON PRIMARY

(NAME = N'file_data1',

FILENAME = N'https://enosg.blob.core.windows.net/frcogno/filedata1.mdf',

SIZE = 10485760KB, MAXSIZE = 1TB, FILEGROWTH = 1024KB)

LOG ON

(NAME = N'file_log1',

FILENAME = N'https://enosg.blob.core.windows.net/frcogno/filelog1.ldf',

SIZE = 1048576KB, MAXSIZE = 1TB, FILEGROWTH = 512MB)

FOR ATTACH
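The offline and detach operations described in this section, both of which cause SQL Server to release the blob leases, can be sketched as follows. The database name is the one used in this paper's examples; the ROLLBACK IMMEDIATE option is an assumption about how you want to treat open transactions.

```sql
USE master;

-- Option 1: take the database offline; SQL Server releases the blob leases.
-- ROLLBACK IMMEDIATE rolls back open transactions of other sessions.
ALTER DATABASE TestDBonAzure SET OFFLINE WITH ROLLBACK IMMEDIATE;

-- Bring it back online on the same instance (the leases are acquired again).
ALTER DATABASE TestDBonAzure SET ONLINE;

-- Option 2: detach the database so that another instance can attach it.
-- This fails if other sessions are still connected to the database.
EXEC sp_detach_db @dbname = N'TestDBonAzure';
```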

3. Implementing a failover cluster mechanism

This section presents a complete custom solution that provides functionality similar to that of on-premises Windows Server failover clusters.

3.1 Introduction

One of the biggest challenges in any IT department is to offer a highly available service, regardless of its

nature. This holds true for Microsoft SQL Server. While on-premises SQL Server installations enable you

to make use of the Windows Server failover cluster technology, as of this writing this solution is not

supported in Windows Azure Virtual Machines (IaaS).

SQL Server 2014, however, with SQL Server Data Files in Windows Azure, enables you to access shared

storage directly. With the required connectivity and security permissions, each blob can be accessed by any SQL Server installation, both on premises and in the cloud. Windows Azure virtual machines have out-of-the-box Internet connectivity, which includes access to the Windows Azure Storage URI, even across data centers.

[Figure: VM A and VM B both connected to the same page blob]

The preceding figure shows that every SQL Server instance that has access to those blobs can attach the

database. You can use this architecture to help protect against software failures.

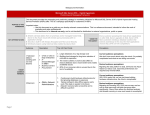

Architecture                  Protection level
Different instances           SQL Server instance failure
Different virtual machines    Instance failure
Different data centers        Data center failure*
Note: Precautions that help protect you from data center failure do not protect you from blob storage

failure, because the blob geo-replication feature is not supported for Microsoft SQL Server database files.

However, you can use geo-replication for database backups.

The Windows Azure platform also supports multiple endpoints, either linked specifically to a virtual machine (stand-alone endpoints) or shared between all virtual machines in the same cloud service (load-balanced endpoints). In the context of this white paper, we use and refer to stand-alone endpoints: these endpoints are mapped to a specific pair of ports, local and remote, and at any given time they point to a specific virtual machine in the deployment. This configuration is discussed in detail in “Endpoint mapping” later in this document.

By migrating these endpoints from one virtual machine to another, an endpoint failover can be simulated,

achieving the same result as an AlwaysOn Availability Group Listener failover.

[Figure: VM A and VM B share a page blob; a single endpoint points to one of the two virtual machines]

For more information, see the following topics on MSDN:

List Cloud Services (http://msdn.microsoft.com/en-us/library/ee460781.aspx).

Get Cloud Service Properties (http://msdn.microsoft.com/en-us/library/windowsazure/ee460806.aspx).

3.2 Shared nothing model in SQL Server Data Files in Windows Azure

The previous section showed that every Windows Azure virtual machine can access the blobs that

compose the database. In order to prevent simultaneous access to the same blob by two or more SQL

Server instances, SQL Server must implement a mechanism similar to the shared nothing model in

Windows Server failover clusters. For more information about the shared nothing model in failover

clusters, see “Cluster Strategy: High Availability and Scalability with Industry-Standard Hardware” at

http://technet.microsoft.com/en-us/library/cc767157.aspx.

In the Windows Azure Storage platform, SQL Server uses the concept of a lease. Each container and blob can have an active lease on it. While leased, a container or blob can be accessed only with a valid lease ID. Because SQL Server does not share the lease ID, it is guaranteed to be the only one that can access the blob (or container) at a given time. There are two types of leases: time based and never expiring (often referred to as fixed). SQL Server 2014 uses time-based leases, so in case of problems, the blobs eventually become available again on their own. The use of time-based leases also means that SQL Server must renew a lease before its expiration. Here is a simplified sequence diagram.

[Sequence diagram: SQL Server sends a lease request to Azure Storage; Azure Storage creates the lease on the object and returns the LeaseID to SQL Server; SQL Server then periodically renews the lease with the LeaseID.]

Note that SQL Server releases the lease if you detach the database manually or if you shut down SQL

Server gracefully. While the lease is active, no REST API request is allowed unless it specifies the lease ID.

For example, the Put Page operation—used by SQL Server to update a database page—is refused unless

a valid lease ID is specified. For more information about REST API requests, see “Put Page (REST API)”

(http://msdn.microsoft.com/en-us/library/windowsazure/ee691975.aspx). You can see the lease status

of a blob by clicking Edit in the Windows Azure Management Portal.

Because, by definition, a single database file blob can be leased by only one SQL Server instance at any given time, you can use the lease as the guarantee required to replicate the shared nothing model. A database can be composed of many blobs, and each blob maintains an independent lease. It is impossible, however, to attach different files of the same database in different instances, because an instance must have access to the PRIMARY filegroup in order to bring the database online. Because there is only a single PRIMARY filegroup in a given database, it is not possible to attach the same database in two different instances.

The remainder of the section refers to the Microsoft SQL Server to Windows Azure Helper Library, which

is an easy-to-use tool that is available for free on CodePlex: https://sqlservertoazure.codeplex.com. The

concepts discussed here can be applied regardless of what kind of technology you use. For more

information about how to install the library on your instance, see the appendix.

Using the library, call the List Blobs REST API method (http://msdn.microsoft.com/en-us/library/windowsazure/dd135734.aspx) and retrieve the current lease status of a given blob.

Use the sys.master_files catalog view (http://technet.microsoft.com/en-us/library/ms186782.aspx) to

identify the SQL Server databases attached to an instance using the SQL Server Data Files in Windows Azure

feature. Because the blobs are in use, you can confirm the existence of a valid lease.

Note: These examples use the MERGE join hint to minimize the number of REST calls. The purpose of this

hint is performance-related and therefore out of scope of this white paper.
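Independently of the helper library, the catalog view on its own is enough to list which database files of an instance live on blob storage. The following is a minimal sketch that relies only on sys.master_files; filtering on the https:// prefix of the physical name is an assumption about how to recognize blob-based files.

```sql
-- Database files of this instance that are stored as Windows Azure blobs.
SELECT DB_NAME(mf.database_id)            AS database_name,
       mf.name                            AS logical_name,
       mf.type_desc,                      -- ROWS or LOG
       mf.physical_name                   AS blob_url,
       CAST(mf.size AS bigint) * 8 / 1024 AS size_mb   -- size is stored in 8-KB pages
FROM sys.master_files AS mf
WHERE mf.physical_name LIKE N'https://%'
ORDER BY database_name, mf.file_id;
```

Each blob_url returned here is a blob whose lease status can then be checked through the List Blobs call described above.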

If you detach the database, or if a failure that causes the instance to be unable to keep the lease active

occurs, the blobs become available.

In this situation any SQL Server instance that can connect to Windows Azure storage can also attach the

database (given the proper credentials).
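What a second instance has to do once the blobs are unleased can be sketched as follows. This is an illustration under stated assumptions: it reuses the container and file names from the database creation example earlier in this paper, the SAS token is a placeholder, and it lists only the file locations, which is the minimum that FOR ATTACH needs.

```sql
-- Run on the instance that takes over (for example, VM B).
-- 1. Make sure the credential for the container exists on this instance.
IF NOT EXISTS (SELECT 1 FROM sys.credentials
               WHERE name = N'https://enosg.blob.core.windows.net/sqldatacontainer')
    CREATE CREDENTIAL [https://enosg.blob.core.windows.net/sqldatacontainer]
    WITH IDENTITY = 'SHARED ACCESS SIGNATURE',
         SECRET = '<SAS token for the container>';  -- placeholder

-- 2. Attach the database from its blobs. This succeeds only after the
--    previous owner's leases have been released or have expired.
CREATE DATABASE TestDBonAzure
ON (FILENAME = 'https://enosg.blob.core.windows.net/sqldatacontainer/filedata1.mdf'),
   (FILENAME = 'https://enosg.blob.core.windows.net/sqldatacontainer/filedata2.mdf'),
   (FILENAME = 'https://enosg.blob.core.windows.net/sqldatacontainer/filedata3.mdf'),
   (FILENAME = 'https://enosg.blob.core.windows.net/sqldatacontainer/filelog1.ldf')
FOR ATTACH;
```

If the original instance still holds the leases, the attach fails with error 5120 (operating system error 32), which a polling job can treat as "not yet available".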

3.3 Endpoint mapping

Whenever you create a virtual machine, you are given the option to add it to an existing cloud service. In

general, this enables you to horizontally scale to achieve the required performance. In order to exploit

cloud horizontal scaling, all nodes must be homogeneous. Windows Azure balances the connections across the available nodes.

While this feature is essential for Web Sites, and in general for stateless services, SQL Server does not

support a scale-out architecture. (SQL Server Reporting Services supports this configuration; however, it

is not discussed here. For more information about using Reporting Services in a scale-out architecture,

see “Configure a Native Mode Report Server Scale-Out Deployment” at http://msdn.microsoft.com/en-us/library/ms159114.aspx.)

Another, less common approach is to statically bind a cloud port to a specific node instance. This creates a mapping between an external port and a local port, which allows you to reach the internal port by connecting to the public external port. This process is often called port forwarding. In this configuration you can forward one (or more) SQL Server TCP ports to static Windows Azure cloud ports. You can also map multiple external ports to the same internal port. This kind of decoupling enables you to simulate different endpoints while in fact using only one.

The Windows Azure port forwarding can be changed using the Management REST API. Remapping the

endpoint to a different SQL Server instance disrupts the existing socket connection. This disruption is

similar to what happens during a network address resource failover phase in failover clusters. SQL Server

TDS protocol forces a new authentication when endpoints are remapped. This authentication should not

be a problem because your applications should already be able to handle transient errors gracefully: this

best practice holds true for this framework too.

In this framework, the examples in this paper work at the database level: each database can fail over on its own. An endpoint needs to be exposed for each clustered database. The framework helps ensure that, at any given time, the endpoint is mapped to the instance that owns the related database.

[Figure: the cloud service (VIP) exposes remote ports <Remote Port 1> through <Remote Port n>, each mapped to a local port (<Port 1> through <Port n>) on VM A or VM B.]

The preceding figure shows how a single cloud service (that holds the virtual IP) allows you to map remote

ports to ports in the virtual machines. Note that you can have more cloud service ports besides the SQL

TCP endpoints. Those ports can be mapped to other services (for example, Remote Desktop). There is no

need to use multiple TCP/IP ports in SQL Server. You can reuse the same local port and map it to multiple

remote ports.

[Figure: a single local port (<Port 1>) on VM A and on VM B, mapped to multiple remote ports (<Remote Port 1> through <Remote Port n>) on the cloud service (VIP).]

This configuration is easier to maintain from the SQL Server perspective; it is also more secure because it

reduces the attack surface area.

3.4 Polling architecture

In the failover cluster architecture the Windows Cluster service handles intra-node communication and

the failure detection mechanism. This is achieved, among other ways, by heartbeat communication

between the nodes. In the model discussed here there is no external service that queries the status of the

other instances. This model relies instead on the lease status of the database’s blobs. That means that

each instance that participates in this topology should query the database blob lease status at regular

intervals. This process is often referred to as polling. As soon as an instance detects an unleased database

(in other words, with its blobs unlocked) it should try to attach it. The database downtime is in this case

directly influenced by the polling frequency.

In this case, the expected downtime in case of a failure can be expressed in this way:

$$\tau = \frac{L + P}{2} + R$$

Where:

τ is the average downtime.
L is the SQL Server lease time (60 seconds).
P is the polling interval.
R is the recovery time of the database.

In this architecture you can express P directly and—with less precision—you can influence R by specifying

the recovery interval configuration option (for more information about this option, see

http://technet.microsoft.com/en-us/library/ms191154.aspx). For example, if you poll the blobs every 120

seconds and the recovery time is 15 seconds, you can expect the database to be back online after an

average of 1 minute and 45 seconds. If you add the endpoint to the equation, it looks like this:

$$\tau = \frac{L + P}{2} + R + E$$

Where:

E is the time needed to move an endpoint from the old owner to the new one. This time is

in general less than 30 seconds, but because the Windows Azure platform is constantly

improving, you should perform your own testing.
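As a worked example, take the values already used in this section (L = 60 seconds, P = 120 seconds, R = 15 seconds) and assume E = 30 seconds, the upper end of the range mentioned above. One way to read the first term, offered here as an interpretation rather than a statement from the platform documentation, is that a failure happens at a random point within the lease period and within the polling interval, so on average half of each remains.

```latex
\tau = \frac{L + P}{2} + R + E
     = \frac{60 + 120}{2} + 15 + 30
     = 90 + 15 + 30
     = 135 \text{ seconds}
```

Without the endpoint term the same values give 105 seconds, which matches the 1 minute and 45 seconds quoted earlier.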

3.5 Limitations of this architecture

3.5.1 No metadata sharing

While this architecture allows you to achieve independence between the database and the holding

instance—as far as the partial containment allows—there are some limitations. The most notable is the

need to maintain a shared repository in the instances so that you know which blobs to watch and monitor.

This can be easily achieved through a simple backup and restore operation (using Windows Azure Storage

as the backup destination makes this even easier). As the number of instances that participate in this

architecture grows, however, the alignment task becomes more demanding. This is somewhat balanced by how easy it is to add another instance to the architecture: all you have to do is copy the metadata database and start the polling jobs.

Another approach—the one taken by the framework explained in the appendix—is to use the Table

service in Windows Azure Storage. Those tables are, by definition, shared among every instance and

therefore are ideal candidates for shared metadata. In Windows Azure, tables do not support distributed

transactions: each call is atomic on its own. For the purpose of the metadata sharing this is not an issue,

because the examples in this paper access those tables in read-only mode most of the time and in write mode only through user interaction. A complete discussion of Windows Azure tables is beyond the scope of this white paper. For more information about Windows Azure tables, see “Windows Azure Table Storage and Windows Azure SQL Database - Compared and Contrasted” (http://msdn.microsoft.com/en-us/library/windowsazure/jj553018.aspx).

3.5.2 Failover at database level

The other limitations are directly related to the partial database containment. You still have to align

noncontained entities manually. You can use the sys.dm_db_uncontained_entities dynamic management

view (http://technet.microsoft.com/en-us/library/ms191154.aspx) to help locate those objects. This task

is commonly done when you use AlwaysOn Availability Groups or SQL Server Mirroring. For more

information about how to perform those tasks, see SQL Server Books Online

(http://msdn.microsoft.com/en-us/library/ms130214(v=sql.120).aspx).
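
A minimal sketch of how the view can be queried to list the entities that cross the containment boundary. Run it in the context of the partially contained database. The name resolution applies only to rows that describe objects (class 1); for other classes those two columns are left NULL here, and you would resolve major_id against the matching catalog view.

```sql
-- Run inside the partially contained database you plan to fail over.
SELECT
    ue.class_desc,
    CASE WHEN ue.class = 1 THEN OBJECT_SCHEMA_NAME(ue.major_id) END AS entity_schema,
    CASE WHEN ue.class = 1 THEN OBJECT_NAME(ue.major_id) END        AS entity_name,
    ue.statement_type,      -- type of statement that crosses the boundary
    ue.feature_name,        -- external name of the uncontained feature
    ue.feature_type_name    -- kind of feature
FROM sys.dm_db_uncontained_entities AS ue
ORDER BY ue.class_desc, entity_name;
```

Each returned row points to something that must be aligned manually on every instance that can host the database.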

3.5.3 No availability group

There is no communication between the nodes; each node attempts to attach a database as soon as it

notices its blobs are unlocked. In this scenario there can be no guarantee that a group of databases would

end up attached to the same instance. For this reason you should consider mapping an endpoint to a

single database.

3.6 Implementation

The following sections cover in detail all the requirements for a fully functional solution, along with

descriptions of all of the configuration objects that are necessary for the implementation.

3.6.1 Requirements

In order to implement this mechanism you must have:

At least two Windows Azure virtual machines in the same cloud service with SQL Server 2014

installed.

A TCP stand-alone endpoint available in Windows Azure for each database to be clustered.

The master key for each Windows Azure storage account you want to use.

The Windows Azure management certificate including its private key.

A valid SAS for each database blob container.

Important: Although you can use multiple Windows Azure storage accounts for multiple databases, we

highly recommend that you place all files that are related to a single database into the same account; this

is to avoid potential situations where storage accounts could fail over independently to different

Windows Azure data centers.

3.6.1.1 Virtual machines in the same Windows Azure cloud service

In order to support endpoint mapping, every SQL Server instance—or better, its virtual machine—must

share the same Windows Azure cloud service. This can be done by selecting the appropriate cloud service

during virtual machine instantiation.

3.6.1.2 TCP endpoint

Although Windows Azure endpoint management is handled by the solution’s logic, you must open a TCP

listener in SQL Server manually. You can map multiple external ports to the same local port, which is the

solution we recommend, but you can also add more TCP endpoints to SQL Server directly.

There are many ways to do this. One is to open the SQL Server configuration manager and explore the

network configuration node of your instance. From there you have to double click the TCP/IP section.

You can add as many TCP ports as you want, separating them with commas.

Restart the instance to activate your changes. Also note that if you specify a port in TCP Port, SQL Server

will not start if that port is unavailable. Using TCP Dynamic Ports would fix this, but it is not compatible

with this solution because it requires a fixed port binding between SQL Server and Windows Azure.
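
After the restart, one way to confirm that the instance is listening on the fixed ports you configured is to query the sys.dm_tcp_listener_states dynamic management view. This is only a verification sketch; it requires the VIEW SERVER STATE permission.

```sql
-- Lists the TCP listeners that accept Transact-SQL connections.
-- Each fixed port entered in SQL Server Configuration Manager should
-- appear here with state_desc = 'ONLINE'.
SELECT ip_address, port, type_desc, state_desc, start_time
FROM sys.dm_tcp_listener_states
WHERE type_desc = N'TSQL'
ORDER BY port;
```

If a configured port is missing from the result, check the SQL Server error log for a binding failure before mapping the Windows Azure endpoint to it.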

3.6.1.3 Windows Azure storage shared key

The Windows Azure storage account master key is required by the Windows Azure storage REST API in

order to sign the requests. Each key is tied to a specific storage account. Keep in mind that you can have

more than one storage account per Windows Azure subscription.

To find the shared keys, use the Storage section of the Windows Azure Management Portal

(https://manage.windowsazure.com):

Click Storage, and then click Manage Access Keys.

There are always two shared keys. You can use either one.

3.6.1.4 Windows Azure management certificate

The endpoint management REST API calls require you to add a valid management certificate to be

successful. These certificates can be obtained in various ways. For testing purposes you can use the

makecert and pvk2pfx tools.

For example, create a certificate azuretest.cer.

makecert -r -sv C:\temp\azuretest.pvk -n "CN=Azure Self signed certificate" C:\temp\azuretest.cer

Then combine the private key and the certificate into a .pfx file, providing the file locations on the local computer and the passwords.

pvk2pfx -pvk C:\temp\azuretest.pvk -pi <Password> -spc C:\temp\azuretest.cer -po <Password> -pfx C:\temp\azuretest.pfx

You will end up with three files:

AzureTest.cer – This is the public key only certificate.

AzureTest.pvk – This is the certificate private key.

AzureTest.pfx – This is the certificate with both the private key and the public key.

For more information, see the following topics on MSDN:

Makecert.exe (Certificate Creation Tool)

(http://msdn.microsoft.com/library/bfsktky3(v=vs.110).aspx)

Pvk2Pfx (http://msdn.microsoft.com/en-us/library/windows/hardware/ff550672.aspx)

You have to upload the public key only certificate to Windows Azure. You can use the Windows Azure

Management Portal.

You need to import the certificate that contains both the private and public keys (the .pfx file) into every SQL Server instance that

will form the cluster. You must install it into the “My” store of the SQL Server engine execution account.

For that task you can either use the Microsoft Management Console (MMC) or the Windows Server 2012

cmdlet Import-Certificate. For more information, see the following topics on TechNet:

Import a Certificate (http://technet.microsoft.com/en-us/library/cc754489.aspx).

Import-Certificate (http://technet.microsoft.com/en-us/library/hh848630.aspx).

As a best practice, use certificates dedicated to this specific purpose. You should secure the certificates very carefully,

because anyone who gains access to them has complete control over your Windows Azure account. We

strongly recommend that you mark the private key as nonexportable when importing the certificates into the certificate

stores, and that you never store the .pfx file on the server itself.

3.6.1.5 Valid SAS for the database blob containers

SQL Server Data Files in Windows Azure requires you to specify a valid shared access signature (SAS) in

order to access its blobs without having to authenticate every request. This is a very important

performance feature. There are two types of SASs: direct and policy based. Direct SASs sign the resource

with the shared access key itself. This means that the only way to invalidate the SAS is to regenerate the

shared access key. If you want to invalidate a direct SAS, you must invalidate all of them. For this reason

alone, you should use the policy based SASs, even though they are more complex. You can still use direct

SASs if you want to.
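
For context, the following minimal sketch shows how SQL Server consumes a SAS once you have one: the token is stored in a credential whose name is the container URL. The storage account, container, and token values are placeholders. For a policy based SAS, the token refers to the container policy by name through its si parameter instead of carrying the permissions and validity dates itself.

```sql
-- Assumption: <your storage account>, <your container> and <your SAS token>
-- are placeholders. The credential name must match the container URL.
CREATE CREDENTIAL [https://<your storage account>.blob.core.windows.net/<your container>]
WITH IDENTITY = 'SHARED ACCESS SIGNATURE',  -- literal value, required for SAS credentials
     SECRET   = '<your SAS token>';         -- SAS query string, without the leading '?'
```

Because the credential stores only the token, invalidating the policy on the container is enough to revoke access; the credential itself does not need to change until you issue a new SAS.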

For more information about direct SASs and how to generate them from SQL Server, see the following

blog post:

About Container Shared Access Signature for SQL Server Data Files in Windows Azure

(http://blogs.technet.com/b/italian_premier_center_for_sql_server/archive/2013/12/05/how-to-build-your-container-shared-access-signature-for-sqlxi-from-sql-server.aspx)

For more information about SAS, see the following:

Create and Use a Shared Access Signature (http://msdn.microsoft.com/en-us/library/windowsazure/jj721951.aspx)

Introducing Table SAS (Shared Access Signature), Queue SAS and update to Blob SAS

(http://blogs.msdn.com/b/windowsazurestorage/archive/2012/06/12/introducing-table-sas-shared-access-signature-queue-sas-and-update-to-blob-sas.aspx)

Policy based SASs require you to create the container policy first. The advantage of a policy based SAS is

that you can invalidate the SAS by invalidating the policy alone. This makes SAS control more granular.

You can create the policy in various ways. This white paper shows you how to do that using the Microsoft SQL Server

to Windows Azure helper library (available at https://sqlservertoazure.codeplex.com/) because in this

tool you work with familiar Transact-SQL statements. You can use the tool that works best for you.

In this example you create a container called “demoacl” and attach to it a policy called “frcogno Demo

ACL Policy” that gives full control over the container and its contents. Note that whenever you see <your

storage account> and <your storage account shared key> you should replace those with your

storage account name and storage account shared key, respectively.

First, create a container.

EXEC [Azure].CreateContainer

'<your storage account>', '<your storage account shared key>', 1,

'demoacl', 'Off';

Use the following command to verify that the container was created.

SELECT * FROM [Azure].ListContainers(

'<your storage account>', '<your storage account shared key>', 1, NULL);

Using the following stored procedure, create the policy and attach it to the container you just created.

EXEC [Azure].AddContainerACL
'<your storage account>', '<your storage account shared key>', 1,
'demoacl',                 -- Container name
'Off',                     -- Public access?
'frcogno Demo ACL Policy', -- Policy name
'2010-01-01',              -- Policy validity start
'2014-12-31',              -- Policy expiration
1,                         -- Give read access?
1,                         -- Give write access?
1,                         -- Give delete blob privilege?
1                          -- Give list blob privilege?

Note that you must specify a validity interval. This example uses a long time span. As a best practice, you

should find the smallest interval that is manageable.

Check to see that your own policy is in place.

SELECT * FROM [Azure].GetContainerACL(

'<your storage account>', '<your storage account shared key>', 1,

'demoacl', NULL, NULL, NULL);

To check the policy, upload a sample blob. You can use a simple text file.

DECLARE @buffer VARBINARY(MAX);

SET @buffer = CONVERT(VARBINARY(MAX), N'This text comes from SQL Server!' +

CONVERT(NVARCHAR, GETDATE()));

PRINT @buffer;

EXEC [Azure].CreateOrReplaceBlockBlob

'<your storage account>', '<your storage account shared key>', 1,

'demoacl',

'sample.txt',

@buffer,

'text/plain',

'ucs-2';

GO

Next, check the file in your container.

SELECT * FROM [Azure].ListBlobs(

'<your storage account>', '<your storage account shared key>', 1,

'demoacl', 1,1,1,1,NULL)

List blobs in a container

Because the container was created as private, it should not be possible to access the blob without

authentication. Confirm this by pasting the blob URI in a browser.