
Data Warehouse, Meeting 2

Survey

Document related concepts

Transcript

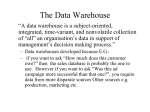

Chapter 2. Data Warehouse

History
Data warehouses are a distinct type of computer database that was first developed during the late 1980s and early 1990s. They were developed to meet a growing demand for management information and analysis that operational systems could not satisfy. Operational systems were unable to meet this need for a range of reasons:
- The processing load of reporting reduced the response time of the operational systems.
- The database designs of operational systems were not optimized for information analysis and reporting.
- Most organizations had more than one operational system, so company-wide reporting could not be supported from a single system.
- Developing reports in operational systems often required writing specific computer programs, which was slow and expensive.

Data warehouses have evolved through several fundamental stages:
- Offline Operational Databases: data warehouses in this initial stage are developed by simply copying the database of an operational system to an offline server, where the processing load of reporting does not affect the operational system's performance.
- Offline Data Warehouse: data warehouses in this stage of evolution are updated on a regular time cycle (usually daily, weekly or monthly) from the operational systems, and the data is stored in an integrated, reporting-oriented data structure.
- Real Time Data Warehouse: data warehouses at this stage are updated on a transaction or event basis, every time an operational system performs a transaction (e.g. an order, a delivery, or a booking).
- Integrated Data Warehouse: data warehouses at this stage are used to generate activity or transactions that are passed back into the operational systems for use in the daily activity of the organization.
Definition
Definition 1: A decision support database that is maintained separately from the organization's operational database.
Definition 2: A database that supports information processing by providing a solid platform of consolidated, historical data for analysis.
Definition 3 (Ralph Kimball): A data warehouse is a copy of transaction data specifically structured for query and analysis.
Definition 4 (Barry Devlin): A data warehouse is a single, complete and consistent store of data obtained from a variety of sources and made available to end users in a way they can understand and use in a business context.
Definition 5 (Ken Orr): A data warehouse is a facility to provide easy access to quality, integrated enterprise data by both professional and non-professional end users.
Definition 6: The simplistic view of a data warehouse is that it is historical data about your corporation that has some or all of the characteristics listed below.
- Application independent
- Collected at a moment in time that is representative of the business cycle
- Stored in a fashion easily understood by a nontechnical individual
- A model has been created for it.
- Metadata has been created for it.
Generally, in a data warehouse environment you have to create derived data. You create derived data by using one or more pieces of operational data to produce a new piece of information that is not stored in the operational system but is required by data warehouse users.
Definition 7 (W. H. Inmon): A data warehouse is a subject-oriented, integrated, time-variant, and nonvolatile collection of data in support of management's decision-making process.
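The derived-data idea above can be illustrated with a short Python sketch. The order-line layout, the customer codes, and the choice of "revenue per customer per year" as the derived value are hypothetical; the point is only that the warehouse holds a value computed from several operational fields rather than one copied from the operational system.

```python
from collections import defaultdict
from datetime import date

# Hypothetical operational order lines: (customer, order date, quantity, unit price).
order_lines = [
    ("C1", date(2007, 3, 14), 2, 10.0),
    ("C1", date(2007, 11, 2), 1, 25.0),
    ("C2", date(2007, 6, 30), 5, 4.0),
    ("C1", date(2008, 1, 9), 3, 10.0),
]


def derive_yearly_revenue(lines):
    """Derive revenue per (customer, year), a value the operational system never stores."""
    totals = defaultdict(float)
    for customer, order_date, qty, unit_price in lines:
        line_total = qty * unit_price  # derived from two operational fields
        totals[(customer, order_date.year)] += line_total  # plus the year of a third
    return dict(totals)


print(derive_yearly_revenue(order_lines))
```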
Data warehousing: the process of constructing and using data warehouses.

Subject-Oriented
- Organized around major subjects, such as customer, product, sales.
- Focuses on the modeling and analysis of data for decision makers, not on daily operations or transaction processing.
- Provides a simple and concise view around particular subject issues by excluding data that are not useful in the decision support process.

Integrated
- Constructed by integrating multiple, heterogeneous data sources: relational databases, flat files, on-line transaction records.
- Data cleaning and data integration techniques are applied to ensure consistency in naming conventions, encoding structures, attribute measures, etc. among different data sources. E.g., hotel price: currency, tax, breakfast covered, etc.
- When data is moved to the warehouse, it is converted.

Time Variant
- The time horizon for the data warehouse is significantly longer than that of operational systems.
  - Operational database: current value data.
  - Data warehouse data: provides information from a historical perspective (e.g., past 5-10 years).
- Every key structure in the data warehouse contains an element of time, explicitly or implicitly, but the key of operational data may or may not contain a "time element".

Nonvolatile
- A physically separate store of data transformed from the operational environment.
- Operational update of data does not occur in the data warehouse environment.
  - Does not require transaction processing, recovery, and concurrency control mechanisms.
  - Requires only two operations in data accessing: initial loading of data and access of data.

Data Warehouse vs. Heterogeneous DBMS
- Traditional heterogeneous DB integration uses a query-driven approach:
  - Build wrappers/mediators on top of heterogeneous databases.
  - When a query is posed to a client site, a meta-dictionary is used to translate the query into queries appropriate for the individual heterogeneous sites involved, and the results are integrated into a global answer set.
- Data warehouse: update-driven, high performance.
  - Information from heterogeneous sources is integrated in advance and stored in warehouses for direct query and analysis.

Data Warehouse vs. Operational DBMS
- OLTP (on-line transaction processing)
  - Major task of traditional relational DBMS.
  - Day-to-day operations: purchasing, inventory, banking, manufacturing, payroll, registration, accounting, etc.
- OLAP (on-line analytical processing)
  - Major task of data warehouse system.
  - Data analysis and decision making.
- Distinct features (OLTP vs. OLAP):
  - User and system orientation: customer vs. market.
  - Data contents: current, detailed vs. historical, consolidated.
  - Database design: ER + application vs. star + subject.
  - View: current, local vs. evolutionary, integrated.
  - Access patterns: update vs. read-only but complex queries.

OLTP vs. OLAP
| Feature | OLTP | OLAP |
|---|---|---|
| users | clerk, IT professional | knowledge worker |
| function | day to day operations | decision support |
| DB design | application-oriented | subject-oriented |
| data | current, up-to-date; detailed, flat relational; isolated | historical; summarized, multidimensional; integrated, consolidated |
| usage | repetitive | ad-hoc |
| access | read/write; index/hash on prim. key | lots of scans |
| unit of work | short, simple transaction | complex query |
| # records accessed | tens | millions |
| # users | thousands | hundreds |
| DB size | 100MB-GB | 100GB-TB |
| metric | transaction throughput | query throughput, response |

February 28, 2008, Data Mining: Concepts and Techniques

Why Separate Data Warehouse?
High performance for both systems:
- DBMS: tuned for OLTP: access methods, indexing, concurrency control, recovery.
- Warehouse: tuned for OLAP: complex OLAP queries, multidimensional view, consolidation.
Different functions and different data:
- Missing data: decision support (DS) requires historical data, which operational DBs do not typically maintain.
- Data consolidation: DS requires consolidation (aggregation, summarization) of data from heterogeneous sources.
- Data quality: different sources typically use inconsistent data representations, codes and formats, which have to be reconciled.
Note: there are more and more systems that perform OLAP analysis directly on relational databases.

Data Warehouse Usage
Three kinds of data warehouse applications:
- Information processing: supports querying, basic statistical analysis, and reporting using crosstabs, tables, charts and graphs.
- Analytical processing: multidimensional analysis of data warehouse data; supports basic OLAP operations such as slice-dice, drilling, and pivoting.
- Data mining: knowledge discovery from hidden patterns; supports associations, constructing analytical models, performing classification and prediction, and presenting the mining results using visualization tools.

Advantages of Data Warehouse
There are many advantages to using a data warehouse; some of them are:
- Data warehouses enhance end-user access to a wide variety of data.
- Decision support system users can obtain specified trend reports, e.g. the item with the most sales in a particular area within the last two years.
- Data warehouses can be a significant enabler of commercial business applications, particularly customer relationship management (CRM) systems.

Concerns on Data Warehouse
- Extracting, transforming and loading data consumes a lot of time and computational resources.
- Data warehousing project scope must be actively managed to deliver a release of defined content and value.
- Compatibility problems with systems already in place.
- Security could develop into a serious issue, especially if the data warehouse is web accessible.
- Data storage design controversy warrants careful consideration and perhaps prototyping of the data warehouse solution for each project's environment.

Metadata
Metadata is succinctly defined as data about data. It is defined as the complete knowledge of the end user, application programmer, and logical and physical data modelers about a single piece of data. Metadata provides a mechanism that makes it easy for end users to search and determine whether this is the data they need. In the case of a data warehouse, data has no meaning without the metadata. This information can and should be collected throughout the development process and should be a living resource, updated with each new change or addition to the data warehouse.
For example, Customer Name:
1. Easy answer, no definition required: wrong!
2. This is the name of a person or organization that does business with our company. It is obtained through the following systems: X, Y, and Z. It is 45 characters long. It is in last name, first name, middle initial format for individuals and in first name to last name format for companies. It is captured on a weekly basis. It is related to account number and has additional associated data. It is purged when the customer has had all accounts closed for a minimum of one year.

Three Data Warehouse Models
- Enterprise warehouse: collects all of the information about subjects spanning the entire organization.
- Data mart: a subset of corporate-wide data that is of value to a specific group of users. Its scope is confined to specific, selected groups, such as a marketing data mart.
  - Independent vs. dependent (directly from warehouse) data mart.
- Virtual warehouse:
  - A set of views over operational databases.
  - Only some of the possible summary views may be materialized.

Data Warehouse Development: A Recommended Approach
(Figure labels: Define a high-level corporate data model; Model refinement; Data Mart; Enterprise Data Warehouse; Distributed Data Marts; Multi-Tier Data Warehouse.)

Data Mart
Definition 1 (Aaron Zornes of Meta Group): A data mart is a subject- or department-oriented data warehouse. It can include data duplicated from a corporate data warehouse and/or local data.
Definition 2 (Gartner Group): A data mart is a decentralized subset of data from the repository designed to support the requirements of a specific marketing operation or analysis.
Definition 3 (Bill Inmon): A data mart is the departmental data warehouse that is used to maintain departmental information extracted from the enterprise data warehouse.
In many cases, a data mart is specific to a group, department, or division. The data mart model can be built on either the entity-relationship diagram or the star schema. The figure "Data Warehouse Tiered Architecture" shows the preferred movement of data from the operational level through the data warehouse to the data mart. In this figure, the data mart is created from the data warehouse, but it could also be created from the operational data. The key point is that the split into a global data warehouse and a data mart allows for optimization of design: at the global data warehouse level for data input and extract, and at the data mart level for data manipulation by users. Once data has arrived at the data mart, users will want to be able to access it easily. Decision support systems (DSSs) provide the means to access data mart data. There can be multiple data marts inside a single corporation, each relevant to one or more business units for which it was designed.
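A minimal sketch of the virtual warehouse and dependent data mart described above, using Python's standard sqlite3 module. All table, view, and column names and the sample rows are hypothetical. The view is recomputed from the operational table each time it is queried, the `m_` table materializes one of the possible summary views, and the marketing mart is extracted from a warehouse copy rather than directly from the operational table.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# Operational table (hypothetical schema).
cur.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, region TEXT, dept TEXT, amount REAL)")
cur.executemany(
    "INSERT INTO orders (region, dept, amount) VALUES (?, ?, ?)",
    [("North", "marketing", 120.0), ("North", "sales", 80.0),
     ("South", "marketing", 50.0), ("South", "marketing", 30.0)],
)

# Virtual warehouse: a summary view over the operational table. Nothing is stored;
# the view is recomputed from `orders` every time it is queried.
cur.execute("""CREATE VIEW v_sales_by_region AS
               SELECT region, SUM(amount) AS total FROM orders GROUP BY region""")

# Materializing one of the possible summary views: store its current result as a table.
cur.execute("CREATE TABLE m_sales_by_region AS SELECT * FROM v_sales_by_region")

# Warehouse copy loaded from the operational table, and a dependent data mart
# extracted from the warehouse for one group of users (marketing).
cur.execute("CREATE TABLE dw_orders AS SELECT * FROM orders")
cur.execute("CREATE TABLE mart_marketing AS SELECT region, amount FROM dw_orders WHERE dept = 'marketing'")

# A new operational transaction arrives after the tables above were built.
cur.execute("INSERT INTO orders (region, dept, amount) VALUES ('North', 'sales', 100.0)")

view_north = cur.execute("SELECT total FROM v_sales_by_region WHERE region = 'North'").fetchone()[0]
stored_north = cur.execute("SELECT total FROM m_sales_by_region WHERE region = 'North'").fetchone()[0]
mart_total = cur.execute("SELECT SUM(amount) FROM mart_marketing").fetchone()[0]
print(view_north, stored_north, mart_total)
```

After the new order is inserted, the view already reflects it (North = 300.0) while the materialized table still holds the value from when it was built (North = 200.0). That trade-off between freshness and query cost is why only some summary views are materialized.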
Data marts may or may not be dependent on or related to other data marts in a single corporation.

Data Warehouse: A Multi-Tiered Architecture
(Figure: Data Sources (operational DBs, other sources) feed Extract, Transform, Load, Refresh, supervised by a Monitor & Integrator with Metadata; Data Storage holds the data warehouse and data marts; the OLAP Engine (OLAP server) serves the Front-End Tools: analysis, query, reports, data mining.)

Reasons for creating a data mart
- Easy access to frequently needed data
- Creates a collective view by a group of users
- Improves end-user response time
- Ease of creation
- Lower cost than implementing a full data warehouse
- Potential users are more clearly defined than in a full data warehouse

There are two methodologies for data warehouse design in relation to data marts:
- Kimball, in 1997, stated that "...the data warehouse is nothing more than the union of all the data marts", indicating a bottom-up data warehousing methodology in which individual data marts providing thin views into the organizational data could be created and later combined into a larger, all-encompassing data warehouse.
- Inmon responded in 1998 by saying that the data warehouse should be designed from the top down to include all corporate data. In this methodology, data marts are created only after the complete data warehouse has been created.

Data Modeling
There are two leading approaches to organizing the data in a data warehouse: the dimensional approach advocated by Ralph Kimball and the normalized approach advocated by Bill Inmon. While operational systems are optimized for simplicity and speed of modification (see OLTP) through heavy use of database normalization and an entity-relationship model (normalized model), the data warehouse is optimized for reporting and analysis (online analytical processing, or OLAP). Frequently, data in data warehouses is heavily denormalized, summarized, or stored in a dimension-based model.

a. Entity Relationship Model (Normalized Approach)
An entity relationship model (normalized approach) is represented by entities, their elements, and the relationships among entities. An entity is an item that can be represented by a number of elements. It is perhaps easier to understand what an entity is if you identify an entity with a noun, such as customer, account, sales, or product. An element is an individual piece of information pertaining to an entity. For example, a customer has a name, a phone number, a social security number, an age, and a title. Each of these elements is a further description of the entity.
In creating the entity relationship model, there is a normalization process. For example, initially one may identify an address as an element of a customer. During further investigation it is determined that a customer may have more than one address. To normalize this, the information associated with address is removed from the customer, and a new entity called address is created. A relationship between customer and address is created, indicating that for each customer there may be many addresses.
In this method, the data in the data warehouse is stored in third normal form. An entity relationship model is built to satisfy any query, not specific queries. For this reason, there are usually a larger number of tables with a larger number of elements contained within. If a user wants only customer data, the entity relationship model can suffice quite well. If a user wants customer and address data, a simple join can suffice. However, if a user wants sales by customer, by product, for the quarter (the kind of query a star schema is designed for), the query is not easy. The tools available to satisfy the query are few, and the response time is almost certainly not subsecond, because entity relationship models are not tuned for particular queries.
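The customer/address normalization step described above can be sketched as a small third-normal-form schema in Python's built-in sqlite3 module. Table names, column names, and sample rows are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE customer (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL
);
-- Address started as an element of customer. Because one customer can have
-- many addresses, it is moved into its own entity that points back to customer.
CREATE TABLE address (
    address_id  INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customer(customer_id),
    street      TEXT NOT NULL
);
INSERT INTO customer VALUES (1, 'Johnson'), (2, 'Clinton');
INSERT INTO address VALUES
    (10, 1, '1 Downing Street'),
    (11, 1, '5 Baker Street'),
    (12, 2, '2 Boulevard');
""")

# Customer data only: a single table is enough.
names = [row[0] for row in conn.execute("SELECT name FROM customer ORDER BY customer_id")]

# Customer and address data: one simple join across the one-to-many relationship.
customer_addresses = conn.execute("""
    SELECT c.name, a.street
    FROM customer AS c
    JOIN address AS a ON a.customer_id = c.customer_id
    ORDER BY c.customer_id, a.address_id
""").fetchall()

print(names)
print(customer_addresses)
```

A query such as sales by customer, by product, for the quarter would need further sales, product, and period tables and several more joins in this layout, which is the cost the text describes.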
The benefits of the entity relationship model for the data warehouse are:
- It can contain a large number of elements (more than any one department or user may require).
- It can be a source for large amounts of history in terms of years of information.
- The user can easily access a small set of tables.
- It can be used as a central location for extracting integrated data for data marts.
- It is quite straightforward to add new information into the database.
The disadvantages of the entity relationship model are:
- It has slower performance because of large amounts of data.
- It is not as flexible in terms of user queries (large numbers of joins can be difficult).
- It is not tuned for a limited set of parameters.
- It may require large amounts of storage that may not be used frequently.
- It is not easy to use with OLAP tools.
- It can have many tables and therefore be hard for users to use without a precise understanding of the data structure.

b. Dimension-based Model (Dimensional Approach)
In the "dimensional" approach, a star schema is used. A star schema consists of a central fact table surrounded by dimension tables and is frequently referred to as a multidimensional model. Although the original concept was to have up to five dimensions, as a star has five points, many stars today have more or fewer than five dimensions. However, for performance reasons, a star schema should be limited to six or seven dimensions. The information in the star usually meets the following guideline: a fact table contains numerical elements, and dimension tables contain textual data. As you can see from Figure 9, the dimension tables are:
- Products
- Customers
- Addresses
- Period
Each dimension table has its own unique key. The fact table (CUSTOMER_SALES) shows multiple keys (in bold type). The true key of Customer Sales is Invoice Number and Line Item Sequence Number.
However, in a star schema the key of each of the dimension tables is added to the key of the Customer Sales information to create a connection from the fact table to the dimension tables. In this way the key of the fact table is large, but it provides efficient processing of queries that join tables to provide the required user information.
The dimension tables provide a mechanism to view the data from different aspects simply by changing the dimensions used in the query. For example, one query may ask for all Customer Sales that occurred for Product 123. If this is a popular product, the query may return a large answer set. However, if the dimensions were changed and the query now asked for all Customer Sales of Product 123 for Week 23, the query would return a significantly smaller answer set. By changing the dimensions, you can narrow or widen your search for information. You can alter the query for any dimension represented in the star.

Another example of the dimensional approach using a star schema:

Example of Star Schema
- Sales Fact Table: time_key, item_key, branch_key, location_key; measures: units_sold, dollars_sold, avg_sales
- time: time_key, day, day_of_the_week, month, quarter, year
- item: item_key, item_name, brand, type, supplier_type
- branch: branch_key, branch_name, branch_type
- location: location_key, street, city, state_or_province, country

Another schema used in the dimensional approach is the snowflake schema. A snowflake schema is an extension of the star schema where each point of the star radiates into more points (Figure 10). In a snowflake schema, the star schema dimension tables are more normalized. The advantages are improvements in query performance because less data is retrieved, and improved performance by joining smaller, normalized tables rather than larger, denormalized tables. However, it increases both the number of tables a user must deal with and the complexity of some queries.
For this reason, many experts suggest refraining from using the snowflake schema. Whether entity attributes are kept in a single table or spread across multiple tables, the same amount of information is available.

Another example of a snowflake schema:

Example of Snowflake Schema
- Sales Fact Table: time_key, item_key, branch_key, location_key; measures: units_sold, dollars_sold, avg_sales
- time: time_key, day, day_of_the_week, month, quarter, year
- item: item_key, item_name, brand, type, supplier_key
- supplier: supplier_key, supplier_type
- branch: branch_key, branch_name, branch_type
- location: location_key, street, city_key
- city: city_key, city, state_or_province, country

In building a data warehouse for some companies, it is possible to narrow the focus to a set of facts; that is, if a data warehouse is to be built around sales, there are some common data elements associated with sales. In addition, there is a set of queries that must be satisfied in a certain way. For this we use dimensions. If users within this industry can agree that everyone wants to view sales by one or all of the following dimensions: time, product, customer and/or address, building a star is an excellent option. If there are two groups of users who want different dimensions from which to view the data, separate stars can be created with the appropriate dimensions. A data warehouse can, and frequently does, consist of more than one star. However, in a star the fact and dimension tables are kept relatively narrow in row width to provide the best performance. Therefore, if users begin to widen the scope of elements, it may become necessary to make more than one star, or it may require turning to the entity relationship model. Note that the row length of a dimension table is commonly rather long, because textual information such as a product description or a customer address is stored in the dimension table.
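A minimal sketch of the star and snowflake examples above, using Python's sqlite3 module with a subset of the example columns. Table names are prefixed (`dim_`, `sales_fact`) to keep them distinct from SQL keywords, and all rows are hypothetical. The first two queries show how adding a dimension narrows the answer set, mirroring the Product 123 / Week 23 example but using the quarter column of the time dimension; the third shows the extra join that the snowflaked location-to-city dimension requires.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Dimension tables (subset of the columns in the example schemas).
CREATE TABLE dim_time (time_key INTEGER PRIMARY KEY, quarter TEXT, year INTEGER);
CREATE TABLE dim_item (item_key INTEGER PRIMARY KEY, item_name TEXT, brand TEXT);
-- Snowflaked location dimension: city attributes live in their own table.
CREATE TABLE dim_city (city_key INTEGER PRIMARY KEY, city TEXT, country TEXT);
CREATE TABLE dim_location (location_key INTEGER PRIMARY KEY, street TEXT,
                           city_key INTEGER REFERENCES dim_city(city_key));
-- Fact table: one foreign key per dimension plus the numeric measures.
CREATE TABLE sales_fact (
    time_key     INTEGER REFERENCES dim_time(time_key),
    item_key     INTEGER REFERENCES dim_item(item_key),
    location_key INTEGER REFERENCES dim_location(location_key),
    units_sold   INTEGER,
    dollars_sold REAL
);
INSERT INTO dim_time VALUES (1, 'Q1', 2008), (2, 'Q2', 2008);
INSERT INTO dim_item VALUES (123, 'Widget', 'Acme'), (456, 'Gadget', 'Acme');
INSERT INTO dim_city VALUES (1, 'Vancouver', 'Canada'), (2, 'Chicago', 'USA');
INSERT INTO dim_location VALUES (1, '1 Main St', 1), (2, '9 Lake Rd', 2);
INSERT INTO sales_fact VALUES
    (1, 123, 1, 10, 100.0), (2, 123, 1, 5, 50.0),
    (2, 123, 2, 7, 70.0),   (1, 456, 2, 3, 60.0);
""")

# All sales of item 123: the wider answer set.
item_only = conn.execute(
    "SELECT COUNT(*), SUM(dollars_sold) FROM sales_fact WHERE item_key = 123"
).fetchone()

# Adding the time dimension narrows it: item 123 in Q2 2008 only.
item_and_quarter = conn.execute("""
    SELECT COUNT(*), SUM(f.dollars_sold)
    FROM sales_fact AS f JOIN dim_time AS t ON t.time_key = f.time_key
    WHERE f.item_key = 123 AND t.quarter = 'Q2' AND t.year = 2008
""").fetchone()

# Snowflake: reaching the city name takes two joins (fact -> location -> city).
by_city = conn.execute("""
    SELECT c.city, SUM(f.dollars_sold)
    FROM sales_fact AS f
    JOIN dim_location AS l ON l.location_key = f.location_key
    JOIN dim_city AS c ON c.city_key = l.city_key
    GROUP BY c.city ORDER BY c.city
""").fetchall()

print(item_only, item_and_quarter, by_city)
```

In a pure star schema the city column would sit directly in the location dimension, so the last query would need only one join; the snowflake saves repeated city/country text at the cost of that extra join.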
The benefits of the star schema for the data warehouse are:
- It has superior performance because it is designed and tuned for a specific set of parameters.
- It works well with OLAP tools.
- It can be used as a data mart.
- It can be used for the main warehouse for a limited scope of data.
- It is flexible in user queries within the defined dimensions.
The main disadvantage of the dimensional approach is that it is quite difficult to add or change later if the company changes the way in which it does business.

c. Mixed Models
If few groups in the corporation can agree on what they need on a regular basis and the views vary significantly, the entity relationship model is the option. A subset of the data pertinent only to one group is then created in either the entity relationship model or a star schema to satisfy that area. The advantage of using both models is the ability to have integrated data for which needs can change. By that we mean that many different data marts can be built for the times when specific needs can be identified. A disadvantage of using both models occurs when new data elements must be added. Adding columns to a relational table is extremely easy: simply alter the table to add the new elements and populate them. However, all of the old data in the warehouse now has one or more new columns with no data to populate them.

Data Warehouse Back-End Tools and Utilities
- Data extraction: get data from multiple, heterogeneous, and external sources.
- Data cleaning: detect errors in the data and rectify them when possible.
- Data transformation: convert data from legacy or host format to warehouse format.
- Load: sort, summarize, consolidate, compute views, check integrity, and build indices and partitions.
- Refresh: propagate the updates from the data sources to the warehouse.

Data Transformation from Operational Database to Data Warehouse
Data transformation is carried out using replication technology. Replication is a very useful technology for distributing data and stored procedures to all parts of a company. Replication technology in Microsoft® SQL Server™ allows users to make copies of data, move these copies to other locations, and synchronize the data automatically so that all copies have the same data values. Replication can be performed between databases on the same server or on different servers connected by a LAN, WAN, or the Internet.
There are three types of replication: Snapshot Replication, Transactional Replication, and Merge Replication.
- Snapshot Replication takes a snapshot of the current data in a publication on the Publisher and periodically replaces the entire copy on the Subscriber. In other words, it does not publish only the changes that have occurred. It is suitable when the Subscriber does not need data that is always up to date.
- Transactional Replication selects transactions from the transaction log of the publication database for replication and distributes them asynchronously to the Subscriber as periodic changes, while maintaining transactional consistency.
- Merge Replication allows a site (Publisher or Subscriber) to make changes to the replicated data; the changes are then applied at all sites. It does not guarantee transactional consistency. It is suitable for Subscribers that are mobile and rarely connected.

Terms used in replication:
a. Replication: the process of making copies of tables, data, and stored procedures (all called articles) from a source database to a destination database, usually located on a different server.
b. Distributor: the server that stores the distribution database.
The Distributor receives all changes made to the data to be published, stores the changes in the distribution database, and sends the changes to the Subscriber. The Distributor and Publisher can be on the same computer or on different computers.
c. Local Distributor: a server that is configured as a Publisher and also acts as the Distributor. In this configuration, the publication database and the distribution database are on the same computer.
d. Remote Distributor: a server configured as a Distributor but located on a different computer from the Publisher. In this configuration, the publication database and the distribution database are on different computers.
e. Distribution Agent: the replication component that moves the transaction and snapshot jobs held in the distribution database to the Subscriber tables.
f. Distribution database: a "store-and-forward" database that holds all transactions waiting to be distributed to Subscribers. The distribution database receives transactions from the Publisher, carried by the Log Reader Agent, and stores them until the Distribution Agent moves them to the Subscriber.
g. Distribute: to move data transactions or data snapshots from the Publisher to the Subscriber, where they are placed in the destination tables in the subscription database.
h. Log Reader Agent: the transactional replication component that moves transactions marked for replication from the transaction log on the Publisher to the distribution database.
i. Publication: a collection of articles to be replicated, grouped as one unit. A publication can contain one or more table articles or stored procedure articles from one user database. Each user database can have one or more publications.
j. Publication database: the database that is the source of the data to be replicated.
This database contains the tables to be replicated.
k. Publish: to prepare data for replication.
l. Publisher: the server that prepares data for replication. The Publisher manages the publication database and sends copies of all changes to the published data to the Distributor.
m. Subscribe: to agree to receive a publication. The destination database on the Subscriber subscribes to replicated data from the publication database on the Publisher.
n. Subscriber: the server that receives copies of the published data.
o. Subscription database: the database that receives the replicated tables and data from the publication database.
p. Pull subscription: a subscription type in which data movement is initiated from the Subscriber. The Subscriber manages the subscription by requesting, or pulling, data changes from the Publisher. The Distribution Agent is located on the Subscriber, which reduces the load on the Distributor.
q. Push subscription: a subscription type in which data movement is initiated from the Publisher. The Publisher manages the subscription by sending, or pushing, data changes to one or more Subscribers. The Distribution Agent is located on the Distributor.

(Figure: Replication components. (a) Local Distributor, two computers; (b) Remote Distributor, three computers (Publisher, Distributor, Subscriber). Components shown: Publisher, publication database, Log Reader Agent, Distributor, distribution database, Distribution Agent (at the Distributor for push subscriptions, at the Subscriber for pull subscriptions), Subscriber, subscription database.)

Replication Example
In this example we will replicate the Northwind database, a sample database included with MS SQL Server.
Steps:
a. Opening the Replication menu
i. Open Enterprise Manager.
ii. Click the server name.
iii. Select Replicate Data.
b. Configuring "Publishing and Distribution" on the server
Here we create the Publisher and the Distributor.
i. Click Configure Replication.
ii. Click Next on the wizard's welcome screen.
iii. Select the server that will act as the Distributor and click Next.
iv. Select the default settings and click Next.
v. Click Finish.
c. Defining a publication
Here we define a publication.
i. On the Replicate Data menu, click Create or Manage a Publication.
ii. Select the Northwind database and click the Create Publication button.
iii. On the wizard's welcome screen, click Next.
iv. Select the publication type and click Next.
v. Select No for Immediate-Updating Subscriptions and click Next.
vi. Select the subscriber type and click Next.
vii. Select the tables and stored procedures to be published. Select Publish All and click Next.
viii. Type Nortwind_Snap as the publication name and click Next.
ix. In the next window, click Yes and click Next.
x. Then click No to publish all data in all articles and click Next.
xi. Click Yes to allow anonymous subscribers and click Next.
xii. Set the schedule for the Snapshot Agent and click Next.
xiii. Click Finish.
d. Distributing the publication
Here we distribute the publication created in the previous step to a subscriber, which means we also create the subscriber.
i. On the Replicate Data menu, click Create or Manage a Publication, or click Push a Subscription.
ii. Select the publication to be distributed (Nortwind_Snap) and click Push New Subscription.
iii. Click Next on the wizard's welcome screen.
iv. Select the subscriber and click Next.
v. If the destination database has not been created yet, click Browse Database, then Create New, and type the database name: NwinSnap. You can change the database specifications if desired.
vi. Click OK, then select NwinSnap and click OK again, then click Next.
vii. Set the schedule for the Distribution Agent and click Next.
viii. Select Yes to initialize the subscriber and click Next.
ix. If SQL Server Agent is already running, click Next.
x. Click Finish and then Close.
e. Checking the replication
We can check the replication status by clicking the Replication Monitor item in Enterprise Manager. Clicking the publication name displays the replication status in the right pane.

Data Cleaning
After the data has been transformed from the operational database to the data warehouse, the next step is data cleaning. Data cleaning is needed because the data is polluted. In the case of client data, for example, there may be clients with slightly different names but the same address, or with different names and the same address. This can happen because of typing errors, because a client misspelled their name or address, or because a client frequently changes address.

| Client Number | Name | Address | Date Purchase Made | Magazine Purchased |
|---|---|---|---|---|
| 23003 | Johnson | 1 Downing Street | 04-15-94 | car |
| 23003 | Johnson | 1 Downing Street | 06-21-93 | music |
| 23003 | Johnson | 1 Downing Street | 05-30-92 | comic |
| 23009 | Clinton | 2 Boulevard | 01-01-01 | comic |
| 23013 | King | 3 High Road | 02-28-95 | sports |
| 23019 | Jonson | 1 Downing Street | 12-20-94 | house |
Figure: Original data

The figure above shows client data containing clients named Johnson and Jonson. They have different client numbers but the same address, which indicates that they are the same person, so de-duplication is needed. Data cleaning is also needed for the Date Purchase Made column, which contains an implausible date, 1 January 1901; we replace this value with NULL. After data cleaning, the data looks as shown in the figure below.
| Client Number | Name | Address | Date Purchase Made | Magazine Purchased |
|---|---|---|---|---|
| 23003 | Johnson | 1 Downing Street | 04-15-94 | car |
| 23003 | Johnson | 1 Downing Street | 06-21-93 | music |
| 23003 | Johnson | 1 Downing Street | 05-30-92 | comic |
| 23009 | Clinton | 2 Boulevard | NULL | comic |
| 23013 | King | 3 High Road | 02-28-95 | sports |
| 23003 | Johnson | 1 Downing Street | 12-20-94 | house |
Figure: Data after cleaning

Enrichment
Enrichment is the addition of data to complete the data already held. Data for enrichment can be obtained by purchasing it. Suppose we have purchased additional data related to the clients, such as date of birth, income, amount of credit, and house and car ownership (see figure).

| Client name | Date of birth | Income | Credit | Car owner | House owner |
|---|---|---|---|---|---|
| Johnson | 04-13-76 | $18,500 | $17,800 | no | no |
| Clinton | 10-20-71 | $36,000 | $26,600 | yes | no |
Figure: Table for enrichment

Coding
In this example, coding is done with MS SQL Server and VB 6.0. See the example program.
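The example program mentioned above (MS SQL Server and VB 6.0) is not reproduced here. As a separate illustration, the cleaning and enrichment steps can be sketched in Python using the rows from the tables above. The de-duplication rule (same address and a name-similarity ratio of at least 0.8 from the standard `difflib.SequenceMatcher`), the 1950 cut-off for a plausible purchase date, and joining the purchased data on client name are all hypothetical choices for this sketch, not rules stated in the text.

```python
from datetime import date
from difflib import SequenceMatcher

# Rows of the "Original data" table (MM-DD-YY dates; 01-01-01 read as 1 January 1901, as in the text).
records = [
    {"client": 23003, "name": "Johnson", "address": "1 Downing Street", "date": date(1994, 4, 15), "magazine": "car"},
    {"client": 23003, "name": "Johnson", "address": "1 Downing Street", "date": date(1993, 6, 21), "magazine": "music"},
    {"client": 23003, "name": "Johnson", "address": "1 Downing Street", "date": date(1992, 5, 30), "magazine": "comic"},
    {"client": 23009, "name": "Clinton", "address": "2 Boulevard", "date": date(1901, 1, 1), "magazine": "comic"},
    {"client": 23013, "name": "King", "address": "3 High Road", "date": date(1995, 2, 28), "magazine": "sports"},
    {"client": 23019, "name": "Jonson", "address": "1 Downing Street", "date": date(1994, 12, 20), "magazine": "house"},
]

# Hypothetical rules for this sketch.
NAME_SIMILARITY = 0.8
MIN_PLAUSIBLE_DATE = date(1950, 1, 1)


def clean(rows):
    """De-duplicate clients that share an address and have a similar name; null out implausible dates."""
    first_at_address = {}  # address -> (client number, name) of the first client seen there
    cleaned = []
    for row in rows:
        row = dict(row)  # copy so the input rows are not modified
        client, name = first_at_address.setdefault(row["address"], (row["client"], row["name"]))
        if SequenceMatcher(None, name, row["name"]).ratio() >= NAME_SIMILARITY:
            row["client"], row["name"] = client, name
        if row["date"] is not None and row["date"] < MIN_PLAUSIBLE_DATE:
            row["date"] = None  # the equivalent of NULL
        cleaned.append(row)
    return cleaned


# Purchased enrichment data from the enrichment table, keyed by client name.
purchased = {
    "Johnson": {"date_of_birth": date(1976, 4, 13), "income": 18500, "credit": 17800,
                "car_owner": False, "house_owner": False},
    "Clinton": {"date_of_birth": date(1971, 10, 20), "income": 36000, "credit": 26600,
                "car_owner": True, "house_owner": False},
}


def enrich(rows, extra):
    """Left-join purchased attributes onto each row; clients without purchased data are kept as-is."""
    return [{**row, **extra.get(row["name"], {})} for row in rows]


for row in enrich(clean(records), purchased):
    print(row)
```

With these rules the Jonson row is merged into client 23003 (Johnson), the 1901 date becomes `None`, and King's row passes through enrichment unchanged because no purchased data exists for that client.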