Package 'RWeka'

... Supervised learners, i.e., algorithms for classification and regression, are termed “classifiers” by Weka. (Numeric prediction, i.e., regression, is interpreted as prediction of a continuous class.) R interface functions to Weka classifiers are created by make_Weka_classifier, and have formals formu ...

... Supervised learners, i.e., algorithms for classification and regression, are termed “classifiers” by Weka. (Numeric prediction, i.e., regression, is interpreted as prediction of a continuous class.) R interface functions to Weka classifiers are created by make_Weka_classifier, and have formals formu ...

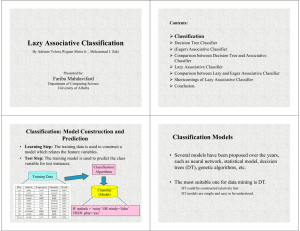

Contents

... the associated class label is unknown, the attribute values of the tuple are tested against the decision tree. A path is traced from the root to a leaf node, which holds the class prediction for that tuple. Decision trees can easily be converted to classification rules. “Why are decision tree classi ...
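The root-to-leaf lookup described in this excerpt, and the conversion of a tree into rules, can be sketched as follows. This is a minimal illustration, not code from the excerpted text: the `Node` class, the `classify` and `to_rules` helpers, and the toy "outlook/windy" tree are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple


@dataclass
class Node:
    """Hypothetical tree node: internal nodes test an attribute, leaves hold a label."""
    attribute: Optional[str] = None
    children: Dict[str, "Node"] = field(default_factory=dict)
    label: Optional[str] = None


def classify(node: Node, record: Dict[str, str]) -> str:
    """Trace a path from the root to a leaf and return the leaf's class prediction."""
    while node.label is None:
        node = node.children[record[node.attribute]]
    return node.label


def to_rules(node: Node, conditions: Tuple[Tuple[str, str], ...] = ()) -> list:
    """Emit one IF-THEN rule per root-to-leaf path."""
    if node.label is not None:
        lhs = " AND ".join(f"{a} = {v}" for a, v in conditions) or "TRUE"
        return [f"IF {lhs} THEN class = {node.label}"]
    rules = []
    for value, child in node.children.items():
        rules += to_rules(child, conditions + ((node.attribute, value),))
    return rules


# Toy tree (illustrative data only).
tree = Node(attribute="outlook", children={
    "sunny": Node(attribute="windy", children={
        "yes": Node(label="stay"),
        "no": Node(label="play"),
    }),
    "rainy": Node(label="stay"),
})

prediction = classify(tree, {"outlook": "sunny", "windy": "no"})
rules = to_rules(tree)
```

Each rule corresponds to exactly one leaf, so a tree with three leaves yields three rules.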

Ensemble of Feature Selection Techniques for High

... classified into three categories: filters, wrappers and hybrids [7]. In this thesis we will be using four filter-based feature ranking techniques and one wrapper-based feature ranking technique. Classification is a data mining technique used to classify or predict group membership for data instances. ...

Combining Classifiers with Meta Decision Trees

... obtain the final prediction from the predictions of the base-level classifiers. Cascading is an iterative process of combining classifiers: at each iteration, the training data set is extended with the predictions obtained in the previous iteration. The work presented here focuses on combining the p ...

The 2009 Knowledge Discovery in Data Competition (KDD Cup

... setting, which is typical of large-scale industrial applications. A large database was made available by the French telecom company Orange, with tens of thousands of examples and variables. This dataset is unusual in that it has a large number of variables, making the problem particularly challenging ...

A Comparative Study of Discretization Methods for Naive

... to avoid the fragmentation problem [28]. If an attribute has many values, a split on this attribute will result in many branches, each of which receives relatively few training instances, making it difficult to select appropriate subsequent tests. Naive-Bayes learning considers attributes independen ...

Chapter 6 A SURVEY OF TEXT CLASSIFICATION

... is used to predict a class label for this instance. In the hard version of the classification problem, a particular label is explicitly assigned to the instance, whereas in the soft version of the classification problem, a probability value is assigned to the test instance. Other variations of the cla ...
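The difference between the hard and soft versions mentioned in this excerpt can be shown in a few lines. This is only a sketch: the function names and the per-class scores are hypothetical, and a real classifier would produce the scores itself.

```python
def soft_prediction(scores):
    """Soft version: normalize non-negative scores into a probability per class."""
    total = sum(scores.values())
    return {label: s / total for label, s in scores.items()}


def hard_prediction(scores):
    """Hard version: explicitly assign the single highest-scoring label."""
    return max(scores, key=scores.get)


# Illustrative scores for one test instance (made-up values).
scores = {"sports": 6.0, "politics": 3.0, "tech": 1.0}

probabilities = soft_prediction(scores)
label = hard_prediction(scores)
```

The hard label is simply the class with the largest soft probability, so the soft output carries strictly more information.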

SAWTOOTH: Learning on huge amounts of data

... achieve the best possible classification accuracy at any given point in time. Even though many algorithms have been offered to handle concept drift, the scalability problem still remains open for such algorithms. In this thesis we propose a scalable algorithm for data classification from very large ...

... achieve the best possible classification accuracy at any given point in time. Even though many algorithms have been offered to handle concept drift, the scalability problem still remains open for such algorithms. In this thesis we propose a scalable algorithm for data classification from very large ...

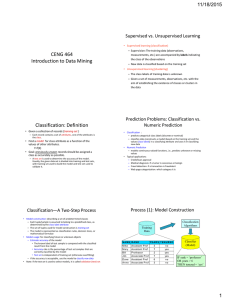

CENG 464 Introduction to Data Mining

... values (class labels) in a classifying attribute and uses it in classifying new data • Numeric Prediction – models continuous-valued functions, i.e., predicts unknown or missing values • Typical applications – Credit/loan approval – Medical diagnosis: if a tumor is cancerous or benign – Fraud detect ...

Classification in the Presence of Background Domain Knowledge

... using existing domain knowledge to enrich and better focus the results on user expectations remains open to further developments [Cao, 2010; Domingos, 2003; Yang and Wu, 2006]. While it is true that significant work has been done in some areas, namely pattern mining, to inject knowledge about the do ...

ppt

... naive Bayes Classifier: P(Refund=Yes|No) = 4/9 P(Refund=No|No) = 5/9 P(Refund=Yes|Yes) = 1/5 P(Refund=No|Yes) = 4/5 P(Marital Status=Single|No) = 3/10 P(Marital Status=Divorced|No)=2/10 P(Marital Status=Married|No) = 5/10 P(Marital Status=Single|Yes) = 3/6 P(Marital Status=Divorced|Yes)=2/6 P(Marita ...
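The fractions in this excerpt are consistent with add-one (Laplace) smoothing of conditional probability estimates. The sketch below reproduces them under that reading. The raw counts (7 records of class "No", 3 of class "Yes", and the per-value counts) are assumptions chosen to match the listed fractions and do not appear in the excerpt. The truncated final entry is left out.

```python
from fractions import Fraction


def laplace(count, class_total, n_values):
    """Add-one estimate: (count + 1) / (class_total + number of attribute values)."""
    return Fraction(count + 1, class_total + n_values)


# ASSUMED raw counts, keyed by (attribute value, class); not given in the excerpt.
class_totals = {"No": 7, "Yes": 3}
refund_counts = {
    ("Yes", "No"): 3, ("No", "No"): 4,
    ("Yes", "Yes"): 0, ("No", "Yes"): 3,
}
marital_counts = {
    ("Single", "No"): 2, ("Divorced", "No"): 1, ("Married", "No"): 4,
    ("Single", "Yes"): 2, ("Divorced", "Yes"): 1,
}

# Refund has 2 possible values; Marital Status has 3.
refund_probs = {
    key: laplace(c, class_totals[key[1]], 2) for key, c in refund_counts.items()
}
marital_probs = {
    key: laplace(c, class_totals[key[1]], 3) for key, c in marital_counts.items()
}
```

Smoothing keeps a zero count, such as the assumed zero for Refund=Yes in class "Yes", from forcing the whole product of conditional probabilities to zero.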

On Combined Classifiers, Rule Induction and Rough Sets

... classification, reducing the set of attributes or generating decision rules from data. It is also said that the aim is to synthesize reduced, approximate models of concepts from data [20]. The transparency and explainability of such models to humans is an important property. Up to now rough sets bas ...

Statistical Learning Theory

... which indicates whether this compound is useful for drug design or not. It is expensive to find out whether a chemical compound possesses certain properties that render it a suitable drug because this would require running extensive lab experiments. As a result, only rather few compounds Xi have kno ...

7class - Laurentian University | Program Detail

... Let X be a data sample (“evidence”): class label is unknown Let H be a hypothesis that X belongs to class C Classification is to determine P(H|X), (posteriori probability), the probability that the hypothesis holds given the observed data sample X P(H) (prior probability), the initial probability ...

Algoritma Klasifikasi

... • Informally, this can be viewed as posteriori = likelihood x prior/evidence • Predicts X belongs to Ci iff the probability P(Ci|X) is the highest among all the P(Ck|X) for all the k classes • Practical difficulty: It requires initial knowledge of many probabilities, involving significant computatio ...
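Written out, the informal statement "posteriori = likelihood x prior / evidence" and the decision rule in this excerpt take the following standard form. The symbol $\hat{C}$ for the predicted class is notation introduced here for illustration.

```latex
P(C_i \mid X) = \frac{P(X \mid C_i)\, P(C_i)}{P(X)},
\qquad
\hat{C} = \arg\max_{C_k} P(C_k \mid X)
        = \arg\max_{C_k} P(X \mid C_k)\, P(C_k)
```

The evidence $P(X)$ is the same for every class, so it can be dropped when only the highest-scoring class is needed.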
