P - Computing Science

... 2. P(A) = P([(A and B) or (A and not B)]) = P(A and B) + P(A and not B) – P([(A and B) and (A and not B)]). Disjunction Rule. 3. [(A and B) and (A and not B)] is logically equivalent to false, so P([(A and B) and (A and not B)]) = 0. 4. So 2. implies P(A) = P(A and B) + P(A and not B). ...
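
The identity in steps 2 to 4 can be checked numerically on a small example. The sketch below is only an illustration: the joint distribution over the truth values of A and B is made up, and the helper `prob` is a hypothetical name, not something from the excerpt.

```python
from fractions import Fraction

# Hypothetical joint distribution over (A, B); the weights are invented for illustration.
weights = {(True, True): 3, (True, False): 1, (False, True): 2, (False, False): 4}
total = sum(weights.values())
joint = {world: Fraction(w, total) for world, w in weights.items()}


def prob(event):
    """Probability of an event given as a predicate over the truth values (a, b)."""
    return sum(p for (a, b), p in joint.items() if event(a, b))


p_a = prob(lambda a, b: a)
p_a_and_b = prob(lambda a, b: a and b)
p_a_and_not_b = prob(lambda a, b: a and not b)
# The overlap "(A and B) and (A and not B)" is a contradiction, so no world satisfies it.
p_overlap = prob(lambda a, b: (a and b) and (a and not b))

# Disjunction rule with a zero overlap term reduces to the sum of the two parts.
assert p_overlap == 0
assert p_a == p_a_and_b + p_a_and_not_b - p_overlap
```

Exact fractions are used instead of floats so that the equality check is not affected by rounding.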

HS curriculum for Algebra II

... Perform arithmetic operations on polynomials. (CCSS: A-APR) i. Explain that polynomials form a system analogous to the integers, namely, they are closed under the operations of addition, subtraction, and multiplication; add, subtract, and multiply polynomials. (CCSS: A-APR.1) Understand the relation ...

Introduction: Aspects of Artificial General Intelligence

... possible for the system to be an integration of several techniques, so as to be general-purpose without a single g-factor. Also, AGI does not exclude individual difference. It is possible to implement multiple copies of the same AGI design, with different parameters and innate capabilities, and the ...

Aalborg Universitet Learning Bayesian Networks with Mixed Variables Bøttcher, Susanne Gammelgaard

... Paper I addresses these issues for Bayesian networks with mixed variables. In this paper, the focus is on learning Bayesian networks, where the joint probability distribution is conditional Gaussian. For an introductory text on learning Bayesian networks, see Heckerman (1999). To learn the parameter ...

Subset Selection of Search Heuristics

... 2009], regressors [Ernandes and Gori, 2004], and metric embeddings [Rayner et al., 2011], each capable of generating multiple different heuristic functions based on input parameters. When multiple heuristics are available, it is common to query each and somehow combine the resulting values into a be ...

Survey on Fuzzy Expert System

... input fuzzy sets with output fuzzy sets. A fuzzy set consists of fuzzy IF-THEN rules, and two forms of membership function (Gaussian and triangular) were tried for input and output. Theoretically there could be 81 fuzzy rules, each of them having three linguistic levels. However, the simplify th ...

CETIS Analytics Series vol 1, No 9. A Brief History of Analytics

... analytics is the rich array and maturity of techniques for data analysis; a skilled analyst now has many disciplines to draw inspiration from and many tools in their toolbox. Finally, the increased pressure on business and educational organisations to be more efficient and better at what they do ad ...

The Hidden Pattern

... Engineering General Intelligence (coauthored with Cassio Pennachin) and Probabilistic Logic Networks (coauthored with Matt Iklé, Izabela Freire Goertzel and Ari Heljakka). My schedule these last few years has been incredibly busy, far busier than I’m comfortable with – I much prefer to have more “o ...

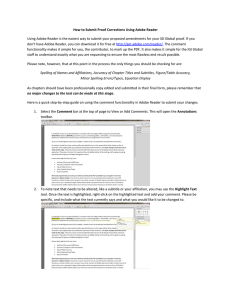

How to Submit Proof Corrections Using Adobe Reader

... Since then, several improvements and some applications were proposed to improve the efficiency of FWA. In this paper, the conventional fireworks algorithm is first summarized and reviewed and then three improved fireworks algorithms are provided. By changing the ways of calculating numbers and ampli ...

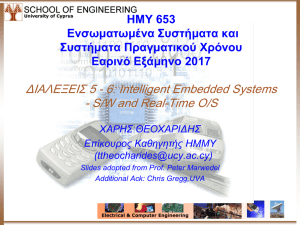

PPT

... Task: to extract features which are good for classification. Good features: • Objects from the same class have similar feature values. • Objects from different classes have different values. ...
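
One common way to quantify the two criteria for a single numeric feature is a Fisher-style score: the squared gap between class means divided by the summed within-class variances. The excerpt does not name this measure; it is swapped in here as an illustration, and the function name and sample values below are hypothetical.

```python
from statistics import mean, pvariance


def fisher_score(class_a, class_b):
    """Squared gap between class means divided by the summed within-class variances.

    Assumes at least one class has non-zero variance; otherwise the division fails.
    """
    gap = (mean(class_a) - mean(class_b)) ** 2
    spread = pvariance(class_a) + pvariance(class_b)
    return gap / spread


# Made-up feature values: tight, well-separated classes versus overlapping ones.
good_a, good_b = [1.0, 1.1, 0.9], [5.0, 5.2, 4.8]
poor_a, poor_b = [1.0, 5.0, 3.0], [2.0, 4.0, 3.5]

# A feature that separates the classes scores higher than one that does not.
assert fisher_score(good_a, good_b) > fisher_score(poor_a, poor_b)
```

A higher score means the feature keeps same-class objects close together and different-class objects far apart, which matches the two bullet criteria.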