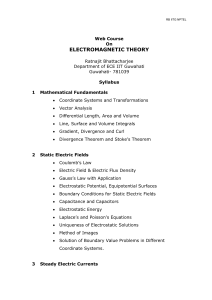

electromagnetic theory

... of the conservation of charge, under the implicit assumption that no charge accumulates at the junction. Electromagnetic theory deals directly with the electric and magnetic field vectors, whereas circuit theory deals with voltages and currents. Voltages and currents are in ...
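
The junction argument can be made concrete. The net current flowing into a junction equals the rate at which charge accumulates there, so if no charge accumulates, the currents into the junction sum to zero (Kirchhoff's current law). Here is a minimal Python sketch. It assumes currents are signed with "into the junction" as positive. The helper name `unknown_current` and the numbers are hypothetical:

```python
def unknown_current(known_currents_in):
    """Current that must flow INTO the junction on the one remaining branch
    so that the net current into the junction is zero (no charge builds up).

    Sign convention (an assumption of this sketch): positive = flowing in.
    """
    return -sum(known_currents_in)

# Hypothetical junction: 2.0 A and 1.5 A flow in, 0.5 A flows out.
i4 = unknown_current([2.0, 1.5, -0.5])
print(i4)
```

A negative result, as here, means the remaining current actually flows out of the junction.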

... Fact 1. For any field K, K[X] is a PID. Fact 2. Any PID is also a UFD. Together, the two facts tell us that we can indeed talk of factorization of polynomials in K[X]. Another useful fact is the following; it shows that factorization makes sense for multivariate polynomials as well. Fact 3. I ...
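
Fact 1 rests on the division algorithm. Over a field K, any polynomial can be divided by a nonzero polynomial, leaving a remainder of smaller degree. So K[X] is a Euclidean domain and hence a PID, and the ideal generated by two polynomials is generated by their gcd. The following Python sketch illustrates this for K = Q. It computes a monic gcd by the Euclidean algorithm, using exact `fractions.Fraction` arithmetic. The helper names are hypothetical, and polynomials are stored as coefficient lists with the lowest degree first:

```python
from fractions import Fraction

def trim(p):
    """Drop leading zero coefficients; p[i] is the coefficient of X**i."""
    p = list(p)
    while p and p[-1] == 0:
        p.pop()
    return p

def poly_divmod(a, b):
    """Divide a by b in Q[X]; returns (quotient, remainder) as coefficient lists."""
    a, b = trim(a), trim(b)
    if not b:
        raise ZeroDivisionError("division by the zero polynomial")
    q = [Fraction(0)] * max(len(a) - len(b) + 1, 1)
    r = [Fraction(c) for c in a]
    while r and len(r) >= len(b):
        shift = len(r) - len(b)
        coef = r[-1] / b[-1]          # needs K to be a field
        q[shift] = coef
        for i, c in enumerate(b):
            r[i + shift] -= coef * c  # cancels the leading term of r
        r = trim(r)
    return trim(q), r

def poly_gcd(a, b):
    """Monic gcd in Q[X] via the Euclidean algorithm."""
    a = trim([Fraction(c) for c in a])
    b = trim([Fraction(c) for c in b])
    while b:
        _, r = poly_divmod(a, b)
        a, b = b, r
    if not a:
        return []
    lead = a[-1]
    return [c / lead for c in a]

# gcd(X^2 - 1, X^2 + 2X + 1) = X + 1
print(poly_gcd([-1, 0, 1], [1, 2, 1]))  # -> [Fraction(1, 1), Fraction(1, 1)], i.e. X + 1
```

Exact fractions matter here. Each division step divides by a leading coefficient, and that is always possible only because K is a field.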

THE FUNDAMENTAL THEOREM OF ALGEBRA VIA LINEAR ALGEBRA

... the implication Theorem 1 ⇒ Theorem 2 is usually how one first meets the fundamental theorem of algebra in a linear algebra course: it assures us that any complex square matrix has an eigenvector because the characteristic polynomial of the matrix has a complex root. But here, we will prove Theorem ...
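
The route from a root of the characteristic polynomial to an eigenvector can be seen in the smallest case. For a 2 × 2 matrix A = [[a₁₁, a₁₂], [a₂₁, a₂₂]], the characteristic polynomial is λ² − tr(A)λ + det(A). Whenever a₁₂ ≠ 0, a root λ yields the eigenvector (a₁₂, λ − a₁₁). This Python sketch is a hypothetical helper, not anything from the source. It uses `cmath.sqrt`, so a root exists even when the discriminant is negative:

```python
import cmath

def eigenpair_2x2(a11, a12, a21, a22):
    """One root of the characteristic polynomial of [[a11, a12], [a21, a22]]
    and a matching eigenvector. Assumes a12 != 0 so that (a12, lam - a11)
    is a nonzero vector."""
    tr = a11 + a22
    det = a11 * a22 - a12 * a21
    # Root of lam**2 - tr*lam + det = 0; cmath.sqrt accepts negative
    # discriminants, so a root always exists in C.
    lam = (tr + cmath.sqrt(tr * tr - 4 * det)) / 2
    return lam, (a12, lam - a11)

def residual(a11, a12, a21, a22, lam, v):
    """Largest entry of |A v - lam v|; zero (up to rounding) for an eigenpair."""
    av = (a11 * v[0] + a12 * v[1], a21 * v[0] + a22 * v[1])
    return max(abs(av[0] - lam * v[0]), abs(av[1] - lam * v[1]))

# Rotation by 90 degrees has no real eigenvalue; its characteristic
# polynomial lam**2 + 1 has the complex root i.
lam, v = eigenpair_2x2(0, -1, 1, 0)
print(lam, v, residual(0, -1, 1, 0, lam, v))
```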

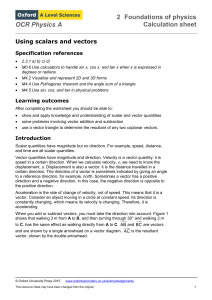

OCR Physics A Using scalars and vectors Specification references

... To combine any two vectors, we can draw a triangle like ABC in Figure 1, where the lengths of the sides represent the magnitudes of the vectors (for example, forces of 30 N and 20 N). The third side of the triangle gives the magnitude and direction of the resultant force. Careful drawing of a scale ...
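
The resultant can also be computed from components instead of a scale drawing. The snippet gives the magnitudes 30 N and 20 N but not the angle between the forces, so this sketch assumes a 90° angle for illustration. The function name `resultant` is hypothetical:

```python
import math

def resultant(f1, f2, angle_deg):
    """Magnitude (N) and direction (degrees, measured from f1) of the
    resultant of two forces f1 and f2 that are angle_deg apart."""
    theta = math.radians(angle_deg)
    # Resolve into components with f1 lying along the x-axis.
    x = f1 + f2 * math.cos(theta)
    y = f2 * math.sin(theta)
    return math.hypot(x, y), math.degrees(math.atan2(y, x))

mag, direction = resultant(30.0, 20.0, 90.0)
print(f"{mag:.1f} N at {direction:.1f} degrees to the 30 N force")  # 36.1 N at 33.7 degrees
```

At 0° or 180° the same function gives 50 N or 10 N. These are the two extremes of the triangle construction.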

Math F412: Homework 7 Solutions March 20, 2013 1. Suppose V is

... not show that this is a vector space. We extend T to a map T: W → W by T(a + ib) = Ta + iTb. It's easy to see that this map is complex linear; don't show this. If the characteristic equation det(T − λI) = 0 has a complex root λ, then there is a vector z = x + iy in W such that T(x + iy) = λ(x + iy); ...
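
One standard consequence follows from this equation. Write λ = α + iβ and compare real and imaginary parts of T(x + iy) = λ(x + iy). This gives Tx = αx − βy and Ty = βx + αy, so the real span of x and y is mapped into itself by T. The Python sketch checks these two identities numerically. The matrix, the eigenvector (verified by hand), and the helper names are hypothetical examples, not taken from the homework:

```python
def matvec(T, v):
    """Product of a matrix (list of rows) with a vector (list)."""
    return [sum(T[i][j] * v[j] for j in range(len(v))) for i in range(len(T))]

def real_invariant_plane(T, lam, z, tol=1e-12):
    """Split a complex eigenvector z = x + i y of a real matrix T, with
    eigenvalue lam = alpha + i beta, into real vectors x and y, and check
    that T x = alpha x - beta y and T y = beta x + alpha y."""
    x = [c.real for c in z]
    y = [c.imag for c in z]
    a, b = lam.real, lam.imag
    Tx, Ty = matvec(T, x), matvec(T, y)
    ok_x = all(abs(t - (a * xi - b * yi)) < tol for t, xi, yi in zip(Tx, x, y))
    ok_y = all(abs(t - (b * xi + a * yi)) < tol for t, xi, yi in zip(Ty, x, y))
    return x, y, ok_x and ok_y

T = [[1, -2], [1, -1]]                 # real matrix; characteristic polynomial lam**2 + 1
z = [complex(-2, 0), complex(-1, 1)]   # eigenvector for lam = i
print(real_invariant_plane(T, 1j, z))  # x = [-2.0, -1.0], y = [0.0, 1.0], identities hold
```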

I n - USC Upstate: Faculty

... Definition: Let A and B be two matrices. These matrices are equal, written A = B, if they have the same number of rows and columns and every entry of A equals the entry in the corresponding position of B. * This is not trivial if elements are real numbers subject to digital appr ...
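
The point about digital approximation is easy to see in floating point. There, 0.1 + 0.2 is not exactly 0.3, so a strict entrywise test can reject matrices that are equal in exact arithmetic. This Python sketch implements the definition (same shape, then entrywise comparison). It uses a tolerance-based comparison via `math.isclose`, which is an assumed practical substitute for exact equality, and the tolerances are illustrative choices:

```python
import math

def matrices_equal(A, B, rel_tol=1e-9, abs_tol=0.0):
    """Entrywise equality for matrices stored as lists of rows.
    Shapes must match exactly; entries are compared with math.isclose
    because floating-point values carry rounding error."""
    if len(A) != len(B) or any(len(ra) != len(rb) for ra, rb in zip(A, B)):
        return False
    return all(math.isclose(a, b, rel_tol=rel_tol, abs_tol=abs_tol)
               for ra, rb in zip(A, B) for a, b in zip(ra, rb))

A = [[0.1 + 0.2, 1.0], [2.0, 3.0]]
B = [[0.3, 1.0], [2.0, 3.0]]
print(A == B)                # False: 0.1 + 0.2 != 0.3 in binary floating point
print(matrices_equal(A, B))  # True
```

With the default `abs_tol=0.0`, an entry that should be zero but carries a tiny rounding error will not compare equal to 0.0. Pass a small `abs_tol` when such entries are expected.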

q-linear functions, functions with dense graph, and everywhere

... the subset M of functions which satisfies it is lineable if M ∪ {0} contains an infinite-dimensional vector space. At times, we will be more specific, referring to the set M as μ-lineable if it contains a vector space of dimension μ. Also, we let λ(M) be the maximum cardinality (if it exists) of suc ...

Notes

... The costs of computations. Our first goal in any scientific computing task is to get a sufficiently accurate answer. Our second goal is to get it fast enough.⁴ Of course, there is a tradeoff between computer time and our time, and often we can optimize both by making wise high-level decisions a ...

Mathematical Programming

... Consider the (primal) LP $\max\{cx : Ax \le b,\ x \ge 0\}$, where A is an m-by-n matrix. Then x must be an n-dimensional column vector, c an n-dimensional row vector, and b an m-dimensional column vector. The dual of the LP above is the linear program $\min\{yb : yA \ge c,\ y \ge 0\}$, where, for the products to be ...
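
Pairing the two programs gives weak duality. If x is feasible for the primal and y for the dual, then cx ≤ (yA)x = y(Ax) ≤ yb. The first inequality uses x ≥ 0 with yA ≥ c, and the second uses y ≥ 0 with Ax ≤ b. So equal objective values certify that both points are optimal. Here is a small Python check on a hypothetical 2 × 2 instance. The data, the hand-computed points x and y, and the helper names are illustrative and not from the source:

```python
from fractions import Fraction as F

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def primal_feasible(A, b, x):
    """x >= 0 and Ax <= b, componentwise."""
    return all(xi >= 0 for xi in x) and all(dot(row, x) <= bi for row, bi in zip(A, b))

def dual_feasible(A, c, y):
    """y >= 0 and yA >= c: y dotted with column j of A must be >= c_j."""
    cols = list(zip(*A))
    return all(yi >= 0 for yi in y) and all(dot(y, col) >= cj for col, cj in zip(cols, c))

A = [[1, 1], [1, 3]]
b = [4, 6]
c = [1, 2]
x = [F(3), F(1)]        # candidate primal point
y = [F(1, 2), F(1, 2)]  # candidate dual point

assert primal_feasible(A, b, x) and dual_feasible(A, c, y)
print(dot(c, x), dot(y, b))  # 5 5: equal values, so both points are optimal
```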

exam2topics.pdf

... So if R is the reduced row echelon form of A, then row(R) = row(A). But a basis for row(R) is quick to identify: take all of the nonzero rows of R! (The zero rows are clearly redundant.) These rows are linearly independent, since each has a 'special coordinate' (its pivot position) where, among the rows, only it is nonzero. ...
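
The procedure is to row-reduce and then keep the nonzero rows. It can be sketched in Python with exact `fractions.Fraction` arithmetic so that no rounding creeps in. The names `rref` and `row_space_basis` are hypothetical helpers, and the matrix is an illustrative example:

```python
from fractions import Fraction

def rref(M):
    """Reduced row echelon form of M (a list of rows), in exact arithmetic."""
    R = [[Fraction(x) for x in row] for row in M]
    rows = len(R)
    cols = len(R[0]) if R else 0
    pivot_row = 0
    for col in range(cols):
        # Find a row at or below pivot_row with a nonzero entry in this column.
        pr = next((r for r in range(pivot_row, rows) if R[r][col] != 0), None)
        if pr is None:
            continue
        R[pivot_row], R[pr] = R[pr], R[pivot_row]
        pv = R[pivot_row][col]
        R[pivot_row] = [x / pv for x in R[pivot_row]]  # make the pivot 1
        for r in range(rows):                           # clear the rest of the column
            if r != pivot_row and R[r][col] != 0:
                f = R[r][col]
                R[r] = [a - f * p for a, p in zip(R[r], R[pivot_row])]
        pivot_row += 1
        if pivot_row == rows:
            break
    return R

def row_space_basis(M):
    """The nonzero rows of rref(M), which form a basis for row(M)."""
    return [row for row in rref(M) if any(x != 0 for x in row)]

A = [[1, 2, 3], [2, 4, 6], [1, 0, 1]]
print([[str(x) for x in row] for row in row_space_basis(A)])  # [['1', '0', '1'], ['0', '1', '1']]
```

In this example the second row of A is twice the first, so row(A) is two-dimensional. The two nonzero rows of R exhibit exactly that.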

Some applications of vectors to the study of solid geometry

... to each vertex of the tetrahedron intersect the opposite face. The four vectors involved may be reduced to three by choosing an arbitrary vertex, say A, as the origin, and then writing ...

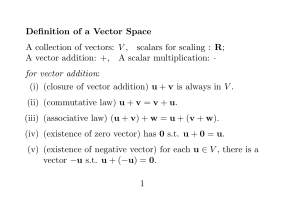

Vector space

A vector space (also called a linear space) is a collection of objects called vectors, which may be added together and multiplied ("scaled") by numbers, called scalars in this context. Scalars are often taken to be real numbers, but there are also vector spaces with scalar multiplication by complex numbers, rational numbers, or generally any field. The operations of vector addition and scalar multiplication must satisfy certain requirements, called axioms. Euclidean vectors are an example of a vector space. They represent physical quantities such as forces: any two forces (of the same type) can be added to yield a third, and the multiplication of a force vector by a real multiplier is another force vector. In the same vein, but in a more geometric sense, vectors representing displacements in the plane or in three-dimensional space also form vector spaces. Vectors in vector spaces do not necessarily have to be arrow-like objects as they appear in these examples: vectors are regarded as abstract mathematical objects with particular properties, which in some cases can be visualized as arrows.

Vector spaces are the subject of linear algebra and are well understood from this point of view, since a vector space over a given field is determined up to isomorphism by its dimension, which, roughly speaking, specifies the number of independent directions in the space. A vector space may be endowed with additional structure, such as a norm or inner product. Such spaces arise naturally in mathematical analysis, mainly in the guise of infinite-dimensional function spaces whose vectors are functions. Analytical problems call for the ability to decide whether a sequence of vectors converges to a given vector. This is accomplished by considering vector spaces with additional structure, mostly spaces endowed with a suitable topology, which makes questions of proximity and continuity meaningful. These topological vector spaces, in particular Banach spaces and Hilbert spaces, have a richer theory.

Historically, the first ideas leading to vector spaces can be traced back as far as 17th-century analytic geometry, matrices, systems of linear equations, and Euclidean vectors. The modern, more abstract treatment, first formulated by Giuseppe Peano in 1888, encompasses more general objects than Euclidean space, but much of the theory can be seen as an extension of classical geometric ideas like lines, planes and their higher-dimensional analogs.

Today, vector spaces are applied throughout mathematics, science and engineering. They are the appropriate linear-algebraic notion for dealing with systems of linear equations; they offer a framework for Fourier expansion, which is employed in image compression routines; and they provide an environment for solution techniques for partial differential equations. Furthermore, vector spaces furnish an abstract, coordinate-free way of dealing with geometrical and physical objects such as tensors. This in turn allows the examination of local properties of manifolds by linearization techniques. Vector spaces may be generalized in several ways, leading to more advanced notions in geometry and abstract algebra.
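
The remark that vectors need not be arrows can be made concrete with a function space: real-valued functions, added and scaled pointwise. The following Python sketch illustrates this under that assumption, with hypothetical helper names. It only spot-checks several axioms at a few sample points, which is evidence and not a proof:

```python
import math

# Vectors are real-valued functions of one real variable; the vector-space
# operations are defined pointwise.
def add(f, g):
    return lambda t: f(t) + g(t)

def scale(a, f):
    return lambda t: a * f(t)

def zero(t):
    return 0.0

def agree(f, g, samples, tol=1e-12):
    """True if f and g agree (up to rounding) at every sample point."""
    return all(math.isclose(f(t), g(t), rel_tol=1e-9, abs_tol=tol) for t in samples)

f, g = math.sin, math.cos   # two "vectors" in the function space
a, b = 2.0, -3.0            # two scalars
samples = [-1.0, 0.0, 0.5, 2.0]

# Spot-check some axioms at a few points (evidence, not a proof).
checks = {
    "commutativity": agree(add(f, g), add(g, f), samples),
    "additive identity": agree(add(f, zero), f, samples),
    "additive inverse": agree(add(f, scale(-1.0, f)), zero, samples),
    "distributivity over vectors": agree(scale(a, add(f, g)), add(scale(a, f), scale(a, g)), samples),
    "distributivity over scalars": agree(scale(a + b, f), add(scale(a, f), scale(b, f)), samples),
}
print(checks)
```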