Variational Inference for Dirichlet Process Mixtures

... on the stick-breaking representation of the underlying DP. The algorithm involves two probability distributions—the posterior distribution p and a variational distribution q. The latter is endowed with free variational parameters, and the algorithmic problem is to adjust these parameters so that q a ...

Calibration by correlation using metric embedding from non

... Grossmann et al. assume that the function f is known, obtained with a separate calibration phase, by using a sensor with known intrinsic calibration experiencing the same scene as the camera being calibrated. Therefore, using the knowledge of f, one can recover the distances from the similarities: d(si ...

Procedural Knowledge Representations

... read street signs along the way to find out where it is. Thus in solving problems in AI we must represent knowledge and there are two entities to deal with: Facts -- truths about the real world and what we represent. This can be regarded as the knowledge level Representation of the facts which we ma ...

Hassan Saneifar Professional Page

... statements that are either true or false. It deals only with the truth value of complete statements and does not consider relationships or dependencies between objects. ...

The Nonparametric Kernel Bayes Smoother

... be applied to any domain with hidden states and measurements, such as images, graphs, strings, and documents that are not easily modeled in a Euclidean space R^d, provided that a similarity is defined on the domain by a positive definite kernel. And, the nKB-smoother provides kernel mean smoothing i ...

Tweety: A Comprehensive Collection of Java

... Core library which contains abstract classes and interfaces for all kinds of knowledge representation formalisms. Furthermore, the library Math contains classes for dealing with mathematical sub-problems that often occur, in particular, in probabilistic approaches to reasoning. Most other Tweety pro ...

Villanova Computer Science

... which takes advantage of irrelevant variations in instances – performance on test data will be much lower – may mean that your training sample isn’t representative – in SVMs, may mean that C is too high ...

Tensor rank-one decomposition of probability tables

... Figure 4: Comparison of the total size of junction tree. It should be noted that the tensor rank-one decomposition can be applied to any probabilistic model. The savings depends on the graphical structure of the probabilistic model. In fact, by avoiding the moralization of the parents, we give the t ...

pdf file

... by L0 = (L0, Cn) which is based on classical propositional logic. Here L0 is the set of propositional formulas based on a set of propositional variables Var and Cn(X) can be defined as the smallest subset of L0 containing the set X ∪ Ax, where Ax is a suitable set of axioms, and which is closed with ...

INTRODUCTION TO AI AND PRODUCTION SYSTEMS

... cannot easily handle them. The storage also presents another problem but searching can be achieved by hashing. The number of rules that are used must be minimised and the set can be produced by expressing each rule in as general a form as possible. The representation of games in this way leads to a ...

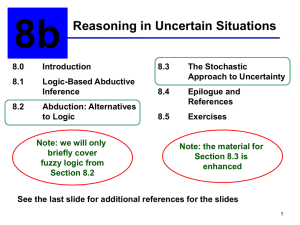

ch08b

... allowing graded beliefs. In addition, it provides a theory to assign beliefs to relations between propositions (e.g., pq), and related propositions (the notion of dependency). ...

Chapter 13 Uncertainty

... • (View as a set of 4 × 2 equations, not matrix mult.) • Chain rule is derived by successive application of product rule: P(X1, …,Xn) = P(X1,...,Xn-1) P(Xn | X1,...,Xn-1) = P(X1,...,Xn-2) P(Xn-1 | X1,...,Xn-2) P(Xn | X1,...,Xn-1) ...

Solution Manual Artificial Intelligence a Modern Approach

... steps; no agent can do better. (Note: in general, the condition stated in the first sentence of this answer is much stricter than necessary for an agent to be rational.) b. The agent in (a) keeps moving backwards and forwards even after the world is clean. It is better to do NoOp once the world i ...

Computing Contingent Plans via Fully Observable

... generally easier to compute since integrating online sensing with planning eliminates the need to plan for a potentially exponential (in the size of relevant unknown facts) number of contingencies. In the absence of deadends, online contingent planning can be fast and effective. Recent advances incl ...

Relational Dynamic Bayesian Networks

... In this section we show how to represent probabilistic dependencies in a dynamic relational domain by combining DBNs with first-order logic. We start by defining relational and dynamic relational domains in terms of first-order logic and then define relational dynamic Bayesian networks (RDBNs) which ...

Non-Deterministic Planning With Conditional Effects

... Search and Rescue: The Search and Rescue domain originates from the IPC-6 Probabilistic track. The planning task requires the agent to fly to various locations and explore for a lost human who must be rescued. The action of “exploring” a location non-deterministically reveals whether or not a human ...

Uncertainty reasoning and representation: A

... This Thesis is brought to you for free and open access by the Thesis/Dissertation Collections at RIT Scholar Works. It has been accepted for inclusion in Theses by an authorized administrator of RIT Scholar Works. For more information, please contact [email protected]. ...

Ordinal Decision Models for Markov Decision Processes

... in [17, 24] is based on the minimax criterion. In that setting, finding an optimal stationary deterministic policy is NP-hard, which makes this approach difficult to put into practice for large size problems for the moment. Assuming that rewards can only be ranked, [23] proposed a decision model bas ...

Combining Linear Programming and Satisfiability Solving for

... are boolean-valued; typeface are real. must be solved to solve the entire LCNF problem. The key to the encoding is the simple but expressive concept of triggers: each propositional variable may trigger a constraint; this constraint is then enforced whenever the variable’s truth assignment is true ...

Representing Tuple and Attribute Uncertainty in Probabilistic

... data [22], query languages [4, 2] and data models that can represent complex correlations [13, 20, 21] naturally produced from various applications [14, 13, 3]. The underlying data models for much of this work are based on probability theory coupled with possible worlds semantics where a probabilist ...

Reinforcement Learning in the Presence of Rare Events

... Reinforcement learning has seen successes in many domains, from scheduling tasks such as elevator dispatching (Crites and Barto, 1996), resource-constrained scheduling (Zhang and Dietterich, 2000), job-shop scheduling (Zhang and Dietterich, 1995), and supply chain management (Stockheim et al., 2003 ...

Liftability of Probabilistic Inference: Upper and Lower Bounds

... models is called lifted inference” [20]; “The idea behind lifted inference is to carry out as much inference as possible without propositionalizing” [15]; “lifted inference, which deals with groups of indistinguishable variables, rather than individual ground atoms” [21]. While, thus, the term lifted ...

Diagnosis of Coordination Faults: A Matrix

... the predicate γ′(ai, sj) = true ⇔ γ(ai) = sj. We will use shorthand and denote γ′(ai, sj) as sij. As mentioned in the introduction, one of the novelties of this work is the possibility to gather joint coordinations to one structure. To this end, we present a function to set multiple state ...

PDF

... good progress in the area of Automated Reasoning. For example, SATO (Satisfiability Testing Optimized) [30], a model generator based on the Davis-Putnam-Logemann-Loveland method for propositional clauses [7, 8], has solved a number of difficult open existence problems for Quasigroups with specific algebr ...
