clustering1 - Network Protocols Lab

... BIRCH (1996): uses a CF-tree and incrementally adjusts the quality of sub-clusters. CURE (1998): selects well-scattered points from the cluster and then shrinks them towards the center of the cluster by a specified fraction. CHAMELEON (1999): hierarchical clustering using dynamic ...

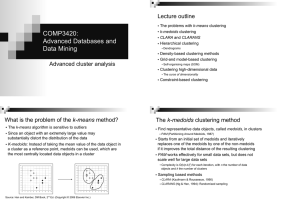

COMP3420: Advanced Databases and Data Mining

... Decompose data objects into several levels of nested partitionings (a tree of clusters), called a dendrogram. A clustering of the data objects is obtained by cutting the dendrogram at the desired level; each connected component then forms a cluster ...
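Cutting a dendrogram at a given level can be sketched as follows. This is a minimal illustration, not taken from the course material: the function name `cut_dendrogram` and the merge format `(item_a, item_b, height)` are assumptions made for the example, and the sketch assumes merge heights never decrease towards the root of the tree.

```python
def cut_dendrogram(n_items, merges, height):
    """Return the clusters obtained by cutting a dendrogram at `height`.

    merges: list of (a, b, h), where a and b are any item from each of the
    two clusters being joined and h is the height of that join
    (hypothetical format chosen for this sketch).
    """
    parent = list(range(n_items))  # union-find forest, one node per item

    def find(x):
        # Follow parent links to the representative, halving the path.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    # Keep only the joins at or below the cut level.
    for a, b, h in merges:
        if h <= height:
            parent[find(a)] = find(b)

    # Each connected component that remains is one cluster.
    clusters = {}
    for item in range(n_items):
        clusters.setdefault(find(item), []).append(item)
    return sorted(clusters.values())


# Hypothetical dendrogram over five items.
example_merges = [(0, 1, 1.0), (2, 3, 1.5), (0, 2, 5.2), (0, 4, 20.0)]
print(cut_dendrogram(5, example_merges, 2.0))
```

Raising the cut level merges more components, and lowering it below the smallest merge height leaves every item in its own cluster.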

4) Recalculate the new cluster center using

... Given a set of N items to be clustered, and an N*N distance (or similarity) matrix, the basic process of hierarchical clustering (defined by S.C. Johnson in 1967) is this: 1. Start by assigning each item to a cluster, so that if you have N items, you now have N clusters, each containing just one ite ...

Clustering Algorithms - Computerlinguistik

... Example: Use a clustering algorithm to discover parts of speech in a set of words. The algorithm should group together words with the same syntactic category. Intuitive: check whether the words in the same cluster seem to have the same part of speech. Expert: ask a linguist to group the words in the data ...

Nearest-neighbor chain algorithm

In the theory of cluster analysis, the nearest-neighbor chain algorithm is a method that can be used to perform several types of agglomerative hierarchical clustering, using an amount of memory that is linear in the number of points to be clustered and an amount of time linear in the number of distinct distances between pairs of points. The main idea of the algorithm is to find pairs of clusters to merge by following paths in the nearest neighbor graph of the clusters until the paths terminate in pairs of mutual nearest neighbors. The algorithm was developed and implemented in 1982 by J. P. Benzécri and J. Juan, based on earlier methods that constructed hierarchical clusterings using mutual nearest neighbor pairs without taking advantage of nearest neighbor chains.
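The chain-following idea can be sketched in Python as below. This is an illustrative sketch under stated assumptions, not the 1982 implementation: it uses complete linkage (one of the linkages for which the method is known to be valid), recomputes cluster distances directly from the points for clarity rather than speed, and the names `complete_linkage` and `nn_chain` are chosen for this example.

```python
from itertools import product
from math import dist


def complete_linkage(points, a, b):
    """Largest pairwise distance between clusters a and b (tuples of indices)."""
    return max(dist(points[i], points[j]) for i, j in product(a, b))


def nn_chain(points):
    """Return the merges as (cluster_a, cluster_b, distance) tuples."""
    active = {(i,) for i in range(len(points))}  # current clusters
    chain = []   # path of successive nearest neighbours
    merges = []
    while len(active) > 1:
        if not chain:
            chain.append(min(active))  # arbitrary but deterministic start
        top = chain[-1]
        prev = chain[-2] if len(chain) > 1 else None

        # Find the nearest neighbour of the cluster at the end of the chain,
        # preferring its predecessor on ties so the chain cannot cycle.
        best, best_d = None, float("inf")
        for c in active:
            if c == top:
                continue
            d = complete_linkage(points, top, c)
            if d < best_d or (d == best_d and c == prev):
                best, best_d = c, d

        if best == prev:
            # top and prev are mutual nearest neighbours: merge them.
            chain.pop()
            chain.pop()
            active.remove(top)
            active.remove(prev)
            active.add(tuple(sorted(top + prev)))
            merges.append((prev, top, best_d))
        else:
            chain.append(best)  # extend the chain
    return merges


sample_points = [(0, 0), (0, 1), (5, 0), (5, 1.5), (20, 0)]
for a, b, d in nn_chain(sample_points):
    print(a, b, round(d, 3))
```

The merges are not necessarily produced in order of increasing distance, so sorting them by distance afterwards recovers the order in which a greedy agglomerative procedure would have performed them.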