A Cluster-based Algorithm for Anomaly Detection in Time Series

... Fig. 1. A time series T split into equal-sized clusters C’k, each of size t. The red dots are the centroids mk of the clusters C’k. The loop of lines 2-8 terminates when no sample ti is moved in lines 4-7. After this loop, we have the set of sets, C (see Fig. 1), composed of clusters of s ...
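
A minimal Python sketch of the segmentation idea in this snippet, assuming a one-dimensional series and a hypothetical reassignment rule standing in for the elided lines 4-7: after the initial split into clusters of t samples, a boundary sample moves to the neighboring cluster when it is strictly closer to that cluster's centroid, and the loop stops once a full pass moves nothing. The function name `segment_clusters` and the rule itself are illustrative assumptions, not the paper's code.

```python
from statistics import mean


def segment_clusters(series, t):
    """Split `series` into contiguous clusters of t samples (the last may be
    shorter), then shift boundary samples between neighboring clusters.

    HYPOTHETICAL rule standing in for the paper's elided lines 4-7: a boundary
    sample moves to the adjacent cluster when it is strictly closer to that
    cluster's centroid. Clusters are never emptied, and contiguity is kept.
    """
    clusters = [list(series[i:i + t]) for i in range(0, len(series), t)]
    moved = True
    while moved:  # stop when a full pass moves no sample
        moved = False
        for k in range(len(clusters) - 1):
            left, right = clusters[k], clusters[k + 1]
            # The last sample of the left cluster may move right.
            if len(left) > 1 and abs(left[-1] - mean(right)) < abs(left[-1] - mean(left)):
                right.insert(0, left.pop())
                moved = True
            # Otherwise the first sample of the right cluster may move left.
            elif len(right) > 1 and abs(right[0] - mean(left)) < abs(right[0] - mean(right)):
                left.append(right.pop(0))
                moved = True
    centroids = [mean(c) for c in clusters]  # m_k for each cluster
    return clusters, centroids


clusters, centroids = segment_clusters([1, 1, 1, 9, 9, 9], 2)
print(clusters)  # [[1, 1, 1], [9], [9, 9]]
```

Under this rule the loop always terminates: moving a sample to a strictly closer centroid strictly lowers the total within-cluster sum of squared deviations, and there are only finitely many contiguous partitions.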

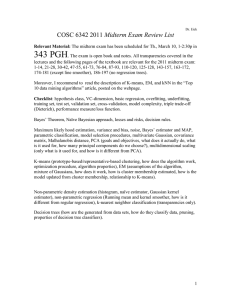

Final Exam 2007-08-16 DATA MINING

... finished, please staple these pages together in an order that corresponds to the order of the questions. • This examination contains 40 points in total and their distribution between sub-questions is clearly identifiable. Note that you will get credit only for answers that are correct. To pass, you ...

A comparison of various clustering methods and algorithms in data

... of documents within a cluster. If the number of clusters is large, the centroids can be further clustered to produce a hierarchy within a dataset ...
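
To illustrate clustering the centroids a second time, here is a sketch that runs plain Lloyd's k-means on the data and then again on the resulting centroids, giving a two-level hierarchy. The snippet does not name the base algorithm; k-means, the first-k-points initialization, and the helper names `kmeans` and `two_level` are assumptions made for the example.

```python
import math


def kmeans(points, k, iters=100):
    """Plain Lloyd's k-means on tuples. ASSUMPTION: the first k points serve
    as initial centroids, which keeps this sketch deterministic."""
    centroids = [tuple(p) for p in points[:k]]
    groups = []
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid.
        groups = [[] for _ in range(k)]
        for p in points:
            j = min(range(k), key=lambda c: math.dist(p, centroids[c]))
            groups[j].append(p)
        # Update step: recompute centroids (keep the old one if a group is empty).
        new = [
            tuple(sum(xs) / len(g) for xs in zip(*g)) if g else centroids[j]
            for j, g in enumerate(groups)
        ]
        if new == centroids:
            break
        centroids = new
    return centroids, groups


def two_level(points, k_fine, k_coarse):
    """Cluster the data, then cluster the resulting centroids again."""
    fine, _ = kmeans(points, k_fine)
    coarse, members = kmeans(fine, k_coarse)
    return fine, coarse, members


pts = [(0, 0), (10, 0), (50, 0), (60, 0), (0, 1), (10, 1), (50, 1), (60, 1)]
fine, coarse, members = two_level(pts, 4, 2)
print(coarse)  # [(5.0, 0.5), (55.0, 0.5)]
```

Each coarse cluster groups several fine centroids, so repeating the step yields a deeper hierarchy.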

Document

... – The order of nodes as selected by Prim's algorithm defines a linear representation, L(D), of a data set D. Any contiguous block in L(D) represents a cluster if and only if its elements form a sub-tree of the MST, plus some minor additional conditions (each cluster forms a valley) ...
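
A short sketch of how L(D) can be computed: the standard O(n²) form of Prim's algorithm on Euclidean points, recording the order in which points join the tree. It assumes the tree is grown from `points[0]`; the function name `prim_order` and the `reach` list (the length of the MST edge that attached each point) are illustrative additions. Plotting `reach` along L(D) is one way to look for the "valley" condition: a cluster tends to appear as a contiguous run of small values bounded by larger ones. That reading is our interpretation, not the snippet's exact definition.

```python
import math


def prim_order(points):
    """Return (order, reach): `order` is the sequence in which Prim's algorithm
    adds points to the MST starting from points[0]; reach[i] is the length of
    the edge that attached order[i] (inf for the start point)."""
    n = len(points)
    best = [math.inf] * n  # shortest edge from the current tree to each point
    in_tree = [False] * n
    best[0] = 0.0
    order, reach = [], []
    for step in range(n):
        # Pick the outside point closest to the current tree.
        u = min((i for i in range(n) if not in_tree[i]), key=lambda i: best[i])
        in_tree[u] = True
        order.append(u)
        reach.append(math.inf if step == 0 else best[u])
        # Relax the tree-to-point distances using the newly added point.
        for v in range(n):
            if not in_tree[v]:
                best[v] = min(best[v], math.dist(points[u], points[v]))
    return order, reach


order, reach = prim_order([(0,), (1,), (10,), (11,), (2,)])
print(order)  # [0, 1, 4, 2, 3]
print(reach)  # [inf, 1.0, 1.0, 8.0, 1.0]
```

Here the blocks {0, 1, 4} and {2, 3} are contiguous in L(D) and separated by the single large value 8.0.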

cluster - Computer Science, Stony Brook University

... in two. This is done by placing the selected pair into different groups and using them as seed points. All other objects in this group are examined, and are placed into the new group with the closest seed point. The procedure then returns to Step 1. • If the distance between the selected objects is ...
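
The splitting step described here can be sketched as follows. The snippet elides how the pair is selected and what stopping test is applied, so this sketch ASSUMES the selected pair is the two most distant objects in the group and leaves the stopping rule out. The name `split_group` is hypothetical.

```python
import math
from itertools import combinations


def split_group(group):
    """Split one group of points in two around a pair of seed points.

    ASSUMPTION: the seeds are the two most distant objects in the group.
    Every other object joins the seed it is closest to (ties go to the first).
    """
    i, j = max(
        combinations(range(len(group)), 2),
        key=lambda ij: math.dist(group[ij[0]], group[ij[1]]),
    )
    seed_a, seed_b = group[i], group[j]
    left, right = [seed_a], [seed_b]
    for k, x in enumerate(group):
        if k in (i, j):
            continue  # seeds are already placed
        if math.dist(x, seed_a) <= math.dist(x, seed_b):
            left.append(x)
        else:
            right.append(x)
    return left, right


left, right = split_group([(0, 0), (1, 0), (9, 0), (10, 0), (4, 0)])
print(left)   # [(0, 0), (1, 0), (4, 0)]
print(right)  # [(10, 0), (9, 0)]
```

Applying `split_group` again to each half gives the repeated top-down division that "returns to Step 1" suggests.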

Title Goes Here - Binus Repository

... – In successive steps, look for the closest pair of points (p, q) such that one point (p) is in the current tree but the other (q) is not – Add q to the tree and put an edge between p and q ...
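
A literal Python rendering of these two steps, assuming Euclidean points and a tree grown from `points[0]`: each step scans every (p, q) pair with p inside the tree and q outside, adds the closest q, and records the edge. The name `prim_edges` is illustrative. Scanning all pairs costs O(n³) overall; the usual O(n²) variant instead keeps, for each outside point, its shortest distance to the tree.

```python
import math


def prim_edges(points):
    """Grow a minimum spanning tree from points[0]: at each step find the
    closest pair (p, q) with p in the tree and q outside it, add q, and
    record the edge (p, q) as a pair of indices."""
    in_tree = {0}
    edges = []
    while len(in_tree) < len(points):
        p, q = min(
            ((p, q) for p in in_tree for q in range(len(points)) if q not in in_tree),
            key=lambda pq: math.dist(points[pq[0]], points[pq[1]]),
        )
        in_tree.add(q)
        edges.append((p, q))
    return edges


print(prim_edges([(0, 0), (3, 0), (3, 4), (10, 0)]))  # [(0, 1), (1, 2), (1, 3)]
```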

Data Mining Project Part II: Clustering and Classification

... Part II: Clustering and Classification. Task 1: Cluster your data. Description: The goal of this task is to choose a clustering algorithm, implement it, and then test it on a real dataset from http://archive.ics.uci.edu/ml/. You can choose from the following two topics, or choose your own topic: 1) Topi ...

Nearest-neighbor chain algorithm

In the theory of cluster analysis, the nearest-neighbor chain algorithm is a method that can be used to perform several types of agglomerative hierarchical clustering, using an amount of memory that is linear in the number of points to be clustered and an amount of time linear in the number of distinct distances between pairs of points. The main idea of the algorithm is to find pairs of clusters to merge by following paths in the nearest neighbor graph of the clusters until the paths terminate in pairs of mutual nearest neighbors. The algorithm was developed and implemented in 1982 by J. P. Benzécri and J. Juan, based on earlier methods that constructed hierarchical clusterings using mutual nearest neighbor pairs without taking advantage of nearest neighbor chains.
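
A compact sketch of the chain-following idea, assuming complete linkage (one of the linkages with the reducibility property the algorithm relies on) and a precomputed symmetric distance matrix. The function name `nn_chain` and the merge format are illustrative choices. Because this sketch stores the whole matrix, it uses quadratic memory; the linear-memory bound described above requires computing cluster distances on the fly.

```python
def nn_chain(dist):
    """Complete-linkage agglomerative clustering via nearest-neighbor chains.

    dist: symmetric n x n matrix (list of lists). Returns a list of merges
    (cluster_a, cluster_b, height), clusters given as frozensets of indices.
    """
    n = len(dist)
    members = {i: frozenset([i]) for i in range(n)}  # active clusters
    d = {i: {j: dist[i][j] for j in range(n) if j != i} for i in range(n)}
    next_id, chain, merges = n, [], []
    while len(members) > 1:
        if not chain:  # start a new chain from any active cluster
            chain.append(next(iter(members)))
        a = chain[-1]
        prev = chain[-2] if len(chain) > 1 else None
        # Nearest neighbor of a; on ties prefer the previous chain element,
        # so the chain is guaranteed to end in a mutual-nearest-neighbor pair.
        b = min(d[a], key=lambda c: (d[a][c], c != prev))
        if b != prev:
            chain.append(b)  # extend the chain and keep walking
            continue
        # a and b are mutual nearest neighbors: merge them.
        chain.pop()
        chain.pop()
        ma, mb = members.pop(a), members.pop(b)
        merges.append((ma, mb, d[a][b]))
        new = next_id
        next_id += 1
        members[new] = ma | mb
        d[new] = {}
        for c in members:
            if c == new:
                continue
            # Complete linkage: distance to the union is the larger distance.
            d[new][c] = d[c][new] = max(d[a][c], d[b][c])
            del d[c][a], d[c][b]
        del d[a], d[b]
    return merges


pts = [0, 1, 5, 6, 20]
merges = nn_chain([[abs(x - y) for y in pts] for x in pts])
print([h for _, _, h in merges])  # [1, 1, 6, 20]
```

Reducibility is what lets the remaining chain stay valid after a merge, so the walk resumes from the chain's new top instead of starting over.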