examples of Markov chains, irreducibility and

... The proof has three steps: 1. Show that the transition matrix p must have at least one eigenvector q with eigenvalue equal to 1. 2. Show that any such eigenvector q of p must have coordinates which are either all positive or all negative. 3. Show that the space of eigenvectors for eigenvalue 1 is one-dimensional ...
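A small numerical illustration of the eigenvector with eigenvalue 1 may help. The sketch below assumes a row-stochastic convention (rows of p sum to 1, and q satisfies q p = q), which the excerpt does not state, and uses a hypothetical 3-state chain. It approximates q by power iteration, which only converges when the chain is also aperiodic.

```python
def stationary_distribution(p, iterations=1000):
    """Approximate the row vector q with q p = q by repeated multiplication."""
    n = len(p)
    q = [1.0 / n] * n  # start from the uniform distribution
    for _ in range(iterations):
        # One step of q <- q p (row vector times matrix).
        q = [sum(q[i] * p[i][j] for i in range(n)) for j in range(n)]
    return q


# Hypothetical irreducible, aperiodic chain; each row sums to 1.
p = [
    [0.5, 0.5, 0.0],
    [0.25, 0.5, 0.25],
    [0.0, 0.5, 0.5],
]
q = stationary_distribution(p)
print(q)
```

For this chain all coordinates of q come out strictly positive, consistent with step 2 of the proof outline.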

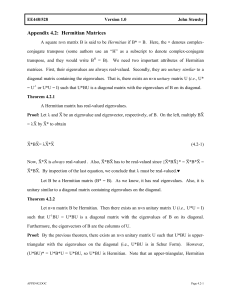

Appendix 4.2: Hermitian Matrices

... A square n×n matrix B is said to be Hermitian if B* = B. Here, the * denotes complex-conjugate transpose (some authors use an “H” as a superscript to denote complex-conjugate transpose, and they would write $B^H = B$). We need two important attributes of Hermitian matrices. First, their eigenvalues are al ...
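The definition B* = B can be checked directly on a small example. The sketch below uses a hypothetical 2×2 matrix and the closed-form roots of its characteristic polynomial; it relies on the standard fact that Hermitian matrices have real eigenvalues. The helper names are illustrative only.

```python
import cmath


def conjugate_transpose(m):
    """Return the complex-conjugate transpose of a matrix given as nested lists."""
    return [[m[j][i].conjugate() for j in range(len(m))] for i in range(len(m[0]))]


def eigenvalues_2x2(m):
    """Roots of the characteristic polynomial x^2 - trace*x + det of a 2x2 matrix."""
    (a, b), (c, d) = m
    trace = a + d
    det = a * d - b * c
    root = cmath.sqrt(trace * trace - 4 * det)
    return (trace - root) / 2, (trace + root) / 2


# Hypothetical Hermitian matrix: real diagonal, conjugate off-diagonal entries.
B = [[2 + 0j, 1 - 1j], [1 + 1j, 3 + 0j]]
is_hermitian = conjugate_transpose(B) == B
low, high = eigenvalues_2x2(B)
print(is_hermitian, low, high)
```

Both eigenvalues have zero imaginary part here, even though B itself has complex entries.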

Uniqueness of the row reduced echelon form.

... equivalent, then the systems AX = 0 and BX = 0 are equivalent in the sense of Theorem 2.1 and thus have the same solutions. This makes it interesting to try to row reduce the matrix to as simple a form as possible. The first step in this is: a matrix is row reduced iff the first nonzero element in any ...
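To make the uniqueness claim concrete, the following sketch reduces two row-equivalent matrices and compares the results. It is a plain Gauss-Jordan elimination over exact fractions, not the construction used in the excerpt's source, and the matrices A and B are hypothetical.

```python
from fractions import Fraction


def rref(matrix):
    """Return the reduced row echelon form using exact rational arithmetic."""
    rows = [[Fraction(x) for x in row] for row in matrix]
    n_rows, n_cols = len(rows), len(rows[0])
    pivot_row = 0
    for col in range(n_cols):
        # Find a row at or below pivot_row with a nonzero entry in this column.
        pivot = next((r for r in range(pivot_row, n_rows) if rows[r][col] != 0), None)
        if pivot is None:
            continue
        rows[pivot_row], rows[pivot] = rows[pivot], rows[pivot_row]
        lead = rows[pivot_row][col]
        rows[pivot_row] = [x / lead for x in rows[pivot_row]]  # leading entry becomes 1
        for r in range(n_rows):
            if r != pivot_row and rows[r][col] != 0:
                factor = rows[r][col]
                rows[r] = [x - factor * y for x, y in zip(rows[r], rows[pivot_row])]
        pivot_row += 1
        if pivot_row == n_rows:
            break
    return rows


A = [[1, 2, 3], [2, 4, 6], [1, 1, 1]]
# B is built from A by row operations: swap rows, scale, and add multiples.
B = [[1, 1, 1], [3, 6, 9], [3, 5, 7]]
print(rref(A) == rref(B))
```

Because A and B are row equivalent, both calls return the same matrix, and the systems AX = 0 and BX = 0 have the same solutions.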

AMS 147 Computational Methods and Applications Lecture 17

... [Draw two nearly perpendicular lines to show a well-conditioned system] [Draw two nearly parallel lines to show an ill-conditioned system] ...
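The two pictures can be mirrored numerically. The sketch below assumes the infinity-norm condition number, cond(A) = ||A|| · ||A⁻¹||, as the measure of conditioning (the excerpt does not say which norm the lecture uses) and compares two hypothetical 2×2 systems: one whose lines are perpendicular and one whose lines are nearly parallel.

```python
def inf_norm(m):
    """Matrix infinity norm: the largest absolute row sum."""
    return max(sum(abs(x) for x in row) for row in m)


def inverse_2x2(m):
    """Explicit inverse of a 2x2 matrix."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]


def condition_number(m):
    return inf_norm(m) * inf_norm(inverse_2x2(m))


perpendicular = [[1.0, 1.0], [1.0, -1.0]]      # lines x + y = c1 and x - y = c2
nearly_parallel = [[1.0, 1.0], [1.0, 1.0001]]  # lines with almost the same slope

cond_good = condition_number(perpendicular)
cond_bad = condition_number(nearly_parallel)
print(cond_good, cond_bad)
```

The nearly parallel system has a condition number several orders of magnitude larger, so small changes in the right-hand side can move the intersection point a long way.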

Chapter 4 Powerpoint - Catawba County Schools

... The additive identity matrix for the set of all m x n matrices is the zero matrix 0, or $O_{m \times n}$, whose elements are all zeros. The opposite, or additive inverse, of an m x n matrix A is -A. -A is the m x n matrix with elements that are the opposites of the corresponding elements of A. ...

Definition - WordPress.com

... If the augmented matrix [A: b] in row echelon form or in reduced row echelon form has a zero row, the system of linear equations is linearly dependent. Otherwise it is linearly independent. ...

Chapter 9 The Transitive Closure, All Pairs Shortest Paths

... R is computed in $\lg(n-1) + 1$ matrix multiplications. Each multiplication requires $O(n^3)$ operations, so R can be computed in $O(n^3 \lg n)$. 9.6.1 Kronrod's Algorithm: It is used to multiply boolean matrices, C = A x B. For example suppose: The A matrix row determines which rows of B are to be unioned to pr ...
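The logarithmic multiplication count comes from repeated squaring. The sketch below is one way to do that under stated assumptions: it computes the reflexive transitive closure of a hypothetical directed graph by squaring the boolean matrix (I OR A) until paths of up to n - 1 edges are covered. It uses the naive cubic boolean product, not Kronrod's algorithm, and its exact multiplication count may differ from the formula in the excerpt.

```python
def bool_matmul(a, b):
    """Boolean matrix product: AND for multiplication, OR for addition."""
    n = len(a)
    return [
        [any(a[i][k] and b[k][j] for k in range(n)) for j in range(n)]
        for i in range(n)
    ]


def reflexive_transitive_closure(adj):
    """Reachability matrix obtained by repeatedly squaring (I OR A)."""
    n = len(adj)
    # Adding the identity lets each squaring double the covered path length.
    r = [[bool(adj[i][j]) or i == j for j in range(n)] for i in range(n)]
    length = 1  # r currently covers paths of at most `length` edges
    while length < n - 1:
        r = bool_matmul(r, r)
        length *= 2
    return r


# Hypothetical chain graph 0 -> 1 -> 2 -> 3.
adj = [
    [0, 1, 0, 0],
    [0, 0, 1, 0],
    [0, 0, 0, 1],
    [0, 0, 0, 0],
]
R = reflexive_transitive_closure(adj)
print(R[0][3], R[3][0])
```

For this 4-vertex graph two squarings are enough, and vertex 3 is reachable from vertex 0 but not the other way round.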

Lecture 30: Linear transformations and their matrices

... output vectors. We want to find a matrix A so that T(v) = Av, where v and Av get their coordinates from these bases. The first column of A consists of the coefficients $a_{11}, a_{21}, \ldots, a_{m1}$ of $T(v_1) = a_{11} w_1 + a_{21} w_2 + \cdots + a_{m1} w_m$. The entries of column i of the matrix A are determined by T(v_i ...
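The column-by-column construction can be sketched in a few lines. The example below is an illustration chosen here, not taken from the lecture: T is differentiation on polynomials of degree at most 2, with assumed input basis {1, x, x²} and output basis {1, x}. The helper names `matrix_of`, `derivative`, and `apply` are hypothetical.

```python
def matrix_of(transform, n_inputs):
    """Build A column by column: column i holds the coordinates of T(v_i)."""
    columns = []
    for i in range(n_inputs):
        basis_vector = [1 if k == i else 0 for k in range(n_inputs)]
        columns.append(transform(basis_vector))
    m = len(columns[0])
    # Transpose the list of columns into a list of rows.
    return [[columns[j][i] for j in range(n_inputs)] for i in range(m)]


def derivative(coeffs):
    """Map c0 + c1 x + c2 x^2 to its derivative, in coordinates for {1, x}."""
    return [k * coeffs[k] for k in range(1, len(coeffs))]


def apply(a, v):
    """Ordinary matrix-vector product A v."""
    return [sum(a_ij * v_j for a_ij, v_j in zip(row, v)) for row in a]


A = matrix_of(derivative, 3)
v = [5, 3, 4]  # coordinates of 5 + 3x + 4x^2
print(A, apply(A, v), derivative(v))
```

Multiplying A by the coordinate vector of a polynomial gives the same result as applying T directly, which is the defining property T(v) = Av.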

Semidefinite and Second Order Cone Programming Seminar Fall 2012 Lecture 8

... The “largest eigenvalue” is a convex, but in general nonsmooth, function of its argument matrix. The common way of proving this uses the Rayleigh-Ritz quotient characterization of eigenvalues of a symmetric matrix. However, we can prove this, and simultaneously give an SDP representation by observi ...

Revisions in Linear Algebra

... A matrix is a rectangular table of (real or complex) numbers. The order of a matrix gives the number of rows and columns it has. A matrix with m rows and n columns has order m × n. Write down the order of the following matrices: ...

Non-negative matrix factorization

Non-negative matrix factorization (NMF), also called non-negative matrix approximation, is a group of algorithms in multivariate analysis and linear algebra where a matrix V is factorized into (usually) two matrices W and H, with the property that all three matrices have no negative elements. This non-negativity makes the resulting matrices easier to inspect. Also, in applications such as processing of audio spectrograms, non-negativity is inherent to the data being considered. Since the problem is not exactly solvable in general, it is commonly approximated numerically.

NMF finds applications in such fields as computer vision, document clustering, chemometrics, audio signal processing and recommender systems.
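As one illustration of the numerical approximation, the sketch below uses the multiplicative update rules of Lee and Seung for the Frobenius-norm objective, which is only one of several algorithms in this family. It assumes NumPy is available; the function name `nmf`, the small constant `eps`, the iteration count, and the example matrix V are all choices made for this sketch.

```python
import numpy as np


def nmf(V, rank, iterations=200, eps=1e-9, seed=0):
    """Approximate V by W @ H with W, H >= 0 using multiplicative updates."""
    rng = np.random.default_rng(seed)
    n, m = V.shape
    # Random positive starting point; the updates keep all entries non-negative.
    W = rng.random((n, rank)) + eps
    H = rng.random((rank, m)) + eps
    for _ in range(iterations):
        H *= (W.T @ V) / (W.T @ W @ H + eps)  # eps guards against division by zero
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H


# Hypothetical non-negative data matrix.
V = np.array([[1.0, 2.0, 3.0], [2.0, 4.0, 6.0], [1.0, 1.0, 1.0]])
W, H = nmf(V, rank=2)
error = np.linalg.norm(V - W @ H)
print(error)
```

The result depends on the random starting point, and the updates generally reach a local optimum rather than a guaranteed global one.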